Posts posted by Sparc

-

-

But if consumer is too slow, producer will have an empty Q2 when starting to fill, and will have to wait.

If no losses are acceptable and buffers are reused, any mechanism proposed will have this problem. That point is not valid criticism.

If losses are acceptable a "no wait" path is provided by reusing a buffer from Q1.

-

The IMAQ wire is a reference to an image buffer. The data it refers to isn't copied unless you explicitly copy it via the IMAQ Copy function or the IMAQ Get Bytes function, or you wire up the "Dest" terminal on the IMAQ functions.

Scenario 1: If the Consumer must process every item sent by the Producer, the number of buffers must be large enough to hold the backlog. Whether that number is 2 or 3 or 907 only matters when you are deciding on, or constrained by, the platform it's running on.

Scenario 2: If the Consumer is only required to process as many as it can and losses are acceptable, pick a number of buffers that satisfies the acceptable loss criteria. Again the actual number of buffers is irrelevant.

Two Queues. Q1 is Producer to Consumer. Q2 is Consumer to Producer. The queue element is the same in both and can be whatever you want as long as it has an IMAQ reference (buffer) in it.

During initialization Q2 is loaded with as many unique buffers as deemed necessary by the Scenario.

Scenario 1 Implementation:

Producer Dequeues a buffer from Q2. Fills it. Enqueues it to Q1.

Consumer Dequeues from Q1. Processes it. Enqueues it to Q2.

Since Producer is pulling from Q2, there is no chance it will ever overwrite an unprocessed buffer.

Q1 being empty is not a problem. Means consumer is faster than Producer.

Q2 being empty is an error condition that must be addressed at the system level.
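The Scenario 1 steps can be sketched outside LabVIEW as follows. This is a minimal Python stand-in, not IMAQ code: plain `bytearray` objects play the role of image buffers, and `NUM_BUFFERS`, `NUM_FRAMES` and the `None` sentinel are assumptions made for the illustration. Here the Producer simply blocks when Q2 is empty; a real system would decide at the system level how to handle that condition.

```python
import queue
import threading

NUM_BUFFERS = 3   # assumption: sized to hold the worst-case backlog
NUM_FRAMES = 10   # assumption: how many items the producer generates

q1 = queue.Queue()  # Producer -> Consumer (filled buffers)
q2 = queue.Queue()  # Consumer -> Producer (free buffers)

# Initialization: load Q2 with unique buffers.
for _ in range(NUM_BUFFERS):
    q2.put(bytearray(4))

processed = []

def producer():
    for frame in range(NUM_FRAMES):
        buf = q2.get()                        # waits until a free buffer exists
        buf[:] = frame.to_bytes(4, "little")  # "fill" the buffer
        q1.put(buf)
    q1.put(None)                              # hypothetical end-of-data marker

def consumer():
    while True:
        buf = q1.get()                        # Q1 empty just means we wait
        if buf is None:
            break
        processed.append(int.from_bytes(buf, "little"))  # "process" it
        q2.put(buf)                           # hand the buffer back

threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(processed)
```

Every frame arrives in order and all buffers end up back in Q2, because a buffer is only ever owned by one side at a time.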

Scenario 2 Implementation:

Producer Dequeues a buffer from Q2. Fills it. Enqueues it to Q1.

Consumer Dequeues from Q1. Processes it. Enqueues it to Q2.

Since Producer is pulling from Q2, there is no chance it will ever overwrite an unprocessed buffer.

Q1 being empty is not a problem. Means consumer is faster than Producer.

If Q2 is empty, Consumer is backlogged and a loss must occur. Producer Dequeues from Q1.

Since an element can only be Dequeued once, there is no chance the Consumer is processing that buffer and it is safe to overwrite.
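The lossy path can be sketched the same way. This is a single-threaded Python stand-in under stated assumptions: `bytearray` objects replace IMAQ buffers, the helper name `acquire_buffer` is hypothetical, and the Consumer is deliberately held off until the Producer has written five frames into three buffers.

```python
import queue

def acquire_buffer(q1, q2):
    """Return (buffer, lost); lost is True when an unprocessed item was dropped."""
    try:
        return q2.get_nowait(), False      # normal path: a free buffer from Q2
    except queue.Empty:
        # Consumer is backlogged: recycle the oldest unprocessed buffer from Q1.
        return q1.get_nowait(), True

q1, q2 = queue.Queue(), queue.Queue()
for _ in range(3):                         # assumption: three buffers
    q2.put(bytearray(1))

dropped = 0
for frame in range(5):                     # producer runs ahead of the consumer
    buf, lost = acquire_buffer(q1, q2)
    dropped += lost
    buf[0] = frame                         # "fill" the buffer
    q1.put(buf)

received = []
while not q1.empty():                      # consumer finally drains Q1
    buf = q1.get()
    received.append(buf[0])
    q2.put(buf)

print(received, dropped)
```

The two oldest frames are the ones sacrificed, and the Producer never waits.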

-

How immune is an IR beacon to rain?

There's an LED submerged in my cat's water fountain. I had to put the thing together (*some assembly required). That LED lead is literally two wires and some shrink tube.

How immune is an IR beacon to rain?

Hrm... Sparkfun has an RFID reader board for $25 + the longest range reader (180 mm max) for $35. That could be attached to an Arduino with wireless. It's not the sort of thing that will cover a room, but could scan to open a gate.

$25 + $35 + Arduino ($25) is ~$85 per drop. 180 mm is 7 inches (max). DIY vs. OTS seems like a wash to me, with OTS winning for the reasons people buy OTS.
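For anyone checking the numbers, the per-drop arithmetic works out as below. The prices are the ones quoted above; the dictionary keys are just labels for this sketch.

```python
MM_PER_INCH = 25.4

# Prices as quoted in the post (USD).
parts_usd = {"RFID reader board": 25, "long-range reader": 35, "Arduino": 25}

total_usd = sum(parts_usd.values())
range_in = 180 / MM_PER_INCH   # 180 mm maximum read range

print(f"${total_usd} per drop, {range_in:.1f} in max range")
```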

-

Taking a trick from racing events: RFID tags in their shoes and RFID tag scanners on the floor near the doorways of the rooms. Maybe not. After checking, the tags are cheap, the readers not so much. Tags: $1.00. Readers: $1000.00. Dropping to a door-access-style reader (which the guide would have to activate on purpose) brings it to about $100 per door. Of course, once you're at activating something on purpose, a push button in each room is simpler.

Accelerometers? Reset at a known position after each 'lap' of the maze. A non-trivial amount of DIY involved (microcontroller with WiFi + accelerometer + battery + case + WiFi access points) and cost is still about $100 per tracker.

Not automatic, but webcams. Your CIC guys would have to check the webcam feeds to see who is where.

Infrared. Each guide wears an IR beacon and an IR detector in each room can ID the guide. Smaller hardware requirements than the accelerometer idea.

Depending on the Webcam, the IR beacon could assist there too.

-

hot mini desktops

These aren't the Big Box Specials where they advertise some awesome spec (9 GHz Octocore CPU!!!) and then cripple the entire box to reduce cost with some unadvertised component (66 MHz, single channel memory bus)?

I haven't yet had a 32 bit app run worse on a 64 bit machine. I've had them run the same, better, or not at all -- but never worse (at least never so much worse that I noticed).

My hunch is the new computers don't have better hardware in all the right places. For instance the existing PCs might be 4 GHz single core and the new PCs 2 GHz Quad core.

-

VI corruption. The fact a copy/paste of the code works is a strong clue.

I've had many inexplicable problems simply disappear by just copying and pasting entire block diagrams into new VIs. It's almost at the level that the technique is a legitimate debugging step.

To paraphrase a friend: "What? You've never seen LabVIEW do something stupid before?"

-

Does anyone know how to make a driver for such a device using LV only?

Assuming you mean a traditional Windows driver, you can't.

You'll need to use the Windows Driver Kit (formerly the Driver Development Kit), which works with some version of Visual Studio, and write it in C or C++, I forget which.

On the other hand, if you make it with a USB interface and HID compliant you won't need to make a device driver. Windows (and just about every other modern OS) will recognize it and use a built in driver for it.

There are many microcontroller products/projects out there that ship with a USB interface and HID compliant stack.

-

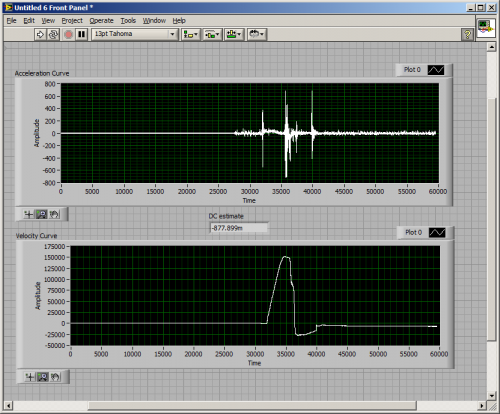

Hi,

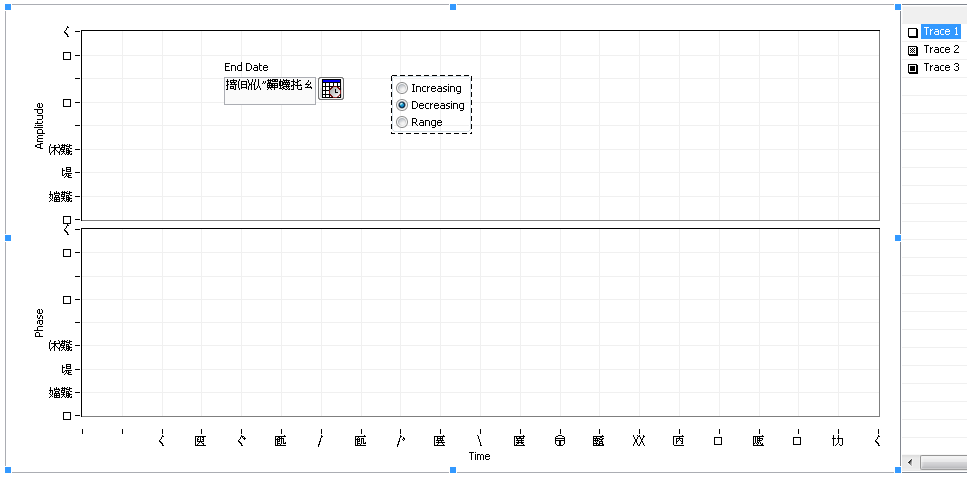

I want to show on a graph some acquired ECG data (Fc=500 sample/s). My wish is to have an X-axis with absolute time starting from a fixed time, ending in "start_time+(number_of_acquired_points/Sample_frequency)" and with the possibility to browse the chart with the X Scrollbar.

I was able to get a correct time axis but not with a Multiplier factor different from 1 (but in this way data is shown too much compressed).

I tried Waveform Graph both for double array and waveform generated starting from the array.

Thanks in advance.

Vincenzo

Does this do what you want?
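For reference, the axis arithmetic from the question can be written out as below. This is a Python sketch of the math only, not of the graph configuration; the start time is a hypothetical value and the function names are made up for the example.

```python
from datetime import datetime, timedelta

SAMPLE_RATE_HZ = 500  # Fc from the question

def time_axis(start_time, num_points, sample_rate_hz=SAMPLE_RATE_HZ):
    """Absolute timestamp of every sample: start_time + i / Fc."""
    return [start_time + timedelta(seconds=i / sample_rate_hz)
            for i in range(num_points)]

def end_time(start_time, num_points, sample_rate_hz=SAMPLE_RATE_HZ):
    """End of the axis: start_time + number_of_acquired_points / Fc."""
    return start_time + timedelta(seconds=num_points / sample_rate_hz)

start = datetime(2010, 1, 1, 12, 0, 0)   # hypothetical fixed start time
axis = time_axis(start, 1500)            # 3 seconds of ECG data

print(axis[0], axis[500], end_time(start, 1500))
```

The spacing between points is 1/Fc = 2 ms, which is the value the X scale multiplier has to carry if the axis is to read in real time.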

-


-

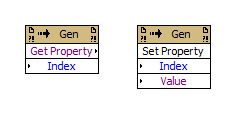

Where did you get them? Scripting Workbench?

Turned 'em on using a super-secret and private key in the LabVIEW INI file.

I just did a search...those methods are not used anywhere (including the Property Pages) in LabVIEW 2010.

I have an educated guess as to their purpose, though. Back when the Property Pages were first added to LabVIEW in version 7.0, there were many properties of VI objects that were not yet exposed in VI Server. As a result, the Get/Set Object methods were added, and hard-coded indices to an internal database of properties were used for getting/setting properties of controls. As the VI Server interface to objects improved, this mechanism was no longer needed, as evidenced by the fact that the Property Pages now (in LabVIEW 2010) rely entirely on VI Server to get/set object properties.

-D

That correlates well with some other "research" I've done. There are a handful of VIs down in <LabVIEW>\resource\PropertyPages\ that look like they'd be used with these properties, but they also appear to be orphans as I can't catch LV ever trying to use them.

Thank you for the insight.

-

The Help description is so tantalizing for these two methods it's almost unbearable: "Property Pages use this for generic get/set of properties." The interface to them is dead simple too: one I32 array, one variant. Sounds pretty ideal for a "Property Saver" type tool. Instead of nested case structures and class coercions you just wire an array and a variant to a Generic class method.

Unfortunately, I can't get them to work. I've tried a wide variety of inputs to these guys and so far I've gotten nothing but 1058 errors: "LabVIEW: Specified property not found." About the only thing I have learned is if the array has more than 13 elements you'll crash LabVIEW.

Searching the usual places has resulted in nothing. But then searching for terms like "get" and "property" turns up many non-relevant results.

Has anyone else had any luck with, or additional knowledge about, these two functions?

-

-

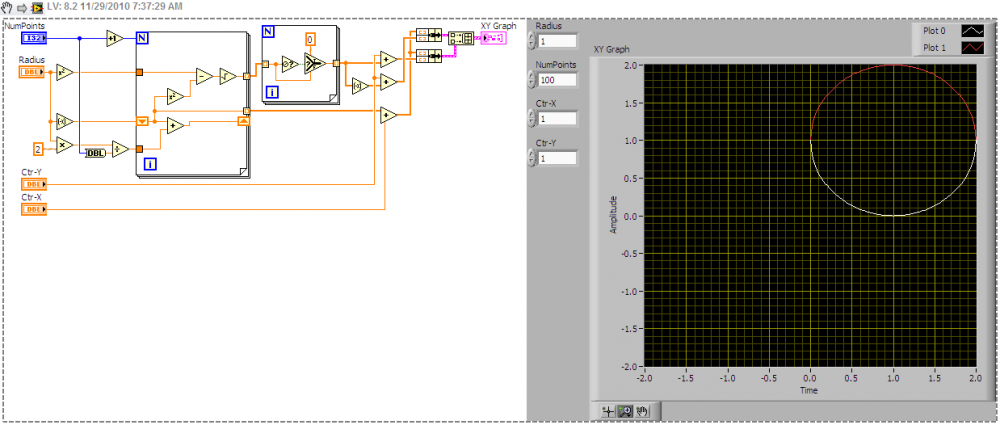

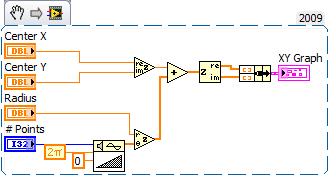

A few times now I've needed to dynamically display and resize some XY graphs. And it never seems to fail that after a few iterations I end up with a chart that resembles this:

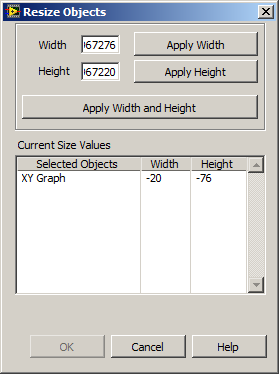

Or worse, I end up with a chart that has a negative size according to LabVIEW:

Once this happens there is no way I have found to manually resize the graph. I have to delete the control and drop a new one, and in the process lose my formatting, local variables, property nodes, event cases, etc. "Replace" gives a new control, but at the exact same (negative) size.

Today I was fed up and looked into why this happens. This is what I learned.

First, you need to know there are two main movable parts to the graph. The first is the whole graph control. This works as you'd expect using the "Position" property. The second is the "plot area" and it is affected by "Plot Area Bounds" and "Plot Area:Size". From the development environment the size of the plot area is constrained by the Graph Control -- you can't make the plot area bigger than the control. When resizing programmatically, the plot area can be sized/moved anywhere in the pane that owns the graph control.

Second, properties "Plot Area Bounds" and "Plot Area:Size" do similar things, but affect the Graph Control differently. "Plot Area:Size" will change the size of the plot area, and it will change the size of the Graph Control. If you use this property to increase the width of the plot area by 10 pixels, the width of the Graph Control will increase by 10 pixels. If you use this property to decrease the width of the plot area by 25 pixels, the width of the Graph Control will decrease by 25 pixels. This will be important later.

"Plot Area Bounds" will change the size and position of the plot area without doing anything to the Graph Control. If you forget "Plot Area Bounds" is referenced to the owning pane and not the Graph Control, it's easy to have your plot area moved to the upper left corner of the window while the rest of your graph control sits in the bottom middle.

The WTF. If you use "Plot Area Bounds" to make the size of the plot area bigger than the Graph Control, say 100 pixels wider, you can now use "Plot Area:Size" to shrink the plot area to 75 pixels wide and get a Graph Control that is -25 pixels wide.
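The arithmetic behind the negative width is easy to see in a toy model. The Python class below is not a LabVIEW API; it only encodes the two behaviours described above (the class name, method names and starting sizes are assumptions for the sketch).

```python
class GraphModel:
    """Toy model of the widths of a Graph Control and its plot area."""

    def __init__(self, control_width, plot_width):
        self.control_width = control_width
        self.plot_width = plot_width

    def set_plot_area_bounds_width(self, new_plot_width):
        # "Plot Area Bounds": the plot area changes, the control is untouched.
        self.plot_width = new_plot_width

    def set_plot_area_size_width(self, new_plot_width):
        # "Plot Area:Size": the control grows or shrinks by the same delta.
        delta = new_plot_width - self.plot_width
        self.plot_width = new_plot_width
        self.control_width += delta


g = GraphModel(control_width=300, plot_width=250)    # hypothetical sizes
g.set_plot_area_bounds_width(g.control_width + 100)  # plot area 100 px wider than control
g.set_plot_area_size_width(75)                       # shrink plot area to 75 px
print(g.control_width)
```

Whatever the starting width of the control, it cancels out: the control ends up at 75 - 100 = -25 pixels.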

The steps to avoid headache:

Simple Move\Resize:

This is the typical use case, you need to resize the graph and you don't care how the plot area is aligned within it.

- Move the graph to the desired position using the "Position" property.

- Resize the plot area using "Plot Area:Size" and the graph control will resize by the same amount.

Resize Graph and resize plot area:

In my case I need to align the plot areas of multiple graphs. Because the Y Axis scales are formatted differently, the plot areas of each graph have slightly different positions.

- Write data to the graph

- Update the scales

- Move the graph to the desired position using the "Position" property.

- Resize the Graph Control indirectly by using "Plot Area:Size" knowing this won't be the final position of the plot area.

- Reposition the plot area using "Plot Area Bounds" recalling its parameters are referenced to the owning pane and not the graph control.

Attached is a VI (LV 2009) that'll demonstrate "Plot Area Bounds" and "Plot Area:Size"

-


-

It's really funny how flexible your brain is. I can (for me) seamlessly switch between Dutch and English. Geen probleem. Sometimes I am reading a text and I have to think about whether it's written in Dutch or not.

Ton

The following will make me push and then push harder a few times before I figure out the problem.

-

After carefully examining the characters that you guys are cursing, I have to say it is not really Chinese. It looks like Chinese, but it is a mess; those characters mean nothing. That may be because you are not running your OS in Chinese or Japanese, which is required in order to see what those characters really mean.

I think that's because it's not translated -- it's just re-interpreted. That is, the underlying binary codes for the ASCII text "Boolean:Value Change" have stayed the same, but they are now interpreted as Unicode characters. It's the reverse of what happens when you open a Unicode 'text' file in a non-Unicode-aware text editor: it displays 'English' characters but it looks like random typing.
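A quick way to see the effect outside LabVIEW is the Python sketch below. It assumes the reinterpretation is ASCII bytes being read as UTF-16 little-endian, which is a guess; the exact encoding LabVIEW ended up using is not known here.

```python
text = "Boolean:Value Change"

raw = text.encode("ascii")          # the bytes never change...
garbled = raw.decode("utf-16-le")   # ...only how they are interpreted

# Each pair of ASCII bytes collapses into one 16-bit character, and most of
# those land in the CJK Unified Ideographs block (U+4E00..U+9FFF).
cjk_count = sum(0x4E00 <= ord(ch) <= 0x9FFF for ch in garbled)

print(len(text), len(garbled), cjk_count)
print(garbled)

# Reversing the interpretation recovers the original text untouched.
assert garbled.encode("utf-16-le").decode("ascii") == text
```

Twenty ASCII characters become ten wide characters, nearly all of which look Chinese but carry no meaning.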

But there are definitely some problems with the libraries in LabVIEW. Could some plugin installed on your computer have triggered all the characters to jump out of LabVIEW? It makes LabVIEW think you are speaking the character language.

It's a plain install of LV 2009 Pro with nothing else, not even NI DAQ.

The sub-vis in the project appear to be unaffected.

-

Transferred the project to a new machine (Fresh installs of Windows 7 and LV 2009). It is still speaking in tongues.

-

Sadly, reboots, wild configuration changes and audible cursing have not changed anything. The problem persists.

-

It wasn't in LV 2009, but there was another thread about a problem like this. Looks like the "fix" was to restart LabVIEW and build it again.

For me it's both the development environment and the exe. LV has been restarted several times. And the EXE built several times. It persists.

I'll do a proper full PC restart after some file transfers complete.

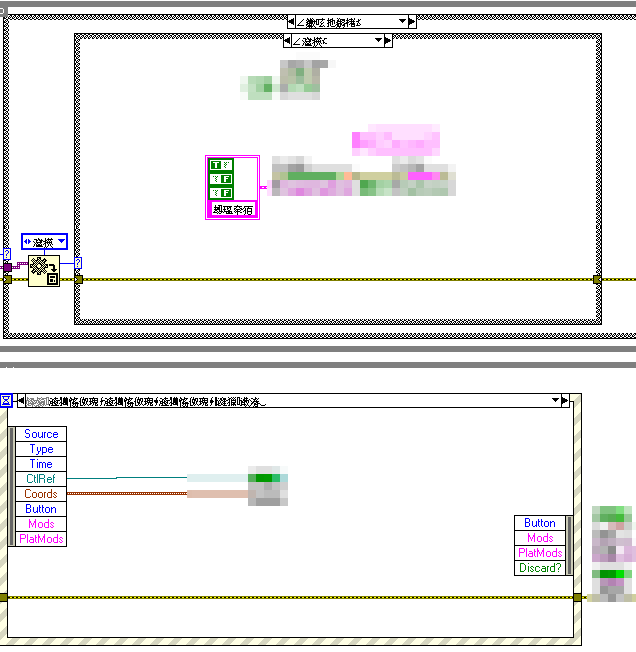

In the mean time, enjoy my block diagram.

-

Given that the following VI is made in an English version of LabVIEW on an English version of Windows (Vista) and has never sought to be international, can someone tell me how this happens and how to fix it?

All scales and Timestamp controls on my Front Panel are in Chinese (I think it's Chinese). All numbers, labels and enum text on my block diagram were Chinese for a while too, but somehow that has been sorted.

This happened after I created an EXE Build Specification in my project and merely clicked on the "Runtime Languages" option. The config page took a while to load and after it did, bang!, Chinese (I think I saw the VIs flicker in the background, but Vista and LV seem to do this all the time anyway). I did not change any settings on the Runtime Languages screen. I was just clicking through the pages to ensure I hadn't missed any important settings.

Since then I have modified the RunTime Languages options to Support only English, but my Front Panel is still confused.

Any ideas?

-

I have DAQmx 8.6.

I think that must be the problem.

Thank you for your help.

-

-

I'd prefer to do this with just LabVIEW but the NationalInstruments.DAQmx .NET assembly has this:

DaqSystem.Local.LoadDevice("<device name>").GetTerminals()

Which returns the information I'm looking for.

Anybody have anything better?

[Edit: Add following error information]

Great. Guess what happens when you try to use GetTerminals() twice?

Error 1172 occurred at Error calling method

NationalInstruments.DAQmx.Device.GetTerminals

of ObjectId handle: 0x759114C

for obj 0x2086D77[Device]

in domain [LabVIEW Domain for Run]

and thread 3176,

(System.Reflection.TargetInvocationException: Exception has been thrown by the target of an invocation.

Inner Exception: System.InvalidOperationException:

You may only create one instance of a type of Query List once during the lifetime of your application.

) in Device Terminals using dotNet.vi

Works fine the first time. The second time it throws this error. It's great if you only have one device. Not so great if you have more than one (as even changing the Device name doesn't help).

VI attached in LV 8.5.

-

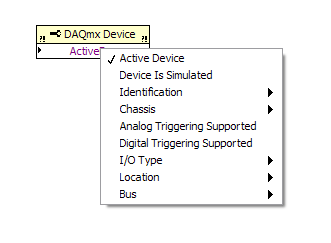

If you are setting up a task in NI MAX, on the "Triggering" tab, if you select "Digital Edge" from "Trigger Type", a new control will appear on the right and let you select from a list of choices like "/Dev1/PFI1".

How can you programmatically get a list of these values?

I know that list is of type "DAQmx Terminal" from the "I/O -> DAQmx Name Controls" LabVIEW palette. It's also known as "NI Terminal" in the floating help window.

The "DAQmx Terminal" control does not have a property node for this, nor does it have an invoke node. The DAQmx function palette has property nodes for channels and physical channels, but not one for terminals.

I know it can be done in LV. The question is can mere mortals do it. I submit "\Program Files\National Instruments\MAX\Assistants\DAQ Assistant\plugIns\triggering.llb\DAQmxAssistant_subGetAvailableTriggerSources.vi" as evidence. Too bad this VI won't open in any version of LabVIEW I have (7.1, 8.0, 8.2.1, 8.5).

Thanks for your help.

-

QUOTE(orko @ Jul 13 2007, 09:30 AM)

However, looking at this NI article (http://zone.ni.com/reference/en-XX/help/371361B-01/lvhowto/tabbing_through_elements/) it appears that you can use keyboard shortcuts to navigate your way through the array elements. Since Michael has so graciously provided a way to simulate keypresses, one way to work around this would be to catch when a user is on the last element of the cluster in each row and send the appropriate keypresses to navigate to the next row.

Thanks, Orko. I had moved forward with simulating a mouse click on the next element in the array. Talk about a hack . . . This is actually much cleaner.

Limiting width of numeric string

in LabVIEW General

Posted

Compare the magnitude of the number and then choose between %f and %_f. At three digits, any value between -0.001 and 0.001 would get %f (fixed precision); everything else gets %_f (significant digits). I may be off by a zero, but the premise remains.
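The selection logic can be sketched in Python as below. This is a stand-in for the idea, not LabVIEW format-string syntax: the function name `format_limited` is hypothetical, and the significant-digits branch is emulated by computing how many decimals to print.

```python
import math

def format_limited(x, digits=3):
    """Fixed precision for tiny values, significant digits for everything else."""
    threshold = 10.0 ** -digits
    if abs(x) < threshold:
        # Tiny values: fixed precision, so they print as 0.000 rather than
        # as a long string of leading zeros.
        return f"{x:.{digits}f}"
    # Everything else: keep `digits` significant digits by working out how
    # many decimals that leaves after the leading digit.
    exponent = math.floor(math.log10(abs(x)))
    decimals = max(digits - 1 - exponent, 0)
    return f"{x:.{decimals}f}"

for value in (123.456, 12.3456, 1.23456, 0.012345, 0.0004):
    print(value, "->", format_limited(value))
```

Values that round up across a power of ten (for example 9.996) can still come out one digit wider, so treat this as an illustration of the branching rather than a complete formatter.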