PA-Paul

Posts posted by PA-Paul

-

Hi, I'm trying to install the OpenG Zip package via VIPM, but when it gets to the LVZIP readme page, the "Yes, install this library" button remains greyed out no matter how many times I scroll to the top or bottom of the dialog... It worked fine on a different computer, but on mine I cannot get past this (and it's a tad frustrating!).

I'm on LabVIEW 2021.0.1f2, VIPM 2023.1

I've tried uninstalling the old version and making sure it's not installed in any other LabVIEW version, but I get the same result in every version of LabVIEW... Hoping someone can help!

Thanks

Paul

-

Thanks for the feedback, that's helpful.

Related question (convolution!) - where we have a git repo for each "module", suppose we then need to consider a repo of "data type libraries" (there could be more than one to allow for groupings of different data types...).

It all just feels unnecessarily complex somehow, but I guess that's the problem with dependency management: to keep the dependencies between modules "simple" and reduce coupling, it seems you need relatively complex strategies for managing the dependencies! Anyone have a feel for whether that's a general thing among other languages, or is it particularly challenging in LabVIEW?

Thanks again!

-

Hi All,

I'm writing (what I hope is nice) modular code, trying to modularise functions and UIs etc. across the applications we're developing to keep things nice and flexible. But I'm struggling to keep individual modules "uncoupled" - or rather, not all interdependent on each other.

Example - I have a module for communicating with an oscilloscope to capture waveforms. I create a typedef in that module for passing out those waveforms and related information to another module, which sends them on to a storage module and a waveform processing module (where I do various things to the raw waveform) and then finally out to a waveform display module...

So my question becomes "who should own the waveform data typedef?" The easiest thing to do is make sure the input side of any module's API accepts the output of the API producing the data. So if the typedef sits in the acquisition module library at the "source" of the data, then assuming I don't keep translating to different data types, all of my other modules end up dependent back to there too - e.g. my waveform processing and display modules now depend on the acquisition module. But I might want to write a post-processing and display application that needs to know how to process waveforms, and I don't want to have to include the waveform acquisition module with that, as it won't be acquiring anything...

I'm sure there are lots of options, and this post is really to try and find some I haven't thought of yet!

I know I could create the typedef in its own library or even outside of any library, then the users of that typedef are not dependent on each other (I think that's basically a form of dependency inversion?). But then that gets hard to manage and leads to the idea of a "common data types" library, which can quickly grow to include lots of other things and, in a way, more types of coupling.
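To make the "typedef in its own library" option concrete, here is a minimal sketch in Python rather than LabVIEW. All names (`Waveform`, `acquire_waveform`, `peak_to_peak`) are hypothetical, and the three commented sections stand in for three separate libraries; the point is only that acquisition and processing both depend on the small types library and not on each other.

```python
from dataclasses import dataclass
from typing import List


# --- "waveform_types" library (hypothetical): owns the data type only ---
@dataclass(frozen=True)
class Waveform:
    t0: float             # start time in seconds
    dt: float             # sample interval in seconds
    samples: List[float]  # raw sample values
    channel: str = ""     # optional source label


# --- "acquisition" library: depends on waveform_types only ---
def acquire_waveform(channel: str) -> Waveform:
    # Stand-in for a real oscilloscope read; returns fixed dummy data.
    return Waveform(t0=0.0, dt=1e-9, samples=[0.0, 1.0, 0.0, -1.0], channel=channel)


# --- "processing" library: depends on waveform_types only ---
def peak_to_peak(w: Waveform) -> float:
    return max(w.samples) - min(w.samples)


# A post-processing application can build a Waveform from stored data
# without ever loading the acquisition code.
stored = Waveform(t0=0.0, dt=1e-9, samples=[0.2, 0.9, -0.4])
print(peak_to_peak(stored))
print(peak_to_peak(acquire_waveform("CH1")))
```

The trade-off described above still applies: the shared types library stays manageable only if it is kept to plain data definitions and not allowed to collect behaviour.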

I could translate the typedef as it passes through a chain of modules so that each module defines the way it expects to receive the data, and then a tree type module hierarchy limits the coupling... but that always feels somewhat inefficient - and I want one waveform data type, not 4 depending on where I am in the application.

My situation is marginally complicated as well since we're using PPLs and some of my data types are now classes, so they need to be inside a PPL somewhere and then used from there (and it's a pain that we can't build a packed class without first wrapping it in a project library...)

So yes - how do you all manage your typedefs and classes that get used across module boundaries to minimise those dependency issues!?

Thanks in advance, sorry for the slightly rambly post!

-


As a developer using open source code, I want to be able to inspect and run the unit tests/verification steps etc that were used to prove the code works as intended before release.

-


So, does this sound like a sensible way to evaluate which option (expressing the X values for my interpolation in Hz or MHz) provides the "best" interpolation:

Generate two arrays containing e.g. 3 cycles of a sine wave. One data set is generated with N samples, the other with 200xN samples.

Generate two arrays of X data, N samples long, one running from 0 - N and the other running from 0 to N E6

Generate two arrays of Xi data - 200xN samples long, again with one running from 0-N and the other from 0 to N E6

Perform the interpolation once for each X scaling, using the N-sample sine wave as the Y data in each case together with the corresponding X and Xi data sets.

Calculate the average difference between the interpolated data sets and the 200xN sample sine wave. If one option is better than the other, it should have a lower average (absolute) difference, right?

I'll code it up and post it shortly, but thought I'd see if anyone thinks that's a valid approach to evaluating this!
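The steps above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the LabVIEW Hermite interpolation VI: the tangents here are simple finite differences, the fine grid uses 200 x (N - 1) + 1 points so that it spans exactly the same range as the coarse X data, and `hermite_interp` and `mean_abs_error` are hypothetical helper names.

```python
import math
from bisect import bisect_right


def hermite_interp(x, y, xi):
    """Piecewise cubic Hermite interpolation with finite-difference tangents."""
    n = len(x)
    m = [0.0] * n
    m[0] = (y[1] - y[0]) / (x[1] - x[0])          # one-sided at the ends
    m[-1] = (y[-1] - y[-2]) / (x[-1] - x[-2])
    for i in range(1, n - 1):                     # central differences inside
        m[i] = (y[i + 1] - y[i - 1]) / (x[i + 1] - x[i - 1])
    out = []
    for v in xi:
        k = min(max(bisect_right(x, v) - 1, 0), n - 2)  # interval containing v
        h = x[k + 1] - x[k]
        t = (v - x[k]) / h                        # normalised position in interval
        h00 = 2 * t**3 - 3 * t**2 + 1
        h10 = t**3 - 2 * t**2 + t
        h01 = -2 * t**3 + 3 * t**2
        h11 = t**3 - t**2
        out.append(h00 * y[k] + h10 * h * m[k] + h01 * y[k + 1] + h11 * h * m[k + 1])
    return out


def mean_abs_error(dx, n=64, factor=200, cycles=3):
    """Interpolate an n-sample sine onto a grid `factor` times finer.

    dx is the coarse X spacing (1.0 for "MHz", 1e6 for "Hz"). The reference
    sine is computed from the sample index, so it is identical for both.
    """
    count = factor * (n - 1) + 1
    x = [i * dx for i in range(n)]
    xi = [j * (dx / factor) for j in range(count)]
    y = [math.sin(2 * math.pi * cycles * i / (n - 1)) for i in range(n)]
    ref = [math.sin(2 * math.pi * cycles * j / (count - 1)) for j in range(count)]
    yi = hermite_interp(x, y, xi)
    return sum(abs(a - b) for a, b in zip(yi, ref)) / count, yi


err_mhz, yi_mhz = mean_abs_error(1.0)   # X expressed in MHz
err_hz, yi_hz = mean_abs_error(1e6)     # X expressed in Hz
max_diff = max(abs(a - b) for a, b in zip(yi_mhz, yi_hz))
print(f"mean |error| MHz: {err_mhz:.6e}")
print(f"mean |error| Hz:  {err_hz:.6e}")
print(f"max |MHz - Hz| difference: {max_diff:.3e}")
```

With this particular implementation the interpolation error against the reference is far larger than any difference between the two scalings, so a comparison of mean errors alone may not separate them; looking at the point-by-point difference between the two outputs is likely to be more informative.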

Thanks

Paul

-

Thought I posted this the other day, but apparently didn't. I agree it's likely a precision/floating point thing. The issue I have is how it tracks through everything. The interpolation is used to make the frequency interval in our frequency response data (for our receiver) match the frequency interval in the spectrum (FFT) of the time domain waveform acquired with the receiver. We do that so we can deconvolve the measured signal for the response of the device. We're writing a new, improved (and tested) version of our original algorithm and wanted to compare the outputs of each. In the new one we keep frequency in Hz; in the old one, in MHz. When you run the deconvolution from each version on the same waveform and frequency response, you get the data below: the top graph is the deconvolved frequency response from the new code, the middle from the old code, and the bottom is the difference between the two...

It's the structure in the difference data that concerns me most - it's not huge, but it's not small and it appears to grow with increasing frequency. Took me a while to track down the source, but it is the interpolation. If we convert our frequency (x data) to MHz in the new version of the code, the structure vanishes and the average difference between the two is orders of magnitude smaller.

And it's there that I'd like to know which is the more correct spectrum?! The old or the new?

Any thoughts?

Paul

-

Hi All,

This may be a maths question, or a computer science question... or both.

We have a device frequency response data set, which is measured at discrete frequency intervals, typically 1 MHz. For one particular application, we need to interpolate that down to smaller discrete intervals, e.g. 50 kHz. We've found that the cubic Hermite interpolation works pretty well for us in this application. Whilst doing some testing of our application, I came across an issue which is arguably negligible, but I'd like to understand the origins and the ideal solution if possible.

So - if I interpolate my data set with my X values in MHz and create my "xi" data set in MHz with a spacing of 0.05, I get a different result from the interpolation VI than I do if I scale my X data to Hz and create my xi array with a spacing of 50,000. The difference is small (very small), but why is it there in the first place? I assume it comes from some kind of floating point precision issue in the interpolation algorithm... but is there a way to identify which of the two options is "better" (i.e. should I keep my x data as frequency in Hz and just scale to MHz for display purposes when needed, or should I keep it in MHz)? Ideally there should be no difference - in both cases I'm asking the interpolation algorithm to interpolate "by the same amount" (can't think of the right terminology there to say we're going to 1/20th of the original increment in both cases).
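One possible contributor can be shown without any interpolation at all. In double precision, 50,000 and its integer multiples are represented exactly, whereas 0.05 is not, so a grid built as `i * 0.05` is not always bit-identical to the Hz grid divided by 1e6. The short Python sketch below (variable names are illustrative only) lists the grid points where the two differ; whether this fully explains the behaviour of the LabVIEW VI is an assumption.

```python
step_mhz = 0.05      # not exactly representable in binary floating point
step_hz = 50_000.0   # exactly representable
n_points = 21        # covers 0 to 1 MHz

xi_mhz = [i * step_mhz for i in range(n_points)]
xi_hz = [i * step_hz for i in range(n_points)]
xi_hz_as_mhz = [v / 1e6 for v in xi_hz]   # exact Hz grid, then one rounded division

# Collect the indices where the two "identical" grids are not bit-identical.
mismatches = [
    (i, a, b) for i, (a, b) in enumerate(zip(xi_mhz, xi_hz_as_mhz)) if a != b
]
for i, a, b in mismatches:
    print(f"i={i}: {a!r} vs {b!r} (diff {a - b:.3e})")
```

The differences are at the level of one unit in the last place, which is consistent with a "very small" discrepancy in the interpolated output; it does not by itself say which scaling is closer to the true value.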

Attached is a representative example of the issue (In LV 2016)...

Thanks in advance for any thoughts or comments on this!

Paul

-

Unfortunately, even with debug enabled you can't probe the class wire (well, actually you can, but all you get is the class name, not the private data). At least that is the case on VIs within the PPL: if you try to probe the class wire on the BD of a VI within the packed library, all you get is the name of the class. I'm pretty sure the same is true in the calling code once the class wire has "been through" a VI from the PPL.

Paul

-

Not sure where this question best fits, it could have gone in application design and architecture (as I'm using the PPL based plugin architecture), or possibly OOP as I'm using OOP...

Anyway, I have made an interface class for my plugin architecture, put it in a library (.lvlib) and then packed that library (to PPL - .lvlibp) for use in my application. My question is relatively simple, why, when I look at the class within the packed library, is there no sign of the "class.ctl" (i.e. the class private data control)?

Related - is the lack of class private data control within the PPL the reason I can't probe my class wire in my application built against the PPL? (I can probe it and see the private data values if I code against the original unpacked class).

Any thoughts or insights would be gratefully received!

Paul

-

Thanks Tim,

What I find strange is that on a fresh Windows 7 install, the installer built with LV tells me I first need to install .NET 4.6.1 and then does it. Why can't it do something similar in Windows 10 and alert me to the fact that .NET installs are needed and do those for me!

Ho hum, another reason (aside from DAQmx 16 not being compatible with LV2012) to think about migrating up to a newer version!

Thanks again

Paul

-

Hi All,

I have an application built in labview 2012 which we have been distributing fine with an installer built in labview 2012 for some time. Recently, a colleague tried to install the application onto a windows 10 machine and although the installation process seemed to go smoothly, for some reason, one of the two exe's distributed by the installer gives me the ever so helpful "This VI is not executable. Full development version is required. This VI is not executable. Full development version is required. This VI is not executable. Full development version is required. This VI is not executable. Full development version is required. This VI is not executable. Full development version is required. This VI is not executable. Full development version is required. ..... (you get the picture!)" error.

As an experiment I set up two clean virtual machines, one running windows 7 SP1, one running windows 10. I ran the same installer on both. In windows 7, I got a prompt saying that .NET framework 4.6.1 needed to be installed first, and that happened automatically, and after that the installer continued and everything worked fine (including the exe in question). On windows 10 I got no such warning. The installer ran through and the exe failed with the error message described above.

On the Win10 VM, I then went in to "Turn Windows features on or off" and although there's a tick in the ".NET framework 4.6 advanced features", there's no specific sign of 4.6.1. As a test, I ticked .NET framework 3.5 (includes .NET 2.0 and 3.0) and installed that. That made my .exe run fine....

So.... is there any way to force the LV installer to prompt for/install the necessary .NET "stuff" when running in Windows 10? Anything else I can do to automate things so that our customers don't need to manually add .NET support for the program to run if they're using Windows 10?

Thanks in advance!

Paul

-

Background:

Precision Acoustics Ltd have been at the forefront of the design, development and manufacture of ultrasonic measurement equipment for over 25 years.

The company specialises in the research and development of ultrasonic test equipment used extensively in the QA of medical devices, to provide industry with ultrasonic Non Destructive Examination (NDE) and within academia and national measurement institutes throughout the world.

Brief:

Together with Bournemouth University we are looking for a Software Engineer to work on a joint project focused on the development of a new software platform (and associated output) to control acoustic and ultrasound measurement instrumentation.

For the successful candidate, this is an exciting opportunity to develop the skills required to plan, manage and execute a significant development project, working alongside and learning from industry experts in LabVIEW development and Agile software development. There is also a significant personal development budget available along with the opportunity to undertake a project-focused Masters in Research (MRes) at the University. Whilst the majority of KTP candidates remain with the industrial partner after the project, the skills acquired would be widely applicable and highly desirable in future employment opportunities.

The project will seek to develop a platform and agile development process that has the hallmarks of a well-engineered software system, including all documentation and verification outputs. The solution will ideally integrate existing software developed by the company from internal development, balancing the need for re-use against the need for a best-practice architecture. This must be undertaken in a way that supports the short, medium and long term business operations of the company.

For more information and application process visit the Bournemouth university website: https://www1.bournemouth.ac.uk/software-engineer-ktp-associate-fixed-term

-

As a company we've been using LabVIEW for a while to support various products, and now we're going to try to improve our work by taking a more collaborative approach to rolling out some new versions. But we're not traditionally a "software company", so we are starting to look at how we can do this from a "process" point of view. I was just wondering if anyone here has any particular hints/tips or advice for situations where team members may be working on the same bits of code, how to avoid conflicts etc. Pointers to any useful literature or resources that may give some insight into this area would also be gratefully received!

For info, we're planning to use Mercurial for source code control, but haven't previously gone as far as integrating SCC into LabVIEW (we've previously used SVN, and managed commits and checkouts through TortoiseSVN, but never used locking or similar as in general people weren't working on the same bits of code). Not sure whether we would integrate it this time either, but will consider it if it is genuinely useful.

Thanks in advance.

Paul

-

New job opening for a graduate computer scientist for a software architect role on a "Knowledge Transfer Partnership" between Precision Acoustics Ltd (www.acoustics.co.uk) and Bournemouth University in the UK. Looking for someone with LabVIEW experience.

Job advert and details here: http://www.bournemouth.ac.uk/jobs/vacancies/technical/advert/fst102.html

Salary will be £26,000.00 per annum

Precision Acoustics require a dedicated IT Software Architect to join their team on a specialised project.

Precision Acoustics manufactures ultrasonic measurement products for medical and NDT industries. Based in the south of England in Dorchester, Precision Acoustics is owned by its Managing Director and two of the Research Scientists. The company was established in its present form in 1997 and is well established as a major supplier of equipment for the MHz ultrasound markets on a world-wide basis.

Your role will be to drive forward the deployment of the new software architecture, to enable the company to transition to a new and improved way of working. In order to do that, you will need to influence effectively, communicate through a variety of media, and persuade and motivate staff to adopt the new software and associated processes. It is essential that you are experienced in working with LabVIEW.

You will bring or develop capability for best-practice requirements engineering, software engineering, software architecture/design (using National Instruments LabVIEW), and software performance. You will also work with the Software Systems Research Centre at Bournemouth University to disseminate research challenges to the academic staff community. This may include authorship of conference and/or journal papers.

Your role comes with a large development budget and includes the opportunity to undertake a project-focused fully-funded Masters in Research (MRes) degree at Bournemouth University.

This is an 18 month fixed term appointment and could lead to the offer of permanent employment.

-

Thanks for the answers. Had a suggestion from someone else which I'll try - he has a small Dell XPS 13, but sets the resolution down to 1600x900 and then runs at 100% scaling instead of going for full resolution and scaling up... I don't think it sounds like the ideal solution, but I'll give it a try.

Thanks

Paul

-

Hi All,

Wasn't overly sure where to ask this... I'm having issues related to screen resolution, but mostly to do with block diagram behaviour rather than front panel object sizing, which comes up regularly!

Anyway, I have some code which I wrote on my PC (windows 7.1) at the office - screen resolution is 1680x1050. When I look at the BD on that PC, things line up nicely and look generally OK. However, when I open the same code on my MS Surface Pro 3 (Windows 8.1, screen res 2160x1440, with scaling set to 125% in the control panel display options), it seems that different things on the block diagram are scaled differently and so things don't line up any more. Particularly painful are things like unbundle by name, where the font and unbundle structure seem to have scaled slightly differently, so unbundles/bundles that were aligned with other BD objects are no longer aligned and things look really messy.

Has anyone else come across these types of issue? I'm not sure if it's a Windows 7 vs 8 thing, or specifically down to the Windows scaling being set to cope with the small high-res display on the Surface... I'm looking for any suggestions on things I can try/settings hidden away somewhere that might help make moving between the two systems actually possible (at the moment, I'm just not coding on the Surface because I can't cope with the unreadability and don't want to waste time aligning stuff all over the place!)

I should have prepped an example with pics to better show what I mean - I'll try and do something with that tomorrow when I have access to both machines next to each other!

Thanks in advance!

Paul

-

Does anyone know if there's a way to get the value of a position along a slide control based on mouse co-ordinates?

For example, the waveform graph has an invoke node which will "Map Coords to XY". I'm after similar functionality for a slide control so that I can prevent unwanted clicks in a certain region of the slider (i.e. I have a 2-slider control and want to either filter out any clicks that fall outside of the region between the sliders, or switch the active slider to be the one closest to the click before the click is processed).

I put a slightly more detailed (complicated?) description over on NI.com (http://bit.ly/1l1iJr6) but haven't had a response yet, just wondered if anyone on here might have some ideas?

I have managed a bit of a workaround by cobbling together the co-ordinate of the mouse with some bounding box info on the scale and housing of the control and prior knowledge of the scale range, but I just thought that, since under the hood LabVIEW is doing what I want, is there a way I can access it?!
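For reference, the arithmetic behind that kind of workaround is a linear map from pixel position to scale value. The Python sketch below assumes a horizontal slide with a linear scale; `left_px`, `right_px` and both function names are hypothetical, and in LabVIEW the pixel limits would have to come from the control's bounds properties.

```python
def pixel_to_value(px, left_px, right_px, vmin, vmax):
    """Map a mouse x-coordinate to a slide value on a linear scale.

    left_px/right_px are the pixel positions of the scale minimum and maximum;
    clicks outside that span are clamped to the ends of the scale.
    """
    frac = (px - left_px) / (right_px - left_px)
    frac = min(max(frac, 0.0), 1.0)
    return vmin + frac * (vmax - vmin)


def nearest_slider(value, slider_values):
    """Return the index of the slider whose current value is closest to `value`."""
    return min(range(len(slider_values)), key=lambda i: abs(slider_values[i] - value))


def click_is_between(value, slider_values):
    """True if the clicked value falls between the lowest and highest slider."""
    return min(slider_values) <= value <= max(slider_values)


clicked = pixel_to_value(150, left_px=100, right_px=200, vmin=0.0, vmax=10.0)
print(clicked, nearest_slider(clicked, [2.0, 9.0]), click_is_between(clicked, [2.0, 9.0]))
```

A logarithmic or inverted scale would need a different mapping, so this only covers the simplest case.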

Thanks

Paul

-

Thanks for the link, feels a bit kludgy to me, I think I will just aim to not allow the VIs to be stopped from within the panel!

Is it considered good practice to remove a VI from a subpanel before putting something else in? It seems you don't need to, but would be good know the best way!

Thanks

Paul

-

Thanks for that - I'm not sure at this point if I will need to allow the child VI to stop itself in the end application. In all honesty, I think probably not except that I may want to be able to run the children as standalone VIs as well, in which case I'll need to be able to close them cleanly.

Is there a way to find out whether a VI has been called dynamically? E.g. is there a property I could query inside a VI to see if it was launched dynamically by another VI? I guess a simple way would be to have a connector pane boolean for static/dynamic and wire the relevant constant into the connector pane in the start async call so the VI knows if it was run standalone.... if it's run standalone, I can display a stop button, if not, I can hide it....

Still seems strange that the vi running state doesn't get changed even when the VI isn't running any more...

Thanks again!

-

Hmmm.... I missed that one to be honest - Thanks!

That said, I just tried it, and strangely it doesn't seem to work... even when I press the "stop" button to stop the dynamic VIs, the state still returns "running" - what am I missing?!

Attached is an example of how I'm trying to do things, including the new idea of checking the execution state.

Comments more than welcome!

The other strange thing is that I was trying something similar the other day using a "not a refnum" comparison. In that example, I was thinking more about the underlying hardware control "engines" for this application, and having a persistent engine VI for (for example) motion control, which was effectively a QSM, and then a set of API VIs to call that. The initialise VI used the refnum idea to see if the refnum it had in a shift register was valid; if not, it dynamically called the engine VI so that other VIs could then send messages via the queue. That worked fine, and I could see that the engine VI was exiting when I sent an "exit" to the queue and running when I ran the initialise VI. The difference here is the use of the subpanel; I wonder if that somehow makes the system think it's still running?

Any thoughts as I say, most welcome!

Thanks!

-

Hi All,

I'm designing the architecture for a new application. I'm looking at keeping things modular and breaking down the functionality of the system into modules (that can ultimately be re-used). For the UI, I was planning to use a subpanel control and load the modules into that when needed. I haven't used subpanels much in the past (we've always ended up going with a tab control, but it makes the interface less reusable and less modular as all the user events for each "module" are in the same diagram).

Anyways, I'm having a little play and running into a small problem - how can I tell if a VI that I've called dynamically is still running? I'd half expected there to be a property for it from the same reference used to run the VI in the first instance (I'm using the start async call to run the VI and then I place it in the subpanel). I'm quite happy that I can switch between VIs once they've been called initially, but I only want to try to call them if they've not previously been called, OR if they have been called but stopped (not sure this will actually come up as I'm still planning, but I think it could!).

I tried putting the VI reference into a shift register and checking for a valid refnum, but when I stop the dynamic VI (using a simple stop button on an event-driven while loop within the dynamic VI for testing purposes), my shift register reference remains valid (or at least, the "not a number/refnum" VI returns F, indicating it's still OK). Interestingly, this method "does" work if I restart my calling VI after pressing stop on the dynamic VI!
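The distinction being run into here (a handle that is still valid versus a task that is still running) exists in most languages. As a loose analogy only, the Python sketch below keeps one handle per module and relaunches only when the task has never been started or has since stopped; `ModuleLauncher` and `ensure_running` are hypothetical names, and threads stand in for asynchronously called VIs.

```python
import threading


class ModuleLauncher:
    """Keeps one handle per module and relaunches only if it is not running."""

    def __init__(self):
        self._threads = {}

    def ensure_running(self, name, target):
        """Start `target` under `name` unless it is already running.

        Returns True if a new instance was launched, False otherwise.
        """
        existing = self._threads.get(name)
        # A stored handle stays "valid" after the task ends, so validity alone
        # is not enough; the running state has to be asked for explicitly.
        if existing is not None and existing.is_alive():
            return False
        thread = threading.Thread(target=target, daemon=True)
        self._threads[name] = thread
        thread.start()
        return True

    def wait_for(self, name):
        """Block until the named module has finished."""
        self._threads[name].join()


stop = threading.Event()
launcher = ModuleLauncher()
first = launcher.ensure_running("scope_ui", stop.wait)    # launched
second = launcher.ensure_running("scope_ui", stop.wait)   # already running
stop.set()                                                # ask the module to stop
launcher.wait_for("scope_ui")
third = launcher.ensure_running("scope_ui", stop.wait)    # stopped, so relaunched
print(first, second, third)
```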

Any thoughts or suggestions would be most welcome. I'll try to put a sensible demo of what I've been trying together so people can comment if possible!

Cheers

Paul

-

Hi All,

Posted this yesterday on ni.com (http://forums.ni.com/t5/LabVIEW/Problems-calling-a-dll-with-the-net-constructor-node-from-a-vi/td-p/2282712) as I couldn't get in here for some reason. Not had a response yet so thought I'd throw it here... I also note my formatting got messed up on ni.com...

For info, I'm using Labview 2012f3 and windows 7.

I have a hardware control application which we wrote to be nominally device independent. We did that by writing our own device drivers (in LabVIEW) which are distributed as .llb files, with the top level VI within the llb being the main "driver" (the top level VI is basically an action engine that can then be called by the main application). This model has worked well for us with drivers which are written natively in LabVIEW, typically using VISA to communicate with the various bits of hardware.

We now need to add support for some 3rd party hardware which has a driver written with .NET calls to dlls and the like. I have successfully written the driver in as much as it works fine when used standalone from within its own LabVIEW project. However, when I build my distributable llb version of the driver, it gives an error when run (note that the top level VI is executable, it's not reporting as broken).

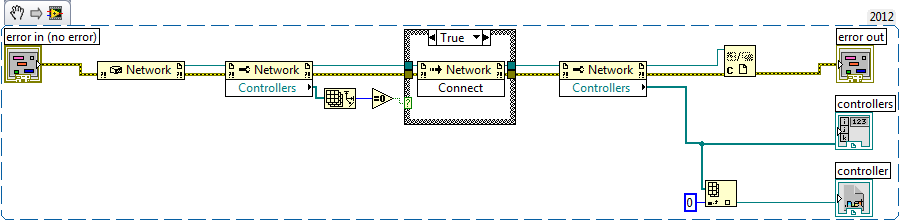

The error is a generic "error 4" at the first property node that accesses something from the dll. In the attached file, the error occurs after the first property node labelled "Network" (reading the "Controllers" property).

Although the constructor node itself is not generating an error when run, I had a closer look at it and when I click "Select constructor", the correct assembly is listed in the assembly drop down. But within the "Objects" list, all I have is the message: "an error occurred trying to load this assembly". I tried browsing for the assembly, but that doesn't change anything. The .dll is placed in a "data" folder which is in the same folder as the llb file. But even if I put the dll in the same directory as the llb, I still have the same problem.

I did manage to get the assembly to appear to load quasi-correctly in the "select .net constructor" dialogue by first browsing to a related .dll (a "common" dll provided by the 3rd party), but when I then selected the "network" object from the list, all refnum wires to the right of the property node in my attachment break and the VI becomes broken and can't run at all.

As I say, it works fine from within a project environment, but not when loaded directly out of the .llb. Any suggestions (either when called by the main app, or even when loaded into LabVIEW from the llb)?

Ultimately I need this to be able to work with a built executable calling the top level file in the llb, (otherwise there'll be a huge amount of work involved in changing the way the drivers are loaded I suspect).

Thanks in advance!

-

Liang - that didn't seem to work. The osk came up fine, but when I ran the osk kill VI, I saw a cmd window pop up and then disappear - but the osk stayed on the screen... I'll try it with a couple of other things to see if it will work.

Thanks

Paul

Just tried taskkill on the osk within a cmd window and it doesn't like it for some reason (access is denied!) but it does work on other applications, so it should work for what I want to do.

Thanks

Paul

-

Hi All,

I have a bit of an annoying problem. I've written an application which acts as a remote server and controls some external kit. A client PC can connect to the server and then remotely operate said external kit.

In general, it works fine. But it appears that there's an instability of some kind with the dll supplied by the external kit manufacturer which causes my application to intermittently fall over and die (with a nice Windows "This program is no longer working" error message). When this happens the client can no longer communicate with the server since it's obviously fallen over and died! For various reasons I don't think the manufacturer will be solving the dll issue, which leaves me somewhat stuck.

However, I had a thought, which was to split my application into two sections - one which deals with incoming and outgoing communications from the client and then passes them on to the second application, which controls the kit. Then if the "kit controller" falls over, the communications part can detect that (as it won't reply any more) and then restart the application. To do that, I need to be able to programmatically kill the "kit controller" application, clear out the Windows error and then re-launch it before continuing. In the meantime I don't lose communications since that part of the system is kept separate...
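The supervising half of that split can be sketched outside LabVIEW. The Python example below is a minimal illustration, not a drop-in solution: `run_with_restarts` is a hypothetical helper, the overall timeout is a crude stand-in for "the kit controller has stopped replying" (a real server would need a heartbeat message instead), and it does not dismiss the Windows error dialog. The demo child is a tiny script that deliberately exits with an error on its first launch.

```python
import os
import subprocess
import sys
import tempfile


def run_with_restarts(cmd, max_restarts=3, hang_timeout_s=30.0):
    """Run cmd until it exits cleanly; kill and relaunch it if it crashes or hangs.

    Returns the number of restarts that were needed.
    """
    restarts = 0
    while True:
        proc = subprocess.Popen(cmd)
        try:
            exit_code = proc.wait(timeout=hang_timeout_s)
        except subprocess.TimeoutExpired:
            proc.kill()          # unresponsive: terminate it forcibly
            proc.wait()
            exit_code = None
        if exit_code == 0:
            return restarts
        if restarts >= max_restarts:
            raise RuntimeError(f"gave up after {restarts} restarts")
        restarts += 1


# Demo child: "crashes" (exit code 1) the first time, succeeds the second time.
CHILD = (
    "import os, sys\n"
    "marker = sys.argv[1]\n"
    "if os.path.exists(marker):\n"
    "    sys.exit(0)\n"
    "open(marker, 'w').close()\n"
    "sys.exit(1)\n"
)

with tempfile.TemporaryDirectory() as tmp:
    marker = os.path.join(tmp, "crashed_once")
    restarts = run_with_restarts([sys.executable, "-c", CHILD, marker])

print(f"child needed {restarts} restart(s)")
```

Keeping the communications side in the parent process means a crash in the child only interrupts hardware control, which is the separation described above.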

Anyone got any thoughts on whether I can actually do this using labview? If not, any alternatives?

Thanks in advance!

Paul

OpenG LabVIEW Zip 5.0.0-1 - stuck at the readme

in OpenG General Discussions

Posted

Thanks Rolf, glad to hear it's on your radar already.

It's not holding me up in the end as I'm not actively developing anything using the toolkit and fortunately it did work in the place I need it most (the build server!)... but I'll keep my eyes open for the update so I can get it on the dev machines too!

Thanks

Paul