JackHamilton

Posts posted by JackHamilton

-

Be cautious about wiring the Error In cluster "always" as a rule. Most functions will NOT execute when the input error is TRUE. This can make error recovery fail: when closing a reference, for example, the Close will NOT execute if the input error = TRUE.

In general I do NOT wire the error inputs of Enqueue operations, Init Ports, Close references...
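LabVIEW diagrams can't be pasted as text, so here is a rough Python model of the point above. The `typical_vi` and `failing_vi` helpers and the error tuple are invented for illustration; they only mimic the usual "skip the work when error in is TRUE" convention and are not any real LabVIEW API.

```python
NO_ERROR = (False, 0, "")  # (status, code, source), loosely modelled on the error cluster


def typical_vi(name, error_in, executed):
    """Hypothetical stand-in for a VI that follows the usual convention:
    do nothing and pass the error through when error_in is already set."""
    if error_in[0]:
        return error_in
    executed.append(name)
    return NO_ERROR


def failing_vi(name, error_in, executed):
    """Hypothetical VI that runs and then reports an error of its own."""
    if error_in[0]:
        return error_in
    executed.append(name)
    return (True, 42, name)


# Error wired straight through: the close is skipped, so the reference stays open.
wired = []
err = typical_vi("open", NO_ERROR, wired)
err = failing_vi("read", err, wired)
err = typical_vi("close", err, wired)

# Close NOT wired to the upstream error: it still runs, and the read error
# is kept separately so it can be reported afterwards.
unwired = []
err2 = typical_vi("open", NO_ERROR, unwired)
read_err = failing_vi("read", err2, unwired)
close_err = typical_vi("close", NO_ERROR, unwired)

print(wired)    # ['open', 'read']
print(unwired)  # ['open', 'read', 'close']
```

The read error is still available in `read_err`, so nothing is hidden; it just doesn't block the cleanup.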

-

Dave,

You should NOT have to put a wait between GPIB functions. The GPIB serial poll is the way to poll the instrument for the MAV (message available) bit; you should then perform a GPIB read if the serial poll reports that bit 64 is ON.

You need to look at the documentation for the Keithley instrument and look at the layout of the Serial poll register - one of the bits should flag when the instrument is done executing a command, or has data for you to read.

You don't need a sequence structure - just have the GPIB write, then a small while loop with the GPIB serial poll inside, then the GPIB read outside the loop on the right. The while loop should exit when the MAV bit is set or on an error from the poll function.

This is the fastest way to code this - it will run as fast as the Keithley instrument can perform the operations.
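In text form, the write / poll / read pattern looks roughly like the Python sketch below. `FakeInstrument` is a made-up stand-in so the example runs without hardware, `MAV_MASK` simply uses the value 64 quoted above (check the serial poll register layout in your instrument's manual), and the timeout is my own addition so the loop can't spin forever.

```python
import time

MAV_MASK = 64  # value quoted in the post; confirm against the instrument's status byte layout


class FakeInstrument:
    """Hypothetical instrument: reports 'data ready' after a few polls."""

    def __init__(self, polls_until_ready=3, reply="1.234E+0"):
        self._polls_left = polls_until_ready
        self._reply = reply
        self.poll_count = 0

    def write(self, command):
        self.last_command = command

    def serial_poll(self):
        self.poll_count += 1
        self._polls_left -= 1
        return MAV_MASK if self._polls_left <= 0 else 0

    def read(self):
        return self._reply


def write_poll_read(instr, command, timeout_s=2.0):
    """Write, poll the status byte until the ready bit is set, then read."""
    instr.write(command)
    deadline = time.monotonic() + timeout_s
    while True:
        # An exception raised here ends the loop, like an error from the poll VI.
        status = instr.serial_poll()
        if status & MAV_MASK:
            break
        if time.monotonic() >= deadline:
            raise TimeoutError("instrument never reported data available")
    return instr.read()


meter = FakeInstrument(polls_until_ready=3)
reading = write_poll_read(meter, "READ?")
print(reading, meter.poll_count)
```

No fixed waits anywhere: the loop runs exactly as many polls as the instrument needs.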

Regards

Jack Hamilton

I can help you with coding this if you have problems....

-

You're skipping a couple of subtle details.

1. The array coming from the waveform is U32, and you're passing it to the "byte array to string" function, which accepts U8 - so you're going to lose lots of resolution in your data; it will not even look right.

2. The typecast to string may work, as it will convert anything into a string, but the result will include a lot of non-printable characters, and I am not 100% sure they all go through the serial port. (Simple to test.)

3. If you use the typecast, it's important on the receiving side that the type prototype used to convert the typecast string back has the right numeric representation. Meaning: if the data is U32, then put a U32 numeric constant into the top input. Otherwise it will convert the string incorrectly and you'll have junk data.

Item 3 you can test without the serial port - just cast to string, then recast it back to an array and compare the graphs...
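The same round-trip test can be sketched in Python with the `struct` module. The sample values are made up, and keeping only the low byte for the U8 case is an assumption about how the coercion behaves; either way the detail is gone.

```python
import struct

samples = [0, 1, 255, 256, 70000, 4294967295]  # made-up U32 data

# Equivalent of the typecast to string: raw big-endian U32 bytes.
payload = struct.pack(f">{len(samples)}I", *samples)

# Receiving side with the RIGHT prototype (U32): data comes back intact.
restored = list(struct.unpack(f">{len(payload) // 4}I", payload))

# Receiving side with the WRONG prototype (U16): same bytes, junk values.
wrong = list(struct.unpack(f">{len(payload) // 2}H", payload))

# Squeezing U32 into U8 (assumed here to keep only the low byte) loses resolution.
as_u8 = [value & 0xFF for value in samples]

# Count bytes outside the printable ASCII range, as worried about in item 2.
non_printable = sum(1 for byte in payload if byte < 32 or byte > 126)

print(restored == samples)  # True
print(wrong == samples)     # False
print(as_u8)
print(non_printable, "of", len(payload), "bytes are non-printable")
```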

Good Luck!

-

This bug with tables also occurs with EXEs. Not nice at all. I suppose setting the table, if it's an indicator, to "Disabled" might help.

Regards

Jack Hamilton

-

Reliable TCP/IP is not easy. Go to www.labuseful.com and download "Robust TCP/IP": a very reliable 24/7 TCP/IP connect, disconnect, reconnect VI set. (If I say so myself.)

Regards

Jack Hamilton

jh@Labuseful.com

-

If you're using LV8, make sure you have the 8.1 version of DAQmx - it was recently released and did address the bug in the previous version. However, this only applies if you're not actually trying to access the same hardware resource on the card more than once, especially while it's running.

Jack

-

Randall,

I don't know of ANY good reason to save VIs without the diagrams - LabVIEW cannot update the code to a different version, and it is impossible to recover the diagram.

I would think the problem relates to this.

Regards

Jack Hamilton

-

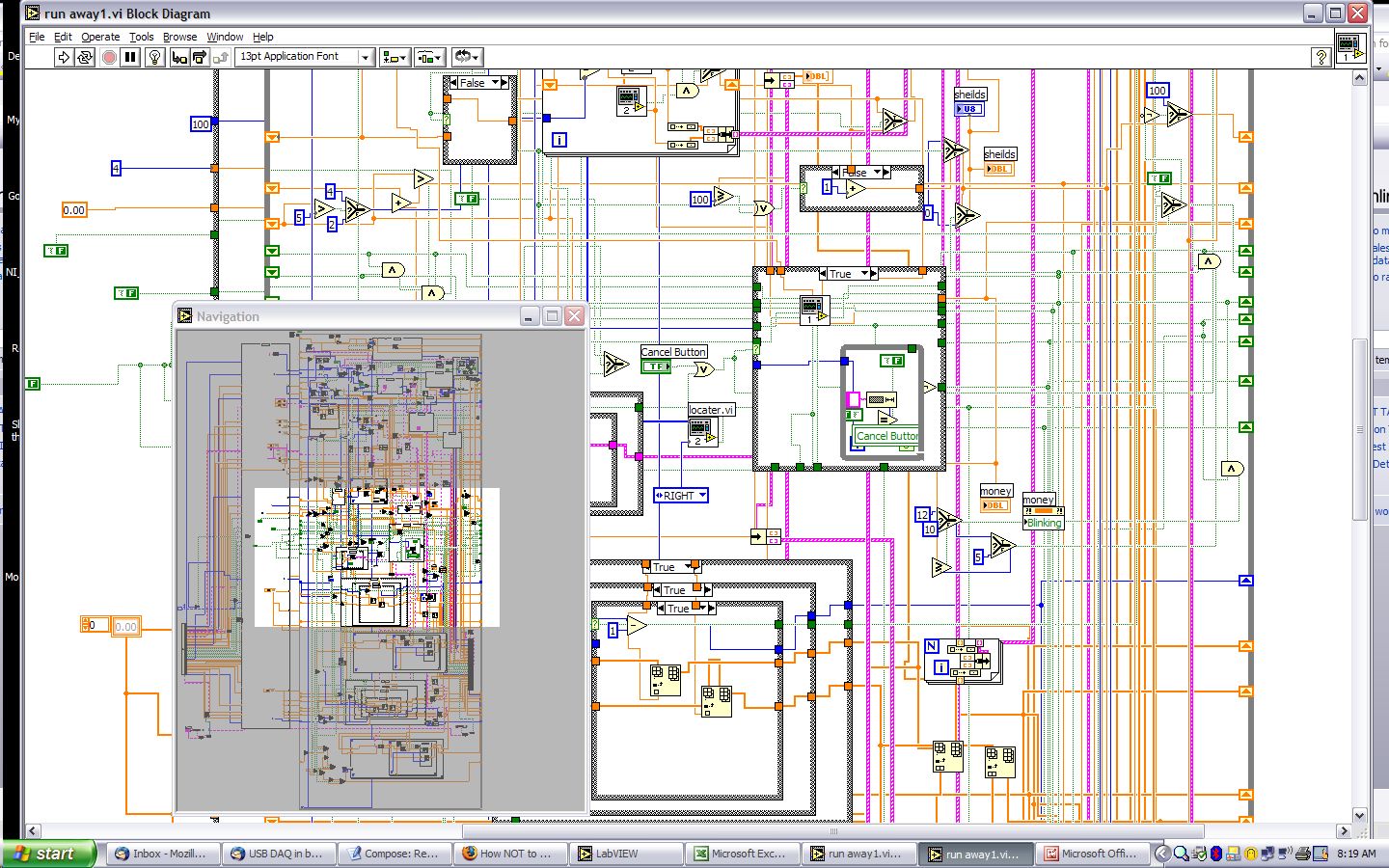

I agree and do not criticize code, and believe me, I see lots of LabVIEW code. I say any code that works is beautiful.

I do take the gloves off when the client is in the difficult situation of thinking a 'few minor tweaks' are all that is needed to add user log-in, a database, etc.

After a few years and more than a few projects consuming 2x the time I quoted to 'refactor' the code, I'm pretty candid about the situation of a bad code platform.

Of course the last person to touch the code 'owns it', so this is another reason for being open about the situation and biting the bullet on a ground-up recode.

Regards

Jack Hamilton

-

Have the drawing saved in DXF or HPGL (plot file) format, which is plain ASCII text - there are routines to open DXF files in LabVIEW.

There is a routine on www.labuseful.com that can open and display HPGL files.

Regards

Jack Hamilton

jh@labuseful.com

-

I would not recommend trying to burn a CD directly from your LabVIEW application. The problems are not technical; the issue is more about making your code unnecessarily complex and more prone to failure.

For example:

1. Burning a CD requires that a blank CD is placed (by a human or trained primate) in the CD Drive.

2. The CD must actually be blank or have space for the data to be stored.

3. The CD could already have data on it so it would be adding data to the existing volume.

4. During the burn - a path or file with the same name could be added - causing a rename/overwrite prompt.

5. For whatever reason the burn could fail.

Just trying to handle the 5 most likely problems associated with burning a CD, you could dedicate quite a bit of time to creating a basic CD burn function with some rudimentary error checking.

It's common for some very nice working acquisition code to quickly snowball into a monstrous web-access, database, report-generating, email-the-user-on-error application, which will likely not do anything well.

Try to draw a line where adding features does not make the application fundamentally better. Adding features to make something easier for the user is not always the best thing for the application. Many times it only increases the chance the code will stop on errors.

Take a look at the code size of a basic CD burning package and consider whether you can do the same in a VI or two. That may be the reason that CIT is charging 500 euros for the driver package. You still have to buy Nero Burning.

I've learned to try not to get caught up in 'fluff' feature enhancements in code. I would really, really need a very good reason to add CD burning into LabVIEW.

Regards

Jack Hamilton

-

The problem with being asked to fix or enhance code like this is best put as an analogy:

You're handed a bizarre birthday cake made by someone who doesn't know how to bake. You don't know if they followed the recipe, used all the ingredients, used more than called for, or added extra ingredients. They quite possibly could have thrown the frosting ingredients in with the batter and baked it all together. You don't know at what temperature or for how long they baked it.

But! You're asked to make another one! :headbang:

I also tell mechanical savvy clients "Working on code like this is like trying to overhaul a car engine that has been welded together"

-

I am seeing a problem with a USB POSX barcode scanner. This device works as a keyboard wedge, sending characters from the barcode scanner into the Windows keyboard message buffer. Firstly, I DO NOT WANT a key-focused string control on the diagram; that's a pretty poor, brute-force implementation and not desirable for my application.

I created a 'background' keystroke/barcode scanner detection using the LabVIEW Input Device routines to access the keyboard. I too am seeing the LV keyboard function return missing or duplicate characters from the barcode scanner. Opening Notepad or Word properly captures the barcode scan with no dups or missing chars. It would be nearly impossible to filter dup chars, as some barcodes actually have repeated chars.

Setting a delay in the keyboard input polling loop seems to improve the problem (I am NOT USING THE EVENT STRUCTURE), but it does not go away. Also, this is not an effective cure, as loop poll timing is affected by CPU loading, other apps running, etc.

:headbang: I am going to chalk this up to a bug in the LabVIEW keyboard routines, as they should not return duplicate characters or drop characters. It would appear this function is not doing any housekeeping: it appears to be destroying unread chars in its buffer, or rereading chars it has already read.

I don't know why a function written this way would be useful or desirable for any application. :thumbdown:
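For what it's worth, here is a toy Python model of how poll timing alone could produce both symptoms. It is purely an assumption that the routine samples "which key is down right now" rather than draining a queue; I have not confirmed that this is how the LabVIEW function works, and the key timings are invented.

```python
def poll_held_key(key_events, poll_period_ms, duration_ms):
    """Sample which key is 'down' every poll_period_ms.

    key_events is a list of (press_ms, release_ms, char) tuples.
    """
    seen = []
    t = 0
    while t < duration_ms:
        for press_ms, release_ms, char in key_events:
            if press_ms <= t < release_ms:
                seen.append(char)
        t += poll_period_ms
    return "".join(seen)


def queued_keys(key_events):
    """A message queue delivers exactly one character per key press."""
    return "".join(char for _, _, char in sorted(key_events))


# Invented timings for a scan of "AB1": each key is held for 4 ms.
scan = [(0, 4, "A"), (5, 9, "B"), (10, 14, "1")]

fast = poll_held_key(scan, poll_period_ms=2, duration_ms=15)  # polls too often
slow = poll_held_key(scan, poll_period_ms=7, duration_ms=15)  # polls too rarely
queued = queued_keys(scan)

print(fast)    # AABB11  (duplicates)
print(slow)    # AB      (dropped character)
print(queued)  # AB1
```

In this model no single loop delay is right for every scan, which would match the delay helping but never curing the problem.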

-

This post is generated from the Info-LabVIEW post query about mouse control.

Here are some VI's that allow mouse control via User32.dll under Windows.

These were not written by me; they come from the hoard of LabVIEW VIs I've collected over the years. If the original authors have issues with this post, please contact me.

Regards

Jack Hamilton

jh@labuseful.com

Download File:post-37-1120065916.vi
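For readers without LabVIEW handy, the same kind of User32.dll call can be sketched from Python with `ctypes`. `SetCursorPos` is a real User32 function; the `move_cursor` wrapper is just an illustrative name and is not taken from the attached VI.

```python
import ctypes
import sys


def move_cursor(x, y):
    """Move the mouse pointer to screen position (x, y).

    Returns True on success, False on failure or when not running on Windows.
    """
    if sys.platform != "win32":
        return False  # User32.dll only exists on Windows
    return bool(ctypes.windll.user32.SetCursorPos(int(x), int(y)))


moved = move_cursor(100, 100)
print("cursor moved" if moved else "cursor not moved")
```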

-

Don't wire the error cluster sometimes!

There are times when you do not want to wire the error cluster in. For example, in state machines we often put the error cluster in a shift register - this allows an ERROR state to read the error from the calling state and handle it. You should then pass a NO ERROR cluster to the shift register, or else your other states will enter with an error and not execute.

Second, I don't wire the error cluster into Queue messaging, as I don't want my messaging to collapse upon a hardware or other error in my code. This gives me a chance to see an error and send an "Initialize" message to the state machine to attempt to recover the hardware.

Some DAQ, IMAQ and other hardware initialization calls should not have the error cluster wired between them. For instance, cancelling/aborting a DAQ operation in progress will generate an error - but who cares. Many times I will put a DAQ or IMAQ STOP or ABORT before a config call to ensure that no operation is in progress when I attempt to configure the hardware. One would naturally want to wire the error cluster across the ABORT - INIT - CONFIG VIs, but an error from the ABORT will cause none of the init VIs to execute.

I've even seen errors when I perform an abort - telling me no operation was in progress to abort! - again who cares.

If you want robust code sometimes the error cluster is not your friend.
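Here is a small Python sketch of that state machine idea. The state names, the error tuple and the deliberately failing first acquire are all invented for the demo; the `error` variable plays the role of the shift register and the deque stands in for the message queue.

```python
from collections import deque

NO_ERROR = (False, 0, "")  # (status, code, source)


def run_state_machine():
    log = []
    error = NO_ERROR                      # plays the role of the shift register
    messages = deque(["INIT", "ACQUIRE", "ACQUIRE", "STOP"])
    acquire_failures_left = 1             # make the first acquire fail, for the demo

    while messages:
        state = messages.popleft()
        if state == "INIT":
            # ABORT first; its error (e.g. "nothing to abort") is deliberately
            # not wired into INIT/CONFIG, otherwise they would never run.
            _ignored = (True, -1, "abort: no operation in progress")
            log.append("init")
            log.append("config")
        elif state == "ACQUIRE":
            if acquire_failures_left > 0:
                acquire_failures_left -= 1
                error = (True, 7, "acquire")
            else:
                log.append("acquire")
        elif state == "ERROR":
            log.append(f"handled error {error[1]} from {error[2]}")
            error = NO_ERROR              # pass NO ERROR back to the shift register
            messages.appendleft("INIT")   # try to recover the hardware
        elif state == "STOP":
            log.append("stop")
            break

        # Messaging is not gated by the error: it only schedules the ERROR state.
        if error[0]:
            messages.appendleft("ERROR")
    return log


history = run_state_machine()
print(history)
```

After the failed acquire the machine handles the error, re-initializes and carries on, instead of every later state refusing to run.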

Regards

Jack Hamilton

-

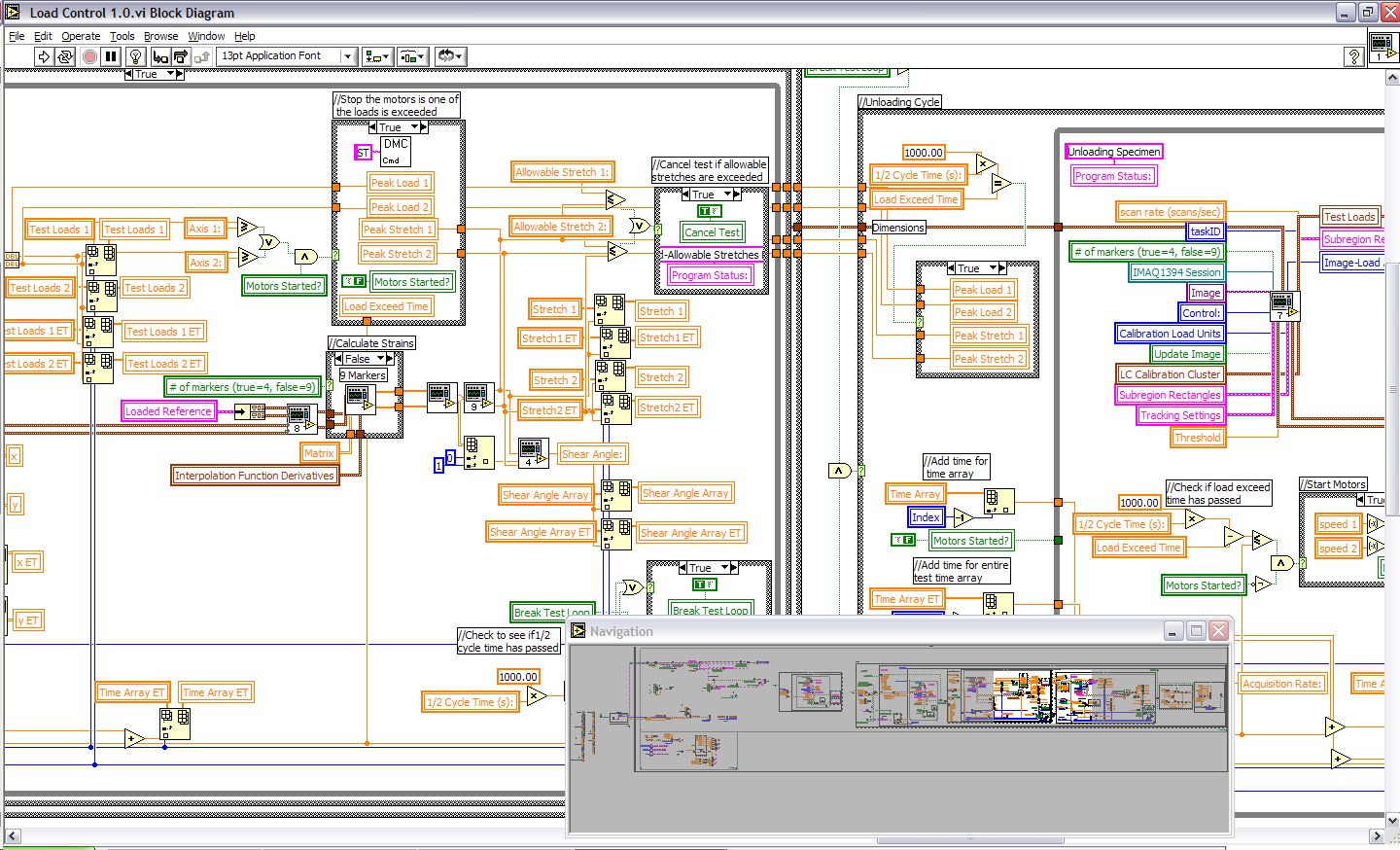

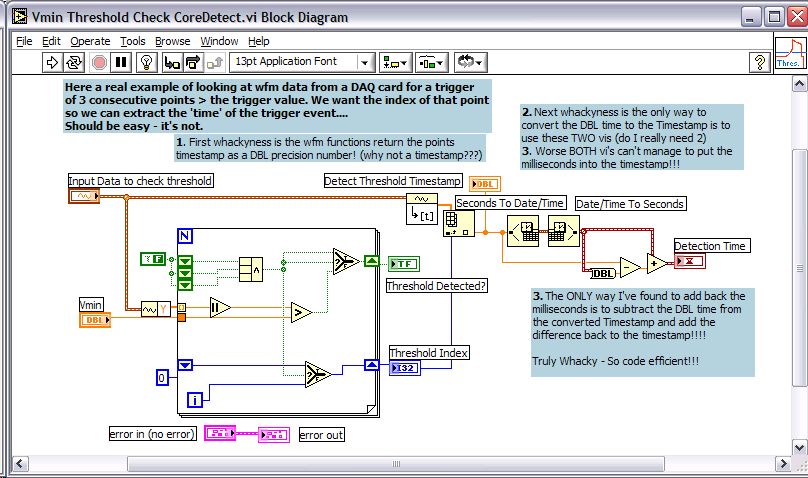

A few more issues on timestamps:

1. Someone posted that the "Waveform Attribute" function is not used to modify the timestamp.

> The Help for it says it does.

2. I missed the "Convert to Timestamp" function because it's clearly in the wrong palette! It should be in the "Time and Dialog" palette. It is located in the "Numeric -> Conversion" palette.

> Anyone who disagrees needs to justify why "Seconds to Date and Time" is in the "Time and Dialog" palette - is it not a numeric conversion?

Regards

Jack Hamilton

-

This paper and sample VI from the NI website is an absolute 10+, a MUST read for all Vision users.

This clever algo adjusts the background contrast of an image to make it uniform. For pattern recognition, OCR or ROI measurements, it's a very powerful tool. It will rein in those troubled images and lighting problems. Because, as we all know, you can't get perfect lighting all the time.

I used this on an OCR problem and, like magic, it fixed the problem.

http://zone.ni.com/devzone/conceptd.nsf/we...6256D8F0050B5E3

Kudos to NI! I'll buy a drink for whoever authored the paper at my next NI Week!

Jack Hamilton

-

Found this API call on the NI Discussion Forums.

Opens the CD Rom Drive door! Add a little fun detail to your applications!

Works even on my Laptop Drive!

Regards

Jack Hamilton

-

There is a VI to do this in OpenG using Windows Internet Explorer.

www.OpenG.org

Be warned: calling a PDF can be quirky. It has worked successfully on my development machine every time.

But then on installed applications - it will NOT work the first time?! (Windows)

I was using this for on-line manuals and help. But ended up taking it out in most distributed applications.

Regards

Jack Hamilton

-

The new Timestamp data format is cute until you start to use it. The problem is that performing any math on the data resolves it to a DBL number.

The crux of the problem is that once it's a DBL, the only method to convert it back to a timestamp leaves off the msec counts. Here is a diagram shot of a typical application and how the problem really makes this a pain...

This clearly shows the timestamp datatype was not thought out completely...
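As a language-neutral illustration of what a lossless round trip would look like, here is a Python sketch that does the math on a floating-point seconds value and converts back while keeping the fractional part. It assumes seconds counted from the LabVIEW epoch of 1 January 1904 UTC; the function names are mine, not LabVIEW's.

```python
import math
from datetime import datetime, timedelta, timezone

LABVIEW_EPOCH = datetime(1904, 1, 1, tzinfo=timezone.utc)


def seconds_to_timestamp(seconds):
    """Convert float seconds since the epoch to a datetime, keeping milliseconds."""
    whole = math.floor(seconds)
    millis = round((seconds - whole) * 1000)
    return LABVIEW_EPOCH + timedelta(seconds=whole, milliseconds=millis)


def timestamp_to_seconds(stamp):
    """Convert a datetime back to float seconds since the epoch."""
    return (stamp - LABVIEW_EPOCH).total_seconds()


start = seconds_to_timestamp(3_000_000_000.25)
# Do some math on the float value (add 1.5 s), then convert back.
shifted = seconds_to_timestamp(timestamp_to_seconds(start) + 1.5)

print(start.isoformat())
print(shifted.isoformat())
```

The point is only that the fractional seconds need to be carried through the conversion explicitly rather than dropped.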

-

Mike

I would, but I burned so much time on that project, 5+ hours on the cursors. I just can't bring myself to go there again!

I did learn that the problem really is with wfm datatypes passed to the graph and the X axis being in time. As we all know, X-axis time plotting has always been wacky.

You can't pass the X position value as an integer; you have to combine the index with the dt value to get the 'time' position on the X axis.
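Assuming the usual evenly sampled waveform (a start time t0 and a fixed dt between samples), the index/time conversion can be sketched like this in Python. The function names are made up for the example.

```python
def sample_time(t0, dt, index):
    """X-axis (time) position of the sample at a given index."""
    return t0 + index * dt


def nearest_index(t0, dt, x):
    """Index of the sample closest to a given X-axis (time) position."""
    return round((x - t0) / dt)


cursor_x = sample_time(t0=10.0, dt=0.5, index=4)
cursor_index = nearest_index(t0=10.0, dt=0.5, x=cursor_x)
print(cursor_x, cursor_index)
```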

If I run into it again I'll holler.

PS: They made some changes to this in LabVIEW 8

Regards

Jack Hamilton

-

Be careful using FlattenToString with DataSocket. In the LV 6.02 version (and perhaps higher versions, but I've never checked), DS recognises \00 as the terminator of the string (i.e. DS is a 'C' API and resolves 'C'-style terminated strings). Flattened strings sometimes carry \00 within them, and the decode on the other end doesn't work because the entire string is never received by the DS Reader. You will need to bypass this with some smarts to make it a truly robust transfer.

cheers, Alex.

Alex, I've actually tested and used this code in 6i, so it does work. Good caution, though: I was sending B&W images, which are only U8 data. Color images could be an issue.
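Alex's caution can be modelled in a few lines of Python. The `c_string_receive` function is a made-up stand-in for a receiver that stops at the first \00, and Base64 is just one possible workaround that I'm swapping in for illustration; it is not something either post prescribes.

```python
import base64
import struct


def c_string_receive(payload):
    """Model a receiver that treats the data as a C string: stop at the first NUL."""
    return payload.split(b"\x00", 1)[0]


# Flattened U32 data is full of zero bytes.
flattened = struct.pack(">3I", 1, 2, 3)

# Sent raw: everything from the first \00 onwards is lost.
received_raw = c_string_receive(flattened)

# Sent as Base64 text: no zero bytes, so the whole string arrives.
encoded = base64.b64encode(flattened)
received_safe = base64.b64decode(c_string_receive(encoded))

print(len(flattened), len(received_raw))  # 12 0
print(received_safe == flattened)         # True
```

The cost is size: Base64 makes the payload about a third larger, which matters for images.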

Regards

Jack Hamilton

-

Well that's an interesting question.

LabVIEW can cast LabVIEW data and clusters as XML and read them back. Although XML is an 'open tag' architecture, LabVIEW will only support its own XML tags.

You can write a custom XML importer for LabVIEW: as XML is just a string, you can look for special tags, extract them and convert them to numerics, strings, arrays etc. via traditional LabVIEW methods.

There is nothing really magic about XML - just think of it as a kind of ASCII text data/storage file.
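To show how little magic is involved, here is the same "find the tags, convert the text" idea as a Python sketch. The document and its tag names (`measurement`, `name`, `gain`, `samples`) are invented for the example; a custom importer in LabVIEW would do the equivalent with string functions.

```python
import xml.etree.ElementTree as ET

DOC = """<measurement>
  <name>run-1</name>
  <gain>2.5</gain>
  <samples>1,2,3,4</samples>
</measurement>"""


def parse_measurement(text):
    """Pull out the tags we care about and convert each to a native type."""
    root = ET.fromstring(text)
    return {
        "name": root.findtext("name"),                                      # string
        "gain": float(root.findtext("gain")),                               # numeric
        "samples": [int(v) for v in root.findtext("samples").split(",")],  # array
    }


record = parse_measurement(DOC)
print(record)
```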

Regards

Jack Hamilton

cRIO TCP/IP Problems

in Real-Time

Posted

Sorry to chime in late here. I've done lots of cRIO TCP/IP data streaming apps. Some tips:

1. Use Queues to buffer data between the acquisition loop and the TCP/IP send loop.

2. Code the TCP/IP yourself - avoid the RT FIFOs. They work, but not very well, and they don't expose all error conditions.

3. Download the *free* "Robust TCP/IP" from www.labuseful.com; it's a proven, robust TCP/IP send and receive model.

4. Be aware that you can CPU-starve threads in the cRIO system! You're not in Windows anymore; you've got to write very clean LV code without a lot of VI Server tricks!
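Tip 1 is the classic producer/consumer pattern. Here is a minimal Python sketch of it, not cRIO code: the acquisition loop is faked, a local socket pair stands in for the real TCP connection, and the queue size of 1000 is an arbitrary choice.

```python
import queue
import socket
import struct
import threading


def acquisition_loop(out_q, n_samples):
    """Producer: stands in for the acquisition loop (no real hardware here)."""
    for i in range(n_samples):
        out_q.put(float(i))
    out_q.put(None)  # sentinel: tells the sender there is no more data


def tcp_send_loop(in_q, sock):
    """Consumer: drains the queue and streams each sample as a big-endian double."""
    try:
        while True:
            sample = in_q.get()
            if sample is None:
                break
            sock.sendall(struct.pack(">d", sample))
    finally:
        sock.close()  # always release the connection, even after a send error


def run_demo(n_samples=5):
    sender_sock, receiver_sock = socket.socketpair()  # local stand-in for a TCP link
    buffer = queue.Queue(maxsize=1000)                # bounded, so memory cannot grow forever
    threads = [
        threading.Thread(target=acquisition_loop, args=(buffer, n_samples)),
        threading.Thread(target=tcp_send_loop, args=(buffer, sender_sock)),
    ]
    for t in threads:
        t.start()

    raw = b""
    while True:
        chunk = receiver_sock.recv(4096)
        if not chunk:          # sender closed the socket
            break
        raw += chunk
    receiver_sock.close()
    for t in threads:
        t.join()
    return list(struct.unpack(f">{len(raw) // 8}d", raw))


streamed = run_demo(5)
print(streamed)
```

The acquisition side never waits on the network unless the queue fills up, which is the whole reason for putting the queue between the two loops.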

Regards

Jack Hamilton