dmurray

Posts posted by dmurray

-

-

4 hours ago, smithd said:

If you have a VM program you can use these (https://developer.microsoft.com/en-us/microsoft-edge/tools/vms/) to set up an easy deploy system. If you have Windows 8 or newer, Pro/Enterprise, then you have Hyper-V built in.

Do you mean just for testing packages before sharing? Or something more powerful?

-

48 minutes ago, hutha said:

What path did you put "libLV_2835.so" in on the Pi? For example "/home/pi", or some other path?

Amornthep

You need to place them in "/usr/lib", but you also need to be logged on as the root user working in the LV chroot. I wrote a simple document on how to create these shared objects and where they need to go on RPi. All the information should be there but if anything is unclear let me know.

-

7 minutes ago, Omar Mussa said:

I'm not sure - I actually think I may have messed that up and it may have just worked for the case where the dependency already existed where expected. I think the VIPM build process may grab it if it's in the source folder of the package, not sure - it would definitely be worth testing by deploying the package to a new machine.

Now that you mention it, I am actually getting a second PC in place to test these packages before I share them anyway. That will tell me a lot.

-

3 hours ago, hooovahh said:

Will the steps mentioned also force LabVIEW to recognize the SO file as a dependency, and include it in any builds? That's one issue I thought I remember having with a Windows built EXE, when specifying the DLL path by the CLN input.

I don't think so, the build process doesn't seem to care too much about the file once it can 'see' it in the Shared Object folder I created in vi.lib. However, users will still have to copy the shared object file into the correct location on the RPi, using Filezilla or some other means. And it needs to go into the user space that the MakerHub LINX tool creates. See Local IO description here for more details on the architecture if you're interested. So a user still has some work to do, which isn't ideal. I'm sure the process could be automated, but that's lower priority at the moment.

Another point is that the .so file in this case would not be compatible with the TSXperts compiler for RPi, which I believe is imminent.

Edit to add: Just realized you mean will there be a dependency problem for users when they install the package. That's something I need to check on a second machine.

-

2 hours ago, ShaunR said:

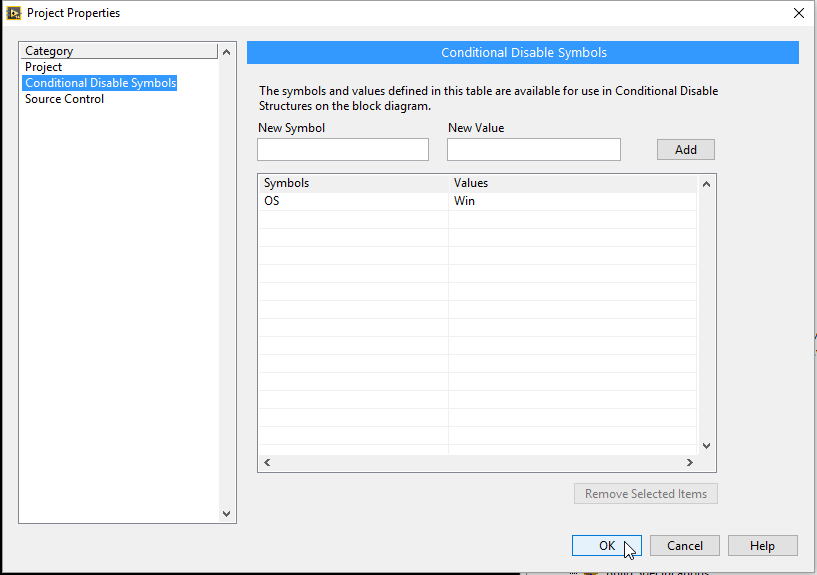

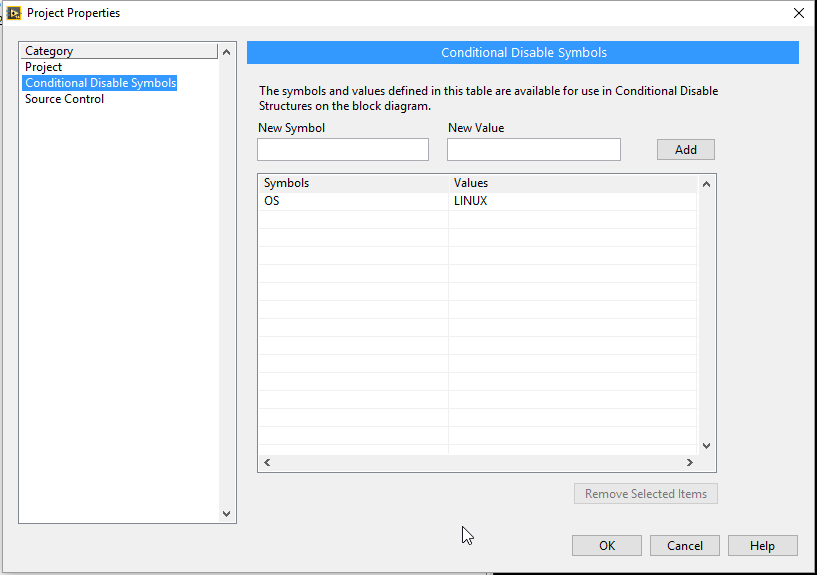

The OS condition is project only. When you deploy your package, the end user needs to have that in his project too (it also won't work if the VI is opened outside of a project). It is better to use the TARGET_TYPE (Windows, Unix and Mac) which doesn't require the user to do anything and works without a project file.

TARGET_TYPE doesn't seem to work in this case. Or, to elaborate, it works fine when building the package, but not when I deploy a project to the RPi (I get an error that the .so library cannot be opened). Also, on the project that I'm deploying to the RPi, I can't now set the TARGET_TYPE parameter to Unix to try to get around the error. It says something about the symbol being reserved by LabVIEW. Possibly I'm doing something wrong.

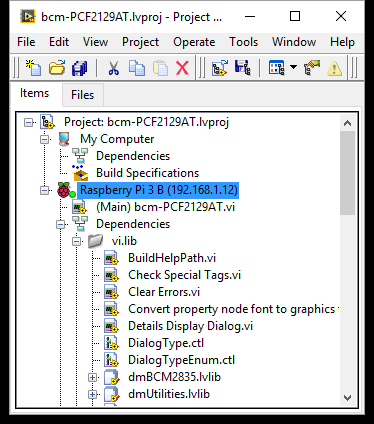

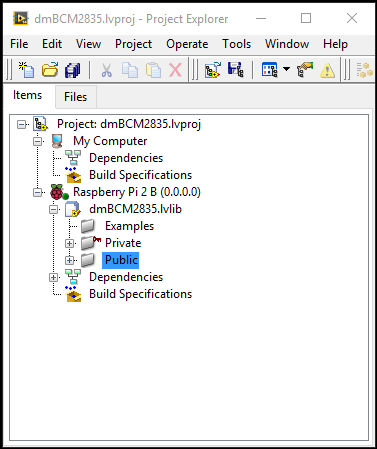

I don't think it matters that the user can only work in a project. I think they actually have to, as they need to set the target to Raspberry Pi (see below).

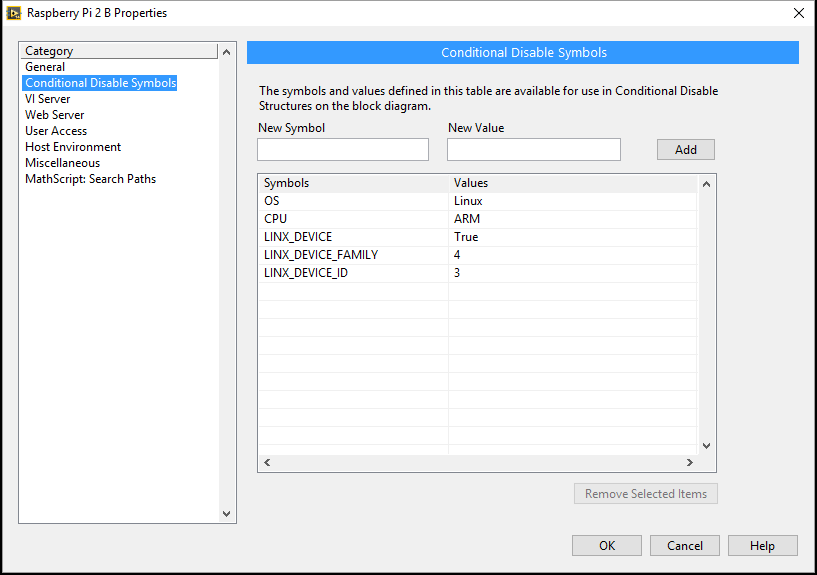

Another possibly important detail about the shared object file in this case is that it will only be compatible with the LabVIEW LINX tools. There are already some conditional disable options set up for the RPi in that case:

-

13 hours ago, Omar Mussa said:

That's great! One other thing you might try - I think the build process should also work if the Conditional structure default case was "empty path" and the "Linux" path was as you coded it - in that case it avoids the unnecessary hardcoded path to the .SO file in the unsupported cases (non-Linux). I think the code will still open as 'non-broken' if opened in a Linux target context (as it should be) and it will open broken in a Windows context (as it should be). It's probably best that the code is broken when opened in an unsupported context, because it's better to break at development time than at run-time in this scenario.

Done. Thanks again.

-

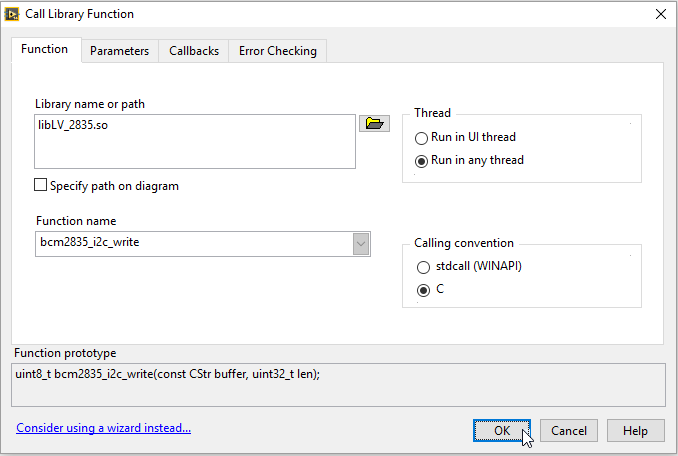

4 hours ago, Omar Mussa said:

I would check the "specify path on diagram" and try passing the path into the CLFN node and use a conditional disable structure to pass in the extension (or hard code it to only support Linux targets). Best practice would be to create a subVI with the constant so that you use the same path for all instances.

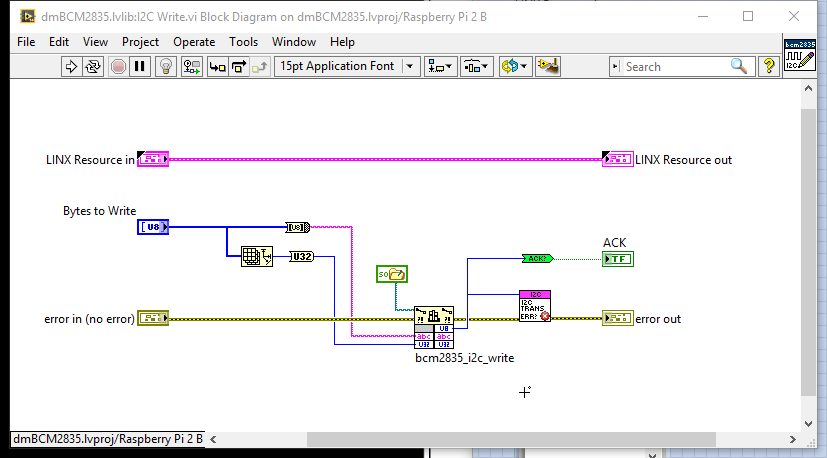

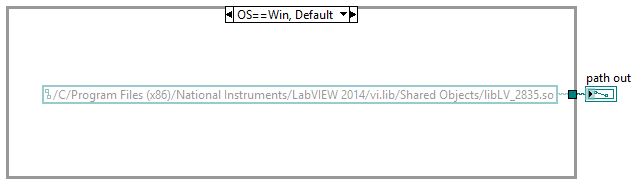

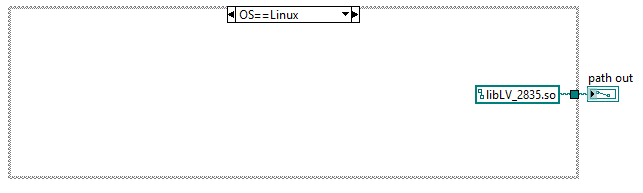

Works perfectly, thanks for the suggestion! Here are the steps I used, for anyone else who has this issue:

1. Created a directory in vi.lib for this shared object and future shared objects.

2. Used the 'Specify path...' option for the CLFN, and created the sub-vi for the path.

3. Created two options for the path; one for OS == Win and the other for OS == Linux.

4. When I build the VIPM package, I use the OS == Win symbol.

5. Then, in projects that I will deploy to the RPi, I use the OS == Linux option.

Thanks again, much appreciated.
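For anyone who thinks more easily in a text language, the path-selection idea in the steps above can be sketched roughly in Python. This is only an analogy for a conditional disable structure feeding the CLFN path input, not LabVIEW code. The helper name `shared_object_path` is hypothetical, and returning an empty path for non-Linux targets is an assumption of this sketch; only the library name `libLV_2835.so` and the `/usr/lib` location come from this thread.

```python
import sys

# Location on the RPi as described in this thread.
LINUX_LIB = "/usr/lib/libLV_2835.so"


def shared_object_path(platform: str = sys.platform) -> str:
    """Return the library path for a platform (hypothetical helper).

    Plays the role of the path sub-vi: one case per OS, chosen in one place
    so every caller uses the same path.
    """
    if platform.startswith("linux"):
        return LINUX_LIB
    # Assumption for this sketch: unsupported targets get an empty path,
    # so nothing is hard-coded for them.
    return ""


if __name__ == "__main__":
    print(repr(shared_object_path("linux")))
    print(repr(shared_object_path("win32")))
```

Keeping the choice inside one helper is the text equivalent of the single sub-vi constant: if the library moves, only one place changes.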

-

3 hours ago, drjdpowell said:

Try libLV_2835.*, as that means to use .dll or .so as applicable.

Doesn't seem to work unfortunately. For some reason a phantom file "libLV_2835.dll" has now appeared in the project dependencies, but the original problem still remains.

-

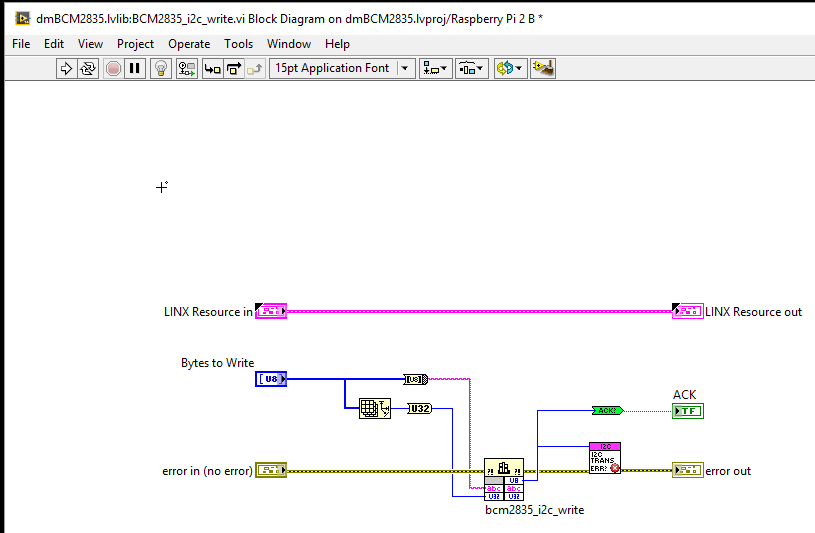

I've built a VIPM package of re-usable code which I want to use with the Raspberry Pi, the RPi effectively being an embedded Linux platform. For the code I first built a shared object file (.so extension, basically a Linux DLL) for this GPIO library. I then wrap the functions I need using LabVIEW CLFNs. Of course Windows hates this file and LabVIEW will complain if it tries to access it, but I can solve that by building my library inside a project with RPi as the target. When I build my VIPM package, a search takes place for the .so file, but it gets ignored and the package builds without errors. So everything is fine up to this point.

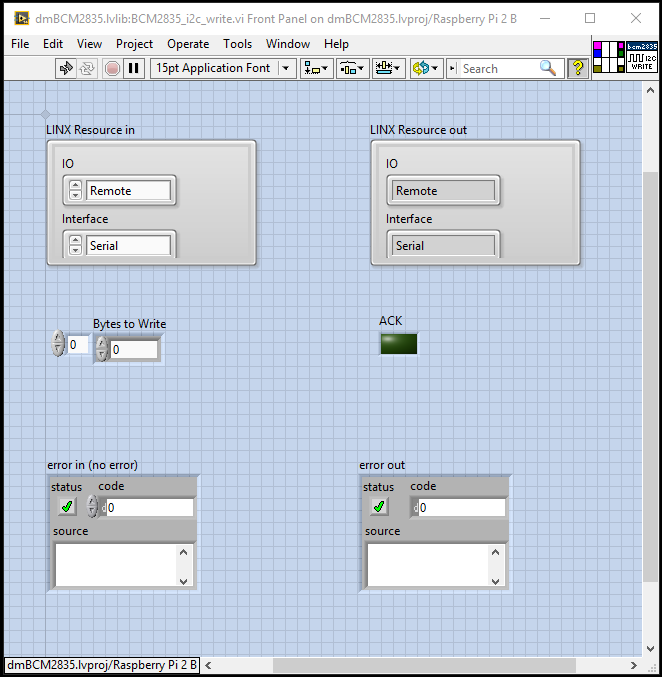

The problem occurs though when I install the VIPM package (to vi.lib, although it doesn't matter where). All the VIs in the package are broken (see pics below). I can solve this by simply opening up each CLFN on the block diagrams and then closing it; the VI is then fixed and the Run arrow becomes unbroken. So this will solve the problem. But I need to do this for all the VIs in the package, which is tedious. Does anyone have any recommendations on how to solve the broken VI problem? To be honest I don't mind the fix I have (opening and closing the CLFN for all VIs) but other users might not like this idea, and sharing decent code is one of my aims in using the RPi (in the Maker tradition).

Open and close CLFN to fix broken VI:

Fixed VI:

-

Don't change on my account, the discussion is relevant and interesting to me. Any more questions from me will probably be distinct enough that I will start a new thread anyway.

-

2 hours ago, ShaunR said:

I don't know much about that module but if it has that capability then yes, you could do that. Most NI modules are quite expensive and now you would have a hardware module dependency for just a watchdog. Most Linux kernels have a watchdog feature if the hardware supports it so I would look into that first. If that's not possible, cost is an issue and it is likely just your application that will hang rather than the OS, then you can spawn a separate process to act as the watchdog.

It turns out the RPi does have an internal watchdog feature, as you suggested, although apparently it has some issues. But I also found this, which will serve as an external watchdog if I need it. As well as the watchdog functionality, the real time clock itself will also be useful, as the RPi needs to be connected to the internet to keep accurate time (i.e. it doesn't have native or on-board real time clock functionality). So this is perfect for what I want.

Anyway, I'm probably overthinking this, because on reflection the concepts are really simple. But thanks for your help!

-

1 hour ago, ShaunR said:

Watchdogs usually give you the option to restart the software if it stops responding and are usually hardware driven. If you have a software one, the chances are your watchdog will hang too so you need an external process that gets kicked every so often. This external app can then forcefully close the application and restart it. You can communicate with the watchdog via TCPIP or sharedmem and just message it every so often from a dedicated loop in the software

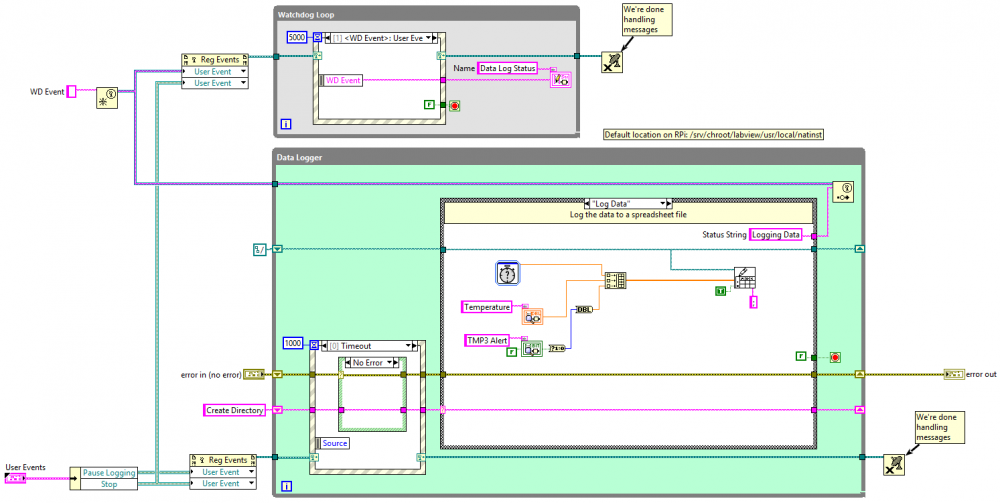

Okay. So is my thinking correct here?... I could use a HW Real-time clock module and configure it with a timeout. When my SW is running on the RPi, I just 'kick' the module timer periodically to reset the timer. And if the module does time out (because my SW has presumably hung), the module sends a signal back to the RPi which will reboot it.
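The kick-and-timeout idea described above can be sketched in Python as follows. This is a software stand-in for illustration only, not a driver for any real RTC or watchdog module: the class name `WatchdogTimer`, the 0.2 second timeout and the callback are all assumptions, and on real hardware the timeout action would be a reset signal to the RPi rather than a Python callback.

```python
import threading
import time


class WatchdogTimer:
    """Software stand-in for a hardware watchdog (hypothetical class)."""

    def __init__(self, timeout_s, on_timeout):
        self._timeout_s = timeout_s
        self._on_timeout = on_timeout
        self._timer = None
        self._lock = threading.Lock()

    def kick(self):
        """Restart the countdown; the application calls this periodically."""
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self._timeout_s, self._on_timeout)
            self._timer.daemon = True
            self._timer.start()

    def stop(self):
        """Disarm the watchdog, e.g. on a clean shutdown."""
        with self._lock:
            if self._timer is not None:
                self._timer.cancel()
                self._timer = None


def demo():
    fired = threading.Event()
    dog = WatchdogTimer(0.2, fired.set)
    for _ in range(3):        # healthy application: kicks arrive in time
        dog.kick()
        time.sleep(0.05)
    healthy = not fired.is_set()
    hung = fired.wait(1.0)    # application "hangs": no more kicks arrive
    dog.stop()
    return healthy, hung


if __name__ == "__main__":
    print(demo())
```

Note that a watchdog living in the same process shares its fate with the application, which is the point made in the quote: the timer really belongs in separate hardware or a separate process.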

-

-

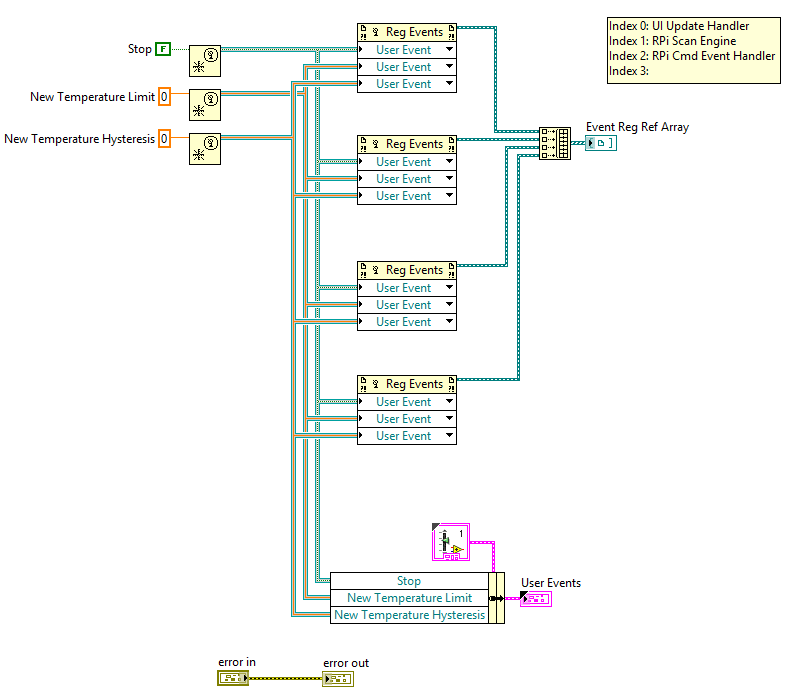

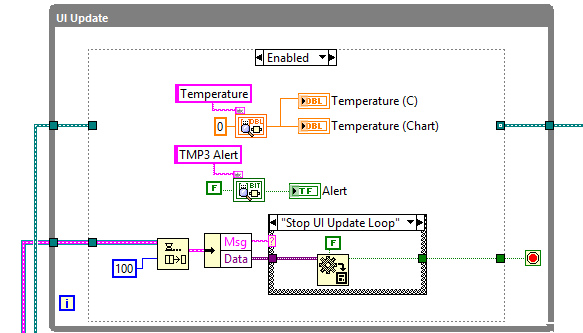

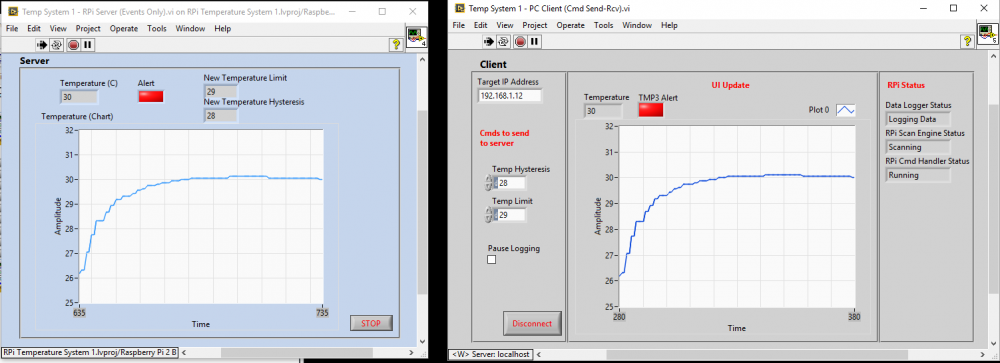

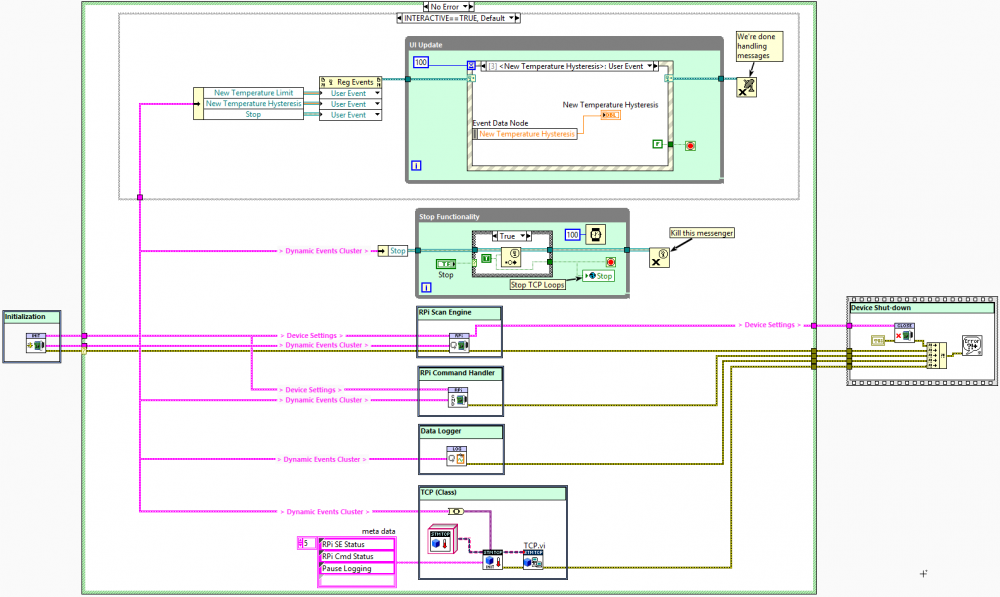

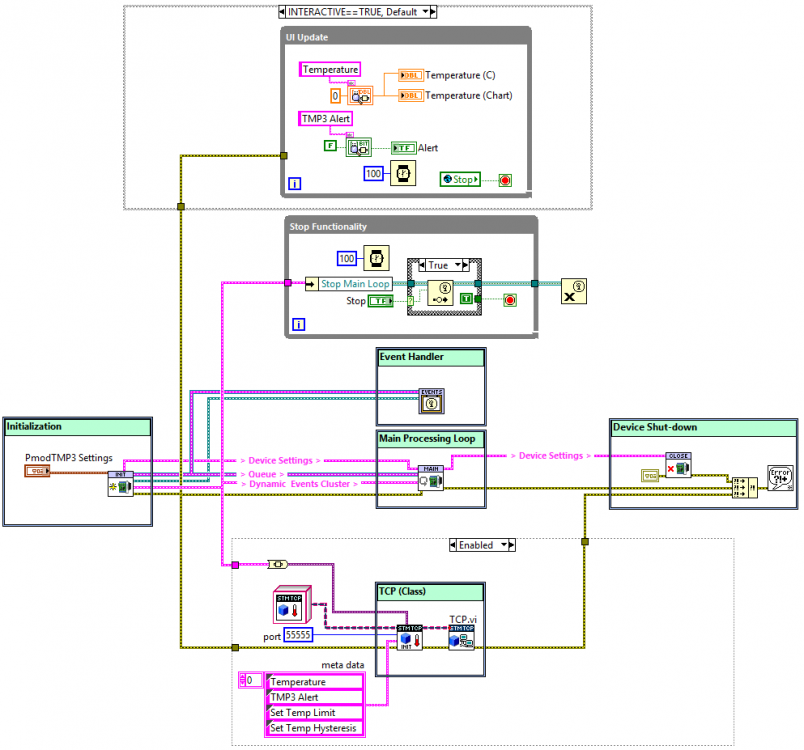

Related to my post here regarding designing a decent architecture for Raspberry Pi, I want to implement a watchdog timer, or timers. My current architecture is shown below. The three main loops that I think I need to monitor are the RPi Scan Engine, RPi Command Handler, and the Data Logger. The Scan Engine reads the system temperature and high-temp alert. The RPi Command Handler accepts commands from a TCP client and updates system settings. The Data Logger just logs the temperature and alert status at 1 second intervals. Regarding watchdog timers, the problem is I've never had a need for one in my usual LV programming, so I'm not quite sure what I want here. If I was using a cRIO I could use the built-in HW watchdog timers, but that doesn't apply here. So I guess I need some sort of SW timer. What's the general advice here regarding custom-built watchdog SW timers?
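One possible shape for a software check over the three loops, sketched in Python purely to make the question concrete. The class name `HeartbeatMonitor`, the 3 second limit and the injectable clock are assumptions of this sketch, not part of the architecture described above: each loop records a heartbeat every iteration, and a supervisor asks which loops have gone quiet.

```python
import time


class HeartbeatMonitor:
    """Track when each monitored loop last reported in (hypothetical class)."""

    def __init__(self, loop_names, max_age_s, clock=time.monotonic):
        self._clock = clock
        self._max_age_s = max_age_s
        now = clock()
        self._last_seen = {name: now for name in loop_names}

    def beat(self, name):
        """Called by a loop once per iteration to show it is still alive."""
        self._last_seen[name] = self._clock()

    def stale_loops(self):
        """Return the names of loops that have not reported within max_age_s."""
        now = self._clock()
        return sorted(
            name
            for name, seen in self._last_seen.items()
            if now - seen > self._max_age_s
        )


if __name__ == "__main__":
    fake_time = [0.0]  # injected clock so the example does not need to sleep
    monitor = HeartbeatMonitor(
        ["Scan Engine", "Command Handler", "Data Logger"],
        max_age_s=3.0,
        clock=lambda: fake_time[0],
    )
    fake_time[0] = 2.0
    monitor.beat("Scan Engine")
    monitor.beat("Data Logger")
    fake_time[0] = 4.0
    print(monitor.stale_loops())
```

What the supervisor does with a stale loop (restart it, restart the application, or stop kicking a hardware watchdog) is a separate decision this sketch leaves open.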

-

5 hours ago, ShaunR said:

Just to throw some more wood on the fire of experimenting.....

Queues are a Many-to-One architecture (aggregation). You can have many providers and they can post to a single queue. They also have a very specific access order and this is critical. Events are a One-To-Many architecture (broadcast); they have a single provider but many "listeners". Events are not guaranteed to be acted upon in any particular order across equivalent listeners; by that I mean if you send an event to two listeners, you cannot guarantee that one will execute before the other...

For control, queues tend to be more precise and efficient since you usually want a single device or subsystem to do something that only it can do and usually in a specific order. As a trivial example, think about reading a file. You need to open it, read and close it. If each operation was event driven, you could not necessarily guarantee the order without some very specific knowledge about the underlying mechanisms. With queues you can poke the instructions on the queue and be guaranteed they will be acted upon in that order. This feature is why we have Queued Message Handlers.

Now. That leads us to a bit of a dichotomy. We like queues because of the ordering but we also like Events because they are broadcast. So what would a hybrid system give us?

This is my now standard architecture for complex systems. A control queue with event responses in a service oriented architecture where the services can be orchestrated via their queues and listeners can react to events in the system. If you want to see what that looks like. Take a look at the VIM HAL Demo. It is a message based, service oriented architecture with self contained services that "plug-in" (not dynamically, but at design time).

There's quite a bit going on in the demo code, more than I can take in quickly. It appeals to me more as something I would use in my every-day LabVIEW coding in work, rather than what I'm trying to do with RPi, which is to build a system that intermediate programmers can easily understand. But again, I need to look at the code more closely to try and grasp the basic concepts in it, and see what I can use. For RPi, I can see useful services being a re-usable data logger, or TCP server, or maybe even reusable sensor modules. As I type this, I'm warming to the idea. And, another plus, I now know that VI macros exist.
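To get the basic concept straight, here is a rough Python sketch of the hybrid described in the quote: an ordered control queue going into a service, with broadcast responses coming out. It is not taken from the VIM HAL Demo; the names `Broadcaster`, `run_file_service` and `drain` are hypothetical, and the "work" is reduced to a string.

```python
import queue


class Broadcaster:
    """One-to-many: every registered listener gets its own copy of each event."""

    def __init__(self):
        self._listeners = []

    def register(self):
        listener_queue = queue.Queue()
        self._listeners.append(listener_queue)
        return listener_queue

    def fire(self, event):
        for listener_queue in self._listeners:
            listener_queue.put(event)


def drain(q):
    """Empty a queue into a list (helper for the demo)."""
    items = []
    while not q.empty():
        items.append(q.get())
    return items


def run_file_service(commands):
    """Many-to-one control queue in, broadcast events out."""
    events = Broadcaster()
    ui_listener = events.register()
    log_listener = events.register()

    control = queue.Queue()
    for command in commands:      # any number of senders could post here
        control.put(command)
    control.put("stop")

    while True:
        command = control.get()   # commands are handled strictly in queue order
        if command == "stop":
            break
        events.fire(f"{command} done")

    return drain(ui_listener), drain(log_listener)


if __name__ == "__main__":
    print(run_file_service(["open", "read", "close"]))
```

The queue guarantees open/read/close happen in that order inside the service, while each listener independently sees every response.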

-

14 hours ago, Tim_S said:

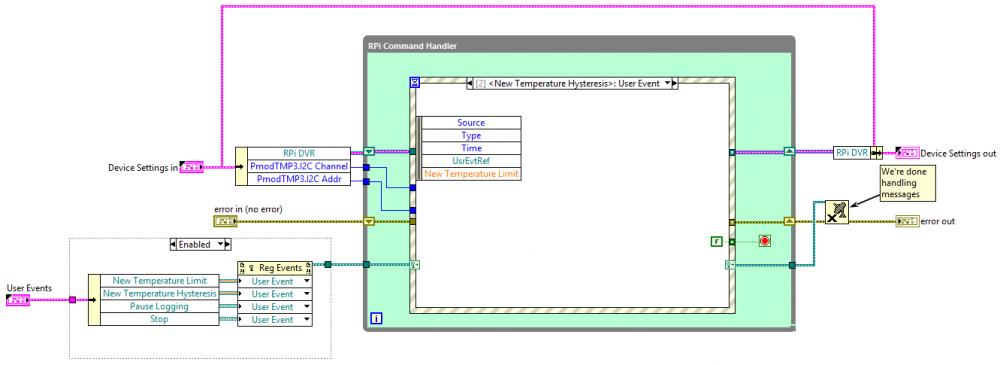

I expect New Temperature Hysteresis contains a numeric with a label of "New Temperature Limit", at least in the User Events control.

13 hours ago, smithd said:

Loads of good stuff...

Okay, so the reason I messed up the events was that I rearranged and renamed some of the events in the events cluster. Using method 2 (smithd) I fixed the issue, thanks for the info. Also smithd, thanks for the comprehensive post. Pretty much everything in it is useful to me. I'm going to crack on with some more coding...

-

An issue I've run into: I've been messing around with the events in this project, and at edit-time I've lost some information about the events. An example is shown below, where the event is <New Temperature Hysteresis>, but in the event I get the data for 'New Temperature Limit'. What have I screwed up, and how do I fix it?

-

2 hours ago, smithd said:

You only want to pass around the events themselves, not the registration refnums. You should register the very last thing before you get to the event structure and unregister the very first thing after the event structure. Otherwise you have this queue floating in the ether that isn't being read from.

I personally make a "create" function for any process which generates a cluster of input events and a "subscribe" function which takes any clusters from any other processes and copies the events into a local 'state' cluster. That is, I move the events around only during initialization. This probably doesn't work well for something more dynamic, but...

Register at destination... of course, seems obvious now that you say it.

Your second point, I can't quite visualize. Can you post a simple screen shot?

-

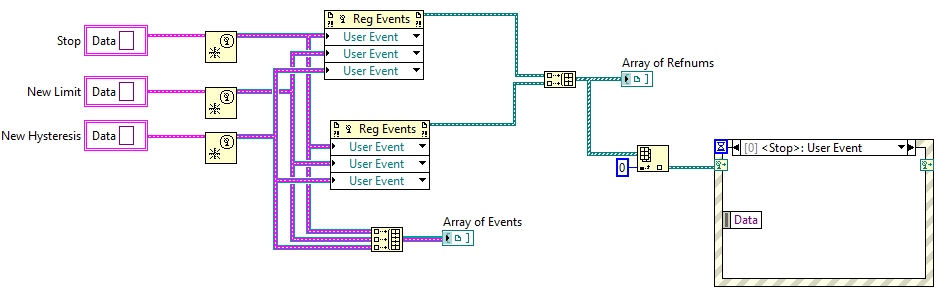

Related to this architecture, I have a question on the best way to pass around Event Refnums and User events to different sub-vi's. Currently what I'm doing is passing the Reg Events around as an array, and the User Events as a cluster (Method 1 below). Is a better solution to make all the events as the same type (variant in a cluster) and pass around an array (Method 2 below)? Or is there something else I need to be doing, or watch out for? I'd like to iron out these basics with this small project before I try to build something substantial and run into unforeseen issues.

Method 1:

Method 2:

-

6 hours ago, smithd said:

Yep, register for events is basically "create receive queue" while create user event is "create send queue".

To clarify my point, it was more to say that while LabVIEW has a bunch of different communication mechanisms, I'd just pick one. User events are nice, you can find a decent number of threads after the improvements in 2013 where people say they've totally switched from queues, and I've done that on a few programs so far as well. So in general my suggestion would be to either change the user events out entirely for queues, or change the queues out entirely for events.

While there is absolutely nothing wrong with also using a stop message for the polling loop, for something simple like that I have zero objection to globals or locals. That loop is obviously not something you ever plan to reuse, so worrying that it's tightly coupled to a global variable is... well, not worth worrying about. That having been said, if you have a global stop event it does make things cleaner to use it.

Yes, I was getting caught up a bit in stopping the loops cleanly, and wanted to experiment a bit anyway. As an exercise my plan now is to do one version completely using queues and another using events. That will push me along the learning curve.

My worry is that things will get too complex though. In the maker tradition, my plan would be to share this code with other users who generally won't have a lot of LabVIEW experience. Even implementing the TCP server as a class like I did here would be too much for most people. But also it's important that I don't do anything really bad in terms of coding. No point in passing bad habits along to others.

Thanks for your help, it's much appreciated. I'm sure I'll have more questions as I go along.

-

6 hours ago, dmurray said:

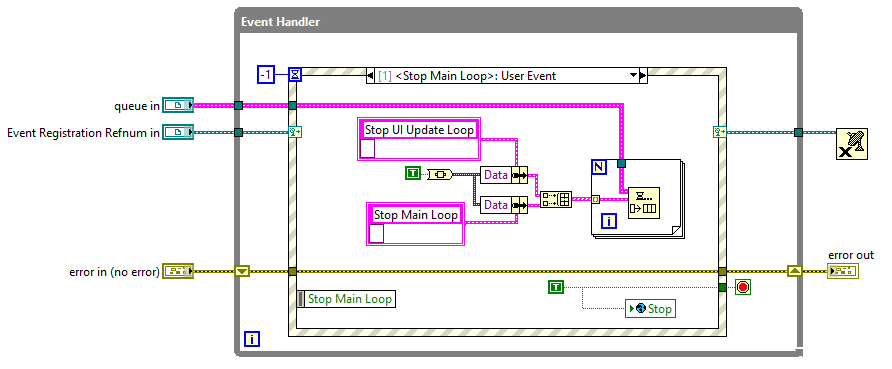

Now on to events: I'm having some trouble implementing the event-style stop. I assumed I could use one event trigger ('Stop Main Loop' in my example) and have more than one event structure be registered for that event i.e. a one-to-many relationship. I did that in my code (one generated event, two event structures registered for the event) but sometimes only one event structure fires when the event is generated. Am I right that events are a one-to-many relationship?

Okay, had a re-watch of the Jack Dunaway presentation from NI Week a few years back, and realized I was splitting the event reg refnum wire incorrectly i.e. I was just branching it and wiring it to the two event handlers. Having two Reg Events functions solved the problem. The fine details in LabVIEW are always my downfall....
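If it helps anyone else, the difference can be modelled in Python by treating each registration as a receive queue (the analogy smithd uses elsewhere in this thread). This is a toy model and not how LabVIEW is implemented; the function names are hypothetical, and the alternating hand-out in the branched case is an arbitrary stand-in for "whichever handler gets there first".

```python
import queue


def branched_registration(events):
    """One receive queue shared by two handlers (models a branched refnum wire)."""
    shared = queue.Queue()
    for event in events:
        shared.put(event)
    handler_a, handler_b = [], []
    handlers = [handler_a, handler_b]
    turn = 0
    while not shared.empty():
        # Whichever handler dequeues the event consumes it; the other never
        # sees it. Alternating turns is an arbitrary choice for this sketch.
        handlers[turn % 2].append(shared.get())
        turn += 1
    return handler_a, handler_b


def separate_registrations(events):
    """One receive queue per handler (models two Reg Events functions)."""
    queue_a, queue_b = queue.Queue(), queue.Queue()
    for event in events:
        for q in (queue_a, queue_b):
            q.put(event)          # every registration gets its own copy
    handler_a = [queue_a.get() for _ in range(queue_a.qsize())]
    handler_b = [queue_b.get() for _ in range(queue_b.qsize())]
    return handler_a, handler_b


if __name__ == "__main__":
    print(branched_registration(["Stop Main Loop"]))
    print(separate_registrations(["Stop Main Loop"]))
```

With the shared queue only one handler ever sees the stop event, which matches the "sometimes only one event structure fires" symptom; with one queue per handler both see it.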

-

1 hour ago, bbean said:

I know the title of your post is "CRIO-style architecture for Raspberry Pi" and briefly looking over the architecture I think it looks fine. But have you or anyone tried this architecture on the Rpi:

What are the limitations of the Rpi LabVIEW runtime? When I get some time I'd like to investigate more.

I haven't tried that particular architecture, but I will now that I know about it (thanks!). Web Services support has been added to LINX-RPi; there's a basic example on MakerHub, so I see no reason that the code in your link shouldn't work. The only thing to watch out for is that LINX-RPi doesn't support Shared Variables, in case they (Network Shared Variables) are used in the code you linked to. I need to have a proper look through the code.

Regarding RPi and LabVIEW there are two approaches that I know of: MakerHub (LINX) and TSXperts. What I know about MakerHub-Linx:

- Doesn't support front panels running on a monitor attached to the RPi. You can either run code interactively with the front panel on your development PC, or use a deployed application and use TCP/Web Services/(other??) to interface with the RPi.

- Shared Variables aren't yet supported as mentioned. Not sure if that's much of an issue.

- GPIO support is somewhat limited. No PWM, can't use dedicated CS pins for SPI, can't programmatically set pull-ups/downs, events, etc. I'm currently wrapping this library to improve the GPIO functionality, although I guess the MakerHub guys will do something similar eventually anyway.

- As I understand it, LINX is not meant to be used in commercial applications. It's really meant for hobbyists/makers/academia/etc.

- The RPi won't have the determinism of a RT system, but again for the types of applications it's meant for that probably doesn't matter.

- RPi currently only runs on LV2014. But you can use the Home version which is very cheap (compared to the standard/pro versions), and you get the Control and Simulation package, and MathScript functionality as well.

Regarding the TSXperts version, I don't know much about it. There's a LAVA thread here. Main differences are it allows the front panel to be displayed on a monitor attached to the RPi, and it is a commercial application (both non-free and can be used in commercial apps, if it follows their Arduino model). I think it's almost ready to be released. I'll probably buy the cheaper version to evaluate it.

-

1 hour ago, smithd said:

I would in general prefer to use the event to every loop. For example you have a QMH and an event structure -- I would pick one. The issue with the global is now every function is coupled to that one instance of the stop global. Using an event (or a queue message or a notifier) means you can pass in any queue or event. Is it a big deal? No, I'm just saying what I'd prefer.

The other issue with the global rather than a queue, notifier, or event is that the global is sort of implied to be an abort rather than a request to stop. The natural implementation is what you have in your polling loop -- wire the global directly to the stop. For the polling loop that's not a big deal, but for a more complex situation (talking to an FPGA for example) maybe you want to step through a few states first before you actually exit, which means you need to either (a) put all that logic outside of the loop you just aborted with the global or (b) check the state of the global, then trigger some internal state change which eventually stops the loop.

So just to clarify, for a queue I just need to do this:

(1) Add another message to the QMH:

(2) Handle the message in a loop as follows:
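In text form, the same two steps look roughly like this Python sketch. It is an analogy for a queued message handler, not the LabVIEW code itself; the message names, the `message_handler` function and the "closing resources" step are assumptions made for illustration.

```python
import queue


def message_handler(inbox):
    """Process (message, data) pairs in order until a stop message arrives."""
    log = []
    while True:
        message, data = inbox.get()
        if message == "set limit":
            log.append(f"limit={data}")
        elif message == "stop":
            # Stop is a request, not an abort: clean up first, then exit.
            log.append("closing resources")
            break
        else:
            log.append(f"unknown message: {message}")
    return log


if __name__ == "__main__":
    inbox = queue.Queue()
    inbox.put(("set limit", 30))
    inbox.put(("stop", None))       # step (1): stop is just another message
    inbox.put(("set limit", 99))    # queued after stop, so never handled
    print(message_handler(inbox))   # step (2): the loop handles it in order
```

Because stop travels on the same queue as everything else, anything queued before it is still processed, and the loop gets the chance to run its own shutdown steps before exiting.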

Now on to events: I'm having some trouble implementing the event-style stop. I assumed I could use one event trigger ('Stop Main Loop' in my example) and have more than one event structure be registered for that event i.e. a one-to-many relationship. I did that in my code (one generated event, two event structures registered for the event) but sometimes only one event structure fires when the event is generated. Am I right that events are a one-to-many relationship?

-

Okay, so I cleaned things up a bit for RPi side. Basically just put the Stop functionality in its own loop and then was able to have the main loop as a sub-vi. I also wrapped the TCP functionality in a class so that I can re-use it on other projects. The BD is still a bit messy though.

A question on using a Global for stopping loops: in this case I assume that because the global is only written in one location (i.e. the event loop when I'm shutting down), and read in several loops, that this is a safe use case for a global? I think my other options are using an LV2-style global (not much point as it's effectively the same thing?), or using a CVT value seeing as I already have that functionality in the code anyway.
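The single-writer, many-reader pattern in question looks like this as a Python sketch, with a `threading.Event` standing in for the stop global. The loop names, timings and the `demo` function are assumptions for illustration; it only shows the pattern and makes no claim about LabVIEW's own behaviour.

```python
import threading
import time

# Stands in for the stop global: written in one place, read by every loop.
stop_requested = threading.Event()


def polling_loop(name, counts):
    """A simple loop that polls the shared stop flag on every iteration."""
    while not stop_requested.is_set():
        counts[name] = counts.get(name, 0) + 1
        time.sleep(0.01)


def demo():
    stop_requested.clear()
    counts = {}
    threads = [
        threading.Thread(target=polling_loop, args=(name, counts))
        for name in ("scan", "logger")
    ]
    for thread in threads:
        thread.start()
    time.sleep(0.05)
    stop_requested.set()            # the only place the flag is written
    for thread in threads:
        thread.join(timeout=1.0)
    all_stopped = all(not thread.is_alive() for thread in threads)
    return all_stopped, counts


if __name__ == "__main__":
    print(demo())
```

With one writer and readers that only poll, there is no competing update to lose; the trade-off raised earlier in the thread still applies, since every loop is now tied to this one flag.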

[VIPM] Building a package which uses a linux shared project (.so file)

in Application Builder, Installers and code distribution

Posted

Okay, just to tidy up this post, the steps I used work with no dependency issues when I tested on a second PC (just got around to testing this now). The package(s) are available on the NI forum here, if anyone is interested. Thanks to everyone who helped me with this.