Posts: 899

Days Won: 50


Posts posted by Phillip Brooks


-

Bonus points for anyone under 25 who remembers what EGA resolution and colors were without going to Google or Wikipedia...

I'm 40, so it doesn't count: 640 x 350 x 16 colors. When I got out of college, I worked for a PC-based CAD/CAM reseller. We would buy the IBM EGA graphics cards, then order optional memory to install on the card to get 64 colors. We also upgraded the IBM-AT memory to 512 KB using "piggy-back" chips. (That's how I learned about ESD!) I remember installing secondary serial/parallel boards to connect a plotter as well as a digitizing tablet, and you had to pull these fragile little termination blocks off and flip them around to select COM2/LPT2.

If you know what a Tecmar Graphics Master is, then I'm not surprised that your eyesight is deteriorating. The interlaced 640 x 480 drove me NUTS! The only monitor that worked well was a Zenith because of its slow refresh rate.

Woah, what a flashback! I suddenly feel the urge to put on my white high-tops, knit tie and MEMBERS ONLY jacket and then cruise the mall drinking an Orange Julius.


-

The whole top panel seems to be dedicated to debugging. (Must be for CVI programmers...)

-

OK. I was tired of the little mouse avatar anyway. See my profile.

-

I wrote the solution.

i2dx; if you wrote the solution, then I should get MORE because I provided a business plan! Sales and Marketing make the big bucks, so I'll take 5%, please...

I also expect an office befitting a pointy-haired boss, and will need a car allowance and my golf fees paid. Wait, I don't golf...

-

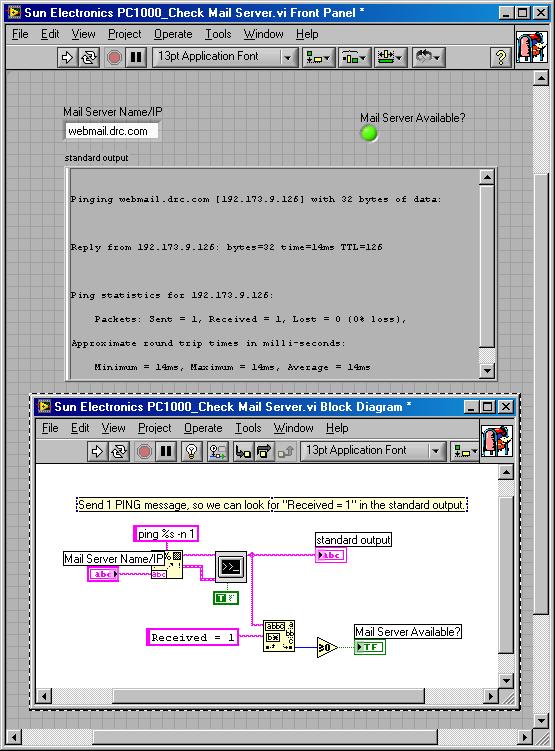

To test the connection with the network before I try and send out the email

You can use the System Exec function with a 'ping' command. Search the standard output for a phrase that will let you know that the ping was successful.

The attached example uses LabVIEW 8.0.1.
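For anyone doing the same check outside LabVIEW, here is a minimal Python sketch of the System Exec idea: run `ping` once and search its standard output for a reply phrase. It assumes a command-line `ping` is on the PATH and that a successful reply line contains `TTL=` (Windows) or `ttl=` (Linux/macOS); `ping_host` and `reply_indicates_success` are hypothetical names, not part of any LabVIEW API.

```python
import platform
import subprocess


def reply_indicates_success(stdout: str) -> bool:
    """Return True if ping's standard output contains an echo reply.

    Assumption: reply lines contain "TTL=" on Windows and "ttl=" on
    Linux/macOS, so a case-insensitive search covers both. Failure
    messages such as "Request timed out." do not contain it.
    """
    return "ttl=" in stdout.lower()


def ping_host(host: str, timeout_s: float = 5.0) -> bool:
    """Send one ping to host and report whether a reply was seen."""
    # Windows ping uses -n for the packet count; Unix-like systems use -c.
    count_flag = "-n" if platform.system() == "Windows" else "-c"
    try:
        result = subprocess.run(
            ["ping", count_flag, "1", host],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):
        # No ping executable available, or no answer within timeout_s.
        return False
    return reply_indicates_success(result.stdout)
```

A caller would check something like `ping_host("smtp.example.com")` (a placeholder host) before trying to send the email. Searching the text output mirrors the original suggestion; the exit code of `ping` varies between platforms, so it is not relied on here.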

-

I don't have "d" problem on my machine! If Ed's machine shows 'z' problem, then it's obviously related to language.

But seriously, did you happen to use Jim's new accelerated mass-compile? If so, maybe that has something to do with it.

-

I don't speak French! Everything else is still in English. The Example Finder INI file is in French as well.

Ed

Just finished my mass-compile (2+ hours!) and checked; mine is in English.

-

I need to do some kind of experiment (electrical measurements) in LabVIEW using a sound card as the interface!

There is a company called Listen, Inc. in Boston, MA, USA that has developed calibration tools based on LabVIEW and good-quality sound cards: SoundCheck.

Yeah, great. How does that help me with my project?

Use a good speaker and a crappy mic, source a series of tones, then chart the sampled data over the source data. Many laptops have a built-in mic as well as a mic port. Compare the built-in mic to an external one. Fart and burp sounds optional...
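One way to put a number on "sampled data over the source data" is to compute, for each test tone, the gain of the speaker/mic chain in dB. This is a minimal Python sketch using a single-bin DFT; it assumes the recorded buffer has already been captured from the sound card (how you capture it is not shown) and that each tone spans a whole number of cycles in the buffer. The function names are illustrative only.

```python
import math


def tone(freq_hz, sample_rate, n_samples, amplitude=1.0):
    """Generate n_samples of a sine tone at freq_hz."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def amplitude_at(samples, freq_hz, sample_rate):
    """Estimate the amplitude of the freq_hz component with a single-bin DFT.

    Exact when the buffer holds a whole number of cycles of freq_hz.
    """
    n = len(samples)
    w = 2 * math.pi * freq_hz / sample_rate
    re = sum(s * math.cos(w * i) for i, s in enumerate(samples))
    im = sum(s * math.sin(w * i) for i, s in enumerate(samples))
    return 2.0 * math.hypot(re, im) / n


def response_db(source, recorded, freq_hz, sample_rate):
    """Gain of the speaker/mic chain at freq_hz in dB (recorded vs. source)."""
    ratio = (amplitude_at(recorded, freq_hz, sample_rate)
             / amplitude_at(source, freq_hz, sample_rate))
    return 20 * math.log10(ratio)
```

Running this for each tone in the series, once with the built-in mic and once with the external one, gives two response curves you can chart against each other.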

-

If there is an easier way to save cfg data and recall it, I'm all ears.

Attached is a sample of a simple INI Functional Global I've used. It contains Initialize, Set, Get, Read INI and Write INI options. It also has an EDIT state where it pops up the front panel to allow you to change the values. The LLB also contains cluster-based Configuration File functions that I wrote before I learned about the OpenG libs.

Saved in 7.0 for your convenience.
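For readers who think in text languages, the Functional Global pattern can be approximated in Python with a closure whose private dictionary stands in for the uninitialized shift register. This is a hypothetical sketch, not a translation of the attached VI: it covers Initialize, Set, Get, Read INI and Write INI, uses the standard-library `configparser`, assumes a single `[Settings]` section, and omits the EDIT (pop-up front panel) state.

```python
import configparser
from enum import Enum


class Action(Enum):
    INITIALIZE = "Initialize"
    SET = "Set"
    GET = "Get"
    READ_INI = "Read INI"
    WRITE_INI = "Write INI"


def _new_parser():
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key case exactly as given
    return parser


def _make_config_global(section="Settings"):
    # The closure's dict plays the role of the uninitialized shift register:
    # it persists between calls and is only reachable through one function.
    store = {}

    def config_global(action, key=None, value=None, path=None):
        if action is Action.INITIALIZE:
            store.clear()
        elif action is Action.SET:
            store[key] = str(value)
        elif action is Action.GET:
            return store.get(key)
        elif action is Action.READ_INI:
            parser = _new_parser()
            parser.read(path)
            if parser.has_section(section):
                store.update(parser[section])
        elif action is Action.WRITE_INI:
            parser = _new_parser()
            parser[section] = dict(store)
            with open(path, "w") as f:
                parser.write(f)
        return None

    return config_global


config_global = _make_config_global()
```

As with the VI version, every caller goes through the single `config_global` entry point, so there is one copy of the configuration data in memory.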

-

Thanks for the input!

I bit the bullet and installed LV 8.0. It looks OK so far. The first thing I did was set the menus, etc. to look like 6.1, then install OpenG and my user and instrument libs. So far, so good. I'm going to play with the splitter bars and see if I can make a case for using 8.0.

-

Linux-compatible dataloggers? This appears to limit your choices somewhat. The Veriteq that I mentioned uses an unpublished protocol, so you can't just read the serial port; you have to interface to their DLL. You will likely have to find a logger with a published format and an interface you can readily connect to (RS-232/Ethernet).

A quick Google search for "Linux data logger" returned links for data loggers I've heard of, but which don't appear on the MicroDAQ line card.

Good luck!

-

Do you have any recommendations or suggestions?

I don't know about air pressure, but I worked in an accredited calibration lab and we used Veriteq loggers. These can be calibrated with FDA-compliant traceability.

For various types of loggers, start at MicroDAQ to see what's available on the market. I've never purchased anything from them. Their list of offerings should help you find what you need.

The other option is to "roll your own" using FieldPoint and various transducers. This would likely be more expensive, but you could select the features that you need, set up a process control loop, and manage heating/ventilation/humidity devices.
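If you do roll your own, the simplest process control loop for a heater or humidifier is on/off control with a deadband (hysteresis). Here is a minimal Python sketch of that logic only; it assumes you read the transducer and drive the output relay elsewhere (for example through FieldPoint), and the setpoint and deadband values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class HysteresisController:
    """On/off (bang-bang) control with a deadband, e.g. for a heater.

    setpoint and deadband use the same units as the measurement.
    """
    setpoint: float
    deadband: float
    output_on: bool = False

    def update(self, measurement: float) -> bool:
        low = self.setpoint - self.deadband / 2
        high = self.setpoint + self.deadband / 2
        if measurement < low:
            self.output_on = True   # too cold: turn the heater on
        elif measurement > high:
            self.output_on = False  # too warm: turn the heater off
        # Inside the deadband the previous state is kept, which
        # prevents rapid relay chatter around the setpoint.
        return self.output_on
```

The deadband is the design choice that matters here: without it, a noisy reading near the setpoint would switch the relay on and off every scan.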

-

Has anyone used the splitter bars in LV 8? I'm starting a new project, haven't settled on using LV 8 yet, and was reading about splitter bars. They might add enough jazz to my UI to switch.

http://zone.ni.com/reference/en-XX/help/37...pane_container/

Using Splitter Bars to Create Toolbars and Status Bars

You can use a splitter bar to create a toolbar on a front panel that you can configure with any LabVIEW control. To create a toolbar at the top of a VI, place a horizontal splitter on the front panel near the top of the window, and place a set of controls in the upper pane. Right-click the splitter and select Locked and Splitter Sizing

-

This method works 100% for TCP, and also works for UDP when I put a 1ms Wait Timer inside the send loop.

1 ms is the smallest delay you can place in a loop. Your real application won't be done locally like your test; I suggest that you connect two computers and pass some traffic over a real network segment. There will be delays in your source data and network connection that can't be simulated in the way you are trying.

The best you could do is use a Quotient & Remainder function in your send loop: divide the iteration counter by some number and check whether the remainder is zero. Include a conditional case that waits 1 ms after every, say, 20 messages to throttle the send loop the way a real network and data source would.
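The same throttling idea looks like this in a minimal Python sketch, using the standard-library `socket` module and `divmod` in place of Quotient & Remainder. `send_throttled` is a hypothetical helper, and the 20-message/1 ms figures are just the example values from the paragraph above.

```python
import socket
import time


def send_throttled(sock, addr, messages, every=20, pause_s=0.001):
    """Send each message as a UDP datagram, pausing after every `every` sends.

    When (i + 1) divided by `every` leaves remainder 0, insert a short wait
    so the sender cannot outrun the receiver the way an unthrottled
    loopback test can.
    """
    sent = 0
    for i, payload in enumerate(messages):
        sock.sendto(payload, addr)
        sent += 1
        _, remainder = divmod(i + 1, every)
        if remainder == 0:
            time.sleep(pause_s)
    return sent
```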

Otherwise, TCP may be the way to go for you!

-

It is possible my code has some logical error. Maybe you could take a look at it below:

The only thing that catches my eye is that you are comparing the Expected Data Arrival Size (Bytes), which I assume is static, to a value that increases with every UDP receive (Shift Register + Length of String). I think you want to connect the equality check to the output of the String Length function, not the sum of the lengths read. You could also multiply the iteration value by the Expected Data Arrival Size (Bytes) and compare that to your shift register's current value.
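The two checks can be written out in Python to make the difference concrete. This is a minimal sketch with hypothetical function names. For the cumulative check it assumes a zero-based iteration index and that the current read has already been added to the running sum, which is why it multiplies by `iteration + 1`.

```python
def first_bad_read(lengths, expected_size):
    """Index of the first read whose own length differs from expected_size, or None.

    This compares each read's String Length output, not the running sum
    held in the shift register.
    """
    for i, n in enumerate(lengths):
        if n != expected_size:
            return i
    return None


def running_total_ok(total, iteration, expected_size):
    """Alternative check: compare the running sum to the expected cumulative size.

    `iteration` is zero-based, so once the current read has been added the
    running sum should hold (iteration + 1) reads' worth of bytes.
    """
    return total == (iteration + 1) * expected_size
```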

-

I think you addressed my new problem (I am losing UDP packets bigger than 8 bytes, or so) ; I think the loop is going too fast. Is that what you meant by "Place the UDP Read function in a tight while loop and pass the output to a queue." ?? I don't really understand what you mean by that paragraph.

Remove any Wait (ms) or Wait Until Next ms Multiple calls from your while loop. The UDP demo has a fixed 10 ms wait between reads. Do not specify a max size for your messages; leave this connector unwired. The OS determines whether a UDP datagram is valid and delivers it to LabVIEW regardless of its length. If you're sourcing 8-byte UDP messages from another VI, then the receiving VI will receive 8-byte messages.

For the timeout, use whatever is reasonable for you; start with 100 ms. If the timeout occurs, the function will exit and the error cluster will return code 56. This simply means that no data was received, so check the code and ignore the error if it equals 56. If a UDP datagram arriving on the listening port (regardless of length) passes the checksum, the OS will pass it to LabVIEW and LabVIEW will immediately exit the Read function. I can't understand why you would be running "too fast" or "dropping messages", considering that the OS buffers UDP messages.

Make sure that you are not closing and re-opening the UDP session handle after each UDP read. This would likely result in your CPU utilization reaching 100% and datagrams being missed.
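Here is a Python sketch of the same receive rules: the socket is opened once and reused, a timeout is treated as "no data yet" (the counterpart of ignoring error 56), and the read buffer is large enough that no datagram is cut short. It uses the standard-library `socket` module; `receive_datagrams` and its `max_idle` limit are hypothetical, and this is an analogy to the LabVIEW functions, not their implementation.

```python
import socket


def receive_datagrams(sock, wanted, timeout_s=0.1, max_idle=50):
    """Collect up to `wanted` datagrams from an already-open UDP socket.

    A timeout only means "nothing arrived yet", so it is counted and
    ignored rather than treated as a failure. The caller opens the socket
    once and reuses it for every read.
    """
    sock.settimeout(timeout_s)
    received = []
    idle = 0
    while len(received) < wanted and idle < max_idle:
        try:
            # 65535 bytes covers any UDP payload, so a datagram is never
            # cut short by a too-small read size.
            data, _addr = sock.recvfrom(65535)
        except socket.timeout:
            idle += 1
            continue
        received.append(data)
    return received
```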

I've attached an example that shows the technique I've used successfully. (LV 6.1)

-

My concern is that the results show that TCP is about 5 times faster than UDP transfers! Which is only about half the speed of DLL transfers (i.e. DLL = 0.1ms per transfer, TCP = .2ms per transfer, UDP = 1.0ms per transfer)

Is this reasonable? Any ideas why these results contradict general expectations? Any ideas would be appreciated.

- Philip

The performance difference may be related to how you are sending the packets. If you look at the UDP examples, the UDP Sender has a Broadcast/Remote Host Only boolean. Setting the Address to 0xFFFFFFFF (Boolean true; Broadcast) will force the packets to traverse the hardware onto the wire. Specifying a hostname of localhost will resolve to 127.0.0.1 and the OS will loop the data back before the physical interface.

TCP always includes a source and destination address, so the TCP packets are likely looped back before the physical interface (in the OS). You should really try to do these tests with two computers and distinct IP addresses.

Look at the TX/RX LEDs on your Ethernet controller, and listen for people complaining that the network is slow when you're performing UDP tests. I managed to knock some people out of their database while I was testing my UDP implementation.

If you're setting the UDP packet max size to 64, this could also be a problem. Leave this input unwired. From the online help: "max size is the maximum number of bytes to read. The default is 548. Windows If you wire a value other than 548 to this input, Windows might return an error because the function cannot read fewer bytes than are in a packet."

UDP datagrams have a header that indicates the data size. If the data received by the OS does not match this, the packet is invalid and will never be passed to LabVIEW.

Place the UDP Read function in a tight while loop and pass the output to a queue. As soon as the data is stuffed into the queue, the while loop will try to retrieve the next message. The OS can buffer received UDP messages. As an example, try setting the UDP Sender example VI to 1000 messages and change the diagram's wait to 1 ms. Change the UDP Receiver example VI's wait to 10 ms on the block diagram. Run the receiver, then the sender.

Note! Don't open and close the UDP Socket between reads or you will thrash the OS! Open the socket, place the handle ID in a shift register, and then close the handle outside the while loop. To avoid memory hogging, set an upper limit for the number of elements in the queue based on your expected receive rate and the interval that you intend to process the data.
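A rough Python equivalent of this producer/consumer arrangement uses a reader thread, one socket that stays open, and a bounded `queue.Queue`. This is a sketch under stated assumptions: `start_udp_reader` is a hypothetical helper, and when the queue is full it drops the newest datagram instead of blocking. LabVIEW's Enqueue Element on a full queue waits instead, so choose whichever behavior suits your processing interval.

```python
import queue
import socket
import threading


def start_udp_reader(sock, out_queue, stop_event, timeout_s=0.1):
    """Producer: read datagrams in a tight loop and hand them to a bounded queue.

    The caller opens the socket once and closes it after the reader stops;
    it is never reopened between reads.
    """
    sock.settimeout(timeout_s)

    def run():
        while not stop_event.is_set():
            try:
                data, _addr = sock.recvfrom(65535)
            except socket.timeout:
                continue  # no data yet; check the stop flag and read again
            try:
                out_queue.put_nowait(data)
            except queue.Full:
                # Consumer has fallen behind; drop rather than grow without bound.
                pass

    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread
```

The consumer simply calls `out_queue.get()` at whatever interval you process data, and `maxsize` on the queue is the upper limit mentioned above.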

I've successfully read UDP messages twice your size at a 400 µs rate. The data included a U32 counter to monitor for dropped messages. On a dedicated segment, I never experienced a missed UDP message.
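Monitoring for drops with a U32 counter comes down to looking for gaps in the sequence. Here is a minimal Python sketch. It assumes the counter is the first four bytes of each payload in big-endian order (LabVIEW's default byte order when flattening data), that the sender increments it by one per datagram, and that it wraps from 2^32 - 1 to 0. Out-of-order arrival is not handled, and `count_dropped` is a hypothetical name.

```python
import struct


def count_dropped(payloads):
    """Count missing datagrams from a big-endian U32 counter at offset 0.

    Duplicates (a gap of 0) are ignored; wraparound is handled with
    modulo 2**32 arithmetic.
    """
    dropped = 0
    previous = None
    for payload in payloads:
        (counter,) = struct.unpack_from(">I", payload, 0)
        if previous is not None:
            gap = (counter - previous) % 2**32
            if gap > 1:
                dropped += gap - 1
        previous = counter
    return dropped
```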

-

From INFO-LabVIEW

Could you insert Excel sheets using OLE into the Word document? It might be easier to use named cells to retrieve the values, and if the limits are based on calculations, then the updating of the Word document could be "automatic".

Using Excel could separate the data from the presentation and still meet your requirements for Word.

For simple example Excel and Word files, see http://forums.lavausergroup.org/index.php?showtopic=2533

NOTE: Someone posted an Info-LabVIEW reference to an ActiveX example of Read from Excel. I've edited this VI and combined it with the example Excel and Word documents. The attachment has been updated...

-----Original Message-----

From: Info-LabVIEW@labview.nhmfl.gov [mailto:Info-LabVIEW@labview.nhmfl.gov] On Behalf Of Seifert, George

Sent: Wednesday, January 18, 2006 8:47 AM

To: Info-LabVIEW@labview.nhmfl.gov

Subject: RE: Linking to specs in a Word doc

The reason we're using a Word doc is because that is how all of our top level docs are stored. The docs have to be approved and archived. The procedure is pretty well cast in stone. So I thought rather than copy by hand or copy and paste from the Word doc I would create a routine to either pull the specs from the doc at runtime or pull the specs and stuff them in an ini file. Most likely I will do the latter whenever updates are made to the Word doc. We have 20 or so test stations so I need to make sure they all have the same spec limits to work with. Updating them by hand isn't a good option.

What I think I'll do is require the first row in the table be an easily identifiable marker. From there it's easy to grab the contents of each table and if the marker matches what I'm looking for then I can use the contents of the table.
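The marker-row approach, plus the "stuff them in an INI file" step, might look like this in Python. This is a hypothetical sketch: it assumes the Word tables have already been extracted as lists of rows of cell strings (a library such as python-docx can provide cell text), and that each row under the marker holds a spec name, low limit and high limit. The marker text, section name and key naming are made up for illustration.

```python
import configparser
import io


def find_table(tables, marker):
    """Return the first table whose first-row first cell equals `marker`.

    Each table is a list of rows; each row is a list of cell strings.
    """
    for table in tables:
        if table and table[0] and table[0][0].strip() == marker:
            return table
    return None


def table_to_ini(table, section="Limits"):
    """Turn (name, low, high) rows below the marker row into INI text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep spec names' case as written
    parser[section] = {}
    for name, low, high in (row[:3] for row in table[1:]):
        parser[section][f"{name.strip()}.low"] = low.strip()
        parser[section][f"{name.strip()}.high"] = high.strip()
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()
```

Generating the INI file once per approved revision of the Word doc, then copying it to every test station, keeps all stations on the same limits.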

-

We were about to integrate I/O over parallel port, but it seems that the latest pc's doesn't have onboard parallel ports anymore.

Since we would have it as easy as possible for the end-user to install (also for laptops), we were thinking at an 'USB parallel printer-adapter" and bought one from Belkin.

It works fine for connecting a printer, but I wasn't able to detect it from within LV.

Does anyone have a VI which is capable to read and set I/O lines on a USB printer adapter?

Greetz,

Bart

Why not consider one of the lower-cost USB I/O devices on the market? I know the USB-to-parallel-port adapters are only ~$30, but there are some nice USB DAQ devices with 8 or more lines of digital I/O for $100 or less that include A/D, D/A and LabVIEW drivers.

I'd look at the LabJack, the EMANT300 or the Hytek iUSBDAQ products.

-

Puzzled though why the others in the project can not

run the 7.1.1 patch? Wouldn't that be more stable/robust

than using 7.1??

Oskar

Mostly an administrative issue. I was the one operating 'outside of the box' and I guess I'll have to revert.

Good thing I had a backup of user.lib and instr.lib before the mass compile! :thumbup:

-

I recently installed the LabVIEW Version 7.1.1 for Windows 2000/NT/XP -- Patch and have been experimenting with dotNET 2.0.

I first installed the 7.1.1 update, mass-compiled, then installed the 'f2' patch. These installs replaced the original files in my 7.1 installation directory. Everything works fine.

Now....

I'm about to start work on a project with others using LV 7.1 from the original CDs installed.

Does the 7.1.1 version change the VI versions? Would my VIs created in 7.1.1 be compatible with 7.1, or will I need to revert? It's not a problem to revert; I'd just like to save myself the trouble...

-

The online CLAD example test is a very good approximation of the actual test.

I've started looking into the CLD test, and noticed a restriction related to your question in the document "Certified LabVIEW Developer Requirements and Conditions".

Confidentiality of Examination Materials: All certification examination materials are National Instruments Confidential. You agree to not disclose either the content or intent of examination items regardless of your certification status.

NI does offer a CLD test prep course online; I'm trying to decide when to take it. I was told at the NI Symposium last fall that anyone who completed the CLAD test before the end of the year would qualify to take this prep course free of charge.

Good luck with the test! :thumbup:

-

Types of people

Those who overuse control references would be control nuts.

Those who use excessive global and local references would be schizophrenic.

Those who place clusters in clusters in clusters would be organizational freaks.

Those who place wires with no more than two vertical and two horizontal legs would be anal retentive.

Those who use LLBs would be just plain crazy.

Did I leave anything out :question:

send email and sms...help

in Remote Control, Monitoring and the Internet
