rrojas


Posts posted by rrojas

-

-

My apologies if this has already been addressed, but I couldn't find it via the search function.

I have a startup-screen VI that calls three different executables (VIs) depending on which button is pressed. My question is whether the executable VIs can be configured to accept a number of passed-in arguments when they're called as executables.

Does something have to be done when creating a standalone application? Do the VIs have to be converted to sub-VIs with input nodes assigned, and those input nodes are then the passed in arguments to the executable form?

Thanks in advance for any help.
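As a text-language analogy for what is being asked here, the following is a minimal Python sketch, not LabVIEW code: a launcher builds a command line with extra arguments, and the launched program reads them back at startup. The executable name `acquire.exe` and the `name=value` argument convention are hypothetical. In LabVIEW the counterpart is, as far as I know, the application's Command Line Arguments property combined with the build option that passes command-line arguments to the application, but that should be checked against the documentation for the version in use.

```python
def build_command(exe_path, args):
    """Return the argument list a launcher would hand to the OS."""
    return [exe_path] + [str(a) for a in args]


def parse_startup_args(argv):
    """Collect 'name=value' tokens (everything after argv[0]) into a dict."""
    settings = {}
    for token in argv[1:]:
        name, sep, value = token.partition("=")
        if sep:  # ignore tokens that are not name=value pairs
            settings[name] = value
    return settings


if __name__ == "__main__":
    # Hypothetical executable name and arguments, for illustration only.
    cmd = build_command("acquire.exe", ["port=COM3", "baud=19200"])
    print(cmd)
    # The launched program would see the same list as its own argv.
    print(parse_startup_args(cmd))
```

The point of the sketch is only that the arguments travel on the command line, so the called program does not need connector-pane inputs to receive them.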

-

All,

I have a couple of VIs that I have converted into executables. These executables I am attempting to call from another VI using the System Exec.vi. Unfortunately, the calls work only some of the time on my XP machine.

I get the following error (Error code 2):

Possible reason(s):

LabVIEW: Memory is full.

---

NI-488: Write detected no listeners.

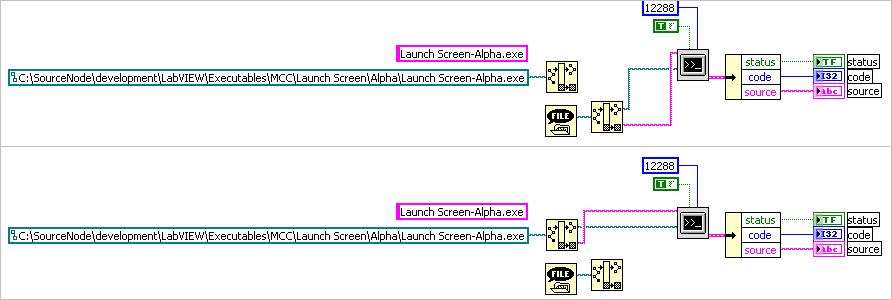

Moreover, here's a twist. I can place a File Dialog VI on the block diagram and feed the path it produces after I select my executable into the System Exec.vi and it seems to always work. Below I have an example of the two setups I use (the top setup, the File Dialog created path, always works; the bottom setup, constant path, sometimes works):

So I'm left wondering, is there something about the path that I'm feeding System Exec that may cause it some problems?? I really have no idea and would appreciate any help. :headbang:

Thanks.

-

There are 2 things you can do to solve this problem

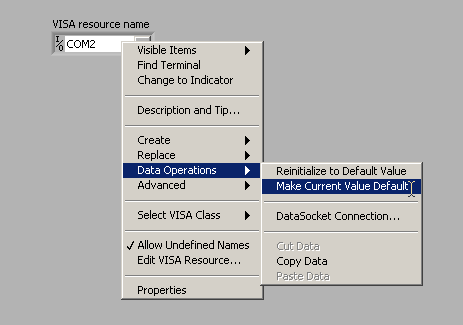

The first is to go to the control on the front panel, set the value you want, and make it the default value.

However, I would recommend that you connect this control to an input terminal and wire a constant to it in the calling VI. This method makes the code easier to read and easily allows the COM channel to be changed later.
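The two options can be pictured with a small Python analogy (a sketch only; the function name `configure_port` is hypothetical and stands in for the sub-VI): a default value baked into the callee versus a value supplied explicitly by the caller.

```python
def configure_port(port="COM1"):
    """Stand-in for a sub-VI: returns the resource name it would open."""
    return port


# Option 1: rely on the default stored in the callee.
first = configure_port()

# Option 2: the caller wires in a constant, visible at the call site.
second = configure_port(port="COM3")

if __name__ == "__main__":
    print(first, second)
```

With the second form, the port choice is readable where the call is made rather than hidden inside the callee.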

Yes, I was considering adding a control to an input terminal of the sub-VI, but there are strict control restrictions on this app and there would be too many complications if a user could change the COM port on the fly: essentially it would be bad to do so, in this case.

However, the default value is my fix. Thanks! :thumbup:

-

All,

I have this odd problem. I'm writing a VI that is supposed to accept input from two separate serial ports. I am using the VISA Configure Serial Port VI in two different sub-VIs. One sub-VI should output a VISA resource name for COM1, while the other sub-VI should output a VISA resource name for COM3.

For some reason, though, whenever I start up my main front panel, both of the VISA resource names are set to COM1. I have to stop the VI, go into my second COM sub-VI and type in "COM3" for the VISA resource name input to the VISA Configure Serial Port VI, save it, and then run my app again. If I close it and reopen it and run it again, the same thing happens. My VISA Resource names always revert to "COM1".

Why is this and what can I do to make one sub-VI "take" the "COM3" setting that I specify and use it without me having to stop the execution and changing it manually?

Thanks for your time.

-

Hi Rrojas:

As I said in the comment on the front panel, I translated this routine from a C routine written for the equipment I was talking to and ---Mea Culpa---

The rest of the story is that when I made the translation I understood the algorithm only partially, and documented it even less. (The routine was a small part of the project, it works, so I moved on to other issues which were more troublesome.)

As I understand it, the lookup table is equivalent to some of the calculations which appear in the classical methods, but takes less time. There was a time when that was an issue, and I suppose if you are running a really fast comm. channel, it might still be an issue. I seem to recall that the reference my colleague got the routine from described how to change the lookup table to match different types of CRCs. I'll see if I have a copy of the reference anywhere; it might be Monday before I get a chance to look. If I can't find the reference, I'm sure the colleague who wrote the C code has a copy, and I've been meaning to get in touch with him anyhow, so I'll check with him if need be.

Just goes to show, there's no such thing as a self documenting programming language, is there?

Best regards, Lou
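To illustrate the point about the lookup table being equivalent to the classical bit-by-bit calculation, here is a minimal Python sketch. It is not a translation of the routine discussed here: the polynomial (0xA001, the bit-reversed form of x^16 + x^15 + x^2 + 1), the zero initial value, and the least-significant-bit-first processing are all assumptions chosen for illustration.

```python
POLY_REFLECTED = 0xA001  # assumed: bit-reversed 0x8005 (x^16 + x^15 + x^2 + 1)


def make_table(poly):
    """Precompute the CRC of every possible byte value (256 entries)."""
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            crc = (crc >> 1) ^ poly if crc & 1 else crc >> 1
        table.append(crc)
    return table


def crc16_table(data, table, init=0x0000):
    """Table-driven CRC: one lookup per byte instead of eight shift steps."""
    crc = init
    for b in data:
        crc = (crc >> 8) ^ table[(crc ^ b) & 0xFF]
    return crc


def crc16_bitwise(data, poly, init=0x0000):
    """Classical method: shift and conditionally XOR, one bit at a time."""
    crc = init
    for b in data:
        crc ^= b
        for _ in range(8):
            crc = (crc >> 1) ^ poly if crc & 1 else crc >> 1
    return crc


if __name__ == "__main__":
    table = make_table(POLY_REFLECTED)
    message = b"123456789"
    print(hex(crc16_table(message, table)))
    print(crc16_table(message, table) == crc16_bitwise(message, POLY_REFLECTED))
```

Changing the CRC type amounts to calling `make_table` with a different polynomial (and adjusting the initial value or bit order), which is consistent with the remark that the table can be changed to match different CRCs.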

Much thanks. Yeah, the problem I was having was trying to match the CRC the VI was giving to a CRC generator written in C by a co-worker. There were complications stemming from the fact that his algorithm was assuming 16-bit words as opposed to the VI's byte-based cycles. Plus, he was running his code on a big-endian processor whereas I was running his code on my PC (little-endian) so there was byte-order switching going on that the VI didn't have to deal with.

I finally got the VI to output the same CRC the C code was giving after figuring out what the VI was doing.
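The byte-order complication can be shown with a short Python sketch (illustrative values only; the sample words are made up). The same list of 16-bit words serializes to different byte sequences on big-endian and little-endian machines, so a byte-based CRC sees different input unless the bytes are swapped first.

```python
import struct


def words_to_bytes(words, byte_order):
    """Serialize 16-bit words as 'big' or 'little' endian bytes."""
    prefix = ">" if byte_order == "big" else "<"
    return struct.pack(prefix + "H" * len(words), *words)


def swap16(word):
    """Swap the two bytes of a 16-bit word."""
    return ((word & 0xFF) << 8) | (word >> 8)


if __name__ == "__main__":
    words = [0x1234, 0xABCD]  # made-up sample data
    print(words_to_bytes(words, "big").hex())
    print(words_to_bytes(words, "little").hex())
    # Swapping each word first makes the little-endian stream match.
    swapped = [swap16(w) for w in words]
    print(words_to_bytes(swapped, "little").hex())
```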

Thanks for all the help and example VIs, though. Very useful.

:thumbup:

:thumbup: -

Here's yet another CRC routine. Seems to give different outputs from the ones Michael found. But it might be of some use.

I extracted it from a more complex set of comm. vi's that include byte stuffing for eight bit data transmission, multiport near-simultaneous message transmission, & a variety of other features unrelated to the question at hand.

Best Regards & good luck, Louis

Could you describe what's going on in the block diagram for me?

-

Thanks a lot, guys. I will check these VIs out!

-

That's what I was afraid of. I appreciate the reply.

-

All,

I was wondering if an attempt had been made to make a CRC VI? It's looking like I've got to write a CRC algorithm but was hoping a VI already existed. I realize the algorithm will depend on the polynomial, so it may be too much to hope for an all-around general CRC.

Anyway, for what it's worth, the polynomial I'm working with is:

x^16 + x^15 + x^2 + 1

Thanks in advance for any help or direction you may offer.
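For reference, a bit-by-bit CRC for this polynomial can be sketched in a few lines of Python. The polynomial x^16 + x^15 + x^2 + 1 corresponds to 0x8005 (the x^16 term is implicit). The initial value of zero, the most-significant-bit-first processing, and the absence of a final XOR are assumptions, since the post does not specify them; other CRC-16 variants with the same polynomial differ in exactly those settings.

```python
POLY = 0x8005  # x^16 + x^15 + x^2 + 1, with the x^16 term implicit


def crc16_msb_first(data, poly=POLY, init=0x0000):
    """Bitwise CRC-16, MSB first, no reflection, no final XOR (assumed)."""
    crc = init
    for byte in data:
        crc ^= byte << 8  # bring the next byte into the top of the register
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ poly) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


if __name__ == "__main__":
    print(hex(crc16_msb_first(b"123456789")))
```

If the result does not match the other end of the link, the usual suspects are the initial value, the bit order, and the byte order of the transmitted CRC rather than the polynomial itself.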

-

1. At which speed do you transmit data over the serial port?

Sending one byte over the serial port at 9600 baud takes about 1 ms (10 bits per byte, counting the start and stop bits); at 57600 baud it takes about 0.17 ms.

Make sure your serial port runs at 57600 or 115200 baud, or higher.

2. To find the process that consumes the most time, either use the VI profiler (menu: Tools>>Advanced>>Profile VIs...) or place Tick Count functions in your block diagram (e.g. inside sequence structures, so that each Tick Count is read at the desired time) and subtract the values of the different Tick Counts.

The VI profiler reports times per VI; with Tick Count you can "profile" parts of a VI.
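The Tick Count approach in point 2 is the same idea as reading a clock before and after a section of code and subtracting. A minimal Python sketch of that pattern follows; the helper name `timed` and the sample workload are hypothetical.

```python
import time


def timed(func, *args):
    """Run func(*args) and return (result, elapsed milliseconds)."""
    start = time.perf_counter()          # first "tick count"
    result = func(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000.0  # second minus first
    return result, elapsed_ms


if __name__ == "__main__":
    # Hypothetical workload standing in for one section of the diagram.
    total, ms = timed(sum, range(100000))
    print(total, round(ms, 3), "ms")
```

Wrapping each suspect section separately shows which one accounts for the delay.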

Didier

Well, I'm transmitting at 19200 baud right now, but I am putting time delays in the loop that reads from the serial port, so shouldn't that give up the CPU and allow the loop that writes to the serial port to run?

Are there any other mechanisms I can use to set my writing loop to a higher priority than my read loop - other than timed loops?
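For reference, the time on the wire for one byte at a given baud rate is simple arithmetic. The sketch below assumes 8N1 framing (1 start bit, 8 data bits, 1 stop bit, so 10 bits per byte); other framings change the bit count.

```python
def byte_time_ms(baud, bits_per_frame=10):
    """Milliseconds needed to send one byte, assuming 8N1 framing."""
    return bits_per_frame / baud * 1000.0


if __name__ == "__main__":
    for baud in (9600, 19200, 57600, 115200):
        print(baud, round(byte_time_ms(baud), 3), "ms per byte")
```

At 19200 baud this comes to roughly half a millisecond per byte, so a short packet spends only a few milliseconds on the wire.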

-

All,

In my VI I have two main loops. One loop writes to the serial port and one loop reads from it.

I am experiencing lag when I press a button to have a message written to the serial port. On the other end I have another computer that is running an emulator that responds to the messages it gets and sends out an appropriate response.

The read loop in my VI receives the responses fine and updates the front panel quickly. It's just the write loop that takes its danged time to write.

A little more explanation:

The write loop is a loop with a case statement inside. This case statement reflects different states. One state is a "user listen" state which waits for a user to hit a button on the FP. Once the event structure captures a particular button press, it goes on to unbundle a bundle of possible messages in order to select the right message to send. Once it finds the correct message to send, it creates the packet, wrapping a sync word and a checksum before and after it, respectively. Then it shoots it out to the port.
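The packet-building step described above can be sketched in Python as follows. The sync word value, the message table, and the checksum algorithm (sum of payload bytes modulo 256) are all hypothetical; the post does not say what the real ones are.

```python
SYNC_WORD = b"\xAA\x55"  # hypothetical sync word

# Hypothetical message table; the dict lookup stands in for the unbundle step.
MESSAGES = {"start": b"\x01\x02\x03", "stop": b"\x04"}


def checksum8(payload):
    """Assumed checksum: sum of the payload bytes, modulo 256."""
    return sum(payload) & 0xFF


def build_packet(payload, sync=SYNC_WORD):
    """Wrap the payload: sync word in front, checksum byte behind."""
    return sync + payload + bytes([checksum8(payload)])


if __name__ == "__main__":
    packet = build_packet(MESSAGES["start"])
    print(packet.hex())
```

Selecting a message and wrapping it this way is a handful of byte operations, which suggests that the selection and framing steps by themselves are cheap.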

Is the unbundling taking a lot of time? I would include images of my VIs except I really have no idea what is causing this lag in writing to the serial port. :headbang:

I have included waits in the read loop hoping that this will prompt it to release the processor and allow another process (i.e., the write loop) to have the processor, but it doesn't seem to make a difference.

Any help at all as to how I can make the writes to the port faster would be appreciated. If you guys need further explanation, please feel free to ask and I will provide.

Thanks.

-

I think you're making things much toooooo complicated.

To modify the content of a control in a sub-vi, just create a reference from the control and feed it to the sub-vi. In the sub vi, use this ref to modify the value/property.

In your example add a bundle before the sub-vi, feed the cluster to the sub-vi, and in the sub-vi unbundle your cluster.

Didier
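As a rough analogy in Python (a sketch only; Python object references are not LabVIEW control refnums, and the `Control` class and names below are hypothetical), the suggestion amounts to bundling the references themselves, handing the bundle to the subroutine, and letting it pick one out by name and write through it.

```python
from dataclasses import dataclass


@dataclass
class Control:
    """Hypothetical stand-in for a front-panel control."""
    label: str
    value: float = 0.0


def set_value(refs, name, new_value):
    """Stand-in for the sub-VI: unbundle by name, then write the value."""
    refs[name].value = new_value


gain = Control("Gain")
offset = Control("Offset")

# "Bundle" the references themselves, not copies of the controls' values.
refs = {"Gain": gain, "Offset": offset}
set_value(refs, "Gain", 2.5)

if __name__ == "__main__":
    print(gain.value, offset.value)
```

The bundle carries the references directly, so there is no second level of indirection (a reference to a container of references) to resolve inside the subroutine.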

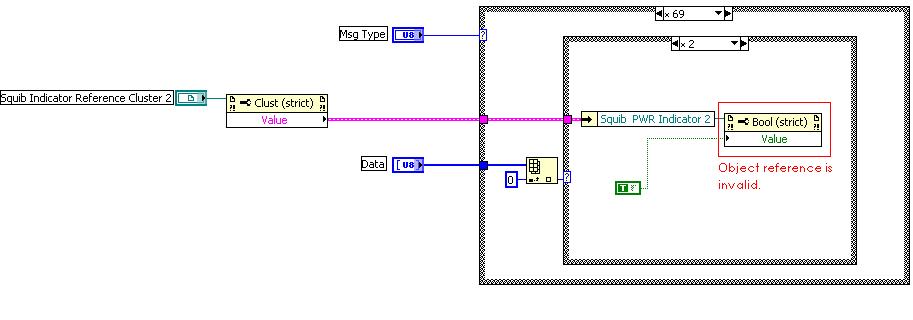

I tried that, but I still get an invalid object reference when I unbundle-by-name and feed the reference into the property node.

But just to make sure you and I aren't thinking about different things, what exactly is entailed in making a cluster out of these references? In my block diagram when I created a reference for each of the controls, I was unable to wrap a cluster structure around them. I had to create controls for each reference, which then appeared on the FP, and then I was able to jam these controls into a cluster.

Is there a way I can directly stick the references into a cluster on the block diagram?

-

I have thirty-eight (38) controls on my front panel that I wish to be able to access, and change particular values of, in a sub-VI.

What I am doing is creating references for each of the controls on my main VI. Then I am creating controls for each reference and putting each of these reference controls into a cluster.

Then I created a reference to that cluster of reference controls and fed that reference into my sub-VI. I then used a property node to extract the "value" of this reference, which is a cluster. Then I use Unbundle by Name in order to select a particular control reference. I feed this reference into a new value property node to attempt to change its value, but at this point LabVIEW tells me that the object reference is invalid.

Am I going about this the right way or is there a better way to do it?

The image of my sub-VI and the place where I get an error message is attached.

Thanks in advance.

Passing Arguments To Executable VI

in Application Builder, Installers and code distribution

Posted