Posts posted by Tom Limerkens

-

Bujjin,

I have never worked with the protocol, but the following site has some

interesting documents and whitepapers.

http://en.wikipedia.org/wiki/DNP3

Tom

-

Paul,

I would not rely solely on the backup capabilities of your server.

We have a setup similar to yours, and our SVN backup strategy is as follows:

1. Every project has its own repository in the Visual SVN server

2. Every night, an automatic script runs the hotcopy command on every single repository, and then zips each hotcopy

3. The zipped hotcopy is stored on the backup tape

This way we have a stable backup of the repository, and can restore it to any SVN server without a problem.
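
The nightly job described in the steps above could look roughly like the sketch below. It is not our actual script: the folder layout (one sub-folder per repository under a common root), the function names and the `run` parameter are assumptions made for illustration. Only the `svnadmin hotcopy REPOS_PATH NEW_REPOS_PATH` call itself is standard Subversion.

```python
import shutil
import subprocess
from pathlib import Path


def hotcopy_command(repo: Path, dest: Path) -> list:
    """Build the `svnadmin hotcopy` call for one repository."""
    return ["svnadmin", "hotcopy", str(repo), str(dest)]


def backup_repositories(repo_root: Path, backup_dir: Path, run=subprocess.run) -> list:
    """Hotcopy every repository under repo_root, zip it, and return the zip paths.

    Assumes every sub-folder of repo_root is one repository (hypothetical layout).
    """
    backup_dir.mkdir(parents=True, exist_ok=True)
    archives = []
    for repo in sorted(p for p in repo_root.iterdir() if p.is_dir()):
        dest = backup_dir / repo.name
        if dest.exists():
            shutil.rmtree(dest)  # start every night from a clean destination
        run(hotcopy_command(repo, dest), check=True)
        # Creates <backup_dir>/<repo name>.zip next to the hotcopy folder.
        archive = shutil.make_archive(str(dest), "zip", root_dir=str(dest))
        shutil.rmtree(dest)  # keep only the zip for the tape job
        archives.append(Path(archive))
    return archives
```

Scheduling it every night and writing the zip files to tape is left to the scheduler and the backup software.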

We are now looking for an additional offsite backup with SyncSVN, but have not found a reliable solution yet.

General tip: backup is there to recover from disaster and HW failure. Count it in your procedures, test those procedures,

and validate them at regular intervals, because HW tends to become obsolete (tapes, drives, ...)

Kind Regards,

Tom

ESI-CIT Group

-

Do you happen to know if the DB15HD cables are straight-through wires? (i.e. pins 4 and 8 aren't connected) Do you think that would just depend on the brand? I ended up making this cable...

Speed doesn't seem to be affected. I only made our cable about 18 inches or so.

I would be very careful about using a VGA extension cable. As you mention, not all pins are connected,

and we have had some issues when using VGA cables with non-VGA devices (SICK barcode scanners).

There are companies that offer straight-through DB15HD cables.

I would go for such an option, or make them myself.

Success,

Tom

Edit: Typo

-

Just my 2 cents, if it needs to be affordable...

On the cRIO Backplane side, you could go for a NI 9977 module (about 30 USD/piece), and

alter it, so you can use it as a 'backplane connector'.

On the module side, couldn't you replace the fixing screws there with some type of

standard 'screw-nuts' like the ones used in DB9 extension cables?

The protocol on the DB15HD connector lines is SPI, but I have no idea about the speed. If you don't

extend it too far, I wouldn't expect a real problem...

Tom

-

I definitely qualify as a lurker, I guess.

Having been a member for a couple of years, I mostly check out the

advanced forums to see what can be done with LV,

and use the search when I run into problems myself.

I leave the posting to my Dutch colleague Rolf.

Great to see some of them presenting live here at NI-Week

-

Assuming you work in Windows and want to map/unmap network drives dynamically,

it can be done quite easily

through the command prompt using the 'net use' command. Type 'net use /?' for the full syntax.

Here are some examples:

Example to connect: net use T: \\companyserver\measurementdatashare mypassword /USER:mydomain\myaccount

Example to disconnect the drive mapping above: net use T: /DELETE
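
If you want to drive this from a script rather than typing it, a minimal sketch could look like the one below. The function names are made up for illustration, and the server, share and account names are the same placeholders as in the examples. Note that the drive letter needs its colon and that the delete switch uses a forward slash.

```python
import subprocess


def map_command(drive, share, user=None, password=None):
    """Build: net use <drive>: <share> [password] [/USER:<user>]"""
    cmd = ["net", "use", f"{drive}:", share]
    if password is not None:
        cmd.append(password)
    if user is not None:
        cmd.append(f"/USER:{user}")
    return cmd


def unmap_command(drive):
    """Build: net use <drive>: /DELETE"""
    return ["net", "use", f"{drive}:", "/DELETE"]


def run_net_use(cmd):
    """Run the command (Windows only); raises CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)
```

For example, `run_net_use(map_command("T", r"\\companyserver\measurementdatashare", user=r"mydomain\myaccount", password="mypassword"))` maps the drive, and `run_net_use(unmap_command("T"))` removes it again.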

Success,

Tom

-

Hi Shane,

If I were you, I would check out the following articles on RAW USB communication using VISA.

They cover the very basics of how to communicate with a USB device, as well as the different

transfer types: Interrupt, Bulk, etc...

http://zone.ni.com/devzone/cda/tut/p/id/4478

http://digital.ni.com/public.nsf/allkb/E3A...6256DB7005C65C9

http://digital.ni.com/public.nsf/allkb/1AD...6256ED20080AA3C

http://zone.ni.com/devzone/cda/epd/p/id/3622

If you have control over, or knowledge of, the USB device's code, that will make it easier.

Hope this helps.

Tom

-

David,

If it is an option, you can try setting a fixed IP on your Windows PC. That disables DHCP.

Tom

-

When building an application, remember to enable 'Pass all commandline arguments to application'

in the 'Application Settings' Tab of the application builder.

Otherwise no arguments will be accessible in the EXE.

Tom

-

Hi Matt,

Now that is an interesting finding.

Were there special strings, patterns or characters in the descriptors ?

If you can share them with us, maybe we can avoid such strings in the

future. Or at least know when the problems arise.

Tom

-

Just an idea,

Maybe you can combine the LVOOP with XControls, so you can put your 'per valve/gauge'

user interaction intelligence in the XControl,

and keep the data in LVOOP objects.

I don't have much experience with LVOOP yet, but I'm sure there are some wireworkers

who can point you in the right direction

Tom

-

Hi,

It seems you can do it with the MS ADO ActiveX component.

Procedure is described at http://support.microsoft.com/kb/230501

Success,

Tom

-

Matt,

Just one more thought: could it be that the WDM development installed a different USB stack that VISA is not compatible with?

Did you try to install just the VISA runtime on a non-contaminated PC, and then install VISA as a driver for your device, using the INF file created

on the development PC?

Success,

Tom

-

The effect we had was that, when a bulk transfer was started on a USB device,

a single frame of information was sent, and then the USB host closed

the connection.

We could not figure out exactly what the problem was, because when we

used another (open-source) USB raw communication framework, it worked fine.

A colleague pointed me to the VISA changes listed on the NI site,

and in VISA 3.4, a lot of USB stuff was changed:

NI-VISA 3.4 Improvements and Bug Fixes

For USB RAW sessions, communication with non-zero control endpoints is now supported with the use of VI_ATTR_USB_CTRL_PIPE.

For USB RAW sessions, the default setting for VI_ATTR_USB_END_IN has been changed from VI_USB_END_SHORT to VI_USB_END_SHORT_OR_COUNT.

For USB RAW sessions, viReadAsync would fail if the transfer size was over 8KB and not a multiple of the maximum packet size of the endpoint. This is fixed.

For USB RAW sessions, changing the USB Alternate Setting on a USB Interface number other than 0 would fail. This is fixed.

For USB RAW sessions, viOpen leaked a handle for each invocation. This is fixed.

I don't know too much of the USB protocol itself.

We found out about the problem using the USB monitor.

I assume you are using one as well.

If you can find out at which part in the

protocol something goes wrong, it will make it easier to track down.

Maybe the 3.1 version was more tolerant ...

Tom

-

Hej Bjarne,

I don't know exactly if it is a solution for your problem, but a client

of ours is using MatrikonOPC Tunneler to avoid DCOM Security configuration

nightmares between the server and the clients.

Maybe it could also solve your client/server incompatibilities

if you just use it as a proxy?

Tom

-

Strange,

I had just the opposite problem, an HID raw (composite) device didn't work in VISA before 3.4.

Apparently the VISA USB implementation underwent a thorough revision in version 3.4,

and things worked as expected after this change.

Tom

-

Check the examples supplied with LabVIEW :

Help >> Find Examples >> Hardware Input and Output >> Serial

Start from there.

Tom

-

I don't use Chinese myself,

but I made one LV application that had the option to switch GUI texts (dynamically at runtime) from English to Dutch or Chinese.

Tom

-

The best approach is to keep the file open

and just read and parse it line by line.

When you enable the 'line mode' in the read file, it reads until the EOL character.

This way the reading goes a lot faster, because the OS doesn't need to close/open the file

and search for the line position for every line read.

Combine this with the Scan From String from the posts above, and your parsing will go a lot faster.
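
The same idea in text form, as a rough sketch only (the attached VI is LabVIEW, this is not a translation of it). It assumes each line holds whitespace-separated numbers, which may not match your file. The function name is made up.

```python
def parse_lines(path):
    """Yield one list of floats per non-empty line, reading the file in a single pass."""
    with open(path, "r") as handle:  # the file is opened once and closed once
        for line in handle:          # each iteration reads up to the next end-of-line
            fields = line.split()
            if fields:               # skip empty lines
                yield [float(field) for field in fields]
```

The point is that the open/close and the seek to the line position happen once for the whole file, not once per line.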

Tom

(In attachment the changed 'Reader')

-

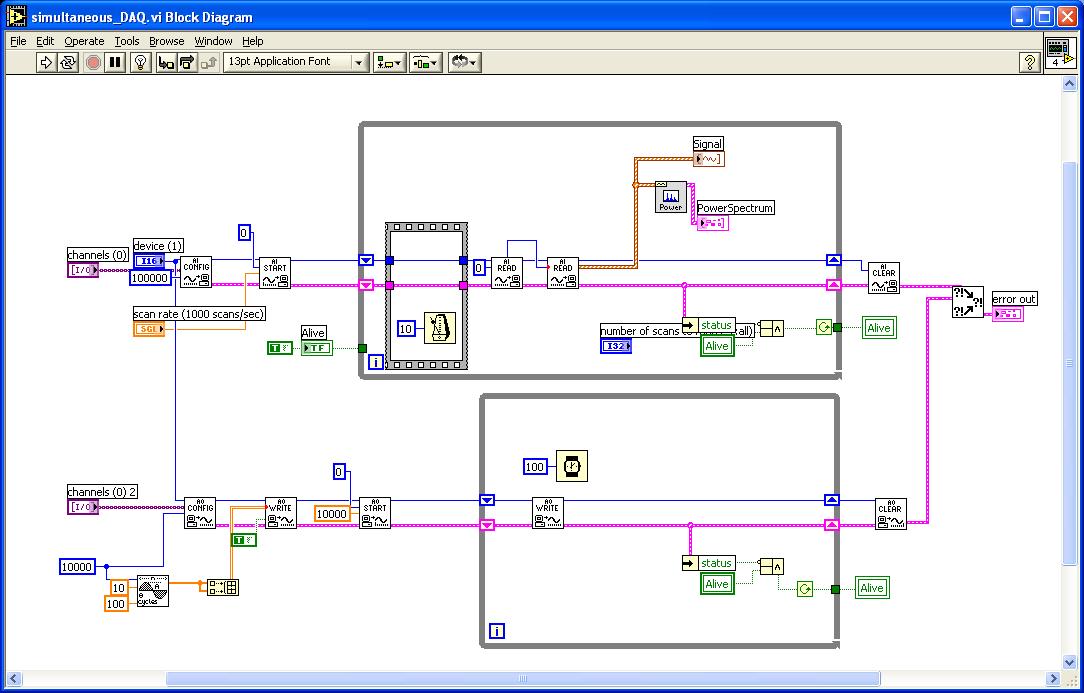

Hi Raz,

I noticed a few things about your code, first of all, the 'allow regeneration' bit is not set in the Write AO buffer (First call)?

Is this intentional ?

I quickly wrote a VI where DAQ input and DAQ output on two channels is done,

but please note, that in this example there is no synchronisation between input and output !

If that is what you are looking for, you have to look further.

This example worked here on my PC, although not with a 6036E but with a PCI-6110 board.

Hope it can help you find the right solution.

Success,

Tom

-

Do you keep your TCP connection to the printers open?

Or is it closed each time something is sent to the Printer?

I once had the same kind of problem when all the TCP ports

at the server side were in the 'CLOSE_WAIT' state.

This happened because the server application communicated with

a slave every 1.5 seconds, and opened and closed the TCP connection

each time. Because I did not specify the 'Server side' port, LabVIEW chose the next

free port.

But because the previous port was still in the 'CLOSE_WAIT' state, LabVIEW

allocated a new one, and within a few hours no port was free anymore.

Specifying which port is to be used by the 'Open TCP socket' solved the problem.

I don't know if this could cause error 61, though.

Maybe there has been an increase in the number of print jobs?

Did you ever run netstat when the print server hung?

("netstat -a" in command prompt to view all open socket connections.)
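
To keep an eye on this over a few hours, you could count the connection states in the netstat output with a small script. The sketch below is only an illustration: it assumes the usual column layout where TCP lines start with the protocol and end with the state, and the function names are made up. Localised or otherwise different netstat output may need adjusting.

```python
import subprocess
from collections import Counter


def count_tcp_states(netstat_text):
    """Count TCP connections per state from the text printed by netstat."""
    counts = Counter()
    for line in netstat_text.splitlines():
        fields = line.split()
        # TCP lines look like: proto, local address, foreign address, state
        if len(fields) >= 4 and fields[0].upper().startswith("TCP"):
            counts[fields[-1]] += 1
    return counts


def snapshot():
    """Run netstat once and return the state counts (-n skips name lookups)."""
    result = subprocess.run(["netstat", "-a", "-n"],
                            capture_output=True, text=True, check=True)
    return count_tcp_states(result.stdout)
```

If the 'CLOSE_WAIT' count keeps growing between snapshots, you are probably looking at the same port exhaustion I described.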

Another interesting tool to analyse network traffic : packetyzer.

Success, Tom

-

Hopefully this is the right category for this question..

Has anyone used LV for receiving streaming video?

The video stream is typically .wmv or .asf.

I was thinking of using the IMAQ toolset to develop a webtv receiver which would have PVR functionality.

PVR = Personal Video Recorder

I think there was a project done in Linux that provided a means to distribute audio / video over a local network and create a "video jukebox" of some sort.

JLV

Don't know how to get this done in IMAQ, but I think the Linux 'jukebox' you are referring to,

is the MythTV project.

-

I assume that you are measuring current as a DC signal here.

Could it be that the current signal is not so 'constant' as you would expect?

Probably the multimeter is averaging the noise present on your signal.

Check the input signal of the module with an oscilloscope, if you have one present, or if you haven't,

you could switch your multimeter to AC input, and that will give you a first idea of how much 'noise'

there is present on your DC signal.

We use a CompactRIO/RT-FPGA chassis with a cRIO-9215 module to measure current (4 V input eq. 15 kA), and we use a

dedicated algorithm (at 40 kHz sample rate) on the FPGA to get a decent, reliable current signal.

Tom

*Edit: Typo*

cRIO TCP/IP Problems

in Real-Time

Hi,

We've had the same problems with the cRIO platform when NI switched the CPU from Intel to PowerPC

and the RT OS from ETS to VxWorks.

Apparently, when you want to access the TCP packet received by the cRIO 2 different times, it takes

about 200 msec to access it the second time, depending on the CPU type and load.

Our typical RT architecture was something like this before : (UDP)

SenderSide:

- Prepare data to send in a cluster

- Flatten cluster to string

- Get String length

- Type cast the string length to a 4-byte string

- Prepend the string length to the data

Receiver side:

- Wait for UDP data, length 4 bytes (string length)

- Read 4 bytes, format to I32 string length

- Read remaining string length

- Unflatten datastring to Cluster and process
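
For readers who are not on LabVIEW, the length-prefix framing in the two lists above can be sketched as follows. This is an illustration, not our code: the flattened cluster is treated as opaque bytes, and the big-endian byte order of the 4-byte length is an assumption that you should check against your own sender.

```python
import struct

# 4-byte signed length header; big-endian byte order is assumed here.
HEADER = struct.Struct(">i")


def frame(payload: bytes) -> bytes:
    """Prepend the 4-byte length to the (already flattened) data."""
    return HEADER.pack(len(payload)) + payload


def unframe(buffer: bytes):
    """Return (payload, rest), or (None, buffer) if the message is not complete yet."""
    if len(buffer) < HEADER.size:
        return None, buffer
    (length,) = HEADER.unpack_from(buffer)
    end = HEADER.size + length
    if len(buffer) < end:
        return None, buffer
    return buffer[HEADER.size:end], buffer[end:]
```

The receiver first looks at the 4 length bytes and only then knows how much more to read, which is exactly the second access that became slow on the newer targets.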

While this worked flawlessly in Windows, RT on Fieldpoint, CompactFieldpoint and the first series of cRIOs (the Intel line),

the code suddenly broke when NI switched its OS to VxWorks on the newer cRIO targets.

Perfectly working code suddenly had to be rewritten, just because a newer type cRIO was selected.

But anyhow,

We found some kind of workaround which proved to be working.

General description :

Sender Side :

- Only use TCP, UDP proved to be too unreliable on cRIO

- Prepend the String length before the flattened string, and send as a single 'send function'.

- If total string size exceeds 512 bytes, split the string to 512 byte packets, and send them with separate 'send functions'

Receive side :

- Expect no more than 512 bytes per TCP Send function; a longer string arrives split in

separate 'TCP Sends'

- Access every received packet only once, to keep cycle times fast

- Read TCP bytes with the 'immediate' option, then check the received packet size against the expected packet size (first 4 bytes)

- If more bytes are expected than were received, read the remaining bytes with the 'standard' option
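
Since I can't post the LabVIEW code, here is a rough sketch of the same send/receive pattern using plain sockets. It is an illustration under assumptions: a plain `recv` call stands in for the TCP Read 'immediate' mode, the 4-byte length is assumed to be big-endian, and all names are made up. Only the 512-byte limit comes from the workaround described above.

```python
import socket
import struct

CHUNK = 512                    # maximum bytes handed to one send call
HEADER = struct.Struct(">i")   # 4-byte length prefix (byte order assumed)


def send_message(sock, payload):
    """Prepend the length, then send in pieces of at most CHUNK bytes."""
    data = HEADER.pack(len(payload)) + payload
    for start in range(0, len(data), CHUNK):
        sock.sendall(data[start:start + CHUNK])


def recv_exactly(sock, count):
    """Blocking read of exactly count bytes (the 'standard' style read)."""
    parts = []
    while count > 0:
        part = sock.recv(count)
        if not part:
            raise ConnectionError("peer closed the connection mid-message")
        parts.append(part)
        count -= len(part)
    return b"".join(parts)


class MessageReader:
    """Reads one length-prefixed message at a time and keeps any surplus bytes."""

    def __init__(self, sock):
        self.sock = sock
        self.pending = b""

    def read(self):
        data = self.pending
        if not data:
            data = self.sock.recv(CHUNK)  # take whatever has arrived, once
            if not data:
                raise ConnectionError("peer closed the connection")
        if len(data) < HEADER.size:
            data += recv_exactly(self.sock, HEADER.size - len(data))
        (length,) = HEADER.unpack_from(data)
        end = HEADER.size + length
        if len(data) < end:
            data += recv_exactly(self.sock, end - len(data))  # read the remainder
        self.pending = data[end:]  # bytes that already belong to the next message
        return data[HEADER.size:end]
```

The header and the first part of the data come out of the same read, so a short message is touched only once and a second read happens only when the message is longer than what has already arrived.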

For us this proved to be a working solution, which does not take 200 msec to read a packet from the TCP/UDP stream.

But it affects the real-time behaviour of the OS, since TCP takes more priority in the OS scheduler than UDP did,

causing more jitter on loop times.

I'm still trying to figure out how to explain this kind of stuff to customers who choose cRIO as their

product/industrial platform.

Try explaining that the manufacturer changed the TCP stack behaviour between 2 different cRIO versions.

That the underlying OS has changed is of no interest to them. It proves that NI has no clue on how automation is

done in a mature industrial market, instead of a research environment or prototyping work.

But let's not turn this into a rant on NI ;-)

I'm sorry I can't send you any code, but that is under company copyright.

If you have any questions, let me know, I'll try to help.

Tom