
Posts posted by 2and4

-


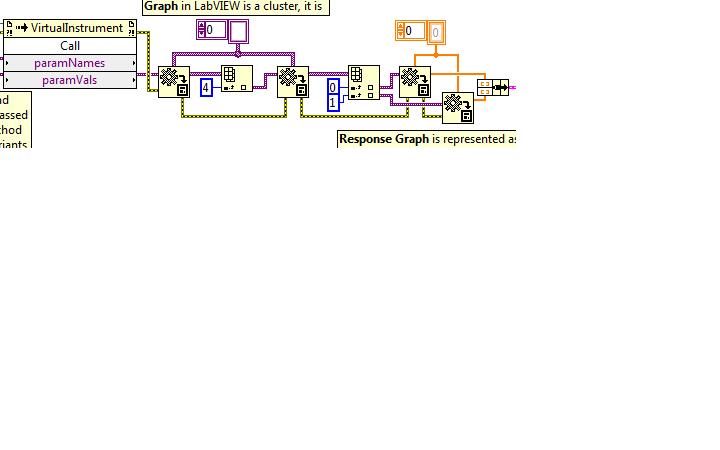

I've had this problem. Take a look at the attached picture. My ActiveX server was a LabVIEW EXE, and so was my ActiveX client (the VI shown). In your case, the ActiveX server will be your API and the ActiveX client will be your VI.

I had to do what is shown in the attached picture to solve my problem. I thought that paramVals was a 2-D array, but it turned out to be a variant that contained a 1-D array of variants. Each variant in that array in turn contained a 1-D array of variants. It took a lot of digging through documentation to figure this out.
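The unwrapping shown in the picture can be sketched outside LabVIEW as well. This is a minimal Python sketch, assuming a hypothetical `Variant` class that simply wraps a value (it is not a real COM or LabVIEW type); it turns a `paramVals`-style variant holding a 1-D array of variants, each of which holds a 1-D array of variants, into an ordinary 2-D list.

```python
from dataclasses import dataclass
from typing import Any, List


@dataclass
class Variant:
    """Hypothetical stand-in for an ActiveX/LabVIEW variant: it just wraps a value."""
    value: Any


def unwrap_param_vals(param_vals: Variant) -> List[List[Any]]:
    """Convert variant -> 1-D array of variants -> 1-D arrays of variants into a 2-D list."""
    rows = []
    # Level 1: the outer variant holds a 1-D array of variants (one per row).
    for row_variant in param_vals.value:
        # Level 2: each row variant holds a 1-D array of variants (one per cell).
        # Level 3: each cell variant holds the actual value.
        rows.append([cell.value for cell in row_variant.value])
    return rows


if __name__ == "__main__":
    param_vals = Variant([
        Variant([Variant(1.0), Variant(2.0)]),
        Variant([Variant(3.0), Variant(4.0)]),
    ])
    print(unwrap_param_vals(param_vals))  # [[1.0, 2.0], [3.0, 4.0]]
```

In LabVIEW terms, each "level" above corresponds to one Variant To Data conversion, which is why treating paramVals as a plain 2-D array fails.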

-

QUOTE (jasonh_ @ Apr 18 2009, 09:55 PM)

Recently I created an automated test that must collect data over a number of variables, for example collecting temperature data on a Wi-Fi radio over a number of test cases. The most natural way to write the code would be nested for loops. It would look something like this:

    for every voltage,
        for every temperature,
            for every channel,
                for every data rate,
                    take power measurement;
                end
            end
        end
    end
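The four nested loops visit every combination of settings, with the innermost variable (data rate) changing fastest. A minimal Python sketch, using made-up sweep values, checks that `itertools.product` yields the same sequence as the nested loops; that sequence is what a state machine has to reproduce one step at a time.

```python
import itertools

# Hypothetical sweep values; a real test would load these from its configuration.
voltages = [3.0, 3.3]
temperatures = [-20, 25, 85]
channels = [1, 6, 11]
data_rates = [6, 54]


def nested_loop_order():
    """Combinations in the order the four nested for loops would visit them."""
    order = []
    for v in voltages:
        for t in temperatures:
            for ch in channels:
                for rate in data_rates:
                    order.append((v, t, ch, rate))  # "take power measurement" happens here
    return order


# itertools.product advances its last argument fastest, like the innermost loop.
product_order = list(itertools.product(voltages, temperatures, channels, data_rates))

assert product_order == nested_loop_order()
print(len(product_order))  # 2 * 3 * 3 * 2 = 36 measurements
```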

However, my test must be robust and flexible. I cannot simply put 4 nested for loops on my block diagram. For one thing, it would be huge. Also, I could not pause, run special error-handling code, or do a number of other things that a state machine allows me to do. The problem is that if I have a number of discrete states, such as

init

set voltage

set temp

set channel

set data rate

measure power

stream data to file

exit

I must figure out how to simulate the state transitions of nested for loops. Currently I have a separate state, "update user settings", which has all the logic built into it. This logic is implemented as a MathScript node with a large number of if-statements, about 3 screens high. It works, but it doesn't seem ideal: it makes my code harder to understand and more error-prone. This seems like a common problem, so I would like to know how other people are solving it.
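One way to avoid a screens-high block of if-statements (offered as an alternative, not what the poster did) is to precompute the sweep and keep only an index in the state machine's data. The "update user settings" state then finds the outermost variable that advanced and queues "set" states for it and every variable inside it, exactly as the nested loops would. A minimal Python sketch, assuming the state names from the list above and a hypothetical sweep dictionary:

```python
import itertools
from collections import deque
from typing import Dict, List, Optional, Tuple

# Outermost variable first, innermost (fastest-changing) last, like the nested loops.
VARIABLES = ["voltage", "temp", "channel", "data rate"]


def states_for_point(previous: Optional[Tuple[int, ...]], current: Tuple[int, ...]) -> List[str]:
    """Mimic nested loops: when a variable advances, re-set it and every variable inside it."""
    if previous is None:
        first = 0  # very first point: set everything
    else:
        first = next(i for i, (old, new) in enumerate(zip(previous, current)) if old != new)
    return [f"set {name}" for name in VARIABLES[first:]] + ["measure power", "stream data to file"]


def run_state_machine(sweep: Dict[str, list]) -> List[str]:
    """Return the sequence of states visited; the hardware I/O itself is left out."""
    # Work with index tuples so consecutive points always differ, even if a value repeats.
    points = list(itertools.product(*(range(len(sweep[name])) for name in VARIABLES)))
    index, previous = 0, None
    pending, visited = deque(["init"]), []
    while pending:
        state = pending.popleft()
        visited.append(state)
        if state == "init":
            pending.append("update user settings")
        elif state == "update user settings":
            if index < len(points):
                pending.extend(states_for_point(previous, points[index]))
                pending.append("update user settings")
                previous, index = points[index], index + 1
            else:
                pending.append("exit")
        # "set ...", "measure power" and "stream data to file" would drive the hardware
        # using sweep[name][previous[i]]; "exit" queues nothing, so the loop ends.
    return visited
```

Because the transition logic depends only on which index changed, adding a fifth sweep variable means adding one name to `VARIABLES` rather than another layer of if-statements.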

How about using 6 parallel loops: the first a UI loop (with an Event structure), and the other 5 dedicated to the variables you are trying to read? Each loop is a consumer in that it receives messages on its own dedicated queue (as presented in the LabVIEW Intermediate I course).

Each loop will also be a state machine. The messages that the loop dequeues have to tell the loop 1) what to do and 2) what data to use. Flattened strings can contain both a command portion and a data portion. Variants can also be used. A cluster with an Enum and a variant/string is also commonly used.
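The "cluster with an Enum and a variant" message can be modeled in Python as an enum command plus an untyped payload. This is a minimal sketch; the command names and the `handle` function are hypothetical examples for the "data rate" loop, not taken from the post.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Any


class Command(Enum):
    """Hypothetical commands a loop understands (the Enum half of the cluster)."""
    SET_VALUE = auto()
    RESULT = auto()
    STOP = auto()


@dataclass
class Message:
    """Enum + variant: what to do, plus whatever data that command needs."""
    command: Command
    data: Any = None


def handle(msg: Message) -> str:
    """One iteration of a consumer state machine: the command selects the case."""
    if msg.command is Command.SET_VALUE:
        return f"set data rate to {msg.data}"
    if msg.command is Command.RESULT:
        return f"received result {msg.data}"
    return "stopping"
```

In LabVIEW the Enum would be wired to the case structure's selector, and each case would use Variant To Data to recover the payload type it expects.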

A loop will receive commands from the previous loop and data from the subsequent loop. For example, the "data rate" loop will receive commands from the "channel" loop and data back from the "power measurement" loop. In other words, when the "channel" loop runs, it delegates to the "data rate" loop.

Similarly the "channel" loop will receive commands from the "temperature" loop and data from the "data rate" loop, the difference being the data from the "data rate" loop includes the data that the "data rate" loop received earlier from the "power measurement" loop.

I recommend that a loop use the SAME queue to receive commands AND data.

Using an architecture like this, you can incorporate error handling, PLUS it is maintainable since each loop is a state machine. You may even be able to remove your MathScript node.
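The delegation pattern can be sketched with Python threads and `queue.Queue`, using only three of the five loops ("channel", "data rate" and "power measurement") to keep it short. Assumptions: each loop owns one queue and receives both commands and data on it, as recommended above; messages are plain `(command, data)` tuples standing in for the Enum + variant cluster; the data rates are hypothetical and the "measured" power is a made-up formula; the channel loop runs in the main thread for brevity.

```python
import queue
import threading

DATA_RATES = [6, 54]  # hypothetical data rates


def power_loop(inbox: queue.Queue, reply_to: queue.Queue) -> None:
    """Innermost consumer: 'measures' power and sends the data back up."""
    while True:
        command, data = inbox.get()
        if command == "stop":
            break
        channel, rate = data
        fake_power = 20.0 - 0.1 * rate + 0.01 * channel  # stand-in for a real measurement
        reply_to.put(("data", (channel, rate, fake_power)))


def data_rate_loop(inbox: queue.Queue, power_q: queue.Queue, reply_to: queue.Queue) -> None:
    """Receives commands from the channel loop and data from the power loop on ONE queue."""
    while True:
        command, data = inbox.get()
        if command == "stop":
            power_q.put(("stop", None))  # pass the shutdown down the chain
            break
        if command == "run":  # data is the channel number
            results = []
            for rate in DATA_RATES:
                power_q.put(("measure", (data, rate)))
                _, measurement = inbox.get()  # the reply arrives on the same queue
                results.append(measurement)
            reply_to.put(("data", results))


def channel_loop(channels, inbox: queue.Queue, rate_q: queue.Queue) -> list:
    """Outer loop: delegates each channel to the data rate loop and gathers its data."""
    all_results = []
    for channel in channels:
        rate_q.put(("run", channel))
        _, results = inbox.get()
        all_results.extend(results)
    rate_q.put(("stop", None))
    return all_results


def run(channels) -> list:
    channel_q, rate_q, power_q = queue.Queue(), queue.Queue(), queue.Queue()
    workers = [
        threading.Thread(target=power_loop, args=(power_q, rate_q)),
        threading.Thread(target=data_rate_loop, args=(rate_q, power_q, channel_q)),
    ]
    for w in workers:
        w.start()
    results = channel_loop(channels, channel_q, rate_q)
    for w in workers:
        w.join()
    return results
```

Calling `run([1, 6])` returns one `(channel, rate, power)` tuple per combination; the data the channel loop receives already includes what the data rate loop collected from the power loop.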

-

Will the header contain a string that indicates the "version", i.e. whether it is the "old style" or the "new style"? If so, you can do this in the following way:

1. BEFORE you update the typedef to the new version, make a cluster constant copy of the old typedef on your block diagram.

2. Disconnect that copy from the typedef.

3. Place the code that converts the incoming header string into the typedef cluster inside a case structure.

4. Parse the version info out of the incoming header string and use it to control the case structure.

5. The Default case will do exactly what you're doing right now. i.e. convert the string to the latest typedef.

6. The "Old Version" case will convert the string to a cluster using the cluster constant copy you created. Remember that the copy is disconnected from the typedef.

7. In the "Old Version" case you can unbundle the newly created cluster and rebundle just the appropriate items into the typedef.

See the attached example. It uses an old and a new version of a Config XML file, but the idea is the same. Save Config.xml and Config_Ver2.xml to some directory, then run ConfigModule_UnitTest and set the path to either of the XML files. It will work just fine. In your case, instead of an XML file you have a header string.
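The same steps translate to text-based code: read the version first, then either parse into the current layout (the Default case) or into a frozen copy of the old layout and rebundle its fields into the current one. This is a minimal Python sketch assuming a hypothetical header format of `key=value` pairs separated by semicolons, where old headers carry no version and new ones carry `version=2`; all field names, and the 0.0 default for the new field, are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class HeaderV1:
    """Frozen copy of the OLD layout (steps 1-2: disconnected from the current type)."""
    name: str
    gain: float


@dataclass
class Header:
    """Current 'typedef' layout; 'offset' is a hypothetical field added in version 2."""
    name: str
    gain: float
    offset: float


def parse_pairs(text: str) -> dict:
    """Split 'a=1;b=2' into {'a': '1', 'b': '2'}."""
    return dict(item.split("=", 1) for item in text.split(";") if item)


def parse_header(text: str) -> Header:
    fields = parse_pairs(text)
    version = fields.get("version", "1")  # step 4: the version selects the case
    if version == "1":
        # "Old Version" case: parse into the old layout, then rebundle into the new one (step 7).
        old = HeaderV1(name=fields["name"], gain=float(fields["gain"]))
        return Header(name=old.name, gain=old.gain, offset=0.0)  # assumed default
    # Default case (step 5): the string already matches the latest layout.
    return Header(name=fields["name"], gain=float(fields["gain"]), offset=float(fields["offset"]))
```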

Hope that helps!

-

QUOTE (atpalmer @ Apr 17 2009, 11:20 PM)

I am building a solar cell test system as something like a student project. I have access to LabVIEW 8.5 and I want to run the solar toolkit VI at the link below. The toolkit is written for version 8.6 and won't open. Is there any way I can get an 8.5-compatible version? If someone with 8.6 could be so kind as to do a save-as and post the result, it would benefit everyone. http://zone.ni.com/devzone/cda/epd/p/id/5918

Thank you if this is possible.

The attachment below can also be found at the link above.

I opened the code in LabVIEW 8.6 and "Saved for Previous Version (8.5)". I got some warnings when I did that so I don't know if it worked. Unfortunately I don't have LabVIEW 8.5 on my laptop so I couldn't check it.

Anyway, here's a zip file of what was generated. Hope it works...

-

QUOTE (torekp @ Apr 17 2009, 01:14 PM)

Thanks guys, I will take your advice. Now if only y'all could just agree ... Actually, if SVs are just a fancy version of TCP, and UDP is easier on the DAQ computer than TCP ... then I guess ned's advice wins.

If you were looking for a tiebreaker vote, I second the UDP recommendation. Your DAQ program will be the UDP sender and your external PC will be the UDP receiver. This will be more efficient than using the TCP/IP functions in the DAQ program, since UDP doesn't keep track of whether the receiver actually received a particular piece of sent data (you indicated that it doesn't matter if a piece of data is occasionally lost). Shared Variables introduce the most overhead.

There is a very easy-to-understand UDP example that ships with LabVIEW: in Example Finder, go to Networking >> TCP & UDP and open UDP Sender.vi and UDP Receiver.vi. Try it out:

1. Open UDP Receiver.vi on one PC.

2. Open UDP Sender.vi on another networked PC.

3. Set the "Remote Host" control on the Sender to the IP address or alias of the Receiver.

4. Type a message into the "Data String" control.

5. Set the #Repetitions to something around 100.

6. Run the receiver.

7. Run the sender. Note that the sender doesn't care if it was started first or not.

8. You'll see the message you typed into the "Data String" showing up at the receiver.

Simple as that. You'll have your communication up and running in no time.
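For readers outside LabVIEW, the same fire-and-forget pattern can be sketched with Python's standard `socket` module. This is a minimal sketch, not the shipped LabVIEW example: both ends run on the local machine (127.0.0.1) on a port picked by the OS, and the message text is made up; across two PCs the sender would target the receiver's IP address instead.

```python
import socket


def send_messages(message: str, host: str, port: int, repetitions: int) -> None:
    """UDP sender: fires datagrams without knowing whether anyone receives them."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        for _ in range(repetitions):
            sender.sendto(message.encode("utf-8"), (host, port))


def demo(repetitions: int = 100) -> int:
    """Start a receiver, send messages to it, and count how many arrived."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(0.5)          # stop waiting once datagrams stop arriving
        port = receiver.getsockname()[1]
        send_messages("hello from the DAQ PC", "127.0.0.1", port, repetitions)
        received = 0
        try:
            while True:
                data, _addr = receiver.recvfrom(1024)
                if data == b"hello from the DAQ PC":
                    received += 1
        except socket.timeout:
            pass
        return received


if __name__ == "__main__":
    # Over loopback all datagrams usually arrive, but UDP itself makes no guarantee.
    print(demo())
```

Note that the sender never checks for a listener: had it run before the receiver was bound, its datagrams would simply have been dropped, which is the trade-off that makes UDP cheap on the DAQ side.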

-

Are you sure you are using a Waveform Graph and not a Waveform Chart? If it is a Waveform Chart, I remember having a similar problem in LV 8.5. I was updating the chart by wiring an array to the chart terminal. On Task Manager I saw memory being chewed up. I tried to use the Performance and Profile Window to find any memory leaks but couldn't find any (are you sure you used VI Metrics and not the Performance and Profile Window?).

Eventually I found that the problem was the "Chart History Length" of the chart. Whenever the array was larger than the chart history length, the extra data was apparently being stored in memory, and that was what was chewing it up.

If this turns out to be the problem, then you could try increasing the chart history length, OR make sure the array you feed to the chart terminal only has as many elements as the chart history length. I'd be curious to hear if that was the problem.
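A chart keeps only a fixed number of the most recent points (its history length), which behaves roughly like a bounded buffer. The Python sketch below is a hypothetical model of that behavior, not LabVIEW's actual implementation, together with the second workaround suggested above: trimming each update to the history length before writing it to the chart. The history length of 1024 is just an example value.

```python
from collections import deque
from typing import Deque, List, Sequence

HISTORY_LENGTH = 1024  # example value; check the chart's own "Chart History Length"


class ChartModel:
    """Hypothetical model of a waveform chart: keeps at most `history_length` points."""

    def __init__(self, history_length: int = HISTORY_LENGTH):
        self.history: Deque[float] = deque(maxlen=history_length)

    def update(self, block: Sequence[float]) -> None:
        # deque(maxlen=...) silently drops the oldest points once it is full.
        self.history.extend(block)


def trim_to_history(block: Sequence[float], history_length: int) -> List[float]:
    """Workaround: only pass the newest `history_length` points to the chart."""
    return list(block[-history_length:]) if history_length > 0 else []
```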

I tried recreating this in LabVIEW 8.6 and couldn't.

QUOTE (Cat @ Mar 3 2009, 09:08 AM)

Hi all, I need a LAVA category of "Strange behaviour that I can't explain!"

Here's the problem: I dynamically call a VI that, in essence, reads in data via TCP (64kS/s), buffers that data if needed, performs an FFT on it, and displays the results on a waveform graph. This program will run just fine for hours, when all of a sudden it starts chewing up memory at a rate of about 200k per second (as reported in the "Processes" tab of Task Manager) until the machine runs out of memory and crashes. If I close the VI in the midst of the memory grab and reopen it, it's fine again (until the next time it happens). This bug doesn't always occur (I've run it for days with no problem), but it happens often enough that it's more than just an annoyance.

This has happened in both 7.1.1 and 8.5.1, on a variety of computers, all running WinXP. The code has been built into an executable. I've never had the source code show this behaviour, but it hasn't been used as much as the exe.

Any thoughts?

Cat

-

Changing the "access (0:read/write)" Enum input to the "Open/Create Replace File" function from "write-only (truncate)" to "write-only" seemed to solve the problem. I don't know if that item was available with that Enum in an older version of LabVIEW, but on my copy of LV 8.6 that Enum caused a coercion dot. Here's a slightly cleaned up version of your VI and a screenshot (in case you don't have LV 8.6). You might also want to concatenate a LineFeed at the end of each number.
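Why the change fixed this particular VI isn't stated above, but the two modes differ in a way that is easy to demonstrate, assuming their usual meaning: "write-only (truncate)" empties an existing file when it is opened, while plain "write-only" keeps the existing contents and starts writing at the beginning, overwriting only as many bytes as it writes. A minimal Python sketch of both, using the POSIX-style flags in `os` and appending a linefeed after each number as suggested; the file name and numbers are made up.

```python
import os
import tempfile
from typing import Iterable


def write_numbers(path: str, numbers: Iterable[float], truncate: bool) -> None:
    """Write one number per line; `truncate=True` mimics "write-only (truncate)"."""
    flags = os.O_WRONLY | os.O_CREAT
    if truncate:
        flags |= os.O_TRUNC  # existing contents are discarded on open
    fd = os.open(path, flags)
    with os.fdopen(fd, "w") as f:  # wrapping an open descriptor does not truncate it
        for n in numbers:
            f.write(f"{n}\n")  # linefeed after each number


def read_text(path: str) -> str:
    with open(path) as f:
        return f.read()


def demo() -> tuple:
    with tempfile.TemporaryDirectory() as folder:
        path = os.path.join(folder, "numbers.txt")
        write_numbers(path, [1, 2, 3], truncate=True)
        write_numbers(path, [9], truncate=False)  # overwrites "1\n", keeps the rest
        without_truncate = read_text(path)
        write_numbers(path, [9], truncate=True)   # file is emptied first
        with_truncate = read_text(path)
    return without_truncate, with_truncate
```

Here `demo()` returns `("9\n2\n3\n", "9\n")`: without truncation, stale data from an earlier run can survive after the newly written bytes.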

-

QUOTE (tushar @ Jan 15 2009, 03:25 AM)

In the past we built software that can capture images from analog cameras and webcams. We used a PCI card for capturing analog images. I don't think there is a need to go deep into the details of the driver: almost all drivers will be compatible with DirectShow, and I think it would be better to design your software on top of DirectShow. After all, it would not be a good experience to have to scrap your entire code and start from scratch just because you plan to change your capture device.

I agree: it would be best to use DirectShow. We don't have the option of using a PCI card for capturing analog images: we have to use a laptop.

Thanks for the help!

Akash.


-

We are trying to analyze images generated by a microscope using NI Vision software and LabVIEW. The microscope outputs composite video. We want to send the images to a laptop using a composite video to USB converter. The problem is that there are SEVERAL of these composite video to USB converters available, and we don't know which one has a driver with an API that we can call from LabVIEW relatively easily.

I'm guessing most of these converters come with software that, when installed, registers ActiveX Type Libraries or .NET Assemblies on the OS. The problem I had once in the past is that the classes were not very well built and using the classes' methods was quite cumbersome.

Has anyone used any of these converters? Any suggestions on which of the many we should try? Thanks in advance...

-

Bold labels on block diagram... (in Development Environment (IDE))

QUOTE (Ic3Knight @ Apr 19 2009, 12:20 PM)

It could be your Application font got set to something other than Default. Go to Tools >> Options >> Fonts and see what the Application Font is set to. That font defines how your labels look.