MartinPeeker

Posts: 31

Days Won: 2

Posts posted by MartinPeeker

-

I just had the same problem. I couldn't create override methods using GDS, and I couldn't remove the file from the project. Rename and remove worked, but I still couldn't add an override method with GDS. Adding the override using LVOOP worked, but again, I couldn't remove it from the project.

It turned out I had VIs with the same names in Dependencies. They were there because I had used the GDS clone method to copy a number of VIs to another class and then removed them from the original class. This included a polymorphic VI that referenced these VIs, but the references weren't transferred, so they now pointed at the VIs with the same names under Dependencies (the files previously removed from the original class after the clone).

Somewhat confusing, but thought I'd share anyway...

-

As I understand it your problem is having two child classes that are very similar except in some functionality. Your solution is to make one child inherit from the other so that you can benefit from the similarities, but this gives you problems when calling the parent, which forces you to make implementations in a parent or grandparent because of what the grandchild needs.

I've been there a few times, and honestly I've mostly hacked my way around it, but the elegant design for this (if possible) would be to put the functionality that differs between the two children in an aggregated class, which is overridden depending on which child's functionality is to be used. This leaves you with only one child, which behaves differently depending on which aggregated class it is using.
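LabVIEW code is graphical, so here is a rough Python analogy of that design. It is a sketch of the composition (strategy) pattern, not LVOOP code, and the names `Behaviour`, `ScaleBehaviour`, `OffsetBehaviour` and `Child` are hypothetical. Shared logic stays in the single child class, and the part that differed between the two former children is delegated to whichever aggregated object the child holds.

```python
from abc import ABC, abstractmethod


class Behaviour(ABC):
    """Aggregated class holding only the functionality that differs (hypothetical name)."""

    @abstractmethod
    def process(self, value: float) -> float:
        ...


class ScaleBehaviour(Behaviour):
    """What the first former child did differently."""

    def process(self, value: float) -> float:
        return value * 2.0


class OffsetBehaviour(Behaviour):
    """What the second former child did differently."""

    def process(self, value: float) -> float:
        return value + 10.0


class Child:
    """The single remaining child: shared logic here, varying logic delegated."""

    def __init__(self, behaviour: Behaviour) -> None:
        self.behaviour = behaviour

    def run(self, value: float) -> float:
        cleaned = abs(value)  # shared step that both former children had in common
        return self.behaviour.process(cleaned)  # the part that used to differ
```

For example, `Child(ScaleBehaviour()).run(-3.0)` returns `6.0` and `Child(OffsetBehaviour()).run(-3.0)` returns `13.0`. Neither variant inherits from the other, so the parent never needs methods that only one variant uses.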

-

Just to check the obvious:

Are you absolutely sure that there isn't a "\r" in the second array element? Are the strings limited to a single line? Are there different settings for Environment -> End text with Enter key? Have you checked the array strings using '\' Codes Display?
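Outside LabVIEW, the same check can be done by escaping control characters so that a stray carriage return becomes visible. The following is a rough Python analogue of '\' Codes Display; the helper name `show_codes` and the example strings are hypothetical.

```python
def show_codes(s: str) -> str:
    """Escape control characters so that e.g. a trailing carriage return shows up as \\r."""
    return s.encode("unicode_escape").decode("ascii")


elements = ["Channel B", "Channel B\r"]  # hypothetical array contents
for element in elements:
    # Both look identical on screen, but the escaped form reveals the extra "\r"
    print(show_codes(element))

# Strings that look the same can still compare unequal
print(elements[0] == elements[1])
```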

-

I haven't worked with Control Design and Simulation and can't look at the code, but my guess is that your TransferFunction 7 and 8 expect their input on the left and return their output on the right. Left-to-right data flow is the standard LabVIEW style guideline, so try rewiring those functions so that the output of Summation 3 goes to the left side of TransferFunction 7 and the right side of TransferFunction 7 goes to the bottom input of Summation 3 (and similarly for Summation 4).

-

I'm running LabVIEW on Ubuntu, and it's working pretty well. Mostly I'm still on 8.6, but I've installed versions up to 2012 and they all run well. In 8.6 I have some quirks: more silent crashes than I would expect on Windows, severe graphics problems with LV on some computers, and fonts that can be a bit messy, although I suspect that's a general LV for Linux issue. Sometimes the window management (KDE) can mess up a bit.

I've developed a product that runs on a dedicated, slimmed-down Ubuntu distro with the LV RTE, and that works fine. However, I've hit performance degradation on those computers with later LV versions (while other computers show improvement), which is why I'm still on 8.6.

-

I'm not sure what would qualify as a major change that fundamentally changes the product. New concepts are introduced from time to time, such as Embedded targets (8.xx?), the Project Explorer (8.xx?), native object-oriented programming (8.20) and the Actor Framework (20XX?). All versions have good backward compatibility, and you can save your code for previous versions back to at least 8.0, provided you do not use features introduced after that. It's seldom very difficult to upgrade from an older version to a newer one, although there are special cases where people run into problems.

When it comes to fundamental changes, I guess major changes in how the compiler works would qualify. This white paper describes the history of the compiler, which was introduced with LV2; the largest changes since then were made in LV2009, which introduced DFIR and 64-bit compatibility.

Apart from that it's hard to answer your question without knowing more about why you are asking it.

-

Since LV2009, NI has released one major version per year in August, named after the release year. This is followed by a Service Pack containing bug fixes, typically released in early March. So currently the latest release is LV2013SP1. Many prefer to wait until the service pack is released before updating their systems.

You can find some information on versions here.

-

This might be a good use case for Variant Attributes.

-

Tried disabling the other event structures - didn't help....

-

Thanks for that hooovahh.

To make things a bit more puzzling, I thought I had a workaround by setting the key focus back where I wanted it in the 'Mouse down?' event. It works well in my little example: focus stays on my string control if I type and click the indicator at the same time. It doesn't work in my application, though; focus is stolen and the typing jumps to the start of the string control. In all honesty I should mention that the actual application is somewhat larger and has three event structures in it, although no other event structure handles any mouse events or any filter events.

I tried adding a wait before setting focus back on the control, thinking that would break my workaround the way it breaks in my application, but it turns out that it works even with a lengthy wait in the 'Mouse down?' event.

-

Crosspost from here; it didn't gain any momentum there, though.

I'm trying to prevent accidental stealing of focus from a string control by discarding the Mouse down? filter event. It turns out, however, that a mouse down on any control or indicator that can take input, such as strings, numerics, paths etc., takes focus away from the string control.

Is there a use case for this that I can't see or is it a bug?

-

I use multiple ESs in the same block diagram, typically in situations similar to those others have mentioned. My system updates running graphs and indicators with user events, which are handled in one ES, while UI interaction is handled by another ES that also checks system status in its timeout case. I even have ESs in other parallel loops, such as a clock loop, just to listen for a 'Quit' user event.
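This works because a user event is one-to-many: every event structure registered for 'Quit' receives its own copy. The following is a rough Python analogy, assuming one queue per registration and a `get` timeout standing in for the event structure's timeout case. `UserEvent`, `clock_loop` and `run_demo` are hypothetical names, not LabVIEW APIs.

```python
from __future__ import annotations

import queue
import threading


class UserEvent:
    """Hypothetical analogue of a LabVIEW user event: each registration gets its own queue."""

    def __init__(self) -> None:
        self._registrations: list[queue.Queue] = []

    def register(self) -> queue.Queue:
        q: queue.Queue = queue.Queue()
        self._registrations.append(q)
        return q

    def generate(self, data=None) -> None:
        # Every registered listener receives its own copy of the event
        for q in self._registrations:
            q.put(data)


def clock_loop(quit_events: queue.Queue, ticks: list[int], timeout_s: float = 0.05) -> None:
    """A loop whose 'event structure' only handles Quit plus a timeout case."""
    while True:
        try:
            quit_events.get(timeout=timeout_s)  # 'Quit' user event arrived
            return
        except queue.Empty:
            ticks.append(1)  # timeout case: update the clock display here


def run_demo() -> bool:
    quit_event = UserEvent()
    ui_registration = quit_event.register()     # e.g. the UI event structure
    clock_registration = quit_event.register()  # the clock loop's event structure
    ticks: list[int] = []
    clock = threading.Thread(target=clock_loop, args=(clock_registration, ticks))
    clock.start()
    quit_event.generate()  # one 'Quit' reaches both listeners
    clock.join(timeout=2.0)
    # True if the clock loop stopped and the UI listener also got the event
    return (not clock.is_alive()) and ui_registration.get_nowait() is None
```

One design choice here is that each listener owns its own queue. This keeps a slow listener from consuming events meant for the others.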

-

I'm with Darren on this one. Mostly even down to single vi:s actually.

Working with an SCM makes this even worse (it might be better if it were integrated, but that is not my luck). I want to rename the file in the SCM so that I don't lose the revision history, but that leaves you in the rename-on-disk world of pain that for(imstuck) was talking about.

I didn't know about the possibility of moving files around in the File tab. It's one of those things I've overlooked, so thanks for letting me know. How does this work with an integrated SCM?

-

Anecdotally, I remember someone saying that hyperthreading can make VIs execute less efficiently in some cases, although I don't remember what those cases are.

This article describes some of the caveats with hyperthreading, having debug turned on being one of them.

-

Hello again folks!

This is a bit of a bump of an old thread: I still haven't been able to solve this issue, and it remains with LV2012.

A short recap of the problem:

What happens is that on the first, single-processor computer I see a dramatic fall in CPU usage. The other, in contrast, shows a dramatic rise in CPU usage. The computers do not have LV installed, only the RTEs.

Machine1 (1* Intel® Celeron® CPU 900 @ 2.20GHz):

CPU% with LV8.6: 63%

CPU% with 2011SP1: 39%

Machine2 (2* Genuine Intel® CPU N270 @ 1.60GHz):

CPU% with LV8.6: 40%

CPU% with 2011SP1: 102%

On the second machine the maximum CPU is 200%, since it has two CPUs. The load seems to be fairly evenly split between the CPUs.

I've been trying to track this down again lately, and my suspicions are now directed towards hyperthreading, as this is one of the main differences between the computers.

Machine 2, with the CPU described above as 2* Genuine Intel® CPU N270 @ 1.60GHz, turns out to have a single-core CPU with hyperthreading enabled, whereas machine 1, with the 1* Intel® Celeron® CPU 900 @ 2.20GHz, does not use hyperthreading.

I've tried most performance tricks in the book, turning off debugging, setting compiler optimization etc. to no avail except minor improvements.

Unfortunately we cannot turn off hyperthreading on machine 2; the option seems to be disabled in the BIOS. We've contacted the vendors and might be able to get hold of another BIOS in a few days if we're lucky. Machine 1 doesn't support hyperthreading.

Anyone ever got into problems like these with hyperthreading on Linux? Any idea of what I can do to solve the issue, apart from buying new computers? Am I barking up the wrong tree thinking this has anything to do with hyperthreading?

-

A somewhat simpler approach to this particular case:

The OP is setting the colour of every cell to one of two colours to highlight failures. It would be more efficient to let the default background colour be white and, in a case structure, update only the cells that should be yellow (where the Boolean is false).
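Here is a minimal Python sketch of the idea. It assumes the table starts with every cell at the default white background and that a 2D Boolean array marks which cells passed. The colour constants and function names are hypothetical. The point is that only the failing cells get a colour write, which in LabVIEW usually means fewer property-node calls.

```python
from __future__ import annotations

WHITE = 0xFFFFFF   # assumed default background colour of every cell
YELLOW = 0xFFFF00  # highlight colour for failures


def failing_cells(passed: list[list[bool]]) -> list[tuple[int, int]]:
    """(row, column) of every cell whose Boolean is False."""
    return [
        (r, c)
        for r, row in enumerate(passed)
        for c, ok in enumerate(row)
        if not ok
    ]


def colour_table(passed: list[list[bool]]) -> tuple[list[list[int]], int]:
    """Return the cell colours and how many colour writes were needed."""
    colours = [[WHITE] * len(row) for row in passed]  # table starts all white
    writes = 0
    for r, c in failing_cells(passed):
        colours[r][c] = YELLOW  # only failing cells are touched
        writes += 1
    return colours, writes
```

With a 2x3 table containing two failures, this performs 2 colour writes instead of 6.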

-

Do you have a bunch of loops which don't have a 0ms wait in them to yield the CPU? Acceptable alternatives are event structures, ms internal nodes, or most any NI-built node which includes a timeout terminal.

For loops or while loops without a node like these will be free-running, executing literally as fast as the scheduler will allow, which is often not necessary and detrimental to the performance of the application as a whole.

No loops that I can think of. I looked through some parts just to make sure and found one timeout event that will never fire (nothing connected to the timeout terminal), but adding a timeout there didn't make any difference. The CPU usage seems to be consistently higher than that of the 8.6 application, whichever parts of the code are running. This makes me think that the 2011SP1 run-time engine is less efficient on this machine.

-

Thanks for your suggestions. Unfortunately, no luck so far in solving the problem.

To give a bit of background information:

The system communicates with a USB device through drivers written in C and called through good old CINs. The data then goes through an algorithm and is presented in 4 charts using user events. More user events are triggered for GUI updates, but the GUI I use now doesn't care about those. The update rate is about 30Hz. It uses about 80 classes altogether, although many of these are for administrative use (user accounts, printing etc.) and quite a few are wrappers of different kinds. Slightly more than 2000 VIs are loaded.

1: On LabVIEW 2011, in the build : Advanced, turn off SSE2

2: On LabVIEW 2011, in the build : Advanced, Check "Use LabVIEW 8.6 file layout"

3: other combination of 1 & 2

The other thing I noted is that your second CPU is an N270, which I believe is a netbook Atom type. It may handle math and double/single operations differently. I can't see why it would change from 8.6 to LV11, but it may have something to do with SSE2 optimization. According to Wikipedia, both the Celeron and the Atom support it.

SSE2 has been tested back and forth without any change. I use the 8.6 file layout because some code relies on it.

I've set the program in different states and compared the CPU usage between 8.6 and 2011SP1 to see if I can nail down any specific parts of my code that would cause the increase:

* With the drivers switched off, the increase in CPU usage is 65% (relative).

* Starting the drivers, still about 65%

* Starting the algorithms and GUI updates gives more than 100% increase (I can't separate those two yet).

* Stopping the GUI updates, i.e. not listening to any of the user events for GUI updating, also gave more than a 100% increase, although the overall CPU usage dropped more than I would have expected in both 8.6 and 2011SP1.

I've run the application on my development machine, which also has two CPUs. Like machine1 above, it shows better performance with 2011SP1 than with 8.6.

So the conclusion is that everything takes up more CPU on this specific computer with 2011SP1, and that the algorithms take up even more. Further suggestions or crazy ideas on why I see this behaviour are welcome. I need coffee.

/Martin

-

How much of the CPU usage is your application versus such things like operating system, antivirus, etc.?

Hi Tim_S, thanks for your reply.

The operating system is doing very little, ranging from one to a few percent.

-

Hi LAVA,

I need your help!

I've recently started updating a system from LV8.6 to 2011SP1, and have ended up in confusion. I deploy the system as executables on two different machines running Linux Ubuntu, one a laptop with a single processor and the other a panel PC with two processors.

What happens is that on the first, single-processor computer I see a dramatic fall in CPU usage. The other, in contrast, shows a dramatic rise in CPU usage. The computers do not have LV installed, only the RTEs.

Machine1 (1* Intel® Celeron® CPU 900 @ 2.20GHz):

CPU% with LV8.6: 63%

CPU% with 2011SP1: 39%

Machine2 (2* Genuine Intel® CPU N270 @ 1.60GHz):

CPU% with LV8.6: 40%

CPU% with 2011SP1: 102%

On the second machine the maximum CPU is 200%, since it has two CPUs. The load seems to be fairly evenly split between the CPUs.
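To put the figures above on a common footing, the following small Python sketch computes the relative change, (new - old) / old, and the share of total capacity. It counts machine2 as two CPUs, as the operating system reports them (max 200%). It uses only the numbers from this post.

```python
# Figures from the post; 100% corresponds to one fully loaded CPU as reported by the OS.
measurements = {
    "Machine1": {"cpus": 1, "lv86": 63.0, "lv2011sp1": 39.0},
    "Machine2": {"cpus": 2, "lv86": 40.0, "lv2011sp1": 102.0},
}


def relative_change(old: float, new: float) -> float:
    """Relative change in percent: (new - old) / old * 100."""
    return (new - old) * 100.0 / old


for name, m in measurements.items():
    change = relative_change(m["lv86"], m["lv2011sp1"])
    share = m["lv2011sp1"] / m["cpus"]  # percent of the machine's total capacity
    print(f"{name}: {change:+.0f}% relative change, {share:.0f}% of total capacity")

# Machine1: -38% relative change, 39% of total capacity
# Machine2: +155% relative change, 51% of total capacity
```

Even normalized to capacity, machine2 goes from 20% to 51% of what it can deliver.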

Why is this happening, and what should I do to get the CPU usage down on machine2 (the one being shipped to customers)?

/Martin

-

Hi Adam!

Thanks for all your help. I now see your point that maximized windows need to be resizable in order to be minimized. I guess there is a good reason for this. Having a window, as opposed to full screen, implies that there may be other windows. You are therefore not allowed to let your window fill the screen without the possibility to minimize it, as that would make it impossible to see the other windows.

I have to ask, what value is the title bar if you can't minimize, unmaximize, etc.? Why do you want the title bar visible? If you hide that you can't move it. Problem solved. I think you're making it harder just to show a useless title bar.

The reason I want the title bar is that I like the standardized look. I need a title in some way, and that is the natural place for it. It also gives an intuitive placement of the menus, as I find that menus without a title bar look a bit weird.

//Martin

-

Maximized implies resizable. That's just how the window managers work. It sounds like what you want is something to be full screen. To do that you need a combination of multiple settings: disable resizing, hide the title bar (which appears to also hide the border), hide the toolbar (or not, maybe you want that), then set the panel bounds to be the size of the screen. Make sure to reset all of that when your VI is stopping or it could get quite annoying to work with. When I experimented with this it looked like maybe LabVIEW wasn't accounting for the invisible border when I set the panel bounds, so you may need to account for that. You also may want to hide the scrollbars while running (that can be set directly from the front panel).

Hi Adam!

Thanks for your response!

I've been thinking of your solution, and sure, it is one way of doing it, but it doesn't get me all the way.

What I try to accomplish is:

A window that fills the screen except for the toolbar (this can be done using the Application.Display.PrimaryWorkspace property together with a given size for the toolbar)

A window that is not movable (as it is when it is truly maximized)

A window that is not minimizable.

A window that cannot be resized.

A title bar

It seems I can't get it all:

Maximize, and it will allow me to minimize

Fill the screen using window size, and it will be movable using the title bar.

Also, if I set the run-time position to maximized, the window does not become maximized. The size seems close to right, but the window is not positioned at (0,0) and it is movable.

If I save the VI as maximized and choose Unchanged as the run-time position, the window is properly maximized (not movable) in edit mode, while in run mode it is movable. Not allowing minimize has no effect.

You're right that it most probably has to do with the window manager, but I don't think that is the sole culprit.

If I save my VI maximized, not minimizable and set to open when called, and then run it as a subVI, it will be too wide, too high and not placed at (0,0). But it will not be minimizable.

If I run it as a top-level VI, it will have almost the right size (the right width except for the right border), but it will be minimizable.

I believe there is no good solution to my problem, and since I'm not on a supported distribution I can't complain too much, but if this behaviour exist on supported distributions I would call it a bug.

BTW, I have never worked with OS X, but surely you must be able to configure a window to fill the screen and not be movable? (And then, hopefully, disallowing any resizing or minimizing of any sort).

ShaunR, thanks for entering the discussion. Double-clicking the title bar does not change the size for me (2010 on Ubuntu).

//Martin

-

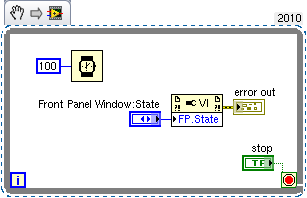

Attachment: GuiBehaviour.vi

Hi Adam!

Of course, you're right. The user was not allowed to resize the window.

However, I still have problems. What I want to do is maximize my window and make it not resizable. But it seems that if I maximize the window in every way possible and set resizable and minimizable to false, I get a window that is as big as the screen but not maximized; it is still movable. It is also minimizable.

If I let the window be resizable, it does become maximized. Now an ugly workaround would be to maximize the window whenever the resize event triggers. This only works once though, and I can't really figure out why.

Just saving the vi in a maximized state or setting run-time position to maximized won't help either, due to this cross-post.

Attaching my lab code for this.

Much obliged

//Martin

Edit: It seems that making the window not resizable breaks the maximized state, and that disallowing minimize doesn't work at all.

-

problem with array and text file

in LabVIEW General

Use Read From Spreadsheet File.vi twice, once for each file. Index out the first column of both resulting arrays and build a new array using the "Build Array" primitive. You probably need to transpose as well.

But if the time stamps are to be connected to the measurement values, which looks to be the case, I would bundle each time stamp with the corresponding measurement value into a cluster and then have a 1D-array of those clusters.
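Here is a rough Python analogy of the cluster approach. It assumes tab-delimited files (the default delimiter of Read From Spreadsheet File), held here as strings so the example is self-contained. `Sample` stands in for the cluster, and `first_column` and `bundle` are hypothetical helpers.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass


@dataclass
class Sample:
    """Stand-in for a LabVIEW cluster bundling one time stamp with one value."""
    timestamp: str
    value: float


def first_column(text: str, delimiter: str = "\t") -> list[str]:
    """First field of every non-empty row of a delimited text file."""
    return [row[0] for row in csv.reader(io.StringIO(text), delimiter=delimiter) if row]


def bundle(times_text: str, values_text: str) -> list[Sample]:
    times = first_column(times_text)
    values = [float(v) for v in first_column(values_text)]
    # zip stops at the shorter column, much like an auto-indexing For Loop
    return [Sample(t, v) for t, v in zip(times, values)]
```

For example, `bundle("12:00:00\n12:00:01\n", "1.5\n2.5\n")` gives `[Sample(timestamp='12:00:00', value=1.5), Sample(timestamp='12:00:01', value=2.5)]`. Each time stamp then travels with its measurement instead of living in a parallel array.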