Posts posted by ayumisano

-

It might help us to help you if you could post either a single VI or an image of the DAQ/Trigger loop you are trying to debug. As for specific cards, you can go to the NI website under Products & Services, and 20 minutes of reading (tops) will tell you most of what you want to know about the different cards.

Hi Michael,

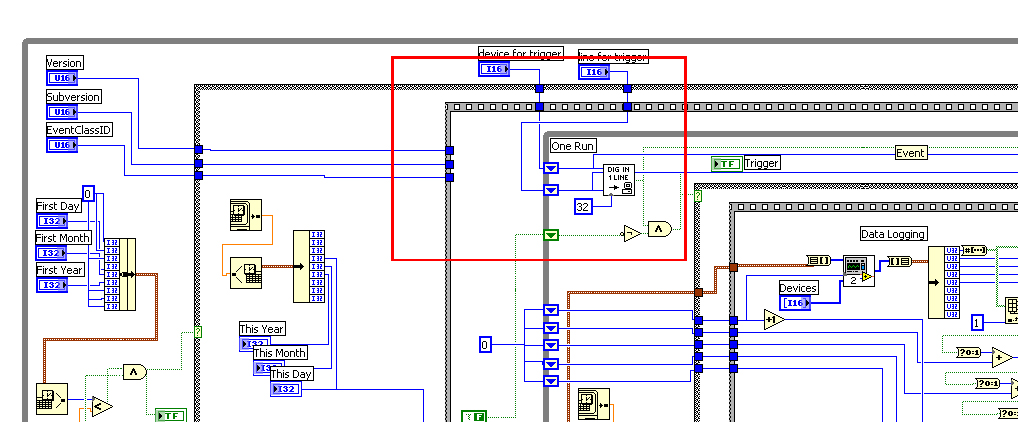

I have attached an image of part of the DAQ program. I am not sure whether this helps, but the program is too large to fit in a single screen capture.

I suspect that the DIG line in the while loop is what causes the problem. So now I am trying to use Port Config, Port Read and DIO Clear instead: I put Port Config outside the while loop, and Port Read and DIO Clear inside it. It seems that the memory leak problem is solved (I ran the program for 12 hours and it didn't show the insufficient RAM message as it did in the past), but I have not yet tested whether the triggering still works properly this way (that is, whether it can still receive a signal from the PCI-6534).

Thank you for your help!

Ayumi

-

Hi Ayumi:

Make sure that you don't create references to the hardware you are using inside the while loop; that is a sure way to create a bad memory leak and also slow things down badly. Create the reference just once outside the loop, and pass it in to the things that use it repetitively.

Also, close the reference after the loop exits. This is only important if the program is run many times without exiting, but it's still good practice to close the reference when the program is done with it.

This might not be your problem, but it's one of the most common ways new users create memory leaks in LabVIEW, so I thought it was worth mentioning.

Good luck & Best Regards, Louis
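
For readers unsure what "creating a reference inside the loop" looks like, here is a minimal sketch of the pattern Louis describes. LabVIEW diagrams are graphical, so it is written in Python purely as an illustration. `FakePort` is a hypothetical stand-in for a hardware reference, not an NI-DAQ call, and its `open_count` counter only imitates resources piling up.

```python
class FakePort:
    """Hypothetical stand-in for a hardware reference (not an NI API)."""
    open_count = 0  # number of references currently open

    def __init__(self):
        FakePort.open_count += 1

    def read(self):
        return 0  # placeholder for a digital port read

    def close(self):
        FakePort.open_count -= 1


def poll_leaky(iterations):
    """Anti-pattern: a new reference on every pass, never closed."""
    for _ in range(iterations):
        port = FakePort()
        port.read()
    return FakePort.open_count


def poll_clean(iterations):
    """Create the reference once, reuse it in the loop, close it afterwards."""
    port = FakePort()
    try:
        for _ in range(iterations):
            port.read()
    finally:
        port.close()
    return FakePort.open_count
```

In this toy model `poll_leaky` leaves one open reference behind per iteration, while `poll_clean` leaves none, which is the difference between configuring the port inside and outside the while loop.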

Hi Louis:

I don't understand what you mean by "create reference". Do you mean using port config inside a while loop?

Thanks for your help!

Ayumi

-

hmm ... how to say this?

If you want to program a hardware trigger, you have to use a card that supports hardware triggering. Most of the NI-DAQ cards support at least a rising-edge start trigger, but only a few support e.g. a "reference trigger".

Therefore, yes, you have to use a specific card, but as mentioned above most of the NI-DAQ cards are those "specific" cards.

Dear all,

Thank you for your reply.

I know that it is memory leakage because when I open the Task Manager I can see that the RAM "consumed" by LabVIEW keeps increasing while there is no triggering. I will try to find a simpler version to post here.

I would like to know whether PCI-6602, PCI-6601 and PCI-6534 can be used for hardware triggering.

Thank you very much for your help.

Ayumi

-

Dear all,

I am writing a data acquisition program with LabVIEW. The program has a while loop which waits for triggering from outside. The program works properly when there is triggering but there is memory leakage when there is no triggering. I would like to know whether there is any method to solve this problem.

Moreover, does hardware triggering require specific I/O cards such as PCI-6534?

Thank you for your help in advance!

Ayumi

-

Ayumi,

Without even an order-of-magnitude estimate of the tolerance to which you need to synchronize, it is impossible to provide much advice. It's a completely different matter if you have to synchronize things to a tolerance of say 12.5 ns (which is the smallest time period potentially resolvable by the counter/timers on the PCI-6602) vs. synchronizing things to a tolerance of 1 second. And all the orders of magnitude in-between imply different trade-offs.

It is also important to provide information as to whether you need counter/timers or DIOs synchronized, and if you are using DIOs, how many are inputs, how many are outputs, and if there are outputs which need to react to the inputs.

-Pete Liiva
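
As a side note on the numbers above: 12.5 ns is one period of an 80 MHz clock, which is the fastest timebase on the PCI-6602. The 80 MHz figure is added here as background and does not appear in the post itself. The sketch below only does the arithmetic; the function name is made up for illustration.

```python
def period_ns(frequency_hz):
    """Return the length of one clock period in nanoseconds."""
    return 1e9 / frequency_hz


TIMEBASE_HZ = 80e6  # assumed 80 MHz timebase (not stated in the post)

print(period_ns(TIMEBASE_HZ))  # 12.5

# Ratio between a 1 second tolerance and a single 12.5 ns tick
print(period_ns(1.0) / period_ns(TIMEBASE_HZ))
```

The ratio comes out at nearly eight orders of magnitude, which is why the required tolerance has to be known before any hardware advice can be given.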

Dear Pete Liiva,

Thank you for your reply!

I am using only the digital inputs of the PCI-6602 and NI 6509 (five PCI-6602 cards and one NI 6509), with at least 20 inputs on each card. Actually the average event rate is not that high, only 1 event per second, but of course the faster the better. If the event rate is one event per second, how long a delay would be acceptable?

Thank you in advance~

Ayumi

-

Dear all,

I would like to know whether it is possible to synchronize five PCI-6602 cards, and how. (I was told by an NI staff member that it's impossible to synchronize more than 4 cards.) My aim is to synchronize an NI 6509 and five PCI-6602 cards.

Moreover, I would like to know the delay between starting and finishing a data read on a software-timed digital I/O card.

Thank you very much for your attention!

Ayumi

-

Dear all,

I would like to buy an I/O card for data acquisition. The event rate is 1 per second on average. What I need is 96 bits of digital input. Which card do you recommend? Thank you very much for your attention!

Ayumi

-

Dear all,

I am writing a library for the LabVIEW Call Library Function. I have to include sstream and use istringstream and ostringstream from it. I am building the .dll using MS Visual C++. However, there is always an error telling me that istringstream and ostringstream are undeclared identifiers when I build the .dll file.

I have tried building a simple .exe file which also includes sstream and uses istringstream and ostringstream, compiled with MS Visual C++. There was no error.

I would like to know why the error only occurs when I build a .dll file and what I can do to solve this problem. Thank you very much for your attention! ^^

Ayumi

-

Ayumi,

In the user manual, Page 3-26 (page 45 of the pdf document) has a short discussion on the DIO. It appears that if you are doing only input, you don't have to configure any PFI lines, just use PFI0 through PFI31. It also seems to imply that it treats the DIO lines as a single port of 32 bits, but I haven't tried to do anything to verify this.

The manual also states "It is necessary to specify whether a PFI line is being used for counter I/O or digital I/O only if that line is being used as an output."

Hope this helps,

-Pete Liiva
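
If the DIO lines really are read as a single 32-bit port, as the manual seems to imply (unverified, as noted above), then checking one line comes down to testing one bit of the value read. The sketch below assumes the port read returns an unsigned 32-bit integer in which bit n corresponds to line n. That mapping and both function names are assumptions for illustration, not part of NI-DAQ.

```python
def line_state(port_value, line):
    """Return 1 if the given line (0..31) is high in a 32-bit port value."""
    if not 0 <= line < 32:
        raise ValueError("line must be in 0..31")
    return (port_value >> line) & 1


def active_lines(port_value):
    """List every line number that is high in the port value."""
    return [line for line in range(32) if line_state(port_value, line)]


# Example: only line 12 is high
value = 1 << 12
print(line_state(value, 12))
print(active_lines(value))
```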

Dear Pete Liiva,

Thank you so much for your help! Finally I did the job!

Ayumi

-

Ayumi,

Here is a link to the PCI-660X pdf manual. On page 4-10 (or the 61st page of the pdf document), there is the information you are looking for. It looks as if you need to use PFI0 through PFI31 if you want to use all 32 lines of DIO, since the documentation seems to imply that PFI32 through PFI39 can NOT be used for DIO. You may have to specifically assign each DIO to each PFI line, which might not be a bad exercise to go through. I tend to like to set my own defaults in my programming, instead of relying on preset defaults.

This seems to imply that it may not be possible to simultaneously use the full functionality of the 32 DIO lines and 8 counter/timers on a PCI-6602, but this is just a first impression I get with a quick glance at the problem. I have only been using the counter/timers on the PCI-6602 recently since I don't have a need right now for the DIO lines.

Good luck!

-Pete Liiva

Dear Pete Liiva,

Thank you very much for the user manual of PCI-6602!!

Should I set port 0 and line (for example) 12 if I am trying to read an input signal from line 12 of the I/O card? I am using LabVIEW 6.1 only, which cannot be used with NI-DAQmx devices, so I wonder whether it can read from all 32 lines of the PCI-6602.

Anyway, thanks a lot for your help!

Ayumi

-

I suspect that the NI staff member you talked to was probably wrong - perhaps they misinterpreted your application. I know that I have had a wide range of experience with support from staff from NI, sometimes excellent and sometimes bad, and just about everything in-between. It's a big company, so I would expect it to be impossible to expect brilliant tech support every time for every situation.

Without more description of the digital signals you are reading, it is hard to answer your specific questions. I assume you are reading multiple parallel digital lines, but at what rate? At first glance, it looks like the PCI-6601 and PCI-6602 both have 32 digital I/O lines. I have more experience using the counter-timers on the PCI-6602 than using the DIO lines.

-Pete Liiva

Dear Pete Liiva,

Thank you for your reply!

The signal I am reading is just 9V from a battery (simple circuit with a light bulb, made intentionally for testing these I/O cards). There is no way to add lines (other than 0 - 7) to 6602 and 6601 for NI-DAQ, I can only see port 1, lines 0 to 7 in the test panel in NI-DAQ traditional devices. Can all PFI be changed into DIO?

Ayumi

-

Dear all,

I am connecting 5 I/O cards (1 PCI-6534, 3 PCI-6602 and 1 PCI-6601) and trying to read digital signals from them. I was told by an NI staff member that 5 NI I/O cards cannot be connected together to take signals; however, the combination mentioned above works properly. I would like to know whether it works because the 5 cards are of the same generation, or whether the claim of that NI staff member was wrong.

Another question: I would like to know whether it is possible to make the PCI-660x family read 32 bits of digital signals (I am using LabVIEW 6.1 and NI-DAQ [not DAQmx]).

Thank you very much for your attention!

Ayumi

-

Yes, the executable will run slightly faster, since diagrams are not saved, front panels of lower-level VIs are not saved, and debugging functions are disabled.

I doubt you will get an order-of-magnitude speed improvement, though...

The VI is also "compiled". Every time you save a VI, it does a quick compile. You will see the compile message only if you are saving a large number of VIs at the same time.

N.

I have tried both burst mode and executable file.

The executable file of Cont Pattern Output did not give an order-of-magnitude increase in frequency. It was still around 1.5MHz.

And for the burst mode, I don't know why no signal was seen when I connected one of the lines to a CRO. (I used PCI-6534)

-

Thank you for your reply!!

I will try burst mode to see if it can give a 20MHz pulse.

At the same time, I am thinking about creating an executable file instead of running a vi. I would like to know whether running an executable file will be faster than running a vi. (I mean going from the MHz range now to the order of 10MHz. I have heard that this is because the exe file no longer needs compilation while the vi does; is that true?)

Ayumi

-

I don't use Linux (or LV for Linux, for that matter), so I can't give you an answer based on experience, but basically, all the functionality that is available in LV for windows should be available in the Mac and Linux versions as well. There may be some exceptions, but the basics should all be there.

As for your C++ code, Linux has C++ compilers and shared libraries as well, so you should be able to recompile your code with a Linux C++ compiler (you may need to make some modifications). I think there is one called g++. Anyway, after you compile it into a Linux shared library, you just call it as you would a DLL. That's the basic idea, anyway.

You should try reading the rest of the posts in this board and search NI's site for "linux" to see what kind of problems people ran into.

Thank you for your reply! ^^

Linux doesn't use .dll, right?? So what should I do to make a library in Linux?

I am reading the articles from LabVIEW bookshelf about calling external code. It seems that only C source code can be used in Linux. Can C++ source code also be used in Linux?? (It would be a disaster for me to write the code again in C since I am not familiar with C)

Ayumi

-

Ayumi,

Can you PLEASE provide more complete information? It is impossible to follow what you are doing with little bits and pieces.

1 Explain your application

2 State your Software Version (including DAQ driver)

3 Describe your hardware & setup (triggering etc.)

4 Post your example code

5 State your exact problem

I cannot find your particular example in my LV version 7.1.1.

Neville.

Neville,

Thank you for your reply!

1. I am trying to send out pulses at highest frequency possible. (5V TTL)

2. I am using LabVIEW 6.1

3. PCI-6534 and I use electric cable to connect the I/O board and the CRO to read the signal

4. As seen in the attached vi, you can change the frequency of the signal output; the highest frequency that can be achieved with this vi is 1.25MHz. I would like to know whether this is because of the speed of my computer or because of other factors.

Thank you very much for your attention!

Ayumi

-

Yes, you can only run an EXE on the platform it was built in, and I remember seeing a fairly detailed explanation from Rolf somewhere on exactly why this is so.

An OS emulator (like wine) would probably work if you don't use hardware, but I don't know how fast or reliable it would be.

It's ok that I can build the vi again in Linux, but I just want to make sure that the functions which are available in Windows LabVIEW are also available in Linux LabVIEW.

-

Oh!! That means I cannot use .DLLs that were written in Windows?? What can I do?? I used C++ to write the code and made a .dll (I spent a lot of time on it). Is there any way that I can use this code in Linux (so that I will not waste my effort)? I am sorry for not being familiar with Linux.

-

Do you have the cintools dir in the LabVIEW folder?? That's my problem at this point. Apparently my CD does not have it available to install. It may be on the resource CDs, but those are not with me at the moment.

If you do a search on ni.com you can find the manual on how to add the extra files needed. You have to start a clean dll, and add about 4 files plus your .c code.

Thank you for your reply!

The most difficult thing is...I have the cintool dir in the LabVIEW folder. ^^"""

I was using Dev C++ for writing the .dll file. Now I change to MS Visual C++ and everything seems to be OK so far~

Ayumi

-

No, the Linux and Windows versions are very different. You'd need to buy the Linux edition separately or contact NI directly about it.

Installation under Linux is straightforward enough (run one of the programs on the CD).

Thank you for your reply!

I would also like to know whether the functions available in LabVIEW for Windows will also be available in LabVIEW for Linux. (I have finished writing some vi with LabVIEW in Windows. I understand that a vi written in Windows cannot be used in Linux, but I hope that I can at least use those vi as a blueprint and not have to modify too much to get the same function in Linux.)

Thank you for your attention~

Ayumi

-

Dear all,

I have a LabVIEW disc which can be installed in Windows, and I wonder whether I can also install LabVIEW in Linux from the same disc. If yes, can I install it in Linux just by putting the disc into the CD-ROM drive? Sorry for my ignorance, I am not that familiar with Linux. Thank you for your attention~

Ayumi

-

Dear Ayumi,

I don't know what platform you are using, but if it's a PC running Windows, then forget about deterministic nanosecond timing response.

If you use LabVIEW-RT on a PC or PXI target you should be able to get loop rates of the order of the high kHz or even a MHz.

The fastest and most reliable platform would be the compact-RIO running LV-RT on an FPGA.

Note that writing to file, or displaying data in plots would affect loop speed as well. Best way to do timing tests is to repeat the test about 10,000 times or something like that and then calculate and display (or save to file) the average speed.

See the NI site for some rough timing comparisons.

Neville.
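
The averaging approach Neville suggests can be sketched as follows. A LabVIEW diagram can't be shown as text, so this is Python, and `dummy_operation` is a made-up placeholder for whatever is being timed (for example one read-and-respond cycle). The idea is to time the whole batch and divide, with no file writes or plotting inside the timed loop.

```python
import time


def average_seconds(operation, repeats=10_000):
    """Run `operation` `repeats` times and return the mean time per call."""
    start = time.perf_counter()
    for _ in range(repeats):
        operation()
    elapsed = time.perf_counter() - start
    return elapsed / repeats


def dummy_operation():
    """Placeholder workload standing in for the real I/O step."""
    return sum(range(100))


if __name__ == "__main__":
    mean = average_seconds(dummy_operation)
    # Display (or save) only once, after the timed loop has finished
    print(f"average: {mean * 1e6:.3f} us per call")
```

Averaging hides jitter, so on a non-real-time OS the worst-case time of a single pass can be far larger than the mean this reports.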

Thank you for your reply!

Now I am trying to make an output signal at the highest possible frequency, and the result was 125000 Hz with the example named Const Pattern Output.vi (available with LabVIEW). Above 125000 Hz it shows the following error message:

"Because of system and/or bus-bandwidth limitations, the driver could not write data fast enough to keep up with the device throughput; the onboard device reported an underflow error. This error may be returned erroneously when an overrun has occurred"

But the PCI-6534 should have a maximum of 20MHz. Is it limited by Windows?? Will it be better if I use Linux instead?

Thank you for your attention!

Ayumi
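
Since the error message mentions bus bandwidth, a rough calculation of the data rate a continuous pattern output needs may help put the numbers in perspective. This is only arithmetic: the transfer widths below are assumptions, because the post does not say how wide the pattern in the example is, and the rate a given PC can actually sustain depends on the system.

```python
def required_bytes_per_second(update_rate_hz, width_bits):
    """Data rate needed to supply one new pattern per update."""
    return update_rate_hz * width_bits / 8


# Assumed widths of 8, 16 and 32 bits (not stated in the post)
for width in (8, 16, 32):
    rate = required_bytes_per_second(20e6, width)
    print(f"20 MHz at {width:2d} bits: {rate / 1e6:.0f} MB/s")

# The rate at which the example stopped working, assuming 8-bit patterns
print(required_bytes_per_second(125_000, 8) / 1e6, "MB/s")
```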

-

Dear all,

I would like to know what factors affect how fast a vi responds. I am trying to measure the time needed for the vi to receive a digital 5V TTL signal, write the time to a file, and then send a TTL signal back. (My final goal is to make it finish everything on the order of nanoseconds.) Is the speed of the whole process affected by the I/O card, the computer's speed, or anything else? I am using the PCI-6534. I saw in the data sheet on the web that it works at 20MHz. How can I maximize its speed?

Thank you very much for your attention!!

Ayumi

-

Memory leakage (in LabVIEW General)

Dear Michael,

I put both Port Config and DIO Clear in the while loop and luckily the triggering still works.

Thank you for the help and suggestions from all of you!

Ayumi