ak_nz

Posts: 88

Days Won: 7
Posts posted by ak_nz

-

I've had examples working fine in TS 2019 and LV 2020. All LabVIEW dependencies are packed libraries built in the dev environment, then loaded in TS using the adaptor configured as RTE.

Have you tried a very simple example, e.g. a simple VI that runs until its panel is closed?

-

If you mean how data is passed via the connector pane - it is all passed by value from a logical standpoint. That includes references, so you get a copy of the reference number in the VI, but it points to the same memory location.

I say "logical standpoint" as there are some instances where the compiler will get clever and optimize the call (or places where you have specified it, such as a subroutine). However, this doesn't affect how you as a programmer can view the behavior.

-

I'll preface my answer with - I haven't opened your code (no access to LV right now). So I can't speak to specifics.

But my opinion - you should have a single composition root (main.vi) that creates and injects all the main resources at the beginning of execution. Then, that same root manages disposal (including any specific ordering) at application exit. Main either keeps references for later disposal, or each level of your execution 'hierarchy' returns its injected dependencies recursively to enable that (e.g. as part of a Dispose() method).
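To make the idea concrete, here is a minimal sketch in Python rather than LabVIEW. The `Resource` and `Worker` classes are hypothetical placeholders, and `ExitStack` is just one convenient way to get reverse-order disposal; your own root may need a different ordering.

```python
from contextlib import ExitStack


class Resource:
    """Hypothetical resource (instrument session, logger, ...)."""

    def __init__(self, name: str, log: list[str]) -> None:
        self.name = name
        self._log = log
        self._log.append(f"open {name}")

    def dispose(self) -> None:
        self._log.append(f"close {self.name}")


class Worker:
    """Receives its dependencies; never creates or disposes them itself."""

    def __init__(self, logger: Resource, instrument: Resource) -> None:
        self.logger = logger
        self.instrument = instrument

    def run(self, log: list[str]) -> None:
        log.append(f"run with {self.logger.name} and {self.instrument.name}")


def main() -> list[str]:
    """Composition root: create, inject, then dispose in reverse order."""
    log: list[str] = []
    with ExitStack() as stack:
        logger = Resource("logger", log)
        stack.callback(logger.dispose)
        instrument = Resource("instrument", log)
        stack.callback(instrument.dispose)
        Worker(logger, instrument).run(log)
    return log


events = main()
```

Only `main` knows how the resources are created and in what order they go away; `Worker` just uses what it is handed.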

-

Personally I don't think of delegation as a pattern, just a natural consequence of encapsulation.

Your example demonstrates both polymorphism and delegation. If you want to focus only on delegation, I would probably make some adjustments:

- Two classes - "Duck" and "QuakingFunction".

- Duck class contains a QuakingFunction object. It has a 'constructor' or method to set the required object for that task (dependency injection).

- Duck class exposes the static "Quak" method. This method internally calls QuakingFunction.Quak() method of the object (your delegation).

Note - if you make the QuakingFunction.Quak() method virtual, it lets you implement new child classes in the future that Quak differently (Strategy pattern). You inject the one you want at the time.

You don't need the derived Duck classes at all in order to demonstrate delegation. The Duck.Quak() method can of course be called with the child class objects so that they too can quack .. like a duck (Inheritance).
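In text form the structure looks roughly like this. It is a Python sketch, not LabVIEW; `LoudQuakingFunction` and `MallardDuck` are hypothetical names I added only to show the Strategy and inheritance points.

```python
class QuakingFunction:
    """The object that actually knows how to quak."""

    def quak(self) -> str:
        return "Quak"


class LoudQuakingFunction(QuakingFunction):
    """Hypothetical child class: same contract, different behaviour (Strategy)."""

    def quak(self) -> str:
        return "QUAK!"


class Duck:
    """Holds a QuakingFunction and delegates to it."""

    def __init__(self, quaking_function: QuakingFunction) -> None:
        # Dependency injection: the caller decides which object does the work.
        self._quaking_function = quaking_function

    def quak(self) -> str:
        # Delegation: Duck does not quak itself, it forwards the call.
        return self._quaking_function.quak()


class MallardDuck(Duck):
    """Hypothetical derived Duck; inherits quak() unchanged."""


plain = Duck(QuakingFunction()).quak()
loud = Duck(LoudQuakingFunction()).quak()
inherited = MallardDuck(QuakingFunction()).quak()
```

Note that `Duck.quak()` never changes; only the injected object does.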

-

I'm afraid you're stuck with NIPM, as far as I'm aware. There used to be a method by which you could install packages manually but I don't know if it still exists.

To get around conflicts with ICT at our workplace, we virtualize the dev environment. Then we can do whatever we want (off the network). That might be something you could pursue as well.

-

3 hours ago, mabe said:

Maybe it's just me, but I find it very difficult to work with the new color scheme of NI TestStand. My eyes are burning after few minutes... Am I the only one?

I'll add my voice to that. When I ran Sequence Editor, I had an almost violent physical reaction. I don't mind the white as much (yes, I'd prefer something darker). I dislike the lack of clearly contrasting colours on buttons and labels, and the missing cognitive aids such as grid lines.

We were planning to transition to 2020 but we've decided to stick with 2019 instead. Pity; the new Quick-drop aka LV is really nice.

-

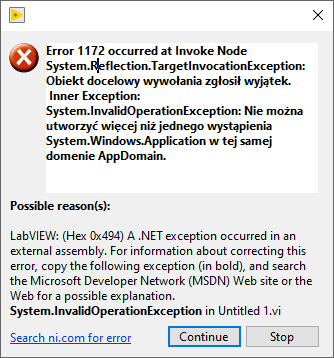

On 8/7/2020 at 6:30 PM, bartek618 said:

The method is static - you don't need a constructor node so try without that.

The exception text isn't in a language I'm familiar with but I suspect it may be due to which thread is executing the clumps. Try also setting the VI execution properties for the enclosing VI to run in the UI thread only.

-

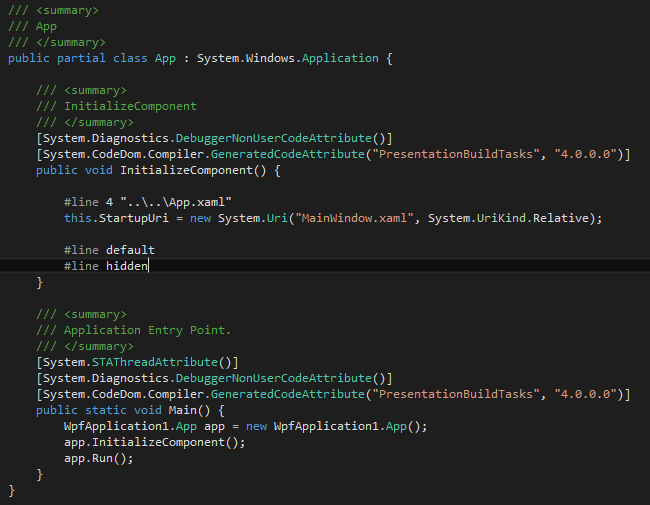

I assume you're talking about a WPF app.

WPF apps are normally launched via the static entry point App.Main() method, just like Windows Forms and Console apps (which by default specify a Main method in the arbitrarily named Program class).

The contents of this method are normally generated for you by the compiler. It typically creates an instance of the App class and calls its InitializeComponent() method followed by the Run() method. The default implementation of InitializeComponent() is to parse your App.xaml and determine the startup Window class (in your case, MainWindow.xaml) so that the Run() method can start up the Dispatcher for the message loop and load your window class.

Try calling the static Main() method in your assembly.

-

Curious how your company worked or works out the OS licensing. I tried to go down this rabbit hole with MS a couple of years ago and ended up giving up because it was so difficult to figure out for a small business.

We're not a small business, we're part of a large one. Guess there are benefits to such things after all.

-

VM works best for me. We're lucky that we have covered the Client OS licensing part of things, so it frees us up to create a VM per "project" if need be and to share the VM (+ change the product key). You need good external storage to keep all these VMs backed up, but when transitioning back to an old project it's just a case of copying it back over to the dev machine.

-

Just a note that the C# code shown by the OP isn't magically interpreted differently by the C# compiler and will throw an exception at run-time just as rolfk says and for the reason he says.

-

9 hours ago, Tim_S said:

What you ran into is that, by default, LabVIEW 2012 uses .NET 2. There is a significant change to .NET 3 and later that requires different behavior down in the bowels to use. NI has an article on how to specify using later .NET versions. LabVIEW 2015 uses .NET 4 by default (not sure the version where the switch occurs). One option would be to have LabVIEW use .NET 4 as that should be present on Windows 10.

The only other options I can see are

- Have an exe that would run before the installer to verify all the required components are installed.

- Don't use the LabVIEW installer as other installer applications can perform this type of checking

Out of interest, it has to do with the version of the run-time CLR rather than the framework (which has the runtime as well as base libraries etc.).

LV2012 and earlier - use CLR 2.0 (which exists in .NET Framework 2.0 - 3.5)

LV2013 and later - use CLR 4.0 (which exists in .NET Framework 4.0 and later - 4.6.2 inclusive)

You can easily download the .NET Framework 3.5 offline installer and add this install to your installer as an action. You can run the offline installer in "silent mode" so that it installs or bypasses if installation already exists.
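If you want that rule in a pre-install check, it boils down to a one-line lookup. A tiny Python sketch, where the function name is my own and the only facts used are the two version ranges listed above:

```python
def clr_version_for_labview(labview_year: int) -> str:
    """Return the CLR version a given LabVIEW release loads by default.

    Encodes only the rule from the post: LV2012 and earlier use CLR 2.0,
    LV2013 and later use CLR 4.0.
    """
    return "2.0" if labview_year <= 2012 else "4.0"
```

So an application built in LV2012 needs a framework that ships CLR 2.0 (2.0 to 3.5), which is why the 3.5 installer is the one to bundle.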

-

My preference would be to have a "Set to Default" method of the class that initialises the object with reasonable defaults, which you call at the startup of your application. Then your settings UI can call methods on the object to tweak the settings as the user desires.
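Something along these lines, sketched in Python. The `Settings` class, its fields and the default values are all invented for illustration; the point is that the defaults live in one method of the class rather than in control properties.

```python
from dataclasses import dataclass


@dataclass
class Settings:
    """Hypothetical settings object; field names are illustrative only."""

    sample_rate_hz: float = 0.0
    log_folder: str = ""

    def set_to_default(self) -> "Settings":
        """Single place where reasonable defaults are defined."""
        self.sample_rate_hz = 1000.0
        self.log_folder = "logs"
        return self

    def set_sample_rate(self, hz: float) -> None:
        """Called by the settings UI; validation stays inside the class."""
        if hz <= 0:
            raise ValueError("sample rate must be positive")
        self.sample_rate_hz = hz


# Application startup: initialise once, then let the UI tweak values.
settings = Settings().set_to_default()
settings.set_sample_rate(2000.0)
```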

As a general rule I dislike the "Default Value" properties of controls because they can be very hard to control and enforce over the development lifetime of an application.

-

The long and short of it is that your suspicion is right - there are several VI Server methods that are not implemented in the run-time engine and are thus not available if you build an executable. The same issue crops up in other areas like build automation.

The way I get around this is to use the IDE but automate the process via scripting. I have a build VI (happens to be a VI Package) that runs automatically via LabVIEW command line arguments that performs the necessary operations and then quits LabVIEW. Not ideal I know but the only realistic option I have found.

-

Yes, we found they usually lag behind current for a bit. Just adds to the pile of inconveniences leading to uninstall :/

Doesn't the toolkit use SharpSVN internally? You could probably manually over-write this assembly with the latest online and chances are it would work.

-

TSVN Toolkit works fine in LV2015. The only issue I have found is that exposing the SVN icon states onto the Project Explorer items is a bit of a network hog - as a project grows larger (100s of items in the project), project operations tend to suffer. But other than that I have found it a useful tool with SVN repos, especially when it comes to keeping files on disk and items in projects in sync (e.g. re-naming).

-

There is normally an idea of "interface" or "trait" that allows a class to say to callers that it implements certain behaviour. In standard out of the box LabVIEW, these ideas are only possible via composition rather than by inheritance. The GDS toolkit will allow you to create interfaces in a way but natively LabVIEW only supports single inheritance and has no notion of abstract classes or interfaces that exist only to enforce a contract for behaviour.

Don't forget that OOP hierarchies are not about "things"; they are about common behaviour in the context of your callers. If you find yourself over-riding methods to make them no-ops, this generally indicates that the child class doesn't really respect the contract and invariants of the base class, and there is a potential issue to resolve. This can be difficult to fully achieve in LabVIEW, so you often have to make compromises and document use cases for the next developer who follows you.

Generally the best rule of thumb is to keep your inheritance hierarchies as small as you can to avoid changes in base classes rippling through your hierarchy. Composition can help reduce dependency coupling but, again - this can be hard to achieve easily in LabVIEW.

In your example of a power supply with an additional method - this is functionality that only pertains to a certain unit and only makes sense for that unit. In other OOP languages the natural thing would be to move the behaviour into another hierarchy and inject it in, but in LabVIEW this is labor-intensive. My gut feel in this case would be to move the method down to the base HAL and implement the method in each child class - with the exception of the class that actually understands the request, all others can throw a run-time error ("Request not appropriate for the LAVA Power Supply type"). It's not ideal since you can't statically verify your application code, but it is a reasonable compromise. It does also force you to deploy your entire HAL again, but that's another story.
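Here is roughly what that compromise looks like, sketched in Python. All class and method names (`enable_remote_sense`, `SensePowerSupply`, and so on) are hypothetical. As a simplification, the base class supplies the error-raising default so the other children inherit it instead of each implementing it separately.

```python
class UnsupportedRequestError(RuntimeError):
    """Raised when a device is asked for something it cannot do."""


class PowerSupply:
    """Base HAL class; every request the application can make lives here."""

    type_name = "Generic"

    def enable_remote_sense(self) -> str:
        # Default for devices that do not understand the request.
        raise UnsupportedRequestError(
            f"Request not appropriate for the {self.type_name} Power Supply type"
        )


class LavaPowerSupply(PowerSupply):
    """Hypothetical unit without the extra feature; keeps the default."""

    type_name = "LAVA"


class SensePowerSupply(PowerSupply):
    """Hypothetical unit that actually understands the request."""

    type_name = "Sense"

    def enable_remote_sense(self) -> str:
        return "remote sense enabled"


def try_enable(supply: PowerSupply) -> str:
    """Application code: the failure only shows up at run time."""
    try:
        return supply.enable_remote_sense()
    except UnsupportedRequestError as err:
        return str(err)


ok = try_enable(SensePowerSupply())
failed = try_enable(LavaPowerSupply())
```

The application only ever talks to `PowerSupply`, which is the benefit; the cost is that a wrong call is caught at run time instead of at edit time.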

-

Are the .NET assemblies referenced in your project library in the source of the component prior to building a package?

I have several components that use .NET assemblies - I have never copied them to the LabVIEW folder when changing source; they have always been in the source project folder or sub-folder. LabVIEW effectively adds this folder to the Fusion search paths when resolving assembly locations.

-

In this instance your Cat and Dog hierarchies are actually different functionally - one meows and the other barks. Your "find Animal" code and the logic after it presume to work on any Animal - but Animal doesn't have a bark or meow. This is an LSP violation for the caller that expects an animal to do something specific.

Your use case of the API (your example) is that the animal needs to make a sound - bark or meow is irrelevant. In this instance I think it is cleaner to:

- Have a Make Sound method in Animal that the Cat and Dog base classes over-ride to Meow or Bark.

- If it is important to be able to make any Cat meow specifically, then you are better off adding a "Make Meow" and "Make Bark" to your Cat and Dog base classes that is only available to them and their children. This way you clarify to your callers that any animal can make a sound but only cats meow and only dogs bark.

The best approach here is probably to favor composition and move sound making into its own hierarchy of classes that are composed into your animals, but that's a whole different story.
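A sketch of the two bullets above in Python, since it is easier to post than block diagrams. The method names are my own transliteration of "Make Sound", "Make Meow" and "Make Bark", and the sound strings are placeholders.

```python
class Animal:
    """Contract for callers: any animal can make a sound."""

    def make_sound(self) -> str:
        raise NotImplementedError("child classes must over-ride make_sound")


class Cat(Animal):
    def make_sound(self) -> str:
        return self.make_meow()

    def make_meow(self) -> str:
        """Only available to Cat and its children."""
        return "Meow"


class Dog(Animal):
    def make_sound(self) -> str:
        return self.make_bark()

    def make_bark(self) -> str:
        """Only available to Dog and its children."""
        return "Woof"


def chorus(animals: list[Animal]) -> list[str]:
    """Caller code that works on any Animal without knowing the type."""
    return [animal.make_sound() for animal in animals]


sounds = chorus([Cat(), Dog()])
```

Code that works on `Animal` never needs to ask whether it holds a cat or a dog; code that genuinely needs a meow has to hold a `Cat`.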

-

We use VI Package Manager for this (vip files) and then deploy a single VI Package Configuration file (vipc) for the actual project that contains the correct version of the package dependencies. This way a developer can open the source and deploy the correct version of the packages.

-

What you describe is exactly how I deal with objects I want to share. Yeah, you have to consider the ramifications of multi-threaded access and managing what occurs in the IPE node (I almost never dynamic dispatch in there to protect against deadlocks in a future derived class) and you have to be sure you have captured your atomic activities either through a DVR or an internal static / non-re-entrant member of some kind.
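For readers more at home in text languages, the shape is similar to the Python sketch below. It is an analogy only: a `threading.Lock` around private state stands in for the DVR plus IPE structure, and `SharedCounter` is a made-up class.

```python
import threading


class SharedCounter:
    """Shared object: the lock and the state are private to the class."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._count = 0

    def increment(self, amount: int = 1) -> int:
        # The whole read-modify-write is one atomic activity.
        # Nothing overridable is called while the lock is held.
        with self._lock:
            self._count += amount
            return self._count

    def value(self) -> int:
        with self._lock:
            return self._count


counter = SharedCounter()


def worker() -> None:
    for _ in range(1000):
        counter.increment()


threads = [threading.Thread(target=worker) for _ in range(4)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

total = counter.value()
```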

But I only go with DVR route when I know that sharing is an objective; it's never the default.

-

We can run VI Tester tests in an automated fashion using the API (just like the UTF). You'd need the development environment on your CI machine though. There are plenty of posts around about setting up a CI system with LabVIEW.

I'd also like to add that VI Tester will not be deprecated by the UTF. They are tools that target testing in different ways - neither one will supplant the other any time soon.

-

Yes, and those are great because there is a time out feature that allows the application to continue, recover and provide useful information for fixing the problem. Do you also mean storing the object itself in a SEQ instead of sharing a DVR? Does that mean that all of your class methods must be surrounded by the Dequeue/Enqueue?

I don't think that the idea is to use DVR to lock a resource. For many of our objects, we call methods on them using DVR (ByRef). Every now and then, we realize that two objects end up with a circular dependency that was not expected and we experience a deadlock that just cannot be recovered from.

I appreciate that limiting the use of DVRs to basic accessors reduces the risk but it also restricts us from using more useful methods that are properly protected inside the object. Drawing the line can be challenging between reducing the risk and protecting class methods. Is there any VI Analyzer functions that allow for automatic check for an IPE inside an IPE where both have a DVR? It may not prevent the problem but it could help locate the problem.

Welcome to the wonderful world of references, right? You can see the lack of time-out on the DVR as a mild objection to reference-based designs from R&D. Personally we store the DVR in the private data of the class; the DVR is never exposed to callers.

As far as I know there are no in-built VI Analyzer checks, though I can see that it wouldn't be too hard to create your own.

-

The best practice we follow is to limit activities in DVRs to setting or retrieving data. We don't use DVRs as a "lock" mechanism to limit access to a resource. If we need such behavior we implement any other locking functionality that has time-out capability, such as semaphores, SEQs or the ESF.

This has allowed us to avoid a host of issues, such as deadlocks in dynamic dispatch methods between DVR'd classes in a hierarchy.
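The value of the time-out is easiest to see in a text-language sketch. This is Python, not LabVIEW; `resource_lock` and `use_resource` are invented names, and a `threading.Lock` with a timeout plays the role of the semaphore or SEQ.

```python
import threading

# Hypothetical lock guarding some shared resource.
resource_lock = threading.Lock()


def use_resource(timeout_s: float) -> str:
    """Try to take the lock; give up and report instead of hanging forever."""
    if not resource_lock.acquire(timeout=timeout_s):
        return "timed out"
    try:
        return "done"
    finally:
        resource_lock.release()


first = use_resource(0.1)

# Simulate another caller that holds the lock and never lets go.
resource_lock.acquire()
second = use_resource(0.1)
resource_lock.release()

third = use_resource(0.1)
```

With a plain blocking lock the second call would never return; with the time-out the caller gets a result it can log and recover from.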

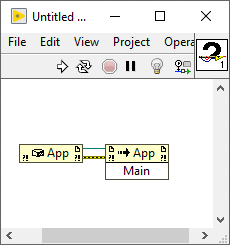

Calling Asynchronous VI in Teststand not working with lvlibp & LVRTE

Posted in TestStand

I should have clarified my workflow better. The PPL files, once built in the LabVIEW Dev environment, are then copied into a reference location that the specific TestStand project can access (this is normally within the root folder of the sequences or a sub-folder eg. "Components"). The Async steps are then configured to call the VI in the PPL directly. So component building (eg. PPLs but also other types like .NET assemblies) is independent and decoupled from the TestStand environment.

That works fine for the projects I have done.