Bruniii

Members

Posts: 28

Profile Information

Location: Italy

LabVIEW Information

Version: LabVIEW 2020

Since: 2014

-

Hi all, I have an application running on a system with both a discrete Nvidia GPU and an integrated one. The application calls a DLL to perform data processing on the Nvidia GPU while, in a parallel loop, it updates the UI plots with the data from the previous iteration of data collection. Right now the integrated GPU is not used (at least according to the Windows Task Manager), and enabling the plotting causes a small but visible increase in the processing time. Hence, I'm assuming that LabVIEW is also using the discrete GPU for the UI/plotting. Is it possible to force LabVIEW to use the integrated GPU for UI rendering? Thanks, Marco.

-

We do not have one. We are discussing it... but, unfortunately, we have also already started collaborating (because we have to...) on the assumption that we eventually will, and I had to provide the application. The application started as a very basic and incomplete one, but in the last few weeks it has become, to be honest, a little too "functional and usable". Of course, if we do sign a contract, I will remove the time bomb. Thanks! I used the dotNET Cryptography Library, a package on VIPM.

-

I thought about the hack of setting the clock back. Honestly, I don't know: the application produces data where the real timestamp attached to the data is very important. If they change the system clock, everything is compromised. Yes, it would always be possible to compensate for the offset in the log files or in the data stream... I don't want to make it hacker-proof; I simply want to make it inconvenient to keep using it and, most importantly, to prevent them from going to a third-party client and distributing the application forever even if our partnership doesn't go through. Regarding your suggested solution: I'm still pushing updates to the application, to fix bugs or add features. I guess I could put the counter inside the main .ini file, among the entries generated by LabVIEW, maybe as a second check, just in case they start changing the system clock... Right now I'm trying to generate a license file (with the expiration date) signed with a private key generated with OpenSSL, and I'm looking for a way to read it using the public key in LabVIEW. Marco.
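A minimal sketch of the two checks described above, written in Python for illustration only: an expiration date, plus a "last seen" timestamp stored in the .ini file so that moving the system clock backwards is detected. The section name `[license]`, the keys `expires` and `last_seen`, the one-hour tolerance and the helper `check_license` are all hypothetical. The signature verification with the OpenSSL public key is not shown; without it, anyone can edit or delete these values.

```python
import configparser
from datetime import datetime, timedelta, timezone

SECTION = "license"  # hypothetical section name inside the app's .ini file


def check_license(ini_path, now=None, tolerance=timedelta(hours=1)):
    """Return (ok, reason); on success, record 'now' as last_seen.

    Hypothetical keys in the [license] section (timezone-aware ISO 8601):
      expires   = end of the evaluation period
      last_seen = time of the latest successful start (written by this function)
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cfg = configparser.ConfigParser()
    if not cfg.read(ini_path) or not cfg.has_option(SECTION, "expires"):
        return False, "license data missing"
    expires = datetime.fromisoformat(cfg.get(SECTION, "expires"))
    last_seen = datetime.fromisoformat(
        cfg.get(SECTION, "last_seen", fallback=now.isoformat()))
    # A clock noticeably earlier than the last recorded start is suspicious;
    # the tolerance absorbs small corrections such as NTP adjustments.
    if now + tolerance < last_seen:
        return False, "system clock moved backwards"
    if now >= expires:
        return False, "license expired"
    # Remember this start so a later rollback can be detected.
    cfg.set(SECTION, "last_seen", now.isoformat())
    with open(ini_path, "w") as f:
        cfg.write(f)
    return True, "ok"
```

Deleting `last_seen` silently resets the rollback check in this sketch, which is one more reason the stored values would need to be covered by the signature.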

-

Hi, I'm looking to license an application that will run exclusively on offline systems. I came across the "Third Party Licensing & Activation Toolkit API" available through JKI VIPM, but from what I understand, it requires an online activation process, which wouldn't work for my use case. Are there any other libraries or toolkits you’d recommend? I also checked out Build, License, Track from Studio Bods, but I’m not clear on what the free tier offers, or if it's even available. I realize the irony of asking for a free toolkit to license an application! However, I’m not looking to profit from this. I simply want to protect an application that I need to provide to a potential industrial partner while we finalize our collaboration. Unfortunately, I have to hand over the executable, but I want to ensure the application won’t run indefinitely if the partnership doesn't go through. Thanks, Marco.

-

Optimization of reshape 1d array to 4 2d arrays function

Bruniii replied to Bruniii's topic in LabVIEW General

Thank you for the note about the compiler and the need to "use" all the outputs. I know that, but I forgot when writing this specific VI. Regarding the GPU toolkit: it's the one I read about in the past. In the "documentation", NI writes: https://www.ni.com/docs/en-US/bundle/labview-gpu-analysis-toolkit-api-ref/page/lvgpu/lvgpu.html

And, for example, I found the following topic on the NI forum: https://forums.ni.com/t5/GPU-Computing/Need-Help-on-Customizing-GPU-Computing-Using-the-LabVIEW-GPU/td-p/3395649 where it looks like a custom DLL implementing the specific operations needed is required.

-

Thanks for trying! How "easy" is it to use GPUs in LabVIEW for this type of operation? I remember reading that I'm supposed to write the code in C++ using the CUDA API, compile the DLL, and then use the LabVIEW toolkit to call the DLL. Unfortunately, I have zero knowledge of basically all of these steps.

-

Sure, the attached VI contains, inside a sequence structure, the generation of a sample 1D array that simulates the 4 channels, M measures and N samples, plus the latest version of the code that reshapes it. Attachment: test_reshape.vi

-

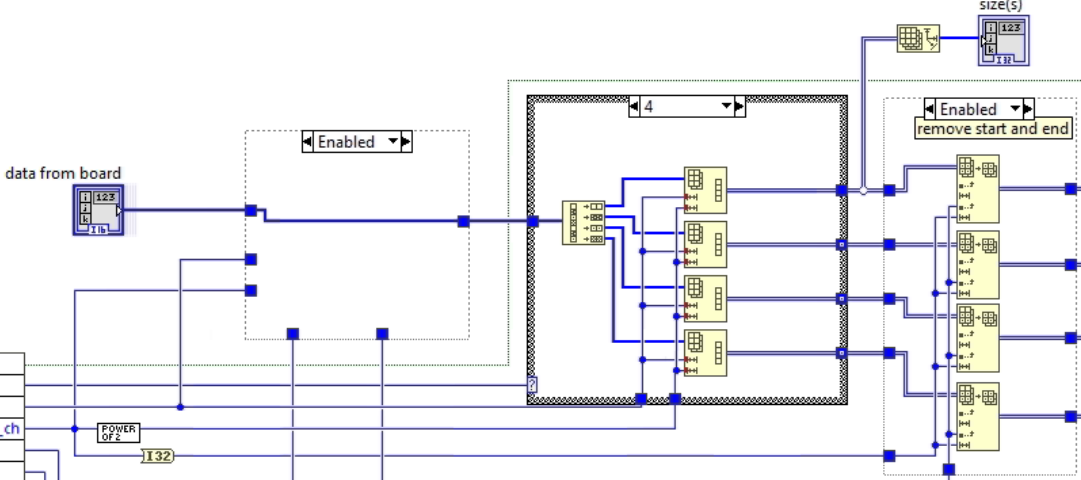

I no longer have a "clean" VI, but the very first implementation was based on the Decimate 1D Array function (I guess you are referring to decimate and not interleave), and it was slower than the other two solutions.
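For comparison, the decimate-based approach mentioned above can be sketched in Python (the function name and the list-of-rows output are illustrative assumptions; this shows the index logic, not LabVIEW performance): first split the interleaved stream into one stream per channel, the equivalent of decimating by 4, then cut each channel stream into M rows of N samples and keep only samples X to N-Z-1 of each row.

```python
def reshape_by_decimation(data, n_ch, m, n, x, z):
    """Split an interleaved stream into n_ch 2D arrays (lists of rows).

    Assumed layout: data[(measure * n + sample) * n_ch + channel]
    Returns one list per channel, each with m rows of n - x - z samples.
    """
    if len(data) != n_ch * m * n:
        raise ValueError("data length must be n_ch * m * n")
    out = []
    for ch in range(n_ch):
        stream = data[ch::n_ch]  # step 1: "decimate", every n_ch-th sample
        # steps 2 and 3: cut into rows of n samples and trim x / z per row
        rows = [stream[r * n + x : r * n + n - z] for r in range(m)]
        out.append(rows)
    return out
```

One plausible reason for the slowdown: step 1 copies every sample, including the X + Z samples per row that step 3 throws away (with the typical X = 200 and Z = 30000, almost half of each row).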

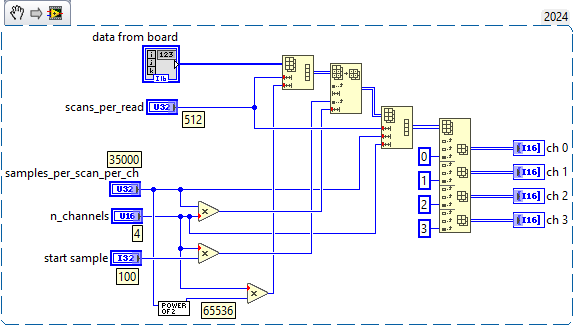

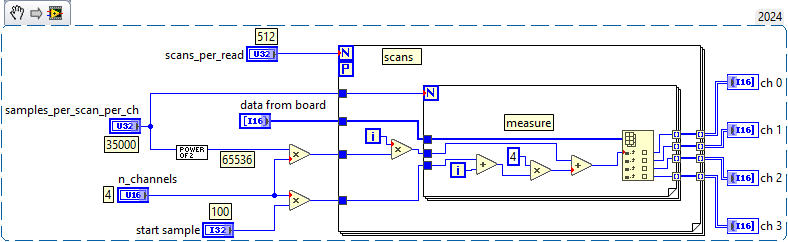

Hi all, I'm looking for help to make a function as fast as possible. It reshapes a 1D array coming from a 4-channel acquisition board into four 2D arrays. The input array is:

Ch0_0_0 - Ch1_0_0 - Ch2_0_0 - Ch3_0_0 - Ch0_1_0 - Ch1_1_0 - Ch2_1_0 - Ch3_1_0 - ... - Ch0_N_0 - Ch1_N_0 - Ch2_N_0 - Ch3_N_0 - Ch0_0_1 - Ch1_0_1 - Ch2_0_1 - Ch3_0_1 - Ch0_1_1 - Ch1_1_1 - Ch2_1_1 - Ch3_1_1 - ... - Ch0_N_M - Ch1_N_M - Ch2_N_M - Ch3_N_M

Basically, the array is the stream of samples from 4 channels over M measures, with N samples per channel per measure: first the first sample of each channel of the first measure, then the second sample of each channel, and so on.

Additionally, I need to remove the first X samples and the last Z samples of each measure for each channel (I get N samples from the board, but I only care about samples X to N-Z, for each channel and measure). The board can only be configured with a power-of-2 number of samples per measure, so there is no way to receive only the desired length from the board. The end goal is to have four 2D arrays (one per channel), each with M rows and N-(X+Z) columns. The typical length of the input 1D array is 4 channels * M=512 measures * N=65536 samples per channel per measure; typical values are X = 200 and Z = 30000.

Originally I tried one implementation, and then a second one, which is faster (screenshots attached). Still, every millisecond gained will help, and I'm sure that an expert here can achieve the same result with a single, super-efficient function. The function will run on a 32-core Intel i9 CPU. Thanks! Marco.
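One way to reason about the layout described above: sample n of channel c in measure m sits at index (m*N + n)*4 + c of the input array, so each output row is a single strided slice. It starts at (m*N + X)*4 + c and takes every 4th element, N - X - Z times. The Python sketch below (hypothetical function name, plain lists instead of LabVIEW arrays) applies that formula directly, so the discarded samples are never copied. It runs sequentially; because every (measure, channel) slice is independent, the loop over measures is the natural place to split the work across cores, but that is an untested assumption here.

```python
def extract_channels(data, n_ch, m, n, x, z):
    """Return n_ch lists of m rows, each row holding samples x .. n-z-1.

    Assumed index of (measure mi, sample s, channel c): (mi * n + s) * n_ch + c
    """
    if len(data) != n_ch * m * n:
        raise ValueError("data length must be n_ch * m * n")
    keep = n - x - z
    if keep <= 0:
        raise ValueError("x + z must be smaller than n")
    channels = [[None] * m for _ in range(n_ch)]
    for mi in range(m):
        base = (mi * n + x) * n_ch  # first kept sample group of this measure
        stop = base + keep * n_ch   # one past the last kept sample group
        for c in range(n_ch):
            # every n_ch-th element, starting at this channel's offset
            channels[c][mi] = data[base + c : stop : n_ch]
    return channels
```

With the typical values from the post (M = 512, N = 65536, X = 200, Z = 30000), each row keeps 65536 - 200 - 30000 = 35336 samples. Each channel's 2D array therefore holds 512 * 35336 = 18,092,032 values, and the four outputs together hold roughly 54% of the 134,217,728 input samples.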

-

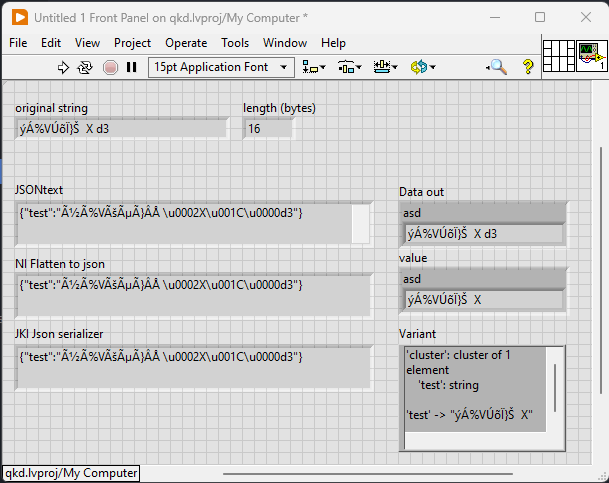

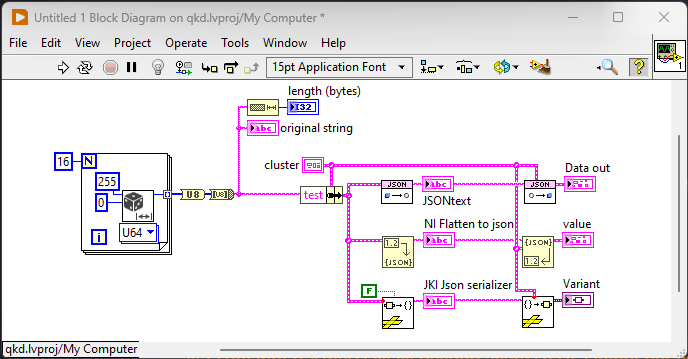

Convert to JSON string a string from "Byte Array to String"

Bruniii replied to Bruniii's topic in LabVIEW General

Do you mean 'orig' and 'json_string'? The second one, json_string, is the string that the Python API receives from LabVIEW. The LabVIEW VI in my previous screenshot is a minimal example, but imagine that the JSON string created by LabVIEW is transmitted to the Python API. The Python code is, again, a minimal example, where 'json_string' is the string received from LabVIEW through the API. When I convert the JSON string in Python, the string in convert['test'] is not equal to `orig`, the original string in the LabVIEW VI. By contrast, converting the same JSON string in LabVIEW returns the original string.

-

Dear all, I have to transmit a JSON message containing a string of chars to an external REST API (in Python or JavaScript). In LabVIEW I have an array of uint8, converted to a string with "Byte Array to String.vi". This string has the expected length (equal to the length of the uint8 array). When I convert a cluster with this string in the field "asd" to a JSON string, the resulting string in the field "asd" is 1. different and 2. longer. This happens with three different conversion functions (NI's Flatten To JSON, the JSONtext package and the JKI JSON serializer). If I convert the JSON string back to a cluster in LabVIEW, the resulting string in "asd" is again equal to the original one, with the same number of chars. So everything is fine in LabVIEW. But the following Python code, for example, doesn't return the original string:

```python
import json

orig = r'ýÁ%VÚõÏ}ŠX'
json_string = r'{"test":"ýÃ%VÚõÃ}ÂÅ \u0002X\u001C\u0000d3"}'
convert = json.loads(json_string)
print(convert['test'])          # prints ýÃ%VÚõÃ}ÂÅ Xd3
print(convert['test'] == orig)  # prints False
```

Can anyone help me or suggest a different approach? Thank you, Marco.
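One commonly used way to send arbitrary bytes inside JSON is sketched below in Python. It is an assumption, not a confirmed fix for this case. JSON strings are Unicode text, while a string built with Byte Array to String is just raw bytes. Bytes above 0x7F and control bytes can therefore be re-encoded by the serializer (the output above looks consistent with a UTF-8 re-encoding) and no longer map one-to-one to the original values on the receiving side. Encoding the bytes as Base64, which is plain ASCII, before building the JSON avoids that ambiguity. The field name `test` comes from the example above; the helper names are hypothetical, and the LabVIEW side (Base64-encoding the byte array before flattening the cluster) is not shown.

```python
import base64
import json


def bytes_to_json(payload: bytes) -> str:
    """Sender side (stands in for LabVIEW): wrap raw bytes as Base64 text."""
    return json.dumps({"test": base64.b64encode(payload).decode("ascii")})


def json_to_bytes(message: str) -> bytes:
    """Receiver side (the Python API): recover the exact original bytes."""
    return base64.b64decode(json.loads(message)["test"])


if __name__ == "__main__":
    original = bytes([0xFD, 0xC1, 0x25, 0x56, 0x00, 0x02, 0x1C, 0x8A, 0xFF])
    message = bytes_to_json(original)
    print(message)
    print(json_to_bytes(message) == original)  # True: every byte survives
```

The cost is about 33% more characters on the wire, in exchange for a payload that survives any JSON library unchanged.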

-

...Maybe?! It works, but will it help? I guess I will have to wait another year, statistically... but thank you! It's the hardware watchdog of the industrial PC, and I have no control over the kick/reset/timeout, so there is no way to perform any operation before the restart.

-

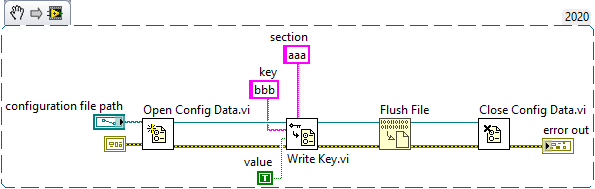

To be clear, I'm not at all sure that Flush File will solve the file corruption in the main application; but NI says that Flush File is the "main recommendation" in this situation, so I thought that adding this simple, small function would be super easy...

-

Hi Neil, thank you. In the main application the INI file is closed immediately after the write, as you do. Still, three times in two years of operation, after a restart of the PC (caused by a watchdog outside my control), the INI file was corrupted, full of NULL chars. Googling for help, I found the official NI support page I linked, which describes exactly these events and suggests using Flush File. But, as you said, the refnum from the INI library doesn't look compatible with the refnum input of the Flush File function... and I'm stuck.
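For reference, here is a Python sketch of the usual pattern for making a small settings file survive an unexpected restart. It is not LabVIEW code and not what the NI article prescribes. The complete new content is written to a temporary file in the same folder, flushed and forced to disk, and then renamed over the old file. Flush File plays roughly the role of `flush()`/`os.fsync()` here. The temporary-file-plus-rename step is an additional technique, swapped in as an assumption, so that a restart should leave either the old file or the new one, never a half-written one. The function name `save_ini_atomically` is hypothetical.

```python
import configparser
import os
import tempfile


def save_ini_atomically(cfg: configparser.ConfigParser, path: str) -> None:
    """Write cfg to path so that a crash leaves either the old or the new file."""
    folder = os.path.dirname(os.path.abspath(path))
    # The temp file must be on the same volume for the rename to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=folder, prefix=".tmp_", suffix=".ini")
    try:
        with os.fdopen(fd, "w") as f:
            cfg.write(f)
            f.flush()             # push Python's buffer to the OS
            os.fsync(f.fileno())  # ask the OS to push its cache to the disk
        os.replace(tmp_path, path)  # replace the old file in one step
    except BaseException:
        os.unlink(tmp_path)  # never leave a stray temp file behind
        raise
```

On POSIX systems a fully durable rename also needs the containing directory to be fsynced; that step is omitted here.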

-

Hi, in a larger application, small INI files sometimes get corrupted (a lot of NULL chars and nothing else inside), and it looks like this happens when the computer restarts while the application is running. I found this NI page: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA03q000001DwgyCAC&l=it-IT where the use of "Flush File.vi" is suggested. But a very minimal and simple test like this one always returns Error 1, and I really cannot see what I'm supposed to do differently. It's probably something VERY stupid. Can anyone here help me? Thank you, Marco.