
Posts posted by Bhaskar Peesapati


> LogMAN said:
>
> Doesn't that require the producer to know about its consumers?
>
> You don't. Previewing queues is a lossy operation. If you want lossless data transfer to multiple concurrent consumers, you need multiple queues or a single user event that is used by multiple consumers.
>
> If the data loop dequeues an element before the UI loop can get a copy, the UI loop simply has to wait for the next element. This shouldn't matter as long as the producer adds new elements fast enough. Note that I'm assuming a sampling rate above 100 Hz and a UI update rate somewhere between 10 and 20 Hz. For anything slower, previewing queue elements is not an optimal solution.

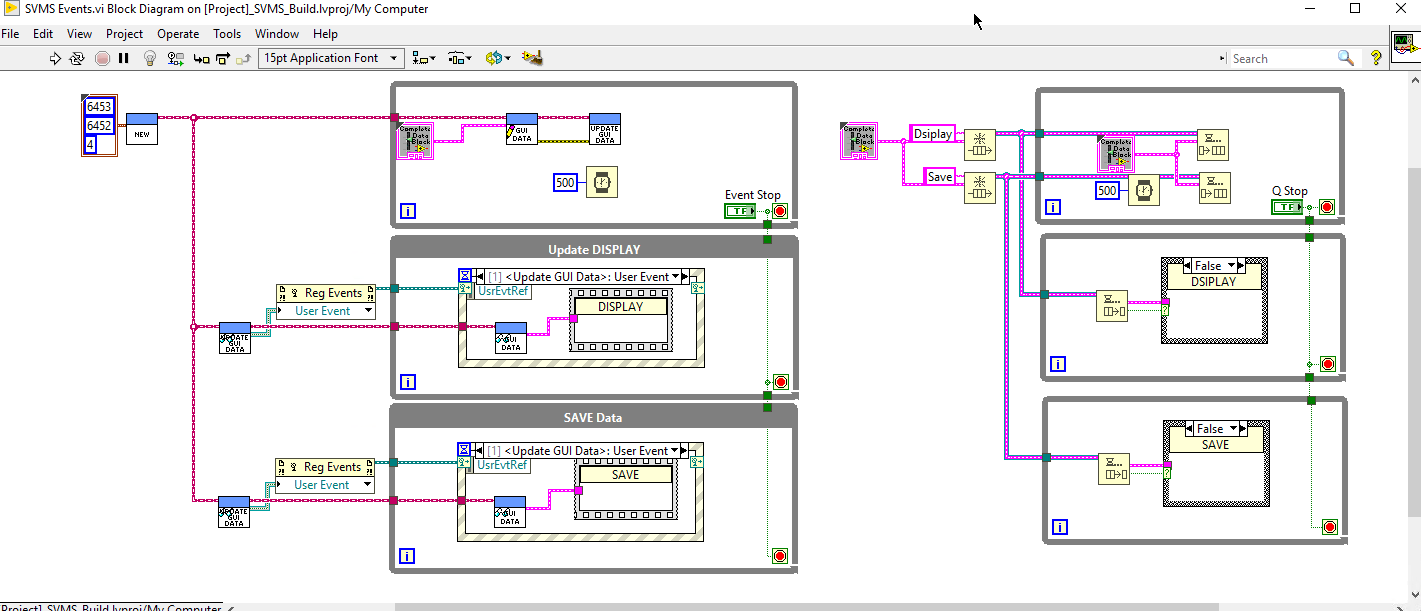

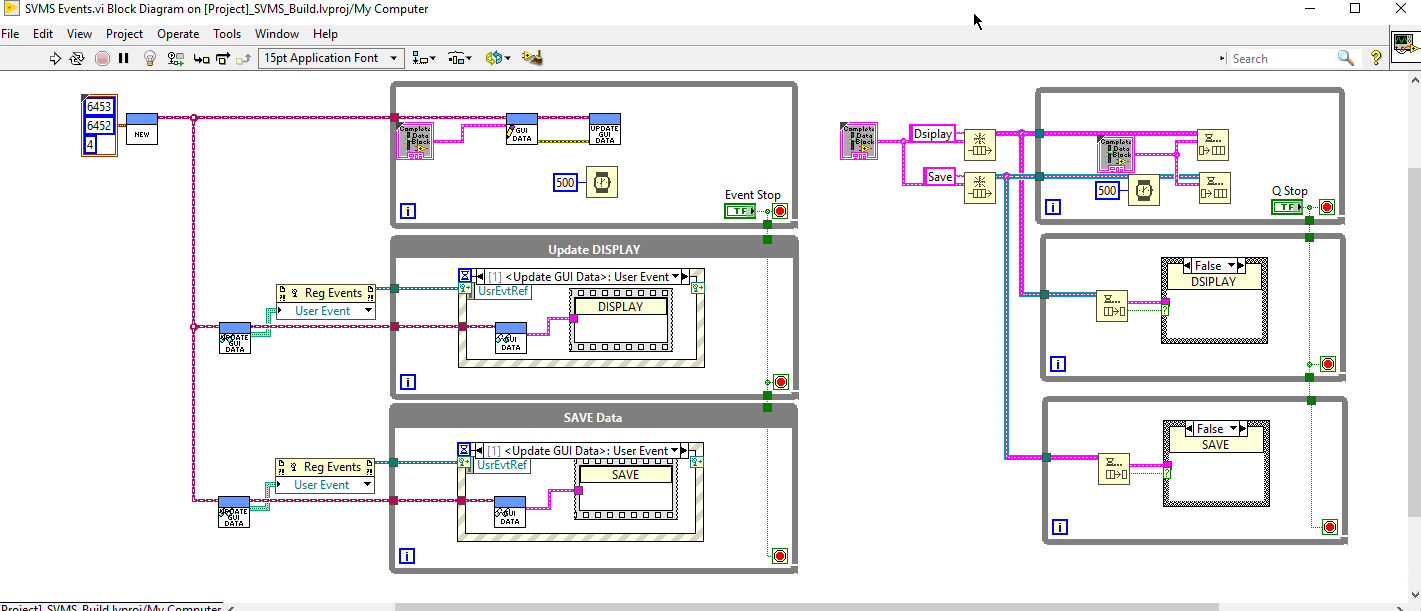
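A minimal Python sketch of the lossless option LogMAN describes (one queue per consumer) may make the trade-off concrete. Threads stand in for LabVIEW loops, and the names (`fan_out`, `consume`, `STOP`, the queue depth of 4) are illustrative assumptions, not taken from the original diagrams. The bounded queues also show one way to keep a slow consumer from using up memory: the producer blocks instead of letting that consumer's queue grow. Python queues store references, so this sketch does not model the per-consumer copy cost that LabVIEW queues may incur.

```python
import queue
import threading

STOP = object()  # hypothetical sentinel telling a consumer to exit


def consume(q, out):
    """Consumer loop: dequeue every block until the sentinel arrives."""
    while True:
        item = q.get()
        if item is STOP:
            break
        out.append(item)  # stand-in for display, TDMS logging, etc.


def fan_out(blocks, n_consumers, depth=4):
    """Lossless fan-out: one bounded queue per consumer.

    The producer enqueues each block into every queue, so every consumer
    sees every block in order. maxsize=depth caps memory: put() blocks
    when a consumer falls behind instead of letting its queue grow.
    """
    queues = [queue.Queue(maxsize=depth) for _ in range(n_consumers)]
    received = [[] for _ in range(n_consumers)]
    threads = [threading.Thread(target=consume, args=(q, out))
               for q, out in zip(queues, received)]
    for t in threads:
        t.start()
    for block in blocks:
        for q in queues:
            q.put(block)  # Python enqueues a reference, not a copy
    for q in queues:
        q.put(STOP)
    for t in threads:
        t.join()
    return received


received = fan_out(range(20), n_consumers=3)
print(all(r == list(range(20)) for r in received))  # True
```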

From this discussion, I understand the following. My requirement is that I process all data without loss, which means I need multiple queues. The data storage requirement then becomes 12 MB × the number of consumers. With a single DVR, it would be 12 MB of storage plus 1 byte to signal to the consuming events that new data is available.

It is true, as you mentioned, that if a consumer does not process data fast enough (as in a logging process), it could use up memory, and I need to guard against that. Even though events are not designed for data processing, their data storage requirement does not grow with the number of consumers: all consumers listen only for the 'NewDataAvailable' trigger, and all of them read from the same DVR.

I appreciate any corrections to my thinking. Thanks.
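The single-buffer idea can be sketched the same way, assuming Python threads for the consumer loops, a `threading.Condition` in place of the user event that signals new data, and one attribute in place of the DVR (all names hypothetical). For comparison, one queue per consumer, each holding its own copy of the block, needs about 12 MB × N for N consumers (36 MB for three), while one shared block stays at about 12 MB. The sketch adds one assumption not in the post: a `done()` handshake, so the producer only overwrites the buffer after every consumer has finished with the current block. Without some such handshake, a notification alone does not guarantee lossless processing, because a slow consumer can find the buffer already overwritten.

```python
import threading


class SharedBlock:
    """One shared buffer (stand-in for a single DVR) plus a 'new data' signal.

    Hypothetical sketch: the producer may only overwrite the buffer after
    every consumer has called done() for the current block.
    """

    def __init__(self, n_consumers):
        self._cond = threading.Condition()
        self._n = n_consumers
        self._data = None
        self._seq = 0        # bumped on each publish (the "new data" event)
        self._pending = 0    # consumers still working on the current block
        self._closed = False

    def publish(self, data):
        with self._cond:
            self._cond.wait_for(lambda: self._pending == 0)  # backpressure
            self._data = data
            self._seq += 1
            self._pending = self._n
            self._cond.notify_all()  # wake every waiting consumer

    def close(self):
        with self._cond:
            self._cond.wait_for(lambda: self._pending == 0)
            self._closed = True
            self._cond.notify_all()

    def read_next(self, last_seq):
        """Wait for a block newer than last_seq; return (seq, data), or None once closed."""
        with self._cond:
            self._cond.wait_for(lambda: self._seq > last_seq or self._closed)
            if self._seq > last_seq:
                return self._seq, self._data
            return None

    def done(self):
        """Release the current block; the last consumer to finish wakes the producer."""
        with self._cond:
            self._pending -= 1
            if self._pending == 0:
                self._cond.notify_all()


def consumer(shared, out):
    last = 0
    while (item := shared.read_next(last)) is not None:
        last, data = item
        out.append(data)  # process while the block is still held
        shared.done()


shared = SharedBlock(n_consumers=3)
results = [[] for _ in range(3)]
threads = [threading.Thread(target=consumer, args=(shared, r)) for r in results]
for t in threads:
    t.start()
for i in range(10):
    shared.publish(i)
shared.close()
for t in threads:
    t.join()
print(all(r == list(range(10)) for r in results))  # True
```

The cost of this design is that the slowest consumer sets the pace for all of them, which is the same backpressure the bounded queues impose, only shared across every consumer.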

---

Thank you very much for your reply. The Boolean wired to the stop terminal is only there to terminate the loop. The data is written to TDMS files. In reality, the different loops are independent. Essentially, what you are saying is that only one loop dequeues, and the other loops only examine the element and perform the necessary operations on it. That way, only one queue is necessary. The data block is in a DVR, so there is only one copy of the data block. In the case of events, the producer triggers an event to indicate that new data is present, and the consumers process the data. Is there a drawback to this? Again, thank you very much for your reply.
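The single-queue arrangement described here (one loop dequeues, the others only examine the element) can be modeled with a small Python class. `PreviewQueue`, `preview()` and `dequeue()` are hypothetical stand-ins for LabVIEW's Preview Queue Element and Dequeue Element, simplified so that both return `None` instead of waiting on an empty queue. The interleaving is written out sequentially so the outcome is deterministic; it shows the lossy behavior LogMAN mentions, where a previewing loop can miss an element that the dequeuing loop has already removed.

```python
import collections
import threading


class PreviewQueue:
    """Single queue with one dequeuing loop and any number of previewing loops.

    Simplified: dequeue() and preview() return None instead of waiting
    when the queue is empty.
    """

    def __init__(self):
        self._items = collections.deque()
        self._lock = threading.Lock()

    def enqueue(self, item):
        with self._lock:
            self._items.append(item)

    def dequeue(self):
        """Remove and return the oldest element (the data loop)."""
        with self._lock:
            return self._items.popleft() if self._items else None

    def preview(self):
        """Return the oldest element without removing it (UI or other loops)."""
        with self._lock:
            return self._items[0] if self._items else None


# One possible interleaving, written out sequentially so it is deterministic.
q = PreviewQueue()
ui_seen, data_seen = [], []
for block in (0, 1, 2):
    q.enqueue(block)
ui_seen.append(q.preview())    # UI loop sees block 0
data_seen.append(q.dequeue())  # data loop takes block 0
data_seen.append(q.dequeue())  # ...and block 1, before the UI loop looks again
ui_seen.append(q.preview())    # UI loop sees block 2; block 1 was never previewed
data_seen.append(q.dequeue())
print(ui_seen, data_seen)      # [0, 2] [0, 1, 2]
```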

---

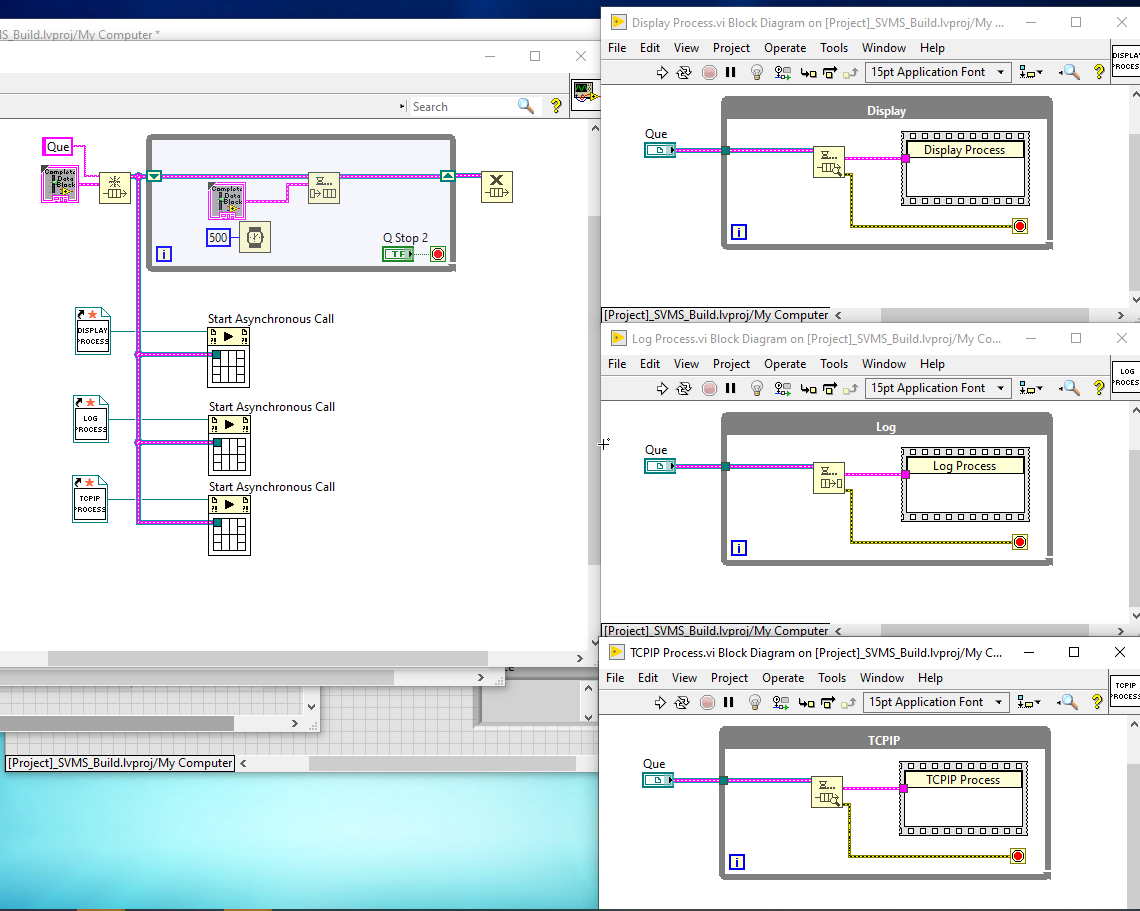

I have a design question. I am designing a system that transfers a large amount of data (about 12 MB every 500 ms) for display, saving, etc. Of the two choices shown in the image above, which one would be the better choice? Thanks in advance for all the advice.
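For scale, the rate in the question works out as follows, taking decimal units (1 GB = 1000 MB), which is an assumption since the post does not say:

$$
\frac{12\ \text{MB}}{0.5\ \text{s}} = 24\ \text{MB/s},
\qquad
24\ \text{MB/s} \times 3600\ \text{s/h} = 86\,400\ \text{MB/h} \approx 86.4\ \text{GB/h}
$$

If every block is saved, that is roughly 86 GB of raw data per hour before any file overhead.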

Events or Queues

in Application Design & Architecture


It looks like you do not like to use DVRs. I read and write the DVR only as a property of a class, in the following way. Do you still think I might run into problems? If so, what do you suspect? The write (SET) happens in only one place.
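The post does not show the accessor code in text, so the following Python sketch assumes the simplest version of what it describes: a class that holds its data block behind one lock (standing in for the DVR), a single writer, and two hypothetical ways to read. `get_copy()` hands each reader its own copy, which in this sketch duplicates the block on every read; `process_in_place()` runs the reader's work while holding the lock, roughly like working inside an In Place Element structure, which avoids the copy but blocks the writer for as long as the reader takes.

```python
import threading


class DataStore:
    """Hypothetical class whose data block sits behind one lock (DVR stand-in)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._block = []

    def set_block(self, block):
        with self._lock:  # the single place that writes
            self._block = list(block)

    def get_copy(self):
        with self._lock:  # reader gets its own copy of the whole block
            return list(self._block)

    def process_in_place(self, fn):
        with self._lock:  # reader works on the shared block, no copy
            return fn(self._block)


store = DataStore()
store.set_block(range(5))
snapshot = store.get_copy()
total = store.process_in_place(sum)
print(snapshot, total)  # [0, 1, 2, 3, 4] 10
```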