manigreatus

Everything posted by manigreatus

-

Remotely launching GOOP objects on a cRIO

manigreatus replied to manigreatus's topic in Object-Oriented Programming

Hi, it seems there is very little interest in NI-GDS... I found out that in LabVIEW 2020 dynamic member VIs are not supported by the Call By Reference node. Regards!

-

Hi, I developed a centralized HIL system based on NI-GDS (EndevoGOOP400->Simple DVR template), but the client's new requirements now require me to make it a distributed system by adding cRIOs. Previously I launched all the processes, with their corresponding I/Os, on the same system, and I made extensive use of dynamic dispatching. In short, I have interface classes that in turn load different child objects, but now I want to launch these processes on different cRIOs.

Is it a good idea to launch all the objects on different cRIOs using VI Server, and to talk to those objects from one cRIO using VI Server? If I have loaded an object and want to call its members, how should I do it? Do I need to launch Create.vi remotely, open a reference to the object, keep that object reference locally, and use it when calling the members of that object remotely?

What does the following statement mean? "4.When you no longer need the VI to run on the Real Time target, close the VI reference, then the LabVIEW application reference. Note that the dynamically called VI can only run on the real time target while the reference remains open, and that closing the reference will stop the VI on the Real Time target." It can be found here: https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000PAhpSAG&l=sv-SE

When you make a source distribution of, say, 20 classes and deploy it on a Linux-RT cRIO, and then launch, say, 50 objects from 12 of the deployed classes, will the remaining 8 deployed classes be in RAM too? Thanks!

-

I am working on a cRIO-based application in which I am using some SEQs, and these SEQs are used within different parallel processes... Is there any difference, in terms of processing, between keeping the SEQ's reference in a shift register and obtaining the SEQ reference (using a name and the "Obtain Queue" function) each time it is used? Thanks for any comments.
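LabVIEW diagrams cannot be pasted as text, so here is a rough Python analogy of the two patterns being compared. It is only a sketch: `QueueRegistry`, `update_by_name` and `update_cached` are hypothetical names, the registry is a stand-in for a name-to-queue lookup table and not an NI API, and it says nothing about actual cRIO timing. It only shows where the extra work sits: looking up by name on every use repeats a locked table lookup, while the cached reference (the "shift register") pays for it once.

```python
import queue
import threading


class QueueRegistry:
    """Hypothetical name -> queue table (an analogy, not LabVIEW's implementation)."""

    def __init__(self):
        self._queues = {}
        self._lock = threading.Lock()
        self.lookups = 0  # counts how often a name had to be resolved

    def obtain(self, name):
        """Return the queue registered under `name`, creating it on first use."""
        with self._lock:
            self.lookups += 1
            if name not in self._queues:
                # maxsize=1 mimics a single-element queue (SEQ)
                self._queues[name] = queue.Queue(maxsize=1)
            return self._queues[name]


def increment(seq):
    """Typical SEQ access: take the element out, modify it, put it back."""
    value = seq.get()
    seq.put(value + 1)


def update_by_name(registry, name, iterations):
    """Pattern A: resolve the name every time the SEQ is used."""
    for _ in range(iterations):
        increment(registry.obtain(name))


def update_cached(registry, name, iterations):
    """Pattern B: resolve the name once and reuse the reference in the loop."""
    seq = registry.obtain(name)
    for _ in range(iterations):
        increment(seq)
```

Both functions leave the same data in the queue; the only difference is how many lookups the registry performs. In LabVIEW there is one more thing to keep in mind with pattern A: as far as I know, each call to "Obtain Queue" should be paired with a "Release Queue", otherwise references accumulate.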

-

Organizing Actors for a hardware interface

manigreatus replied to Mike Le's topic in Object-Oriented Programming

I also agree with this, because in my experience with non-LVOOP code it simplifies debugging and design. But what if you have a HAL.lvclass being stimulated by that "HW process" and you don't want to fork the wire? And when you want to stream more than one channel up to the GUI/Controller simultaneously, what should be done? I am relating this to my HAL.lvclass: http://lavag.org/topic/16510-dvrs-within-classes/

-

Thanks all for your invaluable comments! Now I am almost convinced not to have DVRs for my driver objects, but one thing is still on my mind: the HAL wire is going to be heavy anyway, so won't DVRs do better in that case? For me, DVRs make it easy to play around with arrays, since you don't have data copies. The example shown was a non-reentrant VI being called from different parallel processes. Like you, I saw no reason why anything should go wrong without DVRs, but the reality was different. Probably I had some other issue, but it was resolved when I used DVRs. I will try to re-create the error when I get some time.

-

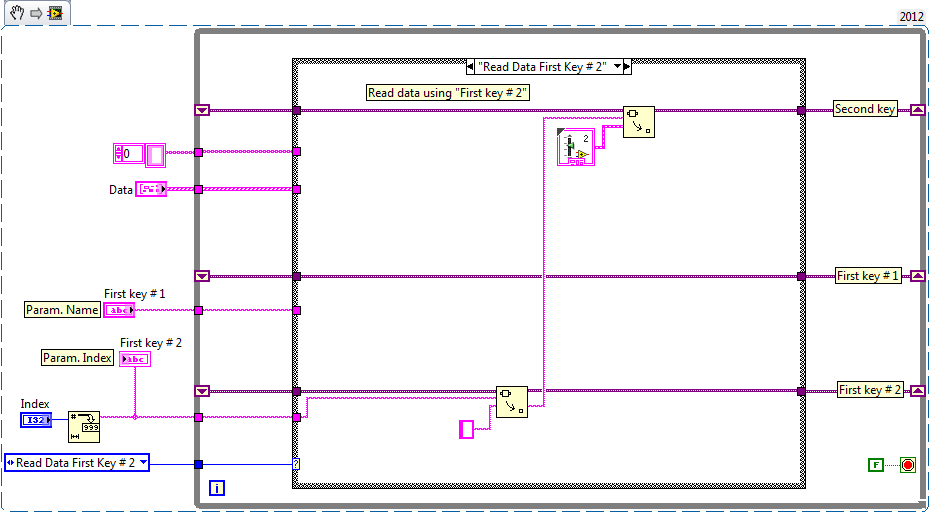

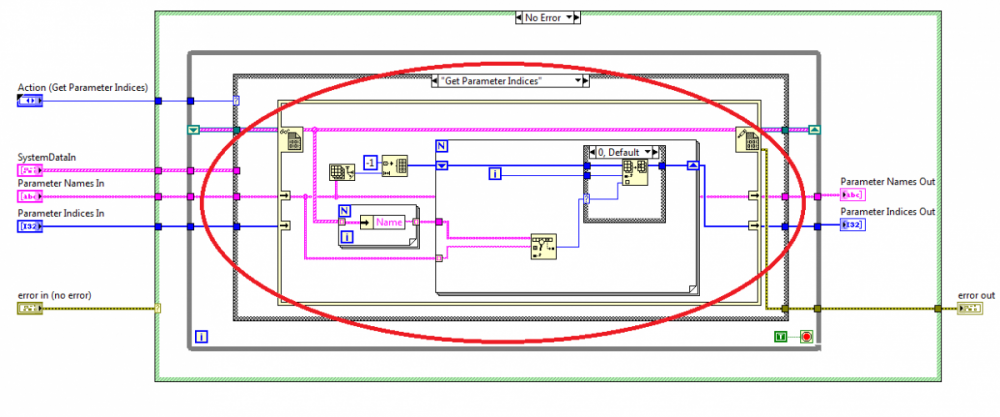

I did consider that but couldn't utilize them. It works very nicely when you always look up against the same type of key, say when you look up a 'data-cluster' against a 'name string', but what if you want to look up the same 'data-cluster' against another type of key?
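One common way to handle the "same record, two key types" case is to store each record once and keep a separate index per key type, with both indexes holding the record's position. The Python sketch below only illustrates that idea; `Channel`, `ChannelTable` and the field names are made up for the example and do not come from the code in this thread. Storing positions instead of records mirrors what you would do in a by-value language: the indexes stay small and the data is not duplicated.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """Hypothetical 'data-cluster' with two possible lookup keys."""
    name: str        # string key
    channel_id: int  # numeric key
    scale: float


class ChannelTable:
    """Stores each record once; every key type gets its own index."""

    def __init__(self):
        self._records = []   # the single copy of the data
        self._by_name = {}   # name -> position in _records
        self._by_id = {}     # channel_id -> position in _records

    def add(self, channel):
        position = len(self._records)
        self._records.append(channel)
        self._by_name[channel.name] = position
        self._by_id[channel.channel_id] = position

    def by_name(self, name):
        return self._records[self._by_name[name]]

    def by_id(self, channel_id):
        return self._records[self._by_id[channel_id]]
```

The cost is that `add` (and any delete or rename) has to keep both indexes in step, which is why it helps to hide them behind one small API like this.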

-

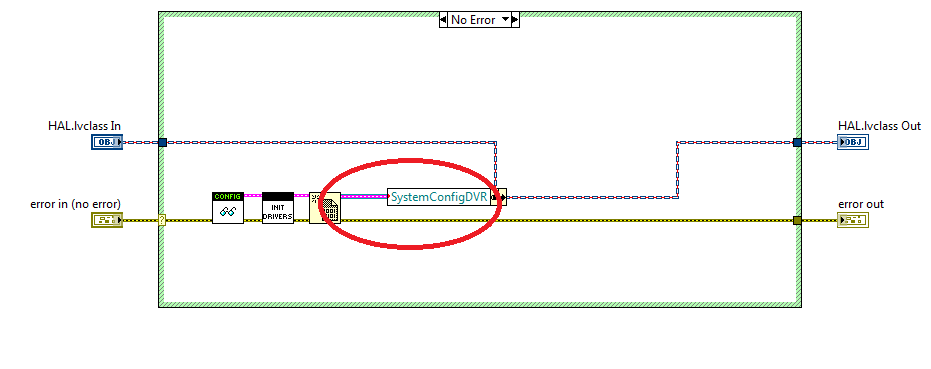

Yes, you are right, but just look at the code inside the DVR, because of which I had to use a DVR (which was not part of my design).

-

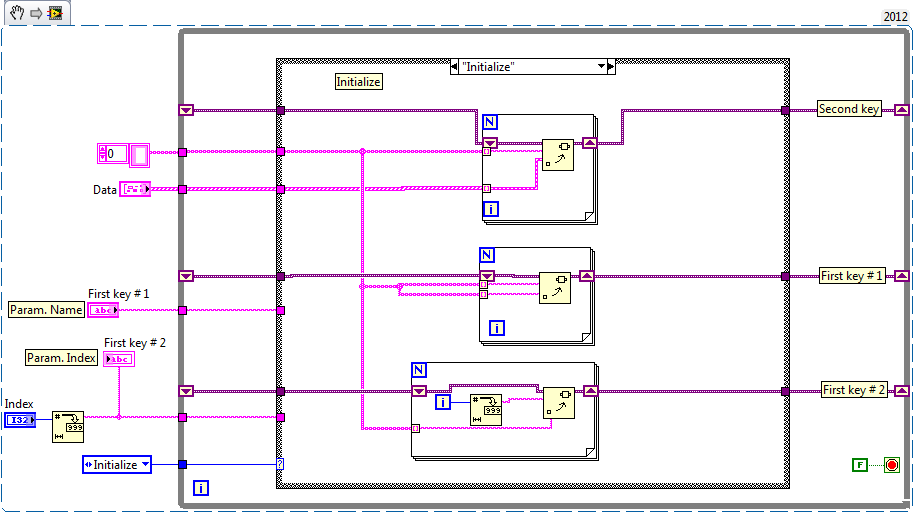

The reason why I chose this practice is a recent experience with a simple LV action engine being used in a GUI-based application with different parallel processes. The application hung when I tried to look up different parameters in the configuration, and the issue was resolved by using DVRs, as shown in the attached image. In that case I had just 100 I/Os with scaling info, and in the case of the HAL I might end up with more than 200 I/Os including scaling info, plus calibration (a separate object) as well. It seems a very valid point to replace the heavy HAL wire with just a 4-byte DVR ref. Currently I have no intention of branching; if I need to, I would go with the Actor approach or simply have an independent process.

-

Is it good practice to keep objects as DVRs within another object, as shown below? In this case I am loading different drivers within the HAL, and I have no intention of bypassing dataflow by using DVRs; rather, I want the HAL to use less memory.

-

Is LabVIEW Object Oriented or Actor Oriented?

manigreatus replied to manigreatus's topic in Object-Oriented Programming

Not necessarily, but it can be utilized to achieve the actor-oriented design.

-

Is LabVIEW Object Oriented or Actor Oriented?

manigreatus replied to manigreatus's topic in Object-Oriented Programming

By "Actor VI" I didn't mean a single VI; of course a single VI can't do the job that efficiently. What I meant was some type of file like .lvclass (say .lvactor) that would hold everything (messages, functions, etc.) related to that actor. Currently you define an actor with one .lvclass, and the messages to it are other .lvclass files; why don't we combine these? I mean, if you are using actors then these should be actors, not objects. Anyway, it would be almost the same thing as what we currently have; I thought that if we abstracted the OO part from actors it would be easier to play with them.

-

Is LabVIEW Object Oriented or Actor Oriented?

manigreatus replied to manigreatus's topic in Object-Oriented Programming

Thanks for your explanation. But I see a VI/module in LabVIEW as an actor, which implies that LabVIEW is inherently actor-oriented (AO). We can implement an actor even without using VI Server and queues, just by having a while loop and a local. For me one of the most attractive things in LabVIEW is its simplicity: you can design complex systems even without knowing the programming paradigms (say AO), and that's the beauty. I think in Actor Framework things have been made pretty complex by mixing OO and AO. At this point I have a question: does Actor Framework reflect what is described here: http://ptolemy.eecs.berkeley.edu/publications/papers/07/classesandInheritance/Classes_Final_LeeLiuNeuendor.pdf

For advanced users it's really a nice idea to introduce two different programming paradigms and then show how they can be combined, but for average users it is complex. Apparently we needed "inheritance" and "encapsulation" from OO in AO, so why not introduce something like an "Actor VI" and associate encapsulation, inheritance, etc. with that, without having a .lvclass? In this way we could abstract the OO stuff from the design, since in Actor Framework one needs to keep track of the classes and also the actors, which makes it complex. Please give your suggestions and correct me if I am wrong, since my goal is just to keep LabVIEW simple but powerful.

-
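To make the "while loop and a local" idea concrete outside of a block diagram, here is a minimal Python sketch of such an actor. It is an assumption-laden analogy, not Actor Framework and not LabVIEW code: `LoopActor`, `post` and the single `_slot` variable (standing in for the local) are invented for the example, and a thread stands in for the parallel loop.

```python
import threading
import time


class LoopActor:
    """Hypothetical actor: private state, one loop, one shared 'local' slot."""

    def __init__(self):
        self._slot = None            # stands in for the LabVIEW local variable
        self._lock = threading.Lock()
        self.total = 0               # state that only the loop modifies
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def post(self, message):
        """Write a message into the slot, waiting until the slot is free."""
        while True:
            with self._lock:
                if self._slot is None:
                    self._slot = message
                    return
            time.sleep(0.001)

    def _run(self):
        """The actor's while loop: poll the slot and react to messages."""
        while True:
            with self._lock:
                message, self._slot = self._slot, None
            if message is None:
                time.sleep(0.001)    # nothing to do, poll again
                continue
            if message == "stop":
                break
            self.total += message    # numeric messages are added to the state

    def join(self):
        """Wait for the loop to finish after a "stop" message."""
        self._thread.join()
```

Usage would be `actor = LoopActor()`, a few `actor.post(n)` calls, then `actor.post("stop")` and `actor.join()`. Note that the sender has to wait for the slot to be empty, otherwise messages get overwritten; that waiting effectively turns the slot into a one-element queue, which is one reason queue-based messaging is the usual choice.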

Is LabVIEW Object Oriented or Actor Oriented?

manigreatus replied to manigreatus's topic in Object-Oriented Programming

Thanks all for your replies. But my understanding is that actor-orientation and dataflow do not fall into the same category: actor-orientation is a design notion and dataflow is a Model of Computation (MoC). This is almost the same as what James wrote: "The model of computation is how the software executes. In 'G' this is the dataflow paradigm. I would suggest that OOP or Actor oriented programming is a higher level than that. It is a means of design rather than execution and so we can use OOP with dataflow or other paradigms." If you dig deeper into the definition of actor-oriented design, you can design heterogeneous systems by utilizing different MoCs, not just dataflow. It seems a very good explanation, but I still have the same point as in my first post: why, within one design notion (i.e. actor-orientation), did you need another one (i.e. object-orientation)? Please correct me if I am wrong, since I am an engineer too, not a computer scientist.

-

This is my first post on LAVA, although I have been using LabVIEW for five years. I thought the LVOOP forum on LAVA was the best place to ask this question, so here it is. I have been considering the Models of Computation (MoC) in LabVIEW: it is based on actor-oriented design that utilizes the dataflow MoC, and so on. But I couldn't answer for myself why the object-oriented programming paradigm was required in an actor-oriented design. Either we are pushing OOP into LabVIEW because of our ties to OOP-based languages, or LabVIEW is not actually based on the actor-oriented programming paradigm, or I simply don't understand it. If LabVIEW is based on actor-oriented design, then shouldn't we exploit actor-oriented design principles? Note: this post is not related to the Actor Oriented Design Pattern available in LV 2012. Regards, Imran, Certified LabVIEW Developer