Posts posted by Thoric

-

-

James, the code works in development mode, so using the probe (assuming it works on RT) will show no issues.

It's only once built into an executable and deployed that the problem occurs. Once built, I don't see how I can use probes :-(

So, the first thing I tried was a delay between registering for notifications, and sending the first message. This fixed it. So there's a race condition. Basically I can assume that the registration request is performed asynchronously and is not complete before the subactor sends back a message in response to the "Deploy_FPGA" message.

James - how can I ensure a registration request is complete before the subactor sends a notification?
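One generic way to close this kind of race is to have the registration acknowledged before the triggering message is sent. The following is only a minimal Python sketch of that idea, with threads and queues standing in for actors. It is not the Messenger Library API: `SubActor` and `register_and_wait` are hypothetical names, and the assumption that registration and ordinary messages travel on separate paths is a guess that matches the symptoms described above.

```python
import queue
import threading


class SubActor:
    """Toy actor: registrations and messages are handled by separate loops,
    so their relative order is not guaranteed (assumed, to mimic the race)."""

    def __init__(self):
        self.inbox = queue.Queue()
        self.registrations = queue.Queue()
        self._observers = []
        self._lock = threading.Lock()
        threading.Thread(target=self._registration_loop, daemon=True).start()
        threading.Thread(target=self._message_loop, daemon=True).start()

    def _registration_loop(self):
        while True:
            observer_queue, ack = self.registrations.get()
            with self._lock:
                self._observers.append(observer_queue)
            ack.set()  # confirm that the observer is now in place

    def _message_loop(self):
        while True:
            message = self.inbox.get()
            if message == "Deploy_FPGA":
                with self._lock:
                    observers = list(self._observers)
                for observer in observers:
                    observer.put("FPGA deployed")


def register_and_wait(actor, observer_queue, timeout=2.0):
    """Request registration and block until the actor acknowledges it."""
    ack = threading.Event()
    actor.registrations.put((observer_queue, ack))
    if not ack.wait(timeout):
        raise TimeoutError("registration was not acknowledged")


actor = SubActor()
notifications = queue.Queue()
register_and_wait(actor, notifications)  # returns only once registered
actor.inbox.put("Deploy_FPGA")           # safe to trigger the reply now
result = notifications.get(timeout=2.0)
```

Unlike a fixed delay, the acknowledgement does not depend on how long the registration happens to take on a given target.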

-

Errors are captured in the final "" state, so if there was an error it would be sent to the caller (Main) where I log all errors. I don't see any such error. Also, no TCP usage.

In the meantime I quickly created a new project with two actors, one calling the other, and proved:

1. The Self:Initialise state cannot send a notification to the caller - it won't receive it (you knew this, bug 13)

2. Sending a message to the subactor directly after registering for notifications, which gets the subactor to send a reply, does work. But it didn't work in my actual project, only in the test code. So there's either a race condition, timing error, or something else different.

I'll look into this more tomorrow.

-

-

I should add that this is in LabVIEW Real-Time 2017 SP1.

When launched from the IDE it works fine, but when compiled and deployed as an RTEXE that's when I see this issue.

-

I'm returning to some Messenger work after a short break, which might explain why I can't see what's wrong with my Messenger Actor based project.

Right now I have a Main actor which is responsible for launching a number of sub-actors. One of these is the FPGA actor. Directly after the launcher I register for all notifications.

Within the FPGA actor, the "Self: Initialize" message runs a number of states, the final one being to publish a notification event. This is to be received by the Main actor so that it knows when the FPGA actor has initialised.

Unfortunately this event is not received by the Main actor. I believe this might be because the registration request has not yet been processed by the FPGA actor, and therefore the notification event has no listeners and is effectively unheard.

So my question is: what's the correct way to launch a sub-actor such that you can receive a message from it once it has completed initialisation steps?

Main Actor launches FPGA subactor and Registers for All Notifications

FPGA Actor performs three initialisation steps, the third being to provide a Notification Event
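A common generic answer to the "notification sent before anyone is listening" problem is a notifier that remembers its most recent value and replays it to anyone who registers later. The sketch below illustrates that pattern in plain Python. `StateNotifier` is a hypothetical name, not a Messenger Library class, and thread safety is deliberately left out to keep the idea visible.

```python
class StateNotifier:
    """Keeps the last published value and replays it to late observers."""

    def __init__(self):
        self._observers = []
        self._has_value = False
        self._last_value = None

    def register(self, callback):
        self._observers.append(callback)
        if self._has_value:
            # A late observer immediately receives the current state.
            callback(self._last_value)

    def notify(self, value):
        self._last_value = value
        self._has_value = True
        for callback in self._observers:
            callback(value)


received = []
notifier = StateNotifier()
notifier.notify("Initialised")      # published before anyone is listening
notifier.register(received.append)  # the late observer still hears about it
```

With this shape, the order of "register" and "publish initialised" no longer matters, which is the property the Main actor needs here.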

-

For your front panel to resize to fit a changing front panel control, you will need to programmatically adjust it. There is no way to do this automatically.

Smarlow has shown a great example above of how to do this.

-

Just be careful with kerning. A "w" character followed by a "a", for example, might be narrower than the sum of the "w" and the "a" separately. I'm not sure how advanced the text printing functions are in LabVIEW, but true-type fonts are often kerned.

-

2014 upwards, including RealTime.

-

Can you set the FP to not show when called, then programmatically show the front panel within code? This ensures the FP will not display until it's actually running. Just a thought.

-

12 minutes ago, drjdpowell said:

There must be something, otherwise why up-rev the format with each version?

James, I believe the risk is only with variants, and there's actually been no change to their flattened syntax since LV8.2.

Older LV code refusing to interpret a 2017 flattened string is purely a precautionary act. However annoying it is, one can perhaps understand the design intent.

So unless there are improvements in the NI pipeline for flattening variants then I don't believe there are any risks with forcing the version to the same as the Messenger toolkit source version (2011).

-

I have a separate issue. When I load my project which uses Messenger toolkit, LabVIEW warns that the "Library information could not be updated". This pops up three times consecutively, yet once cleared everything seems to work OK.

I've seen this before: when I used Messenger library about two years ago on another project, LabVIEW used to complain with the exact same notification (today that project loads without the warning).

I've only seen this warning when I open a project using Messenger toolkit, so I think it's fairly safe to assume it's related?

I was wondering if anyone else had seen this behaviour? I'm using LabVIEW 2017 on Win7 SP1 currently, but two years ago it was on 2014 within a VM (still Win7).

OK - I did some deep digging, this issue has nothing to do with Messenger toolkit. Apologies.

-

My experience having used this technique in my own application (not Messenger Framework related, but nonetheless the same issue) is that it works without loss.

This flatten function honours any attributes within the variant. It does not, however, include a bitness setting, so you cannot set the bitness to your own choice. However, this shouldn't be an issue when passing data between Messenger actors.

There are, as far as I understand, no changes to the variant flattening syntax since LabVIEW 8.2, so unless something changes in the future there would be no loss of info using this.

-

My issue is not with Messenger, but with my own variant handling code between differing versions of LabVIEW.

The LabVIEW version data requires 8 bits, not just the top 5 bits. For example, LabVIEW 2012 is represented by x12008004. But if the Messenger library (built in LabVIEW 2013?) were to enforce the same LabVIEW version format for all flattened data then they ought to be compatible across all instances.
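The arithmetic behind the "8 bits, not 5" point can be checked with a few lines of Python. This is only a sketch: reading the top byte of the word as the version is an inference from the single x12008004 example above, not a documented layout, and both function names are hypothetical.

```python
def version_byte(version_word: int) -> int:
    """Top 8 bits of a 32-bit version word (assumed to carry the version)."""
    return (version_word >> 24) & 0xFF


def top_five_bits(version_word: int) -> int:
    """Top 5 bits only, which discards the low 3 bits of the top byte."""
    return (version_word >> 27) & 0x1F


word_2012 = 0x12008004  # the LabVIEW 2012 example from the post
major_hex = format(version_byte(word_2012), "02x")  # hex digits of the top byte

# Any top byte from 0x10 to 0x17 collapses to the same 5-bit value,
# so 5 bits cannot tell those version words apart.
collapsed = {top_five_bits(b << 24) for b in range(0x10, 0x18)}
```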

I'm still battling to get this to work in my own code - I'm encountering problems associated with nested variants (a variant as part of a few items in a cluster array, which is passed through a message which uses its own variant container), whereby the inner variant is always unflattened to empty. Might simply be that I have something mixed up somewhere, need to keep investigating...

-

Unbelievably, I'm encountering this exact same complication today.

As far as I can tell, the reason is that variants are flattened to strings differently in every version of LabVIEW. Although the schema is the same, the actual version of LabVIEW is prepended in the flattened string. Thus, when a 2016 flattened string is passed to LabVIEW 2014, the reader does not assume it knows the schema and throws error 122.

NI's white paper on using "Variant Flatten Exp", which allows you to set the flattening format structure, is their recommended approach. Thus you can set your 2016 code to create 2014-style strings.
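The behaviour described above (a reader refusing data stamped by a newer writer, and a writer pinning an older format to stay compatible) can be illustrated with a toy format in Python. This is an analogy only: the two-byte stamp, the JSON body and the names `flatten`, `unflatten` and `UnsupportedVersion` are all hypothetical and have nothing to do with LabVIEW's real binary layout.

```python
import json
import struct


class UnsupportedVersion(Exception):
    """Raised when data was stamped by a newer writer than the reader."""


def flatten(data, writer_version):
    """Prepend a 2-byte version stamp to a JSON-encoded body."""
    body = json.dumps(data).encode("utf-8")
    return struct.pack(">H", writer_version) + body


def unflatten(blob, reader_version):
    """Refuse anything stamped with a version newer than the reader's."""
    (stamp,) = struct.unpack(">H", blob[:2])
    if stamp > reader_version:
        raise UnsupportedVersion(f"stamped {stamp}, reader is {reader_version}")
    return json.loads(blob[2:].decode("utf-8"))


payload = {"channel": 3, "enabled": True}

# Writer pins the older format version, so the older reader accepts it.
pinned = flatten(payload, writer_version=2014)
round_trip = unflatten(pinned, reader_version=2014)
```

Calling `unflatten(flatten(payload, 2016), 2014)` raises `UnsupportedVersion` even though the body is identical, which mirrors the precautionary refusal described in the post.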

Note: I think this only affects the flattening of variants. All other datatypes are unaffected.

However, I am still noticing differences between my 2014 strings and 2017 strings. For me, the issue is now down to the links to the typedef controls in the clusters that I use. When flattening clusters (that contain variants and are linked to typedefs) to strings, there is still a problem when passing between LabVIEW versions...

-

Hi Rolf,

Thanks, that sounds very interesting. I wonder if there's documentation (MSDN?) that covers these requirements. I'm looking forward to hearing back from you on the availability of your toolkit.

-

I'm attempting this today. I have a LabVIEW 2014 executable to be launched as a service on Windows Server 2012 R2.

I followed Microsoft's instructions to run "instsrv.exe <service_name> srvany.exe" (exes are from the resource toolkit), and then configured srvany to launch my LabVIEW exe as a service. I can see it listed in the Windows Services manager. Success.

However, this appears to be a dirty way to convert any application into a service. What it really does is turn "srvany.exe" into a service, and then you configure srvany to launch your application. This means there's no real communication between my application and the Windows Service manager layer. Consequently, if my application were to be asked to shut down gracefully, srvany receives the request and simply pulls my application out of memory.

I'd much prefer for my application to be aware of shutdown requests, and then it can close down its resources safely/appropriately. It maintains database connections and TCP pipes, so I don't want these left orphaned.

Does anyone know any more about creating 'actual' Windows services? I'd like to avoid this wrapper based approach which casually spins up and tears down exes.
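For illustration, the application-side shape of "being aware of shutdown requests" looks roughly like the Python sketch below: a main loop that watches a stop flag and always releases its resources on the way out. It is a sketch under stated assumptions. The signal handlers are only a stand-in for however the stop request is actually delivered (a real Windows service is told to stop through its service control handler), the resource names are placeholders, and none of this helps if the process is simply terminated, as described above.

```python
import signal
import threading


def run_service(stop_event, poll_interval=0.05):
    """Work until asked to stop, then release resources in reverse order."""
    opened = ["database connection", "TCP connection"]  # placeholders
    closed = []
    try:
        while not stop_event.wait(poll_interval):
            pass  # normal application work would go here
    finally:
        for resource in reversed(opened):
            closed.append(resource)  # stands in for a real close() call
    return closed


def install_signal_handlers(stop_event):
    """Translate termination signals into a polite stop request."""
    def handler(signum, frame):
        stop_event.set()

    signal.signal(signal.SIGTERM, handler)
    signal.signal(signal.SIGINT, handler)


if __name__ == "__main__":
    stop = threading.Event()
    install_signal_handlers(stop)
    threading.Timer(0.2, stop.set).start()  # simulate a stop request
    print(run_service(stop))
```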

-

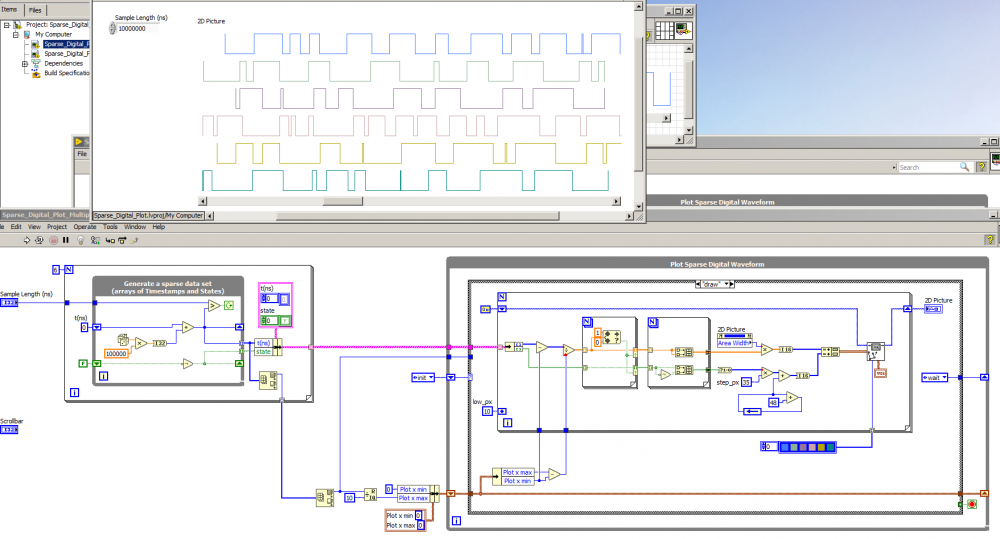

A quick VI that creates sparse representations of digital data, and plots them. The plot supports multiple waveforms and includes a horizontal scrollbar to scroll through the time axis.

It needs axes plotting adding, and support for zoom (just need to capture a user event, such as mouse scroll, to change x axis range and replot).

This any use?

-


-

-

That's a very interesting need. If you represent your data sparsely, such that you store transitions and timestamps only, then you're unlikely to find a plotting tool that handles this data format. It might be worth looking for a .NET control that exists already for this and interfacing with that. But I would prefer a pure LabVIEW solution if possible.

My immediate thought is that you're going to need to create your own graphing tools. If you want to look at the entire signal then you'll need to reinterpret the sparse data in order to use it on a traditional LabVIEW graph, but then you have memory limitations (as you already experienced). The next option, and it might be possible since you're talking about simple rectangular digital signals, would be to draw your own lines with the picture tools and build up your own graph image. You can then re-interpret the sparse data as instructions for plotting a line. It's a little work, but not a great deal.

My ambition then would be to wrap this up as an XControl, including horizontal and vertical scrollbars, so that one can pan through the image (it might need to be redrawn on the fly as the user moves a scrollbar), and zoom in and out (changing the range of values to include in the plotting functions). You won't want to recreate all the functionality of the LabVIEW native graphing tools, but the basics mentioned above wouldn't be too difficult.
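The idea of reinterpreting sparse data as drawing instructions can be sketched as follows: given only the transitions, build the vertices of a step-shaped line for the currently visible time window. This is a Python illustration rather than LabVIEW picture-function code, and `step_polyline`, the `(time, level)` tuple layout and the "low before the first transition" rule are all assumptions made for the example.

```python
from bisect import bisect_right


def step_polyline(transitions, t_start, t_end):
    """Return (time, level) vertices for the window [t_start, t_end].

    transitions: list of (time, new_level) pairs sorted by time.
    The signal is assumed to be 0 before the first transition.
    """
    times = [t for t, _ in transitions]
    # Last transition at or before the window start sets the initial level.
    i = bisect_right(times, t_start) - 1
    level = transitions[i][1] if i >= 0 else 0
    points = [(t_start, level)]
    for t, new_level in transitions[i + 1:]:
        if t >= t_end:
            break
        points.append((t, level))      # horizontal run up to the edge
        points.append((t, new_level))  # vertical edge at the transition
        level = new_level
    points.append((t_end, level))
    return points


# Four transitions describe a signal a million time units long.
transitions = [(0, 0), (10, 1), (25, 0), (1_000_000, 1)]
visible = step_polyline(transitions, 5, 30)
```

Only transitions inside the window produce vertices, so panning and zooming cost time proportional to what is on screen rather than to the full signal length.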

-


-

-

Neil - Random thought. If you build your LabVIEW numeric keypad into a DLL and call it with the CLFN, maybe then it will exist in Windows separate from the LabVIEW windows and can be self-manipulated to be NonActive? Wouldn't take long to trial, possibly worth a shot.

-

According to this it's not possible, due to the complex manner in which LabVIEW owns and manages its own FP windows.

-


-

-

It didn't work! Here is the ini file, alongside the exe:

[DWJar]
server.app.propertiesEnabled=True
server.ole.enabled=True
server.tcp.paranoid=True
server.tcp.serviceName="My Computer/VI Server"
server.vi.callsEnabled=True
server.vi.propertiesEnabled=True
WebServer.TcpAccess="c+*"
WebServer.ViAccess="+*"
DebugServerEnabled=False
DebugServerWaitOnLaunch=False
HideRootWindow=True

Any clues as to why my front panel still showed? It wasn't hidden or minimised, it was fully visible and on screen.

I've also set "Allow User to Minimise Window" to disabled in the VI Properties, as indicated by smithd.

OK - my bad. I thought the ini key worked on its own, so I'd disabled the property nodes for hiding the Front Panel. I put that back in and it's working :-) Thanks!

-

Thanks everyone. I'll use this approach.

I am indeed using the Averna notification icon toolkit, which is why I wanted no task bar item to show for the application.

So does anyone have an explanation as to why the VI behaves differently in the dev env compared to when built? I hate it when things change just because you build your code into an exe. Some things, like paths, are understandable, but visual behaviour changes are unacceptable to me.

-

This quick test VI, when run in the dev env, immediately hides its front panel and the taskbar item for it disappears too, then two seconds later it sounds the PC beep, then closes itself an additional second later. The beep is purely there so that I know the VI is still running and not quit out.

This hidden front panel is the behaviour I want for my VI. But when I build an executable from it and run it, the item in the Windows Task Bar remains. It's there for the duration (three seconds), then vanishes when the VI closes.

So, why does the built executable cause a visible taskbar item to show (like most standard Windows applications have), but not when in the dev env?

And more importantly, since I want this VI to be running in the background without any tray icon, how can I get my VI to run invisibly?

[LVTN] Messenger Library

in End User Support

Posted · Edited by Thoric

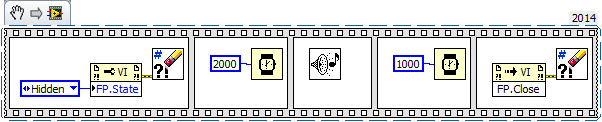

Hi James,

I tried your probe anyway - unfortunately it causes a deployment error (see image). Without investigating, I'm guessing there's something in there not compatible with RT?

I already log the 'states' to a log file, which is how I know if the appropriate states are being fired in each actor. Plus I log all errors to a separate log file.

Trying your ideas:

1) Already made that change, just in case

2) Double checked wiring - it's fine.

So I took a look at "vi.lib\drjdpowell\Messenging\ObserverRegister\ObsReg Core\ObsReg to Table.vi" which shows how to take an ObsReg Core class and represent its data in tabular form. How can I use this to investigate the number of Observers on the subactor notifier before I send the reply? I presume this is the motive?

Deployment errors when using probes:

This is how I record the states to file: