JackHamilton

Posts: 252


Posts posted by JackHamilton

-


Albert,

That is very true. I am attempting to build a tutorial, one step at a time. Most applications don't employ sophisticated error handling at all.

That said, I do pass the calling routine, and sometimes error details and data, in the error description. I've also used VI Server to pass the calling path of the subVI, but in most cases this is useless to the user (and sometimes to me).

I rate error handling in code in 3 levels.

1. Code works! Errors are reported by the application crashing.

2. Code reports errors - all of them.

3. Code employs methods to recover from error conditions and reports only the non-recoverable errors.

Microsoft reports that 50% of the code in Office is dedicated to error handling and reporting. I count routines that deal with user interface errors as error handling too: "Undo" and "Cancel" really deal with user input errors, but we have to write code for them.

Also, I think it is more helpful for programmers to understand the underlying madness and methods than to simply install and use some pre-built solution.

The attached example code is saved in LabVIEW 7.0.
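As a rough illustration of level 3, here is a minimal Python sketch (the original work is in LabVIEW). It assumes a hypothetical set of "recoverable" error codes and a hypothetical `DeviceError` type: recoverable errors are retried quietly, and only errors that cannot be recovered from (or that keep recurring) are reported.

```python
class DeviceError(Exception):
    """Hypothetical error type carrying a numeric code and a message."""

    def __init__(self, code, message):
        super().__init__(f"Error {code}: {message}")
        self.code = code
        self.message = message


# Hypothetical codes this sketch treats as recoverable (e.g. a bus timeout worth retrying).
RECOVERABLE_CODES = {56}


def run_with_recovery(operation, retries=3, report=print):
    """Level 3: retry recoverable errors silently; report only non-recoverable ones."""
    for attempt in range(1, retries + 1):
        try:
            return operation()
        except DeviceError as err:
            if err.code in RECOVERABLE_CODES and attempt < retries:
                continue  # recoverable: try again without bothering the user
            report(f"Error {err.code}: {err.message}")
            return None
```

A level 2 program would report on every `except`; the only difference here is the decision about which errors the user ever sees.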

-


I heard this second-hand from an NIWeek attendee at the PDA meeting.

Sony has decided to focus on PDA phones, and HP is following suit. PDAs with PCMCIA support will be few and far between. Some industrial companies will continue to make ruggedized PDAs with card slots, but they will be in the $2K to $3K range.

It takes all the fun out of creating applications for what was a $300.00 device. :thumbdown:

-

Too many wires!

That's what's wrong with that code...

I can do that code with 5 wires!

-

m3nth,

I use a producer-consumer architecture with a mix of event-driven queues.

I use a GUI loop and typically put any user-modal dialog boxes there. I actually have a state in the GUI loop called "WARN", which can be reached by a queue message; the command parameter contains the message displayed in the dialog box. The dialog box suspends GUI events only until it is acknowledged and closed. Since the GUI loop is ONLY processing front panel user events, it's OK for it to be suspended: the Processing Loop is doing all the actual work.
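A minimal Python sketch of that GUI loop, with `queue.Queue` standing in for a LabVIEW queue. The `(command, parameter)` message tuple, the "EXIT" command and the `show_dialog` callback are hypothetical names for illustration only.

```python
import queue


def gui_loop(gui_queue: queue.Queue, show_dialog) -> None:
    """Hypothetical GUI loop that handles only user-interface messages.

    A modal dialog blocks this loop alone; the processing loop, running
    elsewhere, keeps doing the real work while the user reads the warning.
    """
    while True:
        command, parameter = gui_queue.get()
        if command == "WARN":
            show_dialog(parameter)  # blocks until the user acknowledges the dialog
        elif command == "EXIT":
            break
```

In a real program this loop would run in its own thread, so blocking in `show_dialog` never stalls command processing.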

This is one of the pitfalls of registering dynamic events in the GUI Event Structure: you tie the GUI to internal code execution, a no-no (IMHO).

I don't put any modal VIs or dialogs in the "Processing Loop". This loop is the 'Central Station' through which ALL commands are passed to the particular control loop.

I've had applications where commands needed to be received from the GUI, API DLL calls, a serial port and TCP/IP, all at the same time. The Processing Loop is the central receiver of these commands.

I tend to keep the GUI loop a simple shell that passes commands to the Processing Loop. The Processing Loop sends a secondary message to the particular hardware/control loop, or to several loops when a single command requires several hardware interactions to be coordinated. The Processing Loop also contains message tracking for debugging, so I can follow all the commands if a problem occurs. Of course, each message must contain a sender ID.
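Here is a rough Python sketch of such a central Processing Loop, assuming Python queues in place of LabVIEW queues. The `Message` layout, the `ROUTES` table and the command and loop names are hypothetical. Every message carries a sender ID, every message is logged for debugging, and one command can fan out to several hardware loops.

```python
import queue
from dataclasses import dataclass


@dataclass
class Message:
    sender: str          # every message carries a sender ID
    command: str
    parameter: object = None


# Hypothetical routing table: which hardware/control loops handle each command.
ROUTES = {
    "START_TEST": ["daq", "motion"],   # one command coordinating two loops
    "READ_TEMP": ["serial"],
}


def processing_loop(inbox: queue.Queue, hardware_queues: dict, log: list) -> None:
    """Central station: receive commands from any source, log them, route them on."""
    while True:
        msg = inbox.get()
        log.append(f"{msg.sender} -> {msg.command}")  # message tracking for debug
        if msg.command == "EXIT":
            break
        for target in ROUTES.get(msg.command, []):
            hardware_queues[target].put(Message("processing", msg.command, msg.parameter))
```

Because the GUI, a DLL callback, a serial reader and a TCP listener all just put `Message`s on the same inbox, adding a new command source does not touch the routing code.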

I don't use "Dynamic Events". I know what they do, but I'm sitting on the sidelines waiting for a compelling situation to use them. I seem to be able to handle most problems with my existing architecture. My wish list for dynamic events is to have the Event Structure itself poll and fire on the registered event; instead, I have to poll the event somewhere else in the code and propagate it to the Event Structure, which seems messy to me. Plus, this is not the purpose of the Event Structure. Back in the day, you had huge while loops with tens of controls inside and 100 msec delays just to see if the user had pressed a button! Good Lord, those were the days...

Regards

Jack Hamilton

-

:!: Problem:

How to generate meaningful error messages and codes for an application without it being a pain.

Issues:

1. LabVIEW's internal error messages are not germane to your application; only the programmer (maybe) understands them.

2. Too much work! There are hundreds of possible errors that an application can generate, and creating a special message for each is too much work!

3. I'm not gonna take the time to scatter hundreds of text boxes in my code, loading the application with a huge database of error messages.

4. I don't have time to STOP coding to think about an error message; it interrupts my work. It needs to be fast and easy to use!

5. When you're done, how do you document those nifty error codes?

Error Re-coder

The error re-coder reformats low-level LabVIEW error messages and codes into custom codes for your application. It takes an input error cluster whose status is TRUE and uses a strict type def enum as the control: the input error message is replaced with the text string stored in the selected enum item, and the enum's numeric value is output as the error code.

Using a strict type def enum allows you to:
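The re-coder logic can be sketched in Python, with an `Enum` standing in for the strict type def enum and a small dataclass for the LabVIEW error cluster (status, code, source). The enum items and their texts are hypothetical. Appending the original low-level code to the new text is an assumption of this sketch, so the programmer can still trace the root cause; the original VI simply replaces the message.

```python
from dataclasses import dataclass
from enum import Enum


class AppError(Enum):
    """Stand-in for the strict type def enum: each item holds (code, message text)."""
    CONFIG_MISSING = (1, "Configuration file not found.")
    INSTRUMENT_TIMEOUT = (2, "The instrument did not respond. Check cable and power.")

    @property
    def code(self) -> int:
        return self.value[0]

    @property
    def text(self) -> str:
        return self.value[1]


@dataclass(frozen=True)
class ErrorCluster:
    status: bool
    code: int = 0
    source: str = ""


def recode(error_in: ErrorCluster, app_error: AppError) -> ErrorCluster:
    """Replace a raised low-level error with the application's own code and text."""
    if not error_in.status:
        return error_in  # no error: pass the cluster through untouched
    source = f"{app_error.text}\n(original error {error_in.code}: {error_in.source})"
    return ErrorCluster(True, app_error.code, source)
```

Adding a new error is one new enum item, which mirrors point A of the LabVIEW approach.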

1. Reformat default LabVIEW error codes to have a custom meaningful message to your application.

2. Assign a custom error code.

The beauty is:

A. You can add custom error codes as you work: open the strict type def and add another value.

B. Code is self documented - the Enum displays the error code and text.

C. When your project is done - you can dump the enum strings using property nodes and create an application error table!
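Point C can be sketched in Python as well. In LabVIEW you would read the enum's strings through property nodes; here, iterating over a Python `Enum` plays that role. The enum items are hypothetical, and the tab-separated layout is just one possible table format.

```python
from enum import Enum


class AppError(Enum):
    # Hypothetical items: (custom error code, message text).
    CONFIG_MISSING = (1, "Configuration file not found.")
    INSTRUMENT_TIMEOUT = (2, "The instrument did not respond.")


def error_table(enum_type) -> str:
    """Build a plain-text application error table from every item in the enum."""
    lines = ["Code\tMessage"]
    for item in enum_type:
        code, text = item.value
        lines.append(f"{code}\t{text}")
    return "\n".join(lines)
```

Because the table is generated from the same enum the code uses, the documentation can't drift out of step with the program.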

The attachment is the error coder with an example wrapper VI. All you need to do is change the enum value text to your own messages.

This is the first part of an error handling methodology that I have been working on for the past few years. I hope to share some other error handling routines later.

Written by Jack Hamilton

JH@Labuseful.com <mailto:JH@Labuseful.com>

www.Labuseful.com <http://www.Labuseful.com>

LabVIEW Tips, Tricks and Downloads

-

You'll need an experienced programmer to write code to do this.

I can see a toolbar that lets the user select the type of measurement they wish to make, draw the ROI over the image artifacts to measure, and then change or modify parameters to fine-tune the results.

Although not a huge task, it's not trivial either. I find that these applications start an 'avalanche' of requirements.

If you want to measure something, you will want to record it.

You'll want to compare the results to some upper and lower limit.

If you want to record something, you'll want to retrieve, browse and compare it.

Soon the data itself becomes the most important aspect of the application, and the acquisition of the data becomes secondary. This is the opposite of the programmer's view; the programmer is more enamored with the acquisition and control.

Soon they only want 'one button' to do the whole thing. There are consultants who can help you, or you can program the application yourself. The application builder will give you example code showing how to accomplish a task.

Regards

J. Hamilton

-

I agree with Mike; Why do you want several instances of the same application running?

If the single application was written correctly, shouldn't it handle the job? Are you wanting to create multiple 'views' of the same data?

If you're creating a demo CD, could you not create a menu/launching application that lets the user browse and select demos?

You could use HTML to do this too.

You can run multiple LabVIEW executables on one machine; that should not be a problem.

-

Be careful when using the "Size controls to front panel" option. Buttons with text, and text in graphs and plots, will look very bad when the panel is too small. Worse, it does not resize up very well. Also, if the install computer's font settings differ from the defaults, the text can end up larger than the control it is adjacent to.

My advice: set your development computer's monitor to the application's target resolution when you develop the front panel of your code. I've even had to build 3 versions of an application with a graphics-intensive front panel.

Regards

J.Hamilton

-

Andrea,

Welcome to the wonderful world of ActiveX, which proves there is no substitute for good programming. Without proper documentation, you can't make head or tail of that control.

I was recently given an ActiveX control 'written especially for LabVIEW' that passed me a pointer to the return data! LabVIEW can't handle memory pointers.

I would contact the company that made the control; maybe they can give you some VB sample code you can look at to see how it's supposed to work.

Regards

J.Hamilton

-

M3nt,

There are ways around creating large clusters in your code. A few years back I started using what I call "monocluster state machines" and discovered a few problems. One is that the memory footprint is huge: even though the cluster is a strict type def and should really exist only a few times in memory, the LLB size was quickly getting over 10 MB for a medium-sized program.

The other problem is that the code becomes unwieldy. You forget which functions should read and write which variables in the cluster. I've had other programmers on the team change values in the cluster (thinking they were unused!), wreaking havoc in the program. Reading about object-oriented programming, especially the concept of "public" and "private" data, helped me develop a new kind of architecture that is much cleaner, more reusable and pretty efficient.

In general, the core data relevant to a function should be contained in that function and nowhere else. For instance, the GPIB address of a device does not need to be a global; only the GPIB Read and Write core functions need to know the address.

If you use queues or other messaging methods, you can easily modify and change the settings of a function. You can add features to a function without (seriously) impacting the rest of the code.

That said, I do use a lot of strict type defs in my code. There is no single right answer, but overuse of one type of method should make one question whether a better method is out there.
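The "private data plus messages" idea can be sketched in Python. `GpibInstrument`, its message names and the `sent` list (which stands in for real GPIB writes) are all hypothetical. The address lives only inside the instrument component (the leading underscore is Python's convention for private), and the rest of the program changes it only by sending a message.

```python
import queue


class GpibInstrument:
    """Hypothetical instrument loop that owns its GPIB address as private data."""

    def __init__(self, address: int):
        self._address = address      # known only to this component
        self.inbox = queue.Queue()   # the only public way to talk to it
        self.sent = []               # stands in for real GPIB writes in this sketch

    def _write(self, command: str) -> None:
        self.sent.append((self._address, command))

    def process_pending(self) -> None:
        """Handle every queued message; settings change only through messages."""
        while not self.inbox.empty():
            name, value = self.inbox.get()
            if name == "SET_ADDRESS":
                self._address = value
            elif name == "WRITE":
                self._write(value)
```

A new feature (say, a "RESET" message) is added inside this one component without touching the callers, which is the benefit described above.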

J.Hamilton (yes, I am still alive...)

-

I am looking for an efficient method to detect a long-duration TTL pulse that occurs at varying intervals (0.5 to 160 seconds). This is an additional requirement for a large application, and we are very sensitive to CPU usage.

Although the time between pulses varies and is large, I need to detect each pulse within about 1 msec. I am using a 6023 E-Series card. I can wire the signal to a PFI, counter or DI line (my choice). I don't need to acquire any data with the trigger, only detect the transition of the line.

What I have tried:

PFI line: I created a continuous analog acquisition triggered by the PFI line. To make this as efficient as possible, I created a DAQ occurrence for it. Odd behavior: if I set the timeout to 500 msec, my timing sensitivity is +/- 500 msec. (I thought the occurrence would time out at 500 msec but would fire immediately on the actual event.) The CPU load is about 4% (good). Shortening the timeout to 50 msec improves the latency but increases CPU usage to 50% (too high).

Using "Easy DIO" with conventional software polling at a high rate loads the CPU.

I see that there are DIO Occurrences in the 'Advanced DIO' palette, but I can't figure out how they work or find any examples.

I called NI tech support and was told software polling is the only way to do this. I got a blank stare when I mentioned the 'DIO Occurrence' functions.
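For comparison, this Python sketch shows the behaviour expected of the occurrence: an event wait that wakes as soon as the event is signalled, so the timeout bounds only the "nothing happened" case, not the latency. It uses `threading.Event` as an analogy only; it does not touch NI-DAQ, and `wait_for_edge` is a hypothetical name.

```python
import threading
import time


def wait_for_edge(edge: threading.Event, timeout_s: float):
    """Block until the edge is signalled or the timeout expires.

    Returns (fired, seconds_waited). Event.wait returns as soon as the event
    is set, so a long timeout costs no latency and no polling CPU time.
    """
    start = time.perf_counter()
    fired = edge.wait(timeout_s)
    return fired, time.perf_counter() - start
```

With a 0.5 s timeout and an event set after about 50 ms, `wait_for_edge` returns after roughly 50 ms. That is the opposite of the +/- 500 msec sensitivity observed with the DAQ occurrence.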

Any help is appreciated.

Jack Hamilton

714-542-1608 Office/Fax

jh@labuseful.com

www.HamiltonDesign-Consulting.com

2612 N Valencia Street

Santa Ana, CA, 92706

-

In the end the arb card did not work for us either. Because we needed to analyze 4 channels of analog feedback data to determine which response to play back, we could not call the arb card from software within 60 msec of the event.

A simple model that uses a DAQ occurrence to flag a notification to change the arb card's output does work. However, we also needed to pass the arb card which sequence to play, based on software analysis of the input analog data, and we could not get those two events to happen fast enough to arm the arb card before the timed hardware trigger fired.

I don't see this in any way as a failing of NI hardware. Trigger/response applications with on-the-fly data processing really should be done in hardware.

I was able to lay hands on a 7041 Real-Time card, which did work, including acquisition, analysis and output response, with only about 200 usec of dither over repeated tests.

I guess I would take exception to the arb card marketing literature, which states it's "ideal for stimulus/response applications".

-

:thumbup: Wow! The 7041 is a PCI-based Real-Time card with a piggy-backed E-Series 6040 (12-bit) card attached.

It also includes a built-in watchdog timer, a serial port and user-controllable LEDs.

This is a very nice product for time-critical applications that don't require more than one card, where a PXI chassis is overkill. The US price is $2,995.00, which is very reasonable compared to a high-end E-Series card alone.

The specs state a 26 kHz maximum PID loop rate! I got to play with one of these for 2 days on a time-critical AI/AO application. With some quick-and-dirty clocked 4-channel analog input at 24 kHz and simultaneous single-point analog output at 24 kHz, this card showed about 300 usec of response dither (yes, that's microseconds). This included triggering on the input signal and then outputting one of 2 different waveforms. Not bad for software response time.

I can't recommend this card enough...One happy camper! :worship:

-

Jim,

The 5411 Arb card has one very strange caveat that does NOT exist with the E or S Series cards.

The response time to a hardware trigger is 78 clock cycles, and those cycles are counted on the sample waveform output clock! So outputting at 3 kHz gives about 26 msec (78 / 3,000 Hz) of latency in the response.

We are outputting at 3 kHz with a hardware trigger targeted for a 100 msec delay, but we actually get 125 msec.

This is nowhere stated in the spec (who'd want to!). Also, the triggering is pretty unsophisticated: you can only trigger one sequence. So the "Stimulation and Response" claim in the marketing literature is of pretty limited value.

My wish list would be better drivers for fast output pointers on the waveform buffer. We need to do some real-time stimulation and response, and need to decide on the fly exactly which waveform to transition to. The drivers are not optimized for this, but the card does take only about 43 msec to stop, reconfigure and output another waveform from memory.
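The arithmetic is worth spelling out: when the trigger delay is counted in sample-clock cycles, the latency scales inversely with the output rate. A minimal Python check using the 78-cycle figure above (`trigger_latency_s` is just an illustrative name):

```python
def trigger_latency_s(clock_cycles: int, sample_rate_hz: float) -> float:
    """Trigger-to-output latency when the delay is counted in sample-clock cycles."""
    return clock_cycles / sample_rate_hz


latency_3k = trigger_latency_s(78, 3_000)      # 0.026 s, i.e. about 26 msec
observed = 0.100 + latency_3k                  # 100 msec target plus the trigger latency
latency_100k = trigger_latency_s(78, 100_000)  # 0.00078 s at a much faster clock
```

The predicted 126 msec is close to the 125 msec actually measured. At low output rates, the same 78 cycles turn into tens of milliseconds.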

-

Okay, I'll answer my own questions...

Erase ROI does work, but you need to update the image after calling the function. Because the new "Value" property does NOT work, you need to use a global variable and update that instead.

You must have a loop running that updates the image from the global variable. It's not very efficient, but maybe this will be fixed in Vision 7.1?

You can't use the older image functions that reference the window by window number. The new front-panel embedded image does not have a window number (not even 0).

The new Vision does not seem any slower than 6.1, and the overlay functions seem to work fine. I'm not sure I like the right-click menu now available on the front-panel embedded image, which allows you to save the image and change the palette.

-

Thanks to the efforts of Greg Smith (NI Corporate) and Zach Olson (Southern California Sales), NI has changed its support policy for Alliance members. Alliance members now do NOT need an SSP subscription. Technical support, both web-based and phone, remains as it always was.

Things were getting interesting before this change: I heard of an Alliance member WITH SSP being told that this was his 4th tech support phone call. "So what?" he asked. "I have SSP." "Yes, but it only gives you 3 phone calls per year," replied NI support.

I don't know whether this is (or was) actual NI policy, to limit even SSP calls, but it would be pretty strange. Alliance members work on numerous applications over a year, and I would think they generate more than 3 requests per year.

-

Craig,

That may work, but I take the position of 'fear' and would add TCP/IP command capability to your routines. That way you can command the data requests in a controlled manner.

I'm not a Real-Time power user by any means, but some of these nifty 'features' can have unintended consequences.
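A minimal Python sketch of an explicit TCP/IP command interface. Python sockets stand in for the LabVIEW TCP functions. The newline-terminated text protocol and the "GET_DATA"/"QUIT" command names are hypothetical. Only commands listed in the handler table ever run, so data requests happen in a controlled way rather than through an automatic feature.

```python
import socket
import threading


def serve_commands(server_sock: socket.socket, handlers: dict) -> None:
    """Accept one client and answer newline-terminated text commands.

    Only commands present in `handlers` are executed; anything else is refused.
    """
    with server_sock:                      # stop listening once the client is accepted
        conn, _ = server_sock.accept()
    with conn, conn.makefile("r", encoding="utf-8") as reader:
        for line in reader:
            command = line.strip()
            if command == "QUIT":
                break
            handler = handlers.get(command)
            reply = handler() if handler else f"ERR unknown command: {command}"
            conn.sendall((reply + "\n").encode("utf-8"))


def start_command_server(handlers: dict):
    """Listen on a free localhost port and serve one client in a background thread."""
    server_sock = socket.create_server(("127.0.0.1", 0))
    port = server_sock.getsockname()[1]
    worker = threading.Thread(target=serve_commands, args=(server_sock, handlers), daemon=True)
    worker.start()
    return port, worker
```

On the host side, the client opens a connection, sends `GET_DATA` and reads one reply line, so the host decides exactly when data moves.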

Regards

Jack Hamilton

-

Chris,

I did not use the "Automatic Protection" Scheme. If I recall, this encrypts the entire LabVIEW exe and acts as a shell.

I think this path is potentially fraught with the most problems. Since your application communicates with hardware, I would expect the shell not to provide full access to the OS resources.

Any reason why you did not use a simple key/encryption scheme? You can call the key with a single VI that sends it a string to decrypt; your code checks the response and, if it's correct, lets your code programmatically decide what to do (quit, or warn the user).

Rainbow has LabVIEW VIs. I have modified them heavily, and I can help you over the rough spots.
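A challenge/response check of this kind can be sketched in Python. A real hardware key's algorithm is vendor-specific; here an HMAC with a shared secret stands in for it, and `simulated_key`, `license_ok` and the secret are hypothetical. Note that embedding the secret in the application, as this sketch does, is much weaker than a real dongle.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret shared by the application and the (simulated) key.
_KEY_SECRET = b"example-secret"


def simulated_key(challenge: bytes) -> bytes:
    """Stand-in for the hardware key: transforms the challenge with its secret."""
    return hmac.new(_KEY_SECRET, challenge, hashlib.sha256).digest()


def license_ok(query_key) -> bool:
    """Send a random challenge to the key and check its answer."""
    challenge = secrets.token_bytes(16)
    expected = hmac.new(_KEY_SECRET, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(query_key(challenge), expected)
```

Because the function only returns a boolean, the calling code stays free to decide what to do next (quit, or warn the user), as described above.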

Regards

Jack Hamilton

-

I am working with IMAQ Vision 6.1 and am not new to vision. I am getting a little frustrated at all the groping around I'm having to do in order to understand the new references, which don't all seem to work.

From my NI support post.

Where are examples for using new IMAQ features? Image References etc.

I have the following questions/problems

Q1. Using the IMAQ Reference - Writing a new image to the property "value" does not seem to work.

Q2: How does IMAQ WindDraw, which uses the "Window Number" reference, work with the new property node and global variable image reference?

Q3: I am attempting to erase an ROI - it does not work using the new property node "Clear ROI"

P3: When I use the "traditional" Clear ROI vi - it requires the window number - how does one establish the IMAQ window number for the new control type window that resides in the front panel? Window=0 does not seem to work.

Q4: Can you send me an example of simply clearing an ROI without erasing the image? Is it possible?

I've searched the examples and documentation for Vision 6.1 and can't find any cogent examples of reference usage and limitations. What gives?

-

:clock: By making an API call to Windows, it is possible to access the Windows high-resolution timer. This timer has sub-millisecond resolution.

Before developing the DLL ourselves, we checked the NI web site and voilà! Someone had already written it.
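For readers outside LabVIEW, the same counter is reachable from Python: on Windows, CPython implements `time.perf_counter` with `QueryPerformanceCounter`. A minimal timing helper (`time_it` is an illustrative name, not part of the NI VIs):

```python
import time


def time_it(func, *args):
    """Time one call with the highest-resolution monotonic counter Python exposes.

    Returns (result, elapsed milliseconds).
    """
    start = time.perf_counter_ns()
    result = func(*args)
    elapsed_ms = (time.perf_counter_ns() - start) / 1e6
    return result, elapsed_ms


# The counter's resolution as reported by the interpreter (well under 1 msec).
PERF_RESOLUTION_S = time.get_clock_info("perf_counter").resolution
```

For example, `time_it(time.sleep, 0.01)` reports a little over 10 msec.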

Since I think this is such a great and useful tool to have in your VI arsenal I am posting the NI VIs and source here.

-

:thumbup: Thanks to a great demo from our local NI rep: the PCI-5411 & 5421 series arbitrary waveform generator cards will do the trick. You can load up 2 million samples into the card and use 'scripting' to select which segment of memory to output. It even has markers that output a TTL pulse at a specific sample.

Kudos to NI, a very nice product.
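The segment-and-script idea can be modelled in a few lines of Python. This is purely a software model of the concept (one large waveform memory, named segments, a script that picks the playback order, and a marker at a chosen sample). It is not NI's scripting syntax or driver API, and every name in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: int    # offset into the onboard waveform memory, in samples
    length: int


def play_script(memory, segments, script, marker=None):
    """Concatenate the named memory segments in script order.

    `marker` is an optional (segment name, sample offset) pair; the function
    returns the output samples and the output index where the marker first fires.
    """
    output = []
    marker_index = None
    for name in script:
        seg = segments[name]
        if marker is not None and marker_index is None and marker[0] == name:
            marker_index = len(output) + marker[1]
        output.extend(memory[seg.start:seg.start + seg.length])
    return output, marker_index
```

Changing the response pattern then means changing the script, not reloading waveform data.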

-

I've had a project come to a grinding halt, mainly over a glossed-over requirement that is quite odd.

We need a DAQ card to output a stimulus signal (waveform) of varying types: not just classical sine and square waves, but a constructed waveform loaded into memory.

:!: Now the catch... we need to output a continuous signal that is used to 'stimulate' a sample unit under test. When a special spike signal is output to the test device, it 'responds' to the stimulus, and we then need to output a different waveform pattern to make the device believe it has affected the signal.

The problem is we need to

1. Output a continuous waveform pattern - then via a software trigger

2. Change the output to another pattern. All within a few msec.

What we're finding is that S- and E-Series DAQ cards were never intended for this scenario. Why? I'll tell you. Thinking we'd be clever, we are using both analog outputs and routing them to a signal input, so we can play one waveform and preload another for when the response is required.

A. You can't configure one waveform output to be software triggered and the other hardware triggered. DAQ Error results. This is true with Traditional DAQ & DAQmx.

B. The latency of the actual waveform output after a software trigger is atrocious! We're seeing 100 to 250 msec latency with 100% variance in the response (S-Series DAQ card on a P4 2.0 GHz processor, LabVIEW 7, DAQ 7.1). Hardware triggering is superb, but again, if you're already playing a waveform on one channel, which we are, you can't configure the other for hardware triggering.

The card's reaction time is so long and variable that we can't even measure the performance of the test device; we need reliability of better than 4 msec and want 1 msec. It's really odd that a megasample card performs so poorly.

The card we're using (6120) has 32 meg of waveform memory, but you can't load several waveforms into that memory and then play out a 'segment' of it, à la memory pointers. We're only looking at 5 kHz output sample rates.

We are now taking a look at the PCI-5411 arbitrary waveform cards, but the NI rep is telling us they won't perform either! They are recommending the FPGA card, but it has very little memory (1 meg) to store waveforms. Real-Time was another suggestion, but again you're using the same hardware.

It appears to be a driver issue; the driver is just not intended or optimized for this scenario. Using 2 DAQ cards will still have the problem, because the driver uses common memory buffers in the OS.

We've tried double-buffering, but it's CPU-intensive, and believe me, the system has many other things to do. We've got about 18 threads running in this code.
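A simplified Python model of double-buffered pattern switching helps show one place latency can come from. It assumes, as a simplification, that new data is accepted only a whole chunk at a time. In that case, a software trigger takes effect at the next chunk boundary, so the chunk size sets a floor on the switch latency. This is not a description of how NI-DAQ works internally, and the function names are hypothetical.

```python
def double_buffered_output(pattern_a, pattern_b, chunk, n_chunks, trigger_sample):
    """Fill the output chunk by chunk from the active pattern.

    A trigger at `trigger_sample` switches to pattern_b, but only for chunks
    that start at or after the trigger (i.e. at the next chunk boundary).
    """
    out = []
    for k in range(n_chunks):
        start = k * chunk
        active = pattern_b if start >= trigger_sample else pattern_a
        out.extend(active[(start + i) % len(active)] for i in range(chunk))
    return out


def switch_latency_samples(trigger_sample: int, chunk: int) -> int:
    """Samples from the trigger to the first sample written from the new pattern."""
    next_boundary = -(-trigger_sample // chunk) * chunk  # ceiling to a chunk boundary
    return next_boundary - trigger_sample
```

At 5 kHz, for example, a 500-sample chunk alone could delay a switch by up to 499 samples (about 100 msec) in this model, whatever the CPU is doing.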

Anyone have any other hardware suggestions? We've looked at some Agilent Waveform generators but they take about 30 seconds just to load 1.k of waveform data via GPIB - this is too long for us.

-

I don't recall 'complaining' about TCP/IP, just stating that to communicate between a real-time VI and the host PC - you need to use TCP/IP.

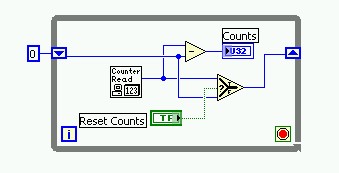

-

Reset the counts (in LabVIEW General)

Yes, you can, but you may lose counts during the reset of the counter. With my suggested method, you don't.

It depends upon your needs.
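One common way to avoid resetting a counter at all (not necessarily the method suggested in this thread) is to let it run freely and subtract successive readings. Modulo arithmetic keeps the difference correct across a single rollover. The 24-bit width below is an assumption; check your card's counter specification.

```python
COUNTER_BITS = 24                  # assumed counter width; check your card's spec
ROLLOVER = 1 << COUNTER_BITS


def counts_since(previous: int, current: int) -> int:
    """Counts accumulated between two reads of a free-running counter.

    Correct across one rollover, so the counter never needs resetting and
    no counts are lost in a reset window.
    """
    return (current - previous) % ROLLOVER
```

The only requirement is to read the counter at least once per rollover period.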