
Posts posted by Omar Mussa

-

Hi,

are you talking about closing dynamic or static subVIs?

If you are talking about dynamic subVIs, then do as John suggested, i.e. call "Close VI reference" on all opened references, but do it after you have closed all open front panels. If you don't close the front panels first, the VIs will stay in memory, causing the save dialog to appear when your application exits.

Then the question is: why don't you want to save these VIs? A VI that contains unsaved changes is loaded together with its front panel and block diagram and is then recompiled, i.e. it consumes much more memory and takes longer to load. If you have many VIs with unsaved changes, the main VI loads much more slowly.

/J

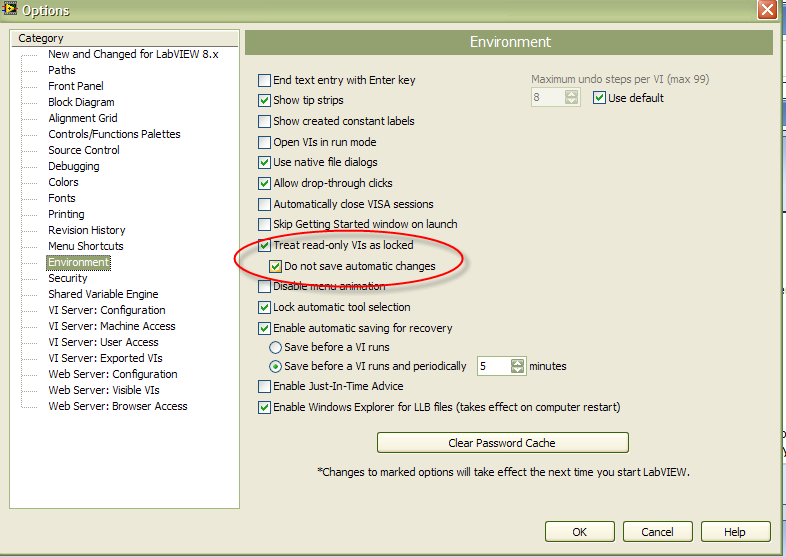

As you are running from the Development Environment, you may want to ask yourself: is the end user also going to run from the Development Environment, or from an EXE? If the answer is EXE, you shouldn't really have to care, because the dialogs will not appear. If you are going to run from the Development Environment, an easy way to prevent the dialogs is to make all of your VIs 'Read Only' AND check both "Treat Read Only VIs as Locked" and "Do not save automatic changes" in the LabVIEW Options.

-

Hi, I am back! Haaaaa,

I have run into a new problem with SQL operations. I have written some independent programs that fit my purposes, and now I want to use two input parameters

-

You should use events to do the trick. Check the examples below to get an idea of how you can use events to communicate between .NET and LabVIEW processes. You can either have LabVIEW generate a .NET event, or have a .NET event be caught by LabVIEW.

labview\examples\comm\dotnet\Events.llb\NET Event Callback for Calendar Control.vi

labview\examples\comm\dotnet\Events.llb\NET Event Callback for DataWatcher.vi

This is the best idea on this list.

-

Have you copied all the DLLs that the EXE looks for into the directory with the exe? Sorry I can't help you more, but like I said, I got the IT department to take care of my runtime problems for me. I don't have any blank machines to try your idea out with, the LV RTE is one of the first things I install.

Good Luck,

Chris

I tried to get this to work and I also failed. Luckily for me, my app was able to down-convert to 7.1 where the trick still works.

-

Good morning from sunny Massachusetts, USA...

I'm doing maintenance on an image processing/laser holography application written in LV7.0. The application is structured with LV calling a C# DLL (accessed via .NET constructor and invoke) that handles the heavy lifting of manipulating pixel data and adjusting the position of a few mechanical stages. Other than the C# aka .NET assembly being a little slow to start up, this approach works fine...

...until my client, after observing the system in action, said, "That's great. Could you add one more feature, namely, every so often the C# should log the value of the laser power meter?" The problem I'm facing is the laser power reading is being acquired by the 'parent' LV program, inside a while loop, so it's continuously available, but I don't know how to 'ask' LV for the value from my C# DLL. Could the forum suggest a mechanism to accomplish this?

I'd be glad to supply any additional info that might be useful. Thanks for your help.

Regards,

John

One of the easiest ways to do this would be to establish a TCP connection between the C# app and the LabVIEW app. The LabVIEW app could then listen for requests from the C# app, such as 'GET: POWER METER VALUE' (typically, you'd wait for requests in an idle frame or in a loop that reads the TCP buffer). The good thing about this method is that it's easy to scale up with more commands if the user decides they need other data from the LabVIEW app in the C# app. The downside is that the value the C# app receives can lag behind the current reading on the meter, so if your power meter value changes really fast and your system has timing constraints, this method may not work out too well.
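LabVIEW code is graphical, so here is a text sketch of the request/response idea in Python, with one function standing in for the LabVIEW listener and one for the C# client. It assumes a newline-terminated ASCII protocol and a table of command handlers; the handler dictionary, the port choice and the `latest_power` value are illustrative assumptions, not part of either application.

```python
import socket
import threading
from typing import Callable, Dict

# Command text from the post; the exact wording is whatever both sides agree on.
GET_POWER = "GET: POWER METER VALUE"


def serve_forever(server: socket.socket, handlers: Dict[str, Callable[[], str]]) -> None:
    """Stand-in for the LabVIEW side: answer newline-terminated text commands over TCP.

    Adding a feature later means adding one more entry to `handlers`.
    """
    while True:
        conn, _ = server.accept()
        with conn, \
                conn.makefile("r", encoding="ascii", newline="\n") as reader, \
                conn.makefile("w", encoding="ascii", newline="\n") as writer:
            for line in reader:  # one request per line until the client disconnects
                command = line.strip()
                handler = handlers.get(command)
                reply = handler() if handler else f"ERROR: unknown command {command!r}"
                writer.write(reply + "\n")
                writer.flush()


def request(host: str, port: int, command: str) -> str:
    """Stand-in for the C# side: send one command and wait for the one-line reply."""
    with socket.create_connection((host, port), timeout=2.0) as conn, \
            conn.makefile("r", encoding="ascii", newline="\n") as reader, \
            conn.makefile("w", encoding="ascii", newline="\n") as writer:
        writer.write(command + "\n")
        writer.flush()
        return reader.readline().strip()


if __name__ == "__main__":
    latest_power = 1.234  # hypothetical: the value the acquisition loop keeps updating
    server = socket.create_server(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    handlers = {GET_POWER: lambda: f"{latest_power:.3f}"}
    threading.Thread(target=serve_forever, args=(server, handlers), daemon=True).start()
    print(request("127.0.0.1", server.getsockname()[1], GET_POWER))  # prints 1.234
```

Keeping the commands in a lookup table is what makes the "easy to scale up" point concrete: a new request type is one more entry, and unknown commands get an explicit error reply instead of silence.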

-

Hello Khalid, all,

Thank you for your quick answer.

How I understand the situation until now is:

- if you have a sub and you want to pass a parameter to it, you assign a CONTROL of this sub to a terminal of the sub's connector pane, and that terminal becomes an INPUT. Later on you can connect a CONTROL from the main program to it.

- if you want to get a parameter from the sub, you assign an INDICATOR of this sub to a terminal of the sub's connector pane, and that terminal becomes an OUTPUT. Later on you can connect an INDICATOR from the main program to it.

Now, my problem is little different:

I want to pass the pointer (address) of my array to the sub, so that, WITHIN the sub, I will be able to:

1. Save the array to a file. In this case I use the pointer above as an INPUT. No array modification. Just get it from the main and save it.

2. Load new values into the array from a file. In this case I use the pointer above as an OUTPUT.

Questions:

How do I correct the sub attached to my first "e-mail" here so that it handles points 1 and 2 above?

How do I call the sub from the main program once it is corrected?

A quick answer will be greatly appreciated,

Thank you very much,

Marius

Basically, from your example code and questions, you need to learn more about data flow programming. I'd recommend reading LabVIEW for Everyone (in the interest of full disclosure, one of the authors is my boss). It is a good book and will get you past your initial LabVIEW hang-ups.

First, you have to understand one of the major points of data flow programming... data goes in, data goes out. Let me start with the problems you're having in sub_save_02.vi. You aren't able to read the data from your subVI because you are writing the value to a control (Initialized Array), and a control is an input: the calling VI only gets data back from indicators wired to the connector pane. Instead, create an indicator, write the data to it (call it Data Out or something similar so you can tell it apart from the data you write, Data In), and wire it to the connector pane of the subVI. You probably also want the File Path to be an input to your subVI so that you don't overwrite the same file every time; right now it's a constant. I would also replace the Boolean inputs to the subVI with a single Enum value (Numeric palette) and have a case for 'Read' and a case for 'Write'. The do-nothing case should be managed from your main VI (save_02A).
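Here is a rough text analog of the subVI being described, in Python: explicit inputs (the mode Enum, the file path, Data In) and one explicit output (Data Out), with nothing modified in place. The names `Mode` and `array_file` and the little-endian float64 file format are assumptions made for this sketch; LabVIEW's own binary file functions have their own defaults.

```python
import struct
import tempfile
from enum import Enum
from pathlib import Path
from typing import List


class Mode(Enum):
    """Plays the role of the suggested Enum with 'Read' and 'Write' cases."""
    READ = "Read"
    WRITE = "Write"


def array_file(mode: Mode, path: Path, data_in: List[float]) -> List[float]:
    """Text analog of the subVI: inputs are mode, path and data_in; the return value is Data Out.

    Nothing is modified in place: the caller gets data back only through the return value,
    the way a LabVIEW caller gets it back through an indicator on the connector pane.
    """
    if mode is Mode.WRITE:
        # Sketch-only file format: the values as consecutive little-endian float64s.
        path.write_bytes(struct.pack(f"<{len(data_in)}d", *data_in))
        return list(data_in)  # pass the data through unchanged
    raw = path.read_bytes()
    return list(struct.unpack(f"<{len(raw) // 8}d", raw))


if __name__ == "__main__":
    path = Path(tempfile.gettempdir()) / "array_demo.bin"  # hypothetical file location
    array_file(Mode.WRITE, path, [1.0, 2.5, -3.0])
    print(array_file(Mode.READ, path, []))  # [1.0, 2.5, -3.0]
```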

Second, your main VI (save_02A.vi) is a bit of a wreck as well (no offense). Instead of writing a long spiel about how to clean it up, or fixing it on your behalf, I'll refer you to the LabVIEW Examples section: take a look at the Read/Write Binary Data examples, and also at the state machine examples. If you don't find them in 7.1, you can definitely find them on the LAVA and NI websites. They will help you understand why, for example, your loop doesn't run more than one iteration.
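A state machine in LabVIEW is typically a While Loop around a Case Structure, with a shift register carrying the next state. Below is a minimal Python sketch of the same shape, assuming hypothetical states IDLE/WRITE/READ/STOP and a list of commands standing in for front panel button presses. The loop only ends when some state explicitly selects STOP.

```python
from enum import Enum, auto
from typing import Iterable, List


class State(Enum):
    IDLE = auto()
    WRITE = auto()
    READ = auto()
    STOP = auto()


def run(commands: Iterable[State]) -> List[str]:
    """Run the loop until a state selects STOP; return the states visited, in order."""
    pending = iter(commands)  # hypothetical stand-in for user button presses
    state = State.IDLE        # the value a shift register would carry between iterations
    visited = []
    while state is not State.STOP:
        visited.append(state.name)
        if state is State.IDLE:
            # Wait for the next user action; when there are none left, ask to stop.
            state = next(pending, State.STOP)
        else:
            # READ or WRITE: call the file subVI here (omitted), then go back to idle.
            state = State.IDLE
    return visited


if __name__ == "__main__":
    print(run([State.WRITE, State.READ]))
    # ['IDLE', 'WRITE', 'IDLE', 'READ', 'IDLE']
```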

Lastly, as a data flow language, LabVIEW doesn't support 'pointers' directly. If you want to make LabVIEW simulate pointers, you need to do other things, and I wouldn't recommend any of them based on your example code. Instead, just relax and remember that data is passed along the wires. It goes into the subVI, is modified (or not), and comes out of the subVI and back into your 'main' application. LabVIEW declares inputs and outputs explicitly, based on how you wire your front panel controls (inputs) and indicators (outputs) to the connector pane. Unlike other programming languages, where a function argument can be both an input and an output, in LabVIEW this is not the case (there are ways to make it behave like this, but they are not something you should do normally).

Good luck!

-

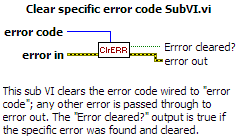

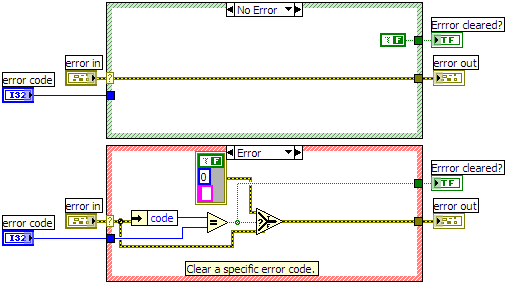

I have a small utility VI that allows me to trap a particular error and handle it silently. Here's a screenshot and the VI compiled for LabVIEW 8.0:

I don't know if clearing the error is the use case described. What you really want to do is log errors to a file... so if you clear the error, you've lost the info you need to log. Instead of using the code as presented here (an error filter), I would simply add some logic to write the error code, source and time to a log file (i.e., build an error log). You may or may not want to log all errors, so you may or may not need to input the error code. If you want to log all errors, you can place your error log function in the Error case of a case structure. After writing the error to file, you can clear the error by using Clear Errors.vi on the Time & Dialog palette, and your loop can keep on truckin'.
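A minimal Python sketch of the log-then-clear idea, assuming a hypothetical `ErrorCluster` type in place of LabVIEW's error cluster and a tab-separated text log. The optional `codes` set covers the "you may or may not need to input the error code" case; the sample error values are made up.

```python
import tempfile
import time
from dataclasses import dataclass
from pathlib import Path
from typing import Optional, Set


@dataclass
class ErrorCluster:
    """Hypothetical stand-in for LabVIEW's error cluster (status, code, source)."""
    status: bool = False
    code: int = 0
    source: str = ""


def log_and_clear(err: ErrorCluster, log_path: Path,
                  codes: Optional[Set[int]] = None) -> ErrorCluster:
    """Write an error's time, code and source to a log file, then return a cleared error.

    codes=None logs every error; otherwise only the listed codes are logged and cleared,
    and any other error is passed through untouched.
    """
    if not err.status:
        return err  # no error on the wire: nothing to do
    if codes is not None and err.code not in codes:
        return err  # not an error this handler owns
    stamp = time.strftime("%Y-%m-%d %H:%M:%S")
    with log_path.open("a", encoding="utf-8") as log:
        log.write(f"{stamp}\t{err.code}\t{err.source}\n")
    return ErrorCluster()  # the "cleared" error, playing the role of Clear Errors.vi


if __name__ == "__main__":
    log_file = Path(tempfile.gettempdir()) / "error_log.txt"  # hypothetical location
    err = ErrorCluster(status=True, code=56, source="TCP Read")  # sample error values
    err = log_and_clear(err, log_file)
    print(err.status)  # False: the loop can keep running
```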

-

A 1-bit number simply means its value can be 0 or 1. And luckily enough, a rose is a rose... If you use any of the integer data types and send a 0 or 1, your code should work. Reads and writes to that value shouldn't require any 'bit banging' because the value is just 0 or 1 (there is nothing to manipulate).

-

There are off-the-shelf instruments that do BER (bit error rate) testing. This is helpful because their methodologies are published and spec'd, and you can interface with them using TCP, GPIB, etc. Just Google "BER test instruments" and you should find enough info to get started.
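The figure such instruments report is the bit error rate: bits received in error divided by total bits compared. A small Python sketch of that arithmetic, assuming the transmitted and received streams are already aligned byte buffers (a real tester also has to synchronize the two streams, which this skips).

```python
def bit_error_rate(sent: bytes, received: bytes) -> float:
    """BER = (number of differing bits) / (total number of bits compared)."""
    if not sent or len(sent) != len(received):
        raise ValueError("need two non-empty buffers of equal length")
    # XOR leaves a 1 wherever the two bytes disagree; count those 1 bits.
    errors = sum(bin(a ^ b).count("1") for a, b in zip(sent, received))
    return errors / (8 * len(sent))


if __name__ == "__main__":
    print(bit_error_rate(b"\x00\xff", b"\x01\xff"))  # 1 bad bit out of 16 -> 0.0625
```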

-

Here's one that I wouldn't even consider a trick until somebody I was debugging some code with was recently wow'd by it...

While executing a VI:

If you right click on an Array Index Display of a control, indicator or probe, you can select 'Show Last Element' to go to the last element of the array.

In development mode:

If you want to look at the last element of an array control or indicator, right click on the Index Display and select Advanced-->Show Last Element (there is no 'Advanced' menu for probes so it always works by just right clicking).

-

Here's a way to get an annoying LV 8.20 bug to show up...

Create a chart with multiple plots and set Autoscaling to True, then set Plot Visible to False on the first plot. The autoscaling does not kick in for the remaining plots, so you end up with what looks like a blank graph (in my example... behavior could vary if you have a different scenario than mine).

I've attached a VI that demonstrates the bug. Run the VI, set Plot0 Visible = Off and watch the scale on the left. If you manually change the scale (set the Max Value = 15), you'll see that the data is still being plotted for Plot1 for a brief moment, but it will not stay visible because the autoscale bug will ruin your day. If you run the VI in LV 8.0, you'll get the expected behavior. I've attached the VI in 8.0 format so that you don't have to Save For Previous.

NI's suggested workaround:

"I believe that the easiest work around is to disable autoscaling all together. If this isn't an option, then you'll have to write an autoscaling routine that detects the range of data in the history and then sets the yscale.range property node accordingly."

CAR# 42UF33LG
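A sketch of the kind of autoscaling routine NI describes, written in Python for illustration: take the history of only the visible plots, find its min and max, and use that as the range you would write to the Y scale's range property with autoscaling turned off. The padding fraction and the fallback range for an empty or flat history are arbitrary choices in this sketch.

```python
from typing import Sequence, Tuple


def autoscale_range(history: Sequence[Sequence[float]], visible: Sequence[bool],
                    pad_fraction: float = 0.05) -> Tuple[float, float]:
    """Return a (min, max) Y range computed from the visible plots' history only."""
    values = [v for plot, shown in zip(history, visible) if shown for v in plot]
    if not values:
        return (0.0, 1.0)  # nothing visible: fall back to an arbitrary default range
    lo, hi = min(values), max(values)
    if lo == hi:  # flat data: open the range up so the trace is not drawn on the edge
        lo, hi = lo - 1.0, hi + 1.0
    pad = (hi - lo) * pad_fraction  # a little headroom above and below the data
    return (lo - pad, hi + pad)


if __name__ == "__main__":
    # Plot0 is hidden, so its large values must not stretch the scale.
    print(autoscale_range([[0.0, 100.0], [5.0, 15.0]], [False, True], pad_fraction=0.0))
    # (5.0, 15.0)
```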

-

Hi Everyone,

I've been using LabVIEW since 1998 and am really having more fun with it now than at any other time. I consider myself a power LabVIEW programmer, and I work at JKI (www.jameskring.com, www.jkisoft.com) with some of the best LabVIEW programmers you could hope to work with! I've been meaning to get involved with LAVA for several years but just never went for it. I actually met a lot of LAVA-ers (-ians, -ns, -ites,... members) at NI Week this year, got all pumped up, and then got sidetracked from posting.

So, as my first New Year's resolution (ok, I'm a bit early) I hope to add to this great community of LAVA-iathans!

Cheers!

deal with the problems in SQL commands

in Database and File IO


The reason why nobody has the ultimate 'SQL' library is that SQL is not completely standardized across different databases. The SQL syntax for an Oracle database is different from the syntax for a MySQL or SQL Server database. Each database has its own dialect based on its own optimizations, legacy code, etc. This makes it really difficult to create more than an interface to the database, which is what the Database Connectivity Toolkit is. Once you've connected to the database (which thankfully has been standardized across vendors through interfaces such as ADO), you are on your own to create SQL commands that get you useful info. The other thing that makes it difficult to create a 'universal' database library is that each database implementation is unique. Different constraints, different table structures, custom stored procedures, etc., make it pretty difficult to build a really flexible toolkit. Basically, this is where design patterns come into play. If you can get YOUR organization to adopt common design patterns, you can start building reusable SQL queries that will be flexible for YOUR solutions.
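To make the 'reusable SQL query' idea concrete (and to touch on the two-input-parameter question quoted earlier), here is a Python sketch that uses the standard-library sqlite3 module as a stand-in for the Database Connectivity Toolkit. The `readings` table, its columns and the query are hypothetical; the point is that the SQL text stays fixed and the two parameters are bound by the driver rather than concatenated into the string.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical reusable query: the SQL text is fixed and only the two parameters change.
# Placeholder syntax varies by driver ('?' here in sqlite3; some other drivers use '%s'),
# a small example of the cross-database differences described above.
READINGS_BETWEEN = "SELECT ts, value FROM readings WHERE ts >= ? AND ts < ? ORDER BY ts"


def readings_between(conn: sqlite3.Connection, start: str, end: str) -> List[Tuple[str, float]]:
    """Run the shared query with two input parameters bound by the driver."""
    return conn.execute(READINGS_BETWEEN, (start, end)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (ts TEXT, value REAL)")
    conn.executemany(
        "INSERT INTO readings VALUES (?, ?)",
        [("2007-01-01", 1.5), ("2007-01-02", 2.0), ("2007-01-03", 2.5)],
    )
    print(readings_between(conn, "2007-01-02", "2007-01-04"))
    # [('2007-01-02', 2.0), ('2007-01-03', 2.5)]
```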

It's no easy task and it often takes many iterations, but it can be useful in the long run. Good luck!