Posts posted by dannyt

-


A very minor comment: the Read Buffer context help is incorrect; it is a duplicate of the Read Element By Index context help.

-

I place all the VIPM packages I depend upon into a Mercurial repository that I created specifically for that purpose, and if I upgrade to a new version I ensure both versions are kept in the repository.

In an ideal world I would upgrade from the Community edition of VIPM to the Pro version and create VIPC files instead.

-

This might be way more than you are looking for in terms of functionality, but I am using a full cloud-based Test Data Management system called http://www.skywats.com/.

It is a cloud-based test results database with yield and trend analysis, UUT reports, root cause analysis and much, much more. They provide a number of different ways of getting test results into the system, including TestStand integration and a LabVIEW toolkit, and there is also a free trial.

-


Again, to stress one thing I mentioned in my post.

I have really found it helps to have all NICs always in an ACTIVE state, with the nice green link light lit, and that is why for £10-£50 I stick a cheap powered switch on the NIC, something like a Netgear GS105UK 5 port.

It seemed to me that if you just hung something like a UUT that was not always powered on straight off the NIC, then powering it on and making the NIC go from inactive to active made Windows do "things" that sometimes meant things did not work as expected or just took a damn long time. Windows XP seemed better than the newer versions.

Install and play with Wireshark; it can help tell you what is going where.

-

I know it makes me a slower coder, but when coding in LabVIEW I always use autotool, and if I want to switch it off I actually use the tools palette rather than tabbing. About the only thing I use on the keyboard is Ctrl-E; it is partly also the reason I do not use the quick-drop option.

I did have major problems with my mouse hand a few years ago and found that a left-handed Evoluent mouse really worked for me. Plus, much to my surprise as I am very left-handed, I found I could use a Logitech roller mouse with my right hand for things like web browsing or general screen use.

So now I have two mice connected to my PC: a left-handed Evoluent one for LabVIEW programming or anything where real precision is needed, and a roller ball on the right-hand side for anything else, so I am switching which hand does what throughout the day. As I said, it does make me slower and less efficient, but on the other hand it allows my brain to keep up with what my hands do.

EDIT. I just noticed that even when doing LabVIEW I use both mice. I use the roller ball to move the FP or BD around my screen or from one screen to the other, and automatically switch to my left hand when I start putting down wires. Wow, I had not realised I was doing that.

-

As has been said, you want to play around with the routing table on a Windows machine.

Read up about the route command https://technet.microsoft.com/en-gb/library/bb490991.aspx and Windows routing in general; you will need http://serverfault.com/questions/510487/windows-get-the-interface-number-of-a-nic to get the interface number for the IF argument of route add.

I have PCs fitted with two NICs. I ping out of NIC 1 to an IP wireless device, across the air into another IP wireless device and then back into NIC 2 on the same PC. With this setup I can also run Iperf across the air and telnet out of each NIC as desired. Without setting up the routing table correctly, I would in truth just ping and Iperf across the backplane of the PC from one NIC to the other, but the routing ensures I go out of one NIC.

I do all this with LabVIEW and route commands issued as System Exec calls, and it works well. I tend to delete all routes and then set up my own. If you do this, remember to also add in a route to your network for the main PC LAN or you cannot get to the internet or a local server :-)

Also note that if any NICs are disabled or enabled, Windows will try to be clever and sort out the routing as it sees fit and break your rules. To help overcome this I nearly always have a simple powered dumb Ethernet switch hanging on the NIC between my test device and PC; then if I power off my test device the NIC still sees a good active connection to something.
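For anyone who wants to script this outside LabVIEW, here is a rough Python sketch of the same idea: build one Windows `route ADD` command per NIC and hand it to the shell, much as a System Exec call would. The helper names, the addresses and the interface numbers are all made up for illustration; take the real interface numbers from `route print`. By default it only builds the commands and does not run them.

```python
import subprocess


def route_add_command(destination, netmask, gateway, interface_index, metric=1):
    """Build the argument list for one Windows `route ADD` call."""
    return [
        "route", "ADD", destination,
        "MASK", netmask,
        gateway,
        "METRIC", str(metric),
        "IF", str(interface_index),
    ]


def apply_routes(routes, dry_run=True):
    """Build a `route ADD` command per entry; run them only if dry_run is False."""
    commands = [route_add_command(**route) for route in routes]
    if not dry_run:
        for command in commands:
            # Changing the routing table needs an elevated (administrator) prompt.
            subprocess.run(command, check=True)
    return commands


# Hypothetical values: one route per test NIC. Remember a route for the
# main PC LAN as well if the existing routes have been deleted.
EXAMPLE_ROUTES = [
    {"destination": "192.168.10.0", "netmask": "255.255.255.0",
     "gateway": "192.168.10.1", "interface_index": 12},
    {"destination": "192.168.20.0", "netmask": "255.255.255.0",
     "gateway": "192.168.20.1", "interface_index": 14},
]
```

Calling `apply_routes(EXAMPLE_ROUTES)` returns the command lists so they can be checked by eye first; passing `dry_run=False` actually issues them.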

-


Been running it for a week now on my development PC and it seems OK with LabVIEW.

The update from Win 7 to Win 10 was problem free for me, but did take a long time.

My only real issue so far is with a Cisco VPN client that no longer works at all, so I still need to use a Windows 7 PC to get access to my factory-located test systems.

-


Yes, a big thanks from me as well; it gives me something to watch in the evenings.

-

Amazing news, has anybody else seen this?

http://sine.ni.com/nips/cds/view/p/lang/en/nid/213095 LabVIEW Home Bundle for Windows.

Great news from NI

-


You could add the information to the LabVIEW Wiki at http://labviewwiki.org/Home. I am never quite sure if this site is related to LAVA or not.

-

I never did a full list of the bitset mappings; I found what I wanted and left it at that. I did find this on the web, http://sthmac.magnet.fsu.edu/LV/ILVDigests/2003/12/06/Info-LabVIEW_Digest_2003-12-06_003.html, which might help you, but it may well be different for different LabVIEW versions.

I cannot get to grips with your idea of putting the property within the VIs you are interested in. The idea of putting in code that does not really have anything to do with the function you want your VI to perform does not sit well with me, but maybe that is just me.

You are doing something harder than my recompile check. In my case, the one or two VIs that actually needed to change would be edited, saved and closed. These VIs would then be committed to the source control system with a comment about the change just made. Then, by running the tool VI, I could get a list of all the VIs that the original edit had caused to recompile, and these could be saved and committed into the source control system with a comment saying they were recompiles. The nice thing in my case was that all the VIs were closed when I ran my tool.

In your case I think you want to get a list of the files in memory and then look at them all one at a time.

-

1

1

-

-

- Popular Post

- Popular Post

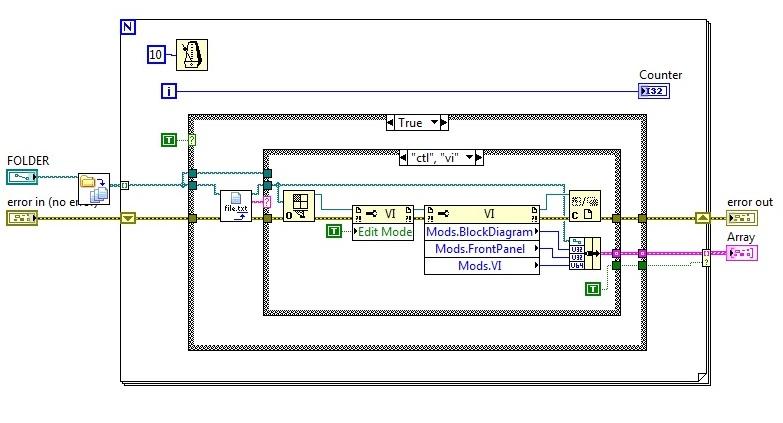

I am sure there was something for this. At my previous company, before the separate source and compiled code option existed, I used a scripting property to check the modification bits to look for VIs that only had "recompiled" as their change. Cannot remember what I did though.

EDIT Just found it. I would list all the files in a directory, then open one VI at a time in edit mode, and the VI had properties showing "Modifications -> Block Diagram Mods Bitset", "Modifications -> Front Panel Mods Bitset" and "Modifications -> VI Panel Mods Bitset". I had to play around a little to find out what the values meant, but I did get it working.
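The "playing around" part is mostly a matter of seeing which bits are set in each value. As a rough illustration only, here is a small Python sketch that lists the set bit positions of the three bitsets once they have been read out of LabVIEW. The function names are made up, the 32-bit width is an assumption, and the meaning of each individual bit is not documented here; it still has to be found by experiment and may differ between LabVIEW versions.

```python
def set_bit_positions(bitset, width=32):
    """Return the zero-based positions of the bits set in `bitset`.

    Assumes the value fits in `width` bits (32 is a guess); a negative
    input is read as a two's complement number of that width.
    """
    return [bit for bit in range(width) if (bitset >> bit) & 1]


def summarise_mods(block_diagram_mods, front_panel_mods, vi_mods):
    """Map each of the three bitset names to the list of bits set in it."""
    return {
        "Block Diagram Mods Bitset": set_bit_positions(block_diagram_mods),
        "Front Panel Mods Bitset": set_bit_positions(front_panel_mods),
        "VI Panel Mods Bitset": set_bit_positions(vi_mods),
    }
```

Making a known edit to a scratch VI, or forcing a recompile, and comparing the summaries before and after is one way to work out which bits matter for a recompile-only check.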

-


You may be right, but several decades ago I think only Alan Turing was into computers. I am very nearly that old; I certainly used punch cards when I started.

My experience covered that of a stand-alone Release Manager and of being part of a Release Management Team at two major financial institutions. In one we released around every 1-2 weeks, between 6pm and 6am while the trading markets were down, and in the other we released approximately every six months over a weekend, again while the trading markets were down.

In both cases the role covered doing the formal builds and releases of software to both the test environment and the live production systems; this was done mainly by automated scripts written by myself and the release team. We were the first port of call for the test teams when there were problems with the builds or environment, as we knew how the system went together, what changes had just been added and which programmers to go to if required. We coordinated and controlled the software changes by the programmers with the required database changes by the Oracle DB admin team and/or any Unix system changes required. Finally, we had to ensure we were happy that, in the case of a problem with a live production release, we could roll the release back to the previous working system.

The key thing though was to act as the glue between the test teams, the various principal engineers who were responsible for different aspects of the system, and the project managers. We were a team where everybody had a part to play to make a successful release occur.

I did not look at the test manager's test results, but did check he was happy that the testing cycle was complete and passed. I did not look at the programmers' code; the principal engineers made sure coding standards etc. were adhered to. I was not a Unix admin or an Oracle DBA, but I still needed to ensure they were in place and ready if needed.

At the end of it all I actually did the physical releases. I knew what went wrong and needed closer supervision next time, I knew who cut corners (always with the best of intentions) and needed to be encouraged not to, and I knew who could be relied on and who would always forget to provide an SQL roll-back script, or forget to tell the Unix admins about some dependency package needed as part of the deployment.

Another unthankful task I have done is that of SCC Manager (ClearCase). I am old enough to have had the arguments with programmers who directly refused to keep their code in the SCC system: "why do we need to bother with SCC, I just copy and rename my folders".

Finally, I am sorry that I seem to have gone off on a bit of a rant with this subject, and possibly bored people; this is not something I usually do. I just feel there are many roles involved in getting good quality software released to customers. Some are obvious in their impact and glory, others less so, and I do not like to see parts of the team that make it happen dismissed.

-

Your trust comment is valid, so not hot coals, but the engineer requesting the change does need to be able to explain and justify the requested change in a reasonably robust manner: cover why it is needed and what the basic change is, and have a reasonable discussion about the risks involved. But even people I trust make mistakes.

As I had already tried to point out before your comment, the hot coals was in jest, and I did go on to say "justify" the change. In my understanding the word justify implies evidence and rationalisation, not emotive argument, but maybe it is not seen to mean that by others.

I think there is a very narrow view or experience of a Release Manager's role being used here: that of somebody who lives outside of the development team and development process and has no technical ability or validity. That has not been my experience. I can remember a time when that was the case, but that is going back several decades.

-

So basically what you're saying is that we need to let an arbitrary length of time pass between making software changes and releasing it to the public.

No, not an arbitrary length of time, but a delay defined by your test cycle time, else you are sending out untested software. So you have to decide if the bug you're trying to fix is something you can live with or is a must-fix issue. There are legitimate reasons to allow known issues with a system to go out rather than risk a release that is not fully tested.

I am all for customer beta releases and testing, however there are many situations where that is just not possible. It is also not always possible to push out a fast release even if the developers can get the fix in place and it tests OK. There are plenty of real-world situations where a release and deployment of software is a very expensive and time-consuming process.

I'm a fan of releasing early and often. Yearly monolithic release cycles are big, scary and should be avoided. But hey, that's how most are used to working.

I totally agree with your statement above, but in the real world even small functionality drops to a large system can involve a lot of time and money, so they had better be right.

A manager requiring a passionate argument from a subordinate to make a decision is a test of belief and has nothing to do with the software or hardware. It's about one party convincing another about their conviction irrespective of fact. This is why I don't agree with the technique as a valid decision making process..

Who said anything about a passionate argument or beliefs? I did not. But they have to be able to present the facts, evidence and testing.

-

In the final run up to the release no change other than bug fixes should be allowed and even then the developer should be made to walk over blazing hot coals first to see that they really are serious in their commitment to them.

I should have added a smiley to the above line as I was aiming for humour.

I have never seen the Release Engineer role as simply a bureaucratic role; that is a very dated idea, like the "Quality Manager" roles of the good old defence industry days. A Release Manager should be working hand in hand with the Chief Engineer and the Test Manager, should such a position exist. A Release Manager should have a technical understanding of the project, technology, code and testing, and be fully a part of the decision-making process.

Your trust comment is valid, so not hot coals, but the engineer requesting the change does need to be able to explain and justify the requested change in a reasonably robust manner: cover why it is needed and what the basic change is, and have a reasonable discussion about the risks involved. But even people I trust make mistakes.

I would say that in general I am a very trusting and open person, but in large development teams or even multi-development teams there can be many agendas in play. I have actually experienced developers who, if not actually lying to me, certainly bent the truth because they saw their feature or change as more important than the project as a whole. I have even caught developers trying to circumvent fixed procedures and SCC systems.

I might now have a proper job, but the pay is not nearly as good.

Finally, the Release Manager needs to take into account the impact of the system they are releasing and scale their paranoia accordingly. The checks and balances for a small stand-alone test system are totally different from those for a system that handles a large percentage of the world's oil trades over three time zones and takes a weekend to deploy. In the latter case, if it screws up, saying "he is a very good developer and I trust him" does nobody any favours.

-

I have to strongly disagree; the timing of changes against the actual release date is key. Also, as you get nearer the release date, the types of changes allowed into the release should change.

In the early part of the release cycle the release manager should be locked away in a cage with a cover over it; the only gatekeeping should be your standard SCC control process, which should include things like nightly builds and automatic regression testing. At this time new "agreed" functionality is added and bug fixes are applied, and yes, it can and in some cases should be a free-for-all.

As the time to release gets nearer, the process changes. There comes a point where new functionality should no longer be allowed, and functionality already added that may not really work as expected, or at all, may need to be pulled from the release. This sort of decision is hard and is based on a mixture of risk against what the customer has been promised. The release manager at this point is out of his cage and working up to full paranoid mode.

By now your regression testing suite should be extensive, however you will also need to be doing manual testing and stressing of the system, as regression testing can never cover the full functionality of a system.

In the final run up to the release no change other than bug fixes should be allowed and even then the developer should be made to walk over blazing hot coals first to see that they really are serious in their commitment to them.

In my past I have worked as a release manager on several high-value financial systems (oil trading and European bond markets). The reality is that even following due diligence and good regression testing does not mean you have not introduced an unexpected problem. On the systems I was working on, a full test cycle to run through all the test cases was in the order of a week.

As a release manager, I have had many experiences of developers saying "I have found a bug and this is the fix, do not worry, nothing else will be affected", followed a week later by "ahh, I did not see that coming".

As a developer I have had experiences where I have found a bug and thought of a simple small fix, only to be caught out by an unexpected side effect or impact. Programming is not easy, especially in modern large systems, and even the very best developer can and at times will get it wrong.

So let's hear it for release managers, they are human too.

-


You have basically got it right: the huge leverage you normally get from using SCCs does not come when using LabVIEW with an SCC. However, that is not a reason or excuse NOT to use an SCC tool, as there are still significant benefits.

You just have to work in a different way when using LabVIEW; the same is true if you use an SCC for your Word documents, image files etc.

The LabVIEW integration you get using the "integrated" Tools/Options/Source Control/Provider option is really nothing more than you can get by using an unsupported SCC and putting your own tools in place.

So you will want to sort out VI diffs and merging; they exist and can be done. For myself I have never really got them functioning to a really useful extent, but I have not spent a great deal of time playing with them. I preferred to go down a route of decoupling people's workloads to avoid the need for them.

I have used LabVIEW with ClearCase and now work with Mercurial.

If you look around there are a lot of people using LabVIEW with Subversion and Mercurial (hg), and a growing number with git.

for git https://decibel.ni.com/content/groups/git-user-group/blog/2013/02/20/some-basic-git-info and https://decibel.ni.com/content/blogs/Matthew.Kelton/2011/09/30/labview-and-versionsource-code-control--introduction

for subversion https://decibel.ni.com/content/groups/large-labview-application-development/blog/2010/03/29/using-subversion-svn-with-labview

-

I do not know if this will be useful, but there is a really good presentation, with example code and a video, covering User Events in general and various pitfalls and misunderstandings.

The example code is here https://github.com/wirebirdlabs/LabVIEW-User-Events-Tips-Tricks-and-Sundry

I cannot find a link to the video, but the title is "Jack Dunaway_User Events Tips and Tricks" and I think it was given at NIWeek 2013 (try https://lavag.org/topic/17040-niweek-2013-videos/).

EDIT Sorry, I have just seen this has already been mentioned in one of the links you posted.

-

In REx - Send Command.vi, is there a reason you do not expose Wait For Response ? and Timeout, ms as controls, and Command Timed Out ? as an indicator, to the caller by default?

They would seem to be logical things that any calling VI would want to set or check.

-

I started using separate compiled code from LV2010 when it first came out and am still doing so in LV2014. I have never had any problems with this feature; on the contrary, it totally changed my opinion of LabVIEW and how it has become a 'real' programming language that is usable in a team development environment using proper source control tools.

I admit I have only been using it on Windows based platforms and though the projects have been big in number of VI's they have been quite simplistic compared to what a lot of you guys do.

-


I very much agree with the comment below

Running applications from source is a bad idea in almost all cases. I highly suggest building EXEs. That being said it is more work to make an EXE. Especially if for some reason the builder craps out and you need to start over again. Locking down an application is critical if you are taking any kind of measurement so you know a developer wasn't fudging the numbers, or adding filtering and averaging to make it pass.

and think you really need to look at going down the executable route for all your deployments.

You say you only have 4 developers, so by moving to a system of deploying executables you move yourself over to simply 4 LabVIEW 2014 licenses, maybe 5 if you want to get clever and have an automated build machine.

-

Does old man football count?

-

Very cool Norm. Just saw the presentation by Nancy and I can't wait to try this out.

Hi,

Very late I know, but I have just caught up with the presentation given by Nancy at NIWeek 2013, and the 2014 one as well.

Does anyone know if the example that was shown during this presentation, with REx communication to three different clients, is available for download? It looks quite interesting and I would love to have a closer look.

Malleable Buffer (seeing what VIMs can do)

in Code In-Development


Another minor comment for the next release.

I had an issue building an executable using the package, as it introduces a missing item within Malleable Buffer.lvproj

It is looking for

C:\Program Files (x86)\National Instruments\LabVIEW 2021\vi.lib\addons\functions_JDP_Science_lib_Malleable_Buffer.mnu and cannot find it.

There is a functions_JDP_Science_Malleable_Buffer.mnu in the addons folder.

I simply removed the functions_JDP_Science_lib_Malleable_Buffer.mnu from the project and it builds OK now.

I realize I have not said THANK YOU 🙂 🍺 for another great community toolkit.