Posts: 416

Days Won: 12

dannyt last won the day on February 12 2016

dannyt had the most liked content!

Profile Information

Gender: Male

Location: Devon UK

Interests:

Family Time

Power Kiting and Buggying (3.5 and 5 meter Beamers)

Reading (Iain Banks, Grisham, LotR-type stuff, The Time Traveller's Wife, David Gemmell, Robin Hobb)

Playing down the beach or on Dartmoor

TV and Films (24 & Battlestar Galactica)

LabVIEW Information

Version: LabVIEW 2018

Since: 2017


Malleable Buffer (seeing what VIMs can do)

dannyt replied to drjdpowell's topic in Code In-Development

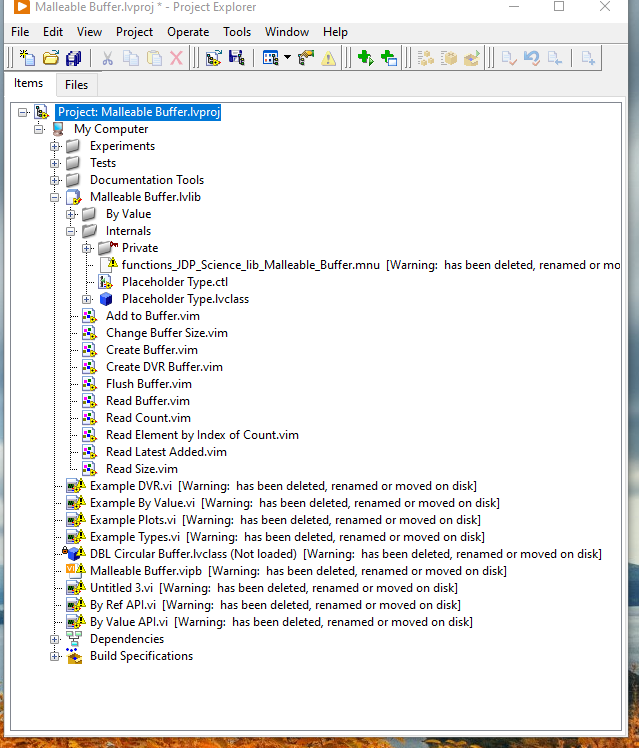

Another minor comment for the next release. I had an issue building an executable using the package, as it introduces a missing item within Malleable Buffer.lvproj. It is looking for C:\Program Files (x86)\National Instruments\LabVIEW 2021\vi.lib\addons\functions_JDP_Science_lib_Malleable_Buffer.mnu and cannot find it. There is a functions_JDP_Science_Malleable_Buffer.mnu in the addons folder. I simply removed functions_JDP_Science_lib_Malleable_Buffer.mnu from the project and it builds OK now. I realize I have not said THANK YOU 🙂 🍺 for another great community toolkit.

Malleable Buffer (seeing what VIMs can do)

dannyt replied to drjdpowell's topic in Code In-Development

A very minor comment: the Read Buffer context help is incorrect; it is a duplicate of the Read Element By Index context help.

-

I place all the VIPM packages I depend on into a Mercurial repository I created specifically for that purpose, and when I upgrade to a new version I make sure both versions are kept in the repository. In an ideal world I would upgrade from the Community Edition of VIPM to the Pro version and create VIPC files instead.

-

This might be way more than you are looking for in terms of functionality, but I am using a fully cloud-based test data management system called http://www.skywats.com/. It is a cloud-based test results database with yield and trend analysis, UUT reports, root cause analysis and much, much more. They provide a number of different ways of getting test results into the system, including TestStand integration and a LabVIEW toolkit, and there is also a free trial.

-

Again, to stress one thing I mentioned in my post: I have found it really helps to have all NICs always in an ACTIVE state, with the nice green link light lit, and that is why for £10-£50 I stick a cheap powered switch on the NIC, something like a Netgear GS105UK 5-port. It seemed to me that if you just hung something like a UUT that was not always powered on straight off the NIC, powering it on and making the NIC go from inactive to active made Windows do "things" that sometimes meant things did not work as expected or just took a damn long time. Windows XP seemed better than the newer versions. Install and play with Wireshark; it can help tell you what is going where.

-

I know it makes me a slower coder, but when coding in LabVIEW I always use autotool, and if I want to switch it off I actually use the Tools palette rather than tabbing. About the only keyboard shortcut I use is Ctrl-E, and that is partly also the reason I do not use Quick Drop. I had major problems with my mouse hand a few years ago and found that a left-handed Evoluent mouse really worked for me. Plus, much to my surprise as I am very left-handed, I found I could use a Logitech roller mouse with my right hand for things like web browsing or general screen use. So now I have two mice connected to my PC: a left-handed Evoluent one for LabVIEW programming or anything where real precision is needed, and a roller ball on the right-hand side for everything else, so I am switching which hand does what throughout the day. As I said, it does make me slower and less efficient, but on the other hand it allows my brain to keep up with what my hands do.

EDIT: I just noticed that even when doing LabVIEW I use both mice. I use the roller ball to move the FP or BD around my screen or from one screen to the other, and automatically switch to my left hand when I start putting down wires. Wow, I had not realised I was doing that.

-

As has been said, you want to play around with the routing table on the Windows machine. Read up about the route command https://technet.microsoft.com/en-gb/library/bb490991.aspx and Windows routing in general; you will need http://serverfault.com/questions/510487/windows-get-the-interface-number-of-a-nic to get the interface number for the IF argument of route add.

I have PCs fitted with two NICs. I ping out of NIC 1 to an IP wireless device, across the air into another IP wireless device, and then back into NIC 2 on the same PC. With this setup I can also run Iperf across the air and telnet out of each NIC as desired. Without setting up the routing table correctly, I would in truth just ping and Iperf across the backplane of the PC from one NIC to the other, but the routing ensures the traffic goes out of one NIC. I do all this in LabVIEW, with route commands issued as System Exec calls, and it works well.

I tend to delete all routes and then set up my own. If you do this, remember to also add a route to your network for the main PC LAN, or you cannot get to the internet or local servers :-) Also note that if any NICs are disabled or enabled, Windows will try to be clever and sort out the routing as it sees fit, breaking your rules. To help overcome this, I nearly always have a simple powered dumb Ethernet switch hanging on the NIC between my test device and the PC; then if I power off my test device, the NIC still sees a good active connection to something.
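To make the "delete everything, then add my own routes" idea concrete, here is a minimal sketch of building those commands. It is written in Python rather than LabVIEW so it can be shown as text, and it assumes the Windows syntax `route ADD <dest> MASK <mask> <gateway> METRIC <n> IF <index>` plus `route -f` to clear the table. The `Route`, `route_add`, `build_plan` and `apply` names, the addresses and the interface indices are all made-up placeholders; real indices come from the Interface List printed by `route print` on your own PC. By default it only prints the commands; in LabVIEW each argument list would instead be joined and passed to System Exec.

```python
import ipaddress
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class Route:
    dest: str       # destination host or network (placeholder values below)
    mask: str       # dotted-decimal netmask
    gateway: str    # next hop for this route
    if_index: int   # interface index as listed by "route print" (assumed)
    metric: int = 1


def route_add(r: Route) -> list[str]:
    """Build a Windows 'route ADD' argument list pinned to one interface."""
    # Fail early on typos, or on a dest that has host bits set for its mask.
    ipaddress.IPv4Network(f"{r.dest}/{r.mask}")
    ipaddress.IPv4Address(r.gateway)
    return ["route", "ADD", r.dest, "MASK", r.mask, r.gateway,
            "METRIC", str(r.metric), "IF", str(r.if_index)]


def build_plan(test_routes: list[Route], lan_routes: list[Route]) -> list[list[str]]:
    """Clear non-host routes, add per-NIC test routes, then restore LAN access."""
    plan = [["route", "-f"]]  # also removes the default route
    plan += [route_add(r) for r in test_routes]
    plan += [route_add(r) for r in lan_routes]
    return plan


def apply(plan: list[list[str]], dry_run: bool = True) -> None:
    for cmd in plan:
        if dry_run or sys.platform != "win32":
            print(" ".join(cmd))
        else:
            # Changing routes needs an elevated (administrator) process.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical layout: each wireless device reached via its own NIC.
    nic1 = Route("192.168.10.2", "255.255.255.255", "192.168.10.1", if_index=11)
    nic2 = Route("192.168.20.2", "255.255.255.255", "192.168.20.1", if_index=12)
    # Default route back out of the main LAN NIC, so internet/servers still work.
    lan = Route("0.0.0.0", "0.0.0.0", "10.0.0.1", if_index=4, metric=10)
    apply(build_plan([nic1, nic2], [lan]))  # dry run: prints commands only
```

Putting the clear first and the LAN route last follows the advice above: `route -f` takes the default route with it, so without that final entry you lose the internet and local servers.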

-

I have been running it for a week now on my development PC and it seems OK with LabVIEW. The upgrade from Win 7 to Win 10 was problem-free for me, but it did take a long time. My only real issue so far is with a Cisco VPN Client, which no longer works at all, so I still need to use a Windows 7 PC to get access to my factory-located test systems.

-

Yes, a big thanks from me as well; it gives me something to watch in the evenings.

-

Amazing news! Has anybody else seen this: http://sine.ni.com/nips/cds/view/p/lang/en/nid/213095 LabVIEW Home Bundle for Windows. Great news from NI.

-

Programmatic Access to the Explain Changes dialog?

dannyt replied to TimVargo's topic in VI Scripting

You could add the information to the LabVIEW Wiki at http://labviewwiki.org/Home although I am never quite sure whether that site is related to LAVA or not.

Programmatic Access to the Explain Changes dialog?

dannyt replied to TimVargo's topic in VI Scripting

I never did a full list of the bitset mappings; I found what I wanted and left it at that. I did find this on the web, which might help you: http://sthmac.magnet.fsu.edu/LV/ILVDigests/2003/12/06/Info-LabVIEW_Digest_2003-12-06_003.html but the mappings may well be different for different LabVIEW versions.

I cannot get to grips with your idea of putting the property within the VIs you are interested in. The idea of putting in code that has nothing really to do with the function you want your VI to perform does not sit well with me, but maybe that's just me.

You are doing something harder than my recompile check. In my case, the one or two VIs that actually needed to change would be edited, saved and closed. These VIs would then be committed to the source control system with a comment about the change just made. Then, by running the tool VI, I could get a list of all the VIs that the original edit had caused to recompile, and these could be saved and committed to the source control system with a comment saying they were recompiles. The nice thing in my case was that all the VIs were closed when I ran my tool. In your case I think you want to get a list of the files in memory and then look at them all one at a time.

Programmatic Access to the Explain Changes dialog?

dannyt replied to TimVargo's topic in VI Scripting

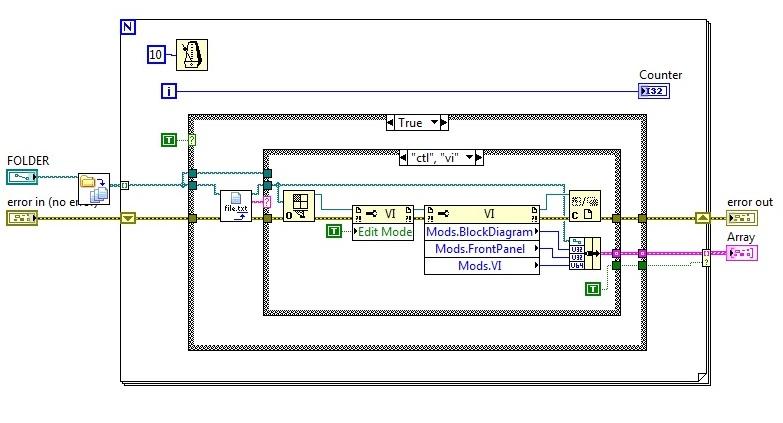

I am sure there was something for this. At my previous company, before the option to separate compiled code from source existed, I used a scripting property to check the modification bits and look for VIs that only had "recompiled" as their change. I cannot remember what I did, though.

EDIT: Just found it. I would list all the files in a directory, then open one VI at a time in edit mode; the VI has the properties "Modifications -> Block Diagram Mods Bitset", "Modifications -> Front Panel Mods Bitset" and "Modifications -> VI Panel Mods Bitset". I had to play around a little to find out what the values meant, but I did get it working.
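The recompile check described above can be sketched outside LabVIEW as well. This is a hypothetical Python version of the logic only: `read_bits` stands in for opening a VI in edit mode and reading the three Modifications bitset properties through a property node, and `recompile_mask` stands in for whichever bits you find, by experiment, correspond to a recompile-only change. Those bit meanings are not documented here and may differ between LabVIEW versions, so the mask and the sample values below are made up purely for illustration.

```python
from pathlib import Path
from typing import Callable, Iterable, NamedTuple


class ModBits(NamedTuple):
    """The three bitsets read from a VI opened in edit mode."""
    block_diagram: int  # Modifications -> Block Diagram Mods Bitset
    front_panel: int    # Modifications -> Front Panel Mods Bitset
    vi_panel: int       # Modifications -> VI Panel Mods Bitset


def list_vis(folder: Path) -> list[Path]:
    """List every .vi file under a folder (recursively), sorted."""
    return sorted(folder.rglob("*.vi"))


def is_recompile_only(bits: ModBits, recompile_mask: ModBits) -> bool:
    """True if the VI is modified, but only in bits covered by the mask."""
    modified = any(bits)
    # b & ~m keeps any set bit that is NOT part of the recompile mask.
    other_changes = any(b & ~m for b, m in zip(bits, recompile_mask))
    return modified and not other_changes


def recompile_only_vis(
    paths: Iterable[Path],
    read_bits: Callable[[Path], ModBits],
    recompile_mask: ModBits,
) -> list[Path]:
    """Check VIs one at a time (via read_bits) and keep the recompile-only ones."""
    return [p for p in paths if is_recompile_only(read_bits(p), recompile_mask)]


if __name__ == "__main__":
    # Fake data standing in for real property-node reads; values are made up.
    fake = {
        Path("edited.vi"): ModBits(0x2, 0x0, 0x0),
        Path("caller.vi"): ModBits(0x1, 0x0, 0x0),
        Path("untouched.vi"): ModBits(0x0, 0x0, 0x0),
    }
    mask = ModBits(0x1, 0x0, 0x0)  # hypothetical "recompiled" bit
    print(recompile_only_vis(fake, fake.__getitem__, mask))
```

With real data, `list_vis` would supply the paths and the resulting list is what gets committed with a "recompile" comment, separately from the VIs that were actually edited.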