Posts: 1,824 · Days Won: 83


Posts posted by Daklu


-

This page has the NI roadmap for OS support. Official Windows 7 support comes in March 2010.

That page shows NI dropping support for Mandrake. Anyone know why? (I don't use it, just curious.)

-

Twitter (like most internet traditions) is one of those things you either get, and love, or you don't.

Being a stereotypical antisocial introvert, I'm downright stumped as to why I would even care that somebody just bought a latte and "OMG! The barista was so rude!" or why anybody else would care that I "Closed 4 bugs today." I signed up solely so I can get up-to-the-minute information on any new stuff announced at NIWeek. (Hope nobody gets offended when I drop all their feeds afterwards.)

...and no, I don't have a Facebook account either.

-

3. Perhaps I can beat the OpenG Builder into some horribly disfigured mess that will do what I want.

I can't speak to 'best practices' since I haven't built a complex plugin architecture, but I have used OGB a lot. If I'm understanding you correctly, you don't have to beat OGB into submission to get it to do your build. (You might have to beat your dev process a bit, though...) The problem with OGB is that the 'Exclusions' tab takes precedence over the 'Source VIs' tab: any VI you list on 'Source VIs' that sits in the directory tree of a folder you have chosen to exclude will not be included in the build. To solve the problem, make sure the directory where you develop your plugins is outside the directory trees of any of the main sub VIs your plugins use. Then you can simply exclude the directories that contain the sub VIs you are reusing.

There are a couple of things I can suggest trying:

- Develop your plugins against distributed code instead of your source code. In other words, install the main application on your dev computer and link to those sub VIs instead of the sub VIs in your development directory. This is what I would do. Your source code tree is likely (and should be) in a completely different location than the install location, which makes excluding all the reused sub VIs a one-step operation. (Exclude the installation directory.)

- You implied there are lots of sub VIs you are reusing. If most of them are not in a parent directory of your Plugins directory, you can exclude them by excluding those directories. Then you can go through and individually exclude the few remaining reused VIs that live in parent directories.
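To make the precedence rule concrete, here is a small Python model of it. It is a sketch under stated assumptions, not OpenG Builder code: `build_contents` is a hypothetical function, paths are plain POSIX strings, and the model assumes only what is described above, namely that a VI is dropped whenever it sits anywhere under an excluded directory (or is excluded individually), even if it was listed as a source VI.

```python
from pathlib import PurePosixPath
from typing import Iterable, List


def build_contents(candidate_vis: Iterable[str],
                   excluded_dirs: Iterable[str] = (),
                   excluded_vis: Iterable[str] = ()) -> List[str]:
    """Hypothetical model: exclusions take precedence over inclusion.

    candidate_vis: the source VIs plus the sub VIs they pull in.
    excluded_dirs: a VI anywhere under one of these trees is dropped.
    excluded_vis:  individually excluded VIs.
    """
    dirs = [PurePosixPath(d) for d in excluded_dirs]
    singles = {PurePosixPath(v) for v in excluded_vis}
    kept = []
    for vi in candidate_vis:
        path = PurePosixPath(vi)
        if path in singles:
            continue
        # path.parents lists every ancestor directory, so this covers the whole tree.
        if any(d in path.parents for d in dirs):
            continue
        kept.append(vi)
    return kept


# Plugin developed inside the app's source tree: excluding that tree also drops the plugin.
print(build_contents(["/dev/app/plugins/MyPlugin.vi", "/dev/app/util/Log.vi"],
                     excluded_dirs=["/dev/app"]))  # []

# Plugin developed against the installed app: excluding the install dir is one step.
print(build_contents(["/dev/plugins/MyPlugin.vi", "/opt/app/util/Log.vi"],
                     excluded_dirs=["/opt/app"]))  # ['/dev/plugins/MyPlugin.vi']
```

The second call mirrors the first suggestion; the `excluded_vis` argument mirrors the second, where a few reused VIs in parent directories have to be excluded one by one.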

[Edit - This addresses how to build your plugins in a way to avoid including the reused sub vis. I'll leave the packaging to someone else.]

-

Nice try

Heh heh... maybe you can answer this one... is it something you've seen discussed frequently on LAVA? (Or ever discussed on LAVA?)

-

while this technique is certainly cool, it should only be used when appropriate!

So... under what circumstances is it appropriate?

-

guess that's why I'm not in marketing

But you could be. You've just done your consumer research and realized the product doesn't resonate with customers. That's more than 90% of the marketing ~~dweebs~~ experts ever do. Of course, you'd have to check your brain at the door when you get to work...

-

I think of Endevo when I hear GOOP.

Hmm... could be I was mixing up my OOP implementations. Go figure.

I think the next release of LabVIEW might make things even more interesting (if used appropriately - that'll be the real challenge...)

Oh come on... don't leave me hanging like that...


Interfaces? Mixins? Multiple inheritance? Friend classes? Hopefully there's more than just the extension onto... uhh... different run time platforms. (You know you want to tell me Chris... it'll just be between me and you... I won't tell a soul.)

-

Hi all,

Actually, I want to start GOOP programming. Can anyone help me with that?

I know basic LabVIEW programming.

FYI, object-oriented programming using native LabVIEW classes is typically referred to as "LVOOP." When people say "GOOP" they usually mean Sciware's code-generating toolkit, GOOP Developer. Using the accepted terminology will help avoid confusion when you come back with more questions.

-

Hmph... I guess this means I'll have to finally get off my Luddite arse and sign up for Twitter.

...freaking birds on the internet... Humbug!

-

Rusty nail.... Loving scripting... get the rusty nail through the heart..... shit... didn't think it was that hard to get.

I thought it was a railroad spike.

Even with your explanation it's still a WTF. The message I get out of it is more along the lines of "scripting broke my heart" or "scripting is dead and I'm heartbroken."

-

Norm,

Thanks for the update on EyesonVIs. So I have downloaded all the packages, but am unclear on where/how to install the LVSpeak llbs.

I have reviewed the VIPM online help but am not seeing how to tell it where to find the LVSpeak llbs. I am also unable to get it to connect to servers outside the corporate firewall, but I seem to gather that shouldn't be necessary. Also, only using the free VIPM.

After you download the LVSpeak packages, you need to install them using VI Package Manager before starting LabVIEW. In VIPM, go to File -> Add Package(s) to Package List... Once the LVSpeak packages are listed in VIPM, you can install them like any other package.

-

She said it was a very interesting but bizarre experience.

Just watching a Tim Burton movie is an interesting but bizarre experience... I can't imagine being in one.

-

I don't plan on having the user, use user.lib as a source code dev directory. I'm merely using it as a home for files that will be copied upon the start of a new *widget*.

I didn't mean to imply that you were. I made that comment mainly for newish LV users who might be wandering down the same path I did.

In the past I've wondered a bit about how to deal with the situation you're describing. The best solution I've come up with is to distribute the base widget code to two locations: user.lib, for using it in VIs directly, and another directory that serves as a source for copying. I've also considered (but never experimented with) setting up file or directory links (I forget what they're called) at the OS level and having the VIs in user.lib simply wrap the functions in the other directory. Unfortunately, all of these violate your original assumption, so I didn't mention them.
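The OS-level links mentioned above are what are usually called symbolic links (on Windows, a directory junction is a common alternative). Below is a minimal Python sketch of the linking part of the idea, assuming a hypothetical layout: `widget_templates` is the directory that serves as the source for copying, and a link inside a stand-in `user.lib` points at it, so both locations see the same files without a second copy. The function names are hypothetical, and the wrapper-VI part of the idea is not covered.

```python
import os
import tempfile
from pathlib import Path


def link_into_user_lib(source_dir: Path, user_lib: Path, name: str) -> Path:
    """Create a directory symlink user_lib/name -> source_dir and return the link path.

    On Windows, os.symlink needs Developer Mode or admin rights; a directory
    junction created with `mklink /J` is the usual alternative there.
    """
    link = user_lib / name
    os.symlink(source_dir, link, target_is_directory=True)
    return link


def demo() -> bool:
    # Builds the hypothetical layout in a temp dir and checks the file is visible via the link.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        source = root / "widget_templates"  # hypothetical copy-source directory
        user_lib = root / "user.lib"         # stand-in for LabVIEW's user.lib
        source.mkdir()
        user_lib.mkdir()
        (source / "Base Widget.vi").write_text("placeholder")
        link = link_into_user_lib(source, user_lib, "widget")
        return link.is_symlink() and (link / "Base Widget.vi").exists()


print(demo())
```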

SciWare's solution is definitely much more promising than anything I came up with.

Kudos!

-

I recognize this is a corner case, but am trying to see if anyone has encountered this issue before or if there is a better way of working around other than loading the vi hierarchy from their new location bottom up so that the callers see them in their new location first.

I have encountered that before, but it was because I wasn't properly separating source code and distribution code. (Using user.lib as a source code dev directory != good idea.) Once I adopted better project organization practices the problem went away.

-

Pure speculation here...

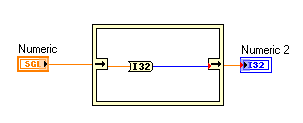

My guess is that in memory, the computer not only needs to know the value of the 32 bits, but it needs to know the type of data it is as well. It's been eons since I've done any C/C++ programming, but I believe changing the data type in place would mess up the pointers. They would think they are still pointing to a sgl but are in fact pointing to an int. ('New math' takes on a whole new meaning!) Therefore, type casting will always create a new buffer with a new pointer (and new pointer type) pointing to it.
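The bit-for-bit reinterpretation speculated about above can be illustrated outside LabVIEW. This Python sketch reinterprets the 32 bits of a single-precision float as a signed 32-bit integer; the result is a new value, and the original float is untouched. The helper name is hypothetical, and the sketch says nothing about how LabVIEW's Type Cast actually allocates buffers; that part remains speculation.

```python
import struct


def sgl_bits_as_i32(value: float) -> int:
    """Reinterpret the 32 bits of a single-precision float as a signed 32-bit integer."""
    raw = struct.pack("<f", value)        # 4 bytes: the IEEE 754 single-precision pattern
    (as_i32,) = struct.unpack("<i", raw)  # same 4 bytes, read as int32 into a new object
    return as_i32


x = 1.0
print(hex(sgl_bits_as_i32(x)))  # 0x3f800000
print(sgl_bits_as_i32(-2.0))    # -1073741824
print(x)                        # 1.0 (the original value is unchanged)
```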

-

Here is a great piece of knowledge base from Adam Kemp. Twas posted on Info LabVIEW earlier this year.

It may be the reference you are thinking of? The PowerPoint is a good read.

This might be what you're thinking of?

Yes on both counts. (I need to figure out a way to collect and organize all the tidbits of information I gather.)

-

For example, if you create a subVI with the usually-appropriate error case around it, there's no reason (other than aesthetics) to have all of your inputs outside of the case - they should be inside the "No Error" case (except for the error clusters of course).

Are you sure about that? I'm sure I've read that putting input controls inside a case statement prevents LV from making certain compiler optimizations. I can't find the reference at the moment, though.

If you have some controls outside, you're tempted to have all your indicators outside too, and then you have to handle what values go to them, even when the case that usually handles their value is never executed.

Meh... 'Use Default if Unwired'
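A text-language analogy may help readers who haven't seen the pattern. This Python sketch mimics a subVI wrapped in the error case: the inputs are used only in the "No Error" branch, and in the "Error" branch the output simply takes a default value instead of being computed. `ErrorCluster` and `scale_reading` are hypothetical names, and the sketch says nothing about LabVIEW's compiler optimizations or where controls should sit on the diagram.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ErrorCluster:
    """Hypothetical stand-in for LabVIEW's error cluster."""
    status: bool = False
    code: int = 0
    source: str = ""


def scale_reading(reading: float, gain: float,
                  error_in: ErrorCluster) -> Tuple[float, ErrorCluster]:
    if error_in.status:
        # "Error" case: do no work and pass the error through. The numeric output
        # falls back to its type's default, like a tunnel set to 'Use Default if Unwired'.
        return 0.0, error_in
    # "No Error" case: the inputs are only used here.
    return reading * gain, error_in


print(scale_reading(2.0, 3.0, ErrorCluster()))
print(scale_reading(2.0, 3.0, ErrorCluster(True, 1, "upstream")))
```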

-

As a general rule I always put subvi controls and indicators on the top-level diagram outside any structures. I believe that usually results in the most efficient compiled code.

In normal looping situations with n iterations, option 1 will typically outperform option 2. In option 2 every iteration creates two additional copies of Data In, for a total of three copies: one for the front panel control, one for the wire, and one for the front panel indicator. (Note: It will do this if the front panel is open. It may not if the front panel is not open... I don't recall for sure.) However, since this loop logically only ever executes once, it shouldn't make any difference in terms of memory allocation and data copying.

Another thing to look at is using for loops instead of while loops. I read somewhere that a for loop with a constant 1 produces more efficient code than a while loop with a constant TRUE. IIRC the compiler doesn't recognize that they are functionally equivalent and still generates a check at the end of the while loop iteration to see if it should continue.

-

How about create constant?

Or create anything near the edge of the structure. Or when you change a typedef and all your typedef constants reset to 'align vertical' and your structure grows to fit?

-

Now, what happens if, on the diagram of B:Increment.vi, we wire a C object directly to the Call Parent Method node? When we call "Get Numeric.vi" on that C object, we get the answer "1". That violates one of the invariants of C objects. The defined interface of the class is broken. And that's a problem.

Thanks for the clarification AQ. I admit that once I understood what you were saying (it only took a dozen reads) my immediate question was "so what?" I didn't see how it would have much impact in real world applications. I mean, sure it violates the absolute principle of encapsulation and it could cause some odd bugs in obscure corner cases, but is it anything to get alarmed about? Probably not, because in your example Class B (the parent) code was modified to violate Class C's (the child) defined interface. It appeared to require injecting a rogue class into the inheritance tree.

Then I read gb's comments. Now... it may take me a bit longer (okay... a lot longer) to get all the way around the block, but eventually I'll get there.

I started wondering if this could be used to exploit released code. I rigged up a simple class hierarchy with a Textbox object and Password object. This is certainly not a good example of secure coding practices, but it surprised me how easy it was to break into the Password class even though I didn't modify the Textbox or Password object in any way.
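The same kind of hole is easy to reproduce in a text language. In this Python analogy the class names come from the post, but their members are hypothetical, and Python places no restriction at all on calling a parent implementation with a child object, so it only illustrates the consequence, not LabVIEW's Call Parent Method rules: the child's override is what enforces its invariant, and going around it breaks the invariant without modifying either class.

```python
class Textbox:
    """Hypothetical parent class: stores and displays text."""

    def __init__(self, text: str) -> None:
        self._text = text

    def display(self) -> str:
        return self._text


class Password(Textbox):
    """Hypothetical child class whose contract is 'never show the stored text'."""

    def display(self) -> str:
        return "*" * len(self._text)


pw = Password("hunter2")
print(pw.display())         # *******
# Calling the parent's implementation directly on the child object bypasses
# the override, and with it the child's guarantee.
print(Textbox.display(pw))  # hunter2
```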

On the third hand, it makes my head hurt

Yeah, me too.

-

Please tell me that is NOT what you are doing. It sounds like it is, but it shouldn't be happening. There should never be a reason why you would ever want to do this. If you directly pass that D to the Call Parent Method, you are destroying the data integrity of your D object.

Can you explain what you mean by 'destroying the data integrity of your D object?' I wired up a simple project to try and follow through the different scenarios you described and I didn't see data internal to D get corrupted or reset.

Then my students come and say "ok, this is fine, but I want to measure over both field and temperature". So I think, I could just expand my program to work with 2 instances of class A separately, but somebody is bound to ask to iterate over 3 parameters, so instead the cunning way to do this is to make a new descendant class of A (B.lvclass) that takes an array of child classes of A and for each method simply calls the corresponding method for each element in my array of class A. Since dynamic despatch works, I'll call the correct method for whatever class is actually stored in my array. So I'm doing the second version of your list of operations below.

I'm far from an OOP expert so I may be way off base here, but that inheritance structure strikes me as odd. Class A is your GenericMeasurementObject and Class B is an array of GMOs. Doesn't that violate the "Class B is a Class A" rule of thumb for inheritance? What you're describing might work but I'm concerned your path will lead you off into the weeds (having spent much time there myself) with an application that is difficult to maintain or expand.
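For readers following along, here is the quoted design reduced to a Python sketch: a child class that holds a list of its parent type and forwards each method call to every element, relying on dynamic dispatch. All names are hypothetical. The arrangement is commonly known as the Composite pattern; the sketch shows the mechanics only and does not settle the "is a" question raised above.

```python
from typing import List


class Measurement:
    """Hypothetical base class ("class A" in the quote)."""

    def run_step(self) -> List[str]:
        raise NotImplementedError


class FieldSweep(Measurement):
    def run_step(self) -> List[str]:
        return ["set field"]


class TemperatureSweep(Measurement):
    def run_step(self) -> List[str]:
        return ["set temperature"]


class MultiSweep(Measurement):
    """Hypothetical "class B": a Measurement that holds other Measurements."""

    def __init__(self, children: List[Measurement]) -> None:
        self.children = list(children)

    def run_step(self) -> List[str]:
        log: List[str] = []
        for child in self.children:
            # Dynamic dispatch picks each child's own override.
            log.extend(child.run_step())
        return log


combo = MultiSweep([FieldSweep(), TemperatureSweep()])
print(combo.run_step())  # ['set field', 'set temperature']
```

Because `MultiSweep` is itself a `Measurement`, instances can be nested. Note that it forwards calls in sequence; it does not by itself produce nested sweeps over several parameters.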

-

What is this?!? Beat up on crelf week?!? Not that I don't like it

Isn't every day beat up on crelf day? No? Maybe it should be...

(Think of how high your post count would get responding to all those comments...)

-

Actually, it is a standard design pattern in LabVIEW. NI has presented it in many places. Most recently, it was in the spring 2009 Developer Days conference presentations for "Design Patterns in LabVIEW." The slides are here.

So is there a preferred way for a class to expose events to subscriber vis? Here are a few options I've considered but I'm not sure of all the tradeoffs associated with each.

Exposing a User Event - Easy to implement for the class developer, but forces the caller to handle all event registration duties, probably leading to duplicate code if events are registered in more than one place. Since user events have data types associated with them, this could be harder to implement in a complex class hierarchy.

Exposing a Message Queue - Aside from the UI polling issues, this seems similar to exposing a user event except it 'feels' like it would lead to more tightly coupled code. Different classes may have different message types. Event subscribers would have to implement separate event loops for each class.

Accepting an Event Registration Refnum - Have the class expose Register and Unregister methods that wrap the event registration prims. Event subscribers pass an event registration refnum to those methods. I think I would prefer this implementation, except that calling Unregister for Events removes all events from that refnum. The class has no way of knowing what other events are registered to that refnum, meaning the event subscriber has to implement bookkeeping and auto-reregistration routines.

Accepting Callback VI Refnums - Same idea as the event reg refnum, except the subscriber passes VI references to the registration methods. When the event fires, the class automatically executes the callback VIs. The problem with this is I haven't figured out a good way to pass data with the events. If I invoke the callbacks using a Call By Reference node, the callback has to finish executing before the thread returns to the class. When multiple callbacks are registered for a single event, one poorly written callback could significantly delay (or prevent) other callbacks from executing. Maybe the answer is to require the subscriber to query for data after the event. Any other ideas?
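One possible approach to the unregister-everything and blocking-callback problems, sketched here in Python rather than LabVIEW, is to give every subscriber its own handle and its own queue: registering returns both, unregistering removes only that handle, the event data travels in the queue, and firing never waits on subscriber code. `EventSource`, `register`, `unregister` and `fire` are hypothetical names, and `queue.Queue` stands in for a LabVIEW queue or user event; this is a sketch under those assumptions, not an NI API.

```python
import queue
import threading
from typing import Any, Dict, Tuple


class EventSource:
    """Hypothetical publisher: each subscriber registers for its own queue and handle."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._subscribers: Dict[int, "queue.Queue[Any]"] = {}
        self._next_handle = 0

    def register(self) -> Tuple[int, "queue.Queue[Any]"]:
        with self._lock:
            handle = self._next_handle
            self._next_handle += 1
            q: "queue.Queue[Any]" = queue.Queue()
            self._subscribers[handle] = q
        return handle, q

    def unregister(self, handle: int) -> None:
        # Removes only this subscriber; other registrations are untouched.
        with self._lock:
            self._subscribers.pop(handle, None)

    def fire(self, data: Any) -> None:
        # Enqueue the event data and return at once; a slow subscriber
        # only delays its own queue, not the publisher or other subscribers.
        with self._lock:
            targets = list(self._subscribers.values())
        for q in targets:
            q.put(data)


def demo() -> Tuple[list, list]:
    source = EventSource()
    h1, q1 = source.register()
    _, q2 = source.register()
    source.fire({"temperature": 21.5})
    source.unregister(h1)
    source.fire("second event")
    drained1 = [q1.get_nowait() for _ in range(q1.qsize())]
    drained2 = [q2.get_nowait() for _ in range(q2.qsize())]
    return drained1, drained2


print(demo())  # ([{'temperature': 21.5}], [{'temperature': 21.5}, 'second event'])
```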

-

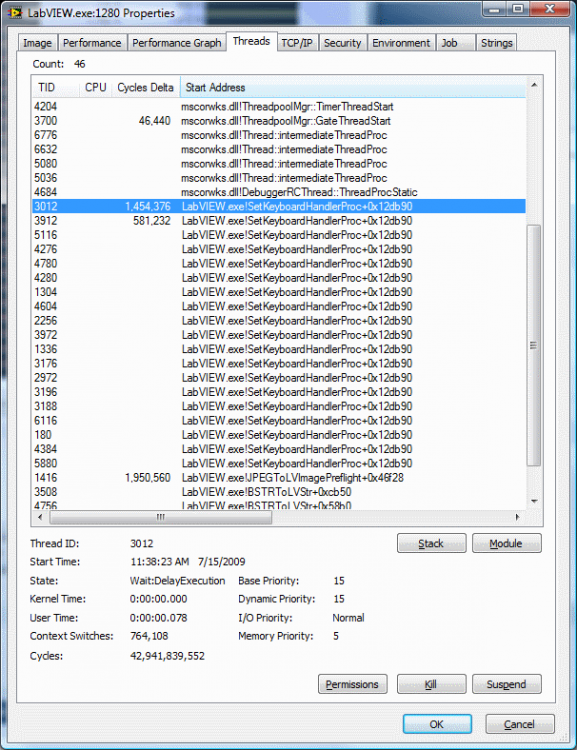

Process Explorer will list threads associated with an application. There is a lot of information provided about each thread, but it's not clear what it all means. Does anyone know if it's possible to relate a Process Explorer thread to a LabVIEW thread (e.g. Data Acquisition, Standard, etc.)?

Gary

In some cases it is possible to deduce which threads are performing certain actions. Looking at PE, the highlighted thread and the 15 others with the same name are the user threads. If you monitor this you can see which threads become active when you start a new parallel loop. It might be possible to identify certain LV primitives by examining function offsets on the thread call stack, although that would be a lot of work since the call stack is a snapshot and there's no guarantee the thread is executing that prim at any given moment.

It's also not always obvious from looking at the block diagram when LV will use one of those threads. A spinning loop uses one thread, but a spinning loop with a wait prim uses two and occasionally kicks in a third.

There is possibly one exception, which would be the UI Execution System, with always only one thread; this is the initial thread that was implicitly created by the OS when the LabVIEW process started up.

Is this the 'UI thread' often talked about on the forums? I've never quite understood if they meant the LabVIEW UI, the VI UI, or maybe even the Windows UI.

I'm also curious about who does the user thread management. I take it LV is responsible for deciding when to invoke one of the threads from the pool. Does LV also manage all of its own thread switching? Does the OS get involved in LV threads, or does it stay at the process level?
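As a rough analogy for the pool model described above (not a description of LabVIEW internals, which is exactly what is being asked), this Python sketch runs many small tasks on a fixed pool of OS threads and records which thread ran each one. `threading.get_native_id()` (Python 3.8+) returns the OS thread ID, so IDs logged this way can be matched against the TIDs Process Explorer lists. The function names are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Set


def which_thread(_: int) -> int:
    # OS-level thread ID of whichever pooled thread ran this task.
    return threading.get_native_id()


def threads_used(n_tasks: int, pool_size: int) -> Set[int]:
    # The executor, not the caller, decides which pooled thread runs each task;
    # the OS still schedules those threads onto CPU cores.
    with ThreadPoolExecutor(max_workers=pool_size, thread_name_prefix="user") as pool:
        return set(pool.map(which_thread, range(n_tasks)))


ids = threads_used(n_tasks=50, pool_size=4)
print(len(ids), "distinct OS threads ran 50 tasks")
```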

-

See who voted up your post (in Site Feedback & Support)

Hah! I knew there was a reason I hadn't signed up for twitter!

On the slightly more serious side, I have a real hard time feeling bad for somebody who got skunked while blatantly fishing for kudos. And if they then get offended because you didn't vote it up? Puh-leze. That person needs to get out a little more and develop a sense of self-worth that isn't based on an arbitrary rating from strangers.

PPPPS: Are you misusing the abbreviation for 'post script'?

PPPPPS: Yes, you are. ("Post script script script?")

PPPPPPS: Am I a total lamer for calling in the grammar police?

P^7S: Yes, I am.

This issue really makes me question the point of having kudos in the first place. Kudos are meant to reflect that someone has contributed to the LAVA community--either with humor, by helping others solve problems, sharing tools, etc. The minute social pressure contributes to the decision to give kudos is the minute they become absolutely worthless.

Personally, I think it is interesting to be able to trace kudos on the dark side. It gives me another way to find people and topics that match my interests. It also lets me discover that AQ's 29 kudos were all given to him by Darren and a lurker named 'Aristos Notifier.' It's a feature that would be nice to have.