Posts: 1,824

Days Won: 83


Posts posted by Daklu

-

I've resolved the problem. The root cause is one of the following, though I'm not sure which:

- The project file became corrupted or Labview got into some weird state that required a restart or reboot.

- I had control/project/vi files open that had not been saved, which broke everything.

It was probably the second, meaning Labview was saving me from my own idiocy.

QUOTE

Open the List Errors Window. In the list of files, scroll to the very top. The items with a red X are the items that are really broken. Everything else is broken as a result of the red X items.

Thanks for the tip AQ, that has helped a lot.

-

QUOTE

Do you have the Access Scope for the typedef set to Private? You have to make sure it's Public (or Protected, if you're only using it in related classes) or else VIs outside the class won't be able to access it.

It's not a scoping issue. This happens when the typedef and the vis that use it are within the same class. (But yes, I did try rescoping it anyway.)

QUOTE

However in addition to what Justin said, it may be that you are having some sort of type recursion that is not allowed.

Hmm... that could be. I'm using essentially the same data in my typedef as I am in my control private data, though I'm not using the typedef as the control data. One thing that is annoying is the number of errors that spawn in the error list whenever anything goes wrong. It makes it difficult for me to figure out what the real problem is.

QUOTE

Is it preferred/discouraged to put several classes within the same lvproj?

I think the lingo is throwing me off a bit. Classes and Project Libraries use the Project Explorer but are not projects themselves; at least, I haven't been considering them projects since they do not have a .lvproj file. Is this correct? The class hierarchy I'm developing is intended to be used by other developers in several battery testing systems. I intend the classes to be copied to the application directory rather than placed in user.lib.

On the one hand, these classes aren't a project in the sense that there isn't a complete application when finished. On the other hand, all the classes are related, so having them in a lvproj makes it easier to manage the group. If I have the class tree in a lvproj, does another developer need to use the entire class tree, or can they extract a single branch of the tree, assuming there are no sibling dependencies? (I'm thinking of the kind of restrictions that go along with project libraries... use one vi and you get them all.)

This really isn't a critical issue. Whether or not I place the classes in a lvproj seems to be a matter of personal preference. I'm really just trying to figure out if there are standards or best practices with this.

-

So I'm dipping my toe into the chilly waters of LVOOP and encountered something that doesn't work the way I expect. Is it a bug? Is it a 'feature?' Is Labview protecting me from my own idiocy?

In my class I have a vi that takes a cluster of data as an input. I would like this cluster to be a typedef that I could use internally in the class as well as make available to class users. However, any class vis that use the typedef as an input, as well as the typedef itself, break when the typedef is in the class structure. If I disconnect the typedef on member vis it works, or if I place the typedef outside the class hierarchy it works. Is this expected behavior?

Other questions I've been unable to find answers on:

- Is it possible to create a class or method as 'must inherit?'

- NI examples always show classes and lvlibs contained within a lvproj. Is there any particular reason for this other than being able to set up build specifications?

- Is it preferred/discouraged to put several classes within the same lvproj? For example, I have a Battery class which will serve as a parent for several specific battery child classes (e.g. Energizer, Duracell, etc.). Currently I am planning on putting them all in the same lvproj. Good? Bad?

-

QUOTE (BrokenArrow @ May 13 2008, 05:45 PM)

Well, I think that's true to the extent of complexity of the "thing" you're driving. I've used devices that just do one simple thing and the VI can fit on 1/3 of a screen, such as a high-current relay output board with just two relays. So to your point (or against it) I guess that would be an example of one VI being a well written driver.

QUOTE (BrokenArrow @ May 13 2008, 05:45 PM)

But I assert that it isn't a driver, but is a LabVIEW VI.

Why can't it be both? "VI" and "driver" are not mutually exclusive. A vi is simply a bunch of G code saved as a single file, so the term refers to where the code is. "Driver" refers to what the code does. The case you presented is both a vi and a driver.

I'm certainly not claiming my definition is the best there is, but it works well for me. I constantly struggle with "where should this code go?" when writing code. Keeping that definition in mind helps me make better decisions. It also easily answers the questions in AQ's post.

QUOTE (PaulG)

"The actual dll or C code is the "driver". Or am I full of pixie dust?"

If you're going to follow that path, is the source code the driver or the compiled code? If it calls the OS API to perform low-level functions, is the operating system the driver? What about drivers written in .NET and compiled into intermediate code? Drivers written in interpreted languages? What a mess...

The dll is a driver, and so is the Labview code wrapped around it. I see no problem with drivers having multiple layers. To refer back to my translator example, suppose I need to do business with someone from Russia but there are no English-Russian translators available. My Swedish translator has a friend who speaks Russian, so we use both of them to bridge the gap. My translator handles the English/Swedish translation and his friend handles the Swedish/Russian translation. Can I claim one of them is the "real" translator?

QUOTE (AQ)

What if the DLL is a LV-built DLL? Do the VIs in the DLL count as a driver?

It waddles, quacks, and floats on lakes... must be a driver.

-

To me a driver is a piece of software whose sole purpose is to pass information from an application's business layer to/from a piece of hardware. If the driver contains business logic it is no longer just a driver and becomes an integrated part of the application. Or as I call it... a PITA.

I see a driver performing much the same role as a translator. If I need to conduct business with someone in Japan, I find a translator so we can communicate. If the translator is making business decisions on his own, then when I get another translator to do business in Sweden my system breaks down. I need to train the Swedish translator to make the same decisions as the Japanese translator. I restrict the translator's function to translation.

As to the original question, "When is a VI a 'driver,'" I'd say a single vi is almost never a well-written driver. (I suppose there may be some trivial cases where a single vi could serve as a driver.) A good driver is generally a collection of vis. If your hardware has a single vi as a driver it likely needs to be broken up or rewritten.
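To make the translator idea concrete, here is a minimal sketch in Python (G code doesn't paste well as text). It assumes a hypothetical two-relay board with an invented text command set ("RLY1 ON", "RLY1?"); RelayBoardDriver, Transport, FakeTransport, and apply_charge_policy are illustrative names, not any NI or vendor API. The driver only translates, and the decision about when to close a relay lives in the business layer.

```python
from typing import Protocol


class Transport(Protocol):
    """Anything that can send a command string and return a reply string."""
    def query(self, command: str) -> str: ...


class RelayBoardDriver:
    """Hypothetical two-relay board driver: translation only, no business logic.

    The command syntax ("RLY<n> ON", "RLY<n>?") is invented for illustration.
    """

    RELAY_COUNT = 2

    def __init__(self, transport: Transport) -> None:
        self._transport = transport

    def _check(self, relay: int) -> None:
        if not 1 <= relay <= self.RELAY_COUNT:
            raise ValueError(f"relay must be 1..{self.RELAY_COUNT}, got {relay}")

    def set_relay(self, relay: int, closed: bool) -> None:
        self._check(relay)
        self._transport.query(f"RLY{relay} {'ON' if closed else 'OFF'}")

    def get_relay(self, relay: int) -> bool:
        self._check(relay)
        return self._transport.query(f"RLY{relay}?").strip() == "ON"


# Business layer (hypothetical policy): decides *when* to switch;
# the driver only knows *how*.
def apply_charge_policy(driver: RelayBoardDriver, voltage: float, limit: float) -> None:
    driver.set_relay(1, closed=voltage < limit)


class FakeTransport:
    """In-memory stand-in for real hardware, useful for testing."""
    def __init__(self) -> None:
        self.state = {1: "OFF", 2: "OFF"}

    def query(self, command: str) -> str:
        if command.endswith("?"):           # e.g. "RLY1?"
            return self.state[int(command[3])]
        name, value = command.split()       # e.g. "RLY1 ON"
        self.state[int(name[3:])] = value
        return ""
```

Because the driver takes its transport as a parameter, the fake stand-in lets you exercise the business logic without hardware, and switching to a different board means writing a new translator rather than retraining one.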

-

QUOTE (Jim Kring @ Apr 9 2008, 05:38 PM)

The installer build specification has its own version number, which I want to be identical to the version number of the EXE (since, it doesn't make sense to have a version number for the installer that is different from the version number of the EXE).

I was just poking around trying to figure out the whole Labview versioning system and come up with a good scheme to keep track of applications. It surprised me that the installer had its own version number, but when I experimented with changing the numbers I couldn't find where the installer version number is used.

I also discovered the VI property History:Revision Number isn't available when built into an executable. It makes it a little difficult to correlate what I see on the dev screen with what I see in compiled applications.

-

QUOTE (crelf @ Apr 9 2008, 06:21 PM)

When I read you post's title, I thought "Wow - this guys got 8451 VIs in a lvlib - that's a lot of VIs!"

Heh, I hadn't noticed that. I'll have to go back and fix that.

(Upon graduation they should give every engineer an English major to act as editor for life... it would help with the homeless problem. :laugh: )

-

I've been creating a Labview Library using VIs from NI's USB-8451 Interface module. I noticed that when I use the ni845xControl.ctl in a vi and wire it up so it can execute, I can't open the vi through the library interface. When I try, Labview stops responding and eventually crashes.

What I find odd is that I can open the vi through Windows' File Explorer just fine. Also, if the vi is wired incorrectly (the run arrow is broken) it loads correctly. In the attached file, the vi containing the control as a constant was loading correctly at first, but then it stopped.

I searched the known issues for 8451 and lvlib but didn't find anything. I also tested it on a second computer with the same results. Is there something obvious I'm missing?

-

Currently I'm going through all our Labview tools created over the last 2 years and archiving them in our source control system. Most of them are simply vi trees dumped in a single directory with many outdated and unused vis present. I'm switching them all over to 8.5 projects so I can create build specifications to ease my task.

My questions are:

- When storing projects in source control, what do you include as part of your project as opposed to leaving under dependencies? VIs from user.lib? instr.lib? vi.lib? DLLs?

- When you check in code do you create a zip build and check that in? (As near as I can tell you need to do that to collect the vis not in your project directory, unless you want to find them and add them all manually.)

- Does your strategy change when you are checking in code for a project currently under dev versus closing a project?

Many of these projects are being archived and if they are ever checked out again it's likely it will be by a different user on a different computer with different addons installed, etc.

-

I did some benchmarking to compare the standard unbundle method to JFM's boolean mask method.

Initially the unbundle method was faster, and its advantage grew the closer the item was to the beginning of the array. This makes sense, as it stops searching as soon as the item is found, while the masking method iterates through the entire array twice before searching. However, later testing with slightly different test code had the mask method consistently faster. Odd.

Unfortunately my thumbstick appears to have digested my code.
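The code is gone, but the two approaches can be sketched roughly in Python. This assumes the mask method means "compare the chosen field of every element to build a boolean array, then search that array for the first True"; JFM's actual implementation may differ. Python timings won't match LabVIEW's compiled primitives, so the sketch only shows where the difference in work comes from: the loop can exit early, while the mask always touches every element.

```python
import timeit
from typing import List, Tuple

# Each record mimics a two-element cluster: (number, string).
Record = Tuple[int, str]


def find_by_unbundle(records: List[Record], target: str) -> int:
    """Loop over the array and compare one field, stopping at the first match."""
    for index, (_, name) in enumerate(records):
        if name == target:
            return index
    return -1


def find_by_mask(records: List[Record], target: str) -> int:
    """Assumed mask approach: compare every element, then search the boolean array."""
    names = [name for _, name in records]       # pass 1: extract the field
    mask = [name == target for name in names]   # pass 2: compare every element
    try:
        return mask.index(True)                 # then search the mask
    except ValueError:
        return -1


if __name__ == "__main__":
    data = [(i, f"item{i}") for i in range(10_000)]
    for target in ("item10", "item9990"):       # near the start vs. near the end
        for fn in (find_by_unbundle, find_by_mask):
            seconds = timeit.timeit(lambda: fn(data, target), number=50)
            print(f"{fn.__name__:17s} {target:9s} {seconds:.4f} s")
```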

-

QUOTE (Norm Kirchner)

Will the data ever be displayed or was your thought to use the tree to only store the data in a hierarchical format?

It will never be displayed as a tree, although I will occasionally display parts of the tree in combo boxes. 99% of the time I use the tree I don't care about displaying it.

QUOTE (Norm Kirchner)

The reason I ask is what is the overhead you're speaking of, because to grab values/properties from the tree is very fast depending on what you're doing, and w/ the Variant based DB that I have integrated, if you're extracting and modifying information and adding information, the only overhead is the usual time associated w/ the variant operations.

[WARNING - Long boring application details follow]

This will be a little easier to explain if I give you an example of the data hierarchy and explain a little bit about how I implemented the application. One particular data file type I work with is organized into the following 'filters' (I'll explain the naming in a minute):

Test Number -> Speed -> Angle -> Offset

Each data file may have multiple Test Numbers, each of which may contain multiple Speeds, each of which may contain multiple Angles, each of which may contain multiple Offsets. It is important that I am able to have cousins with the same name, since I will have (for example) data with the same angle but different speeds. There will be several data points associated with each unique filter combination.

When I load the data I decode a 'tag' value recorded with each data point to determine what filter combination that data point belongs to. I group all the points with identical filter combinations into an array and bundle that array with a tree representation of the filter combination. This cluster I refer to as a 'Level 1 Element.' (Creative naming, I know.) I store all the data that has been loaded in an array of L1 elements. (Since multiple files of the same type of data are loaded, I prepend the filters above with a file alias filter to uniquely identify the data file, but we'll ignore that.)
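For comparison, in a text language the array of L1 elements would usually become a map keyed on the full filter path, which removes both the tree conversion and the linear search. (In LabVIEW, variant attributes keyed on a path string can play a similar lookup role.) A minimal Python sketch, assuming a hypothetical tag format of "test/speed/angle/offset", since the real tag encoding isn't described here; decode_tag and group_points are illustrative names:

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Full filter path: (test number, speed, angle, offset). Using the whole path
# as the key means two "cousins" with the same angle but different speeds
# can never collide.
FilterPath = Tuple[str, str, str, str]


def decode_tag(tag: str) -> FilterPath:
    """Hypothetical tag format 'test/speed/angle/offset'; real tags will differ."""
    test, speed, angle, offset = tag.split("/")
    return (test, speed, angle, offset)


def group_points(points: List[Tuple[str, float]]) -> Dict[FilterPath, List[float]]:
    """Group (tag, value) pairs into one bucket per unique filter combination.

    Each point costs one hash lookup instead of a scan over every
    existing group.
    """
    groups: Dict[FilterPath, List[float]] = defaultdict(list)
    for tag, value in points:
        groups[decode_tag(tag)].append(value)
    return dict(groups)
```

For example, the points ("T1/100/30/0", 0.5), ("T1/200/30/0", 0.7), and ("T1/100/30/0", 0.6) end up in two groups: the first and third share a key, and the second is a same-angle cousin under a different speed.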

My application is for data analysis. For example, I'll take a set of data and calculate the min, max, mean, and st dev of the error. The data set may include a single L1 element, or it may include an entire data file. Users need to be able to choose how much data to include in the data set when displaying data and calculating metrics. I populate combo boxes with appropriate filter values and users use the combo boxes to select the data they wish to view, hence 'filters.'
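If the data is held in a map keyed on the full filter path (test, speed, angle, offset), each combo-box selection becomes a partial path with "any" in the unselected slots. A minimal Python sketch, assuming None stands for "any value at this level" and that each map value is the list of error values for that combination; select and metrics are hypothetical helper names:

```python
import statistics
from typing import Dict, List, Optional, Sequence, Tuple

FilterPath = Tuple[str, ...]


def select(groups: Dict[FilterPath, List[float]],
           prefix: Sequence[Optional[str]]) -> List[float]:
    """Collect values whose path matches the filter.

    Only the first len(prefix) levels are compared; None means 'any value'.
    """
    selected: List[float] = []
    for path, values in groups.items():
        if all(want is None or want == have for want, have in zip(prefix, path)):
            selected.extend(values)
    return selected


def metrics(errors: List[float]) -> Dict[str, float]:
    """Min, max, mean and sample standard deviation of the selected errors."""
    return {
        "min": min(errors),
        "max": max(errors),
        "mean": statistics.mean(errors),
        "stdev": statistics.stdev(errors) if len(errors) > 1 else 0.0,
    }
```

A selection of ("T1",) pulls in a whole test number, while (None, None, "30") pulls every point at angle 30 regardless of test number or speed.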

Undoubtedly some of the overhead has to do with poorly written code and bad architecture on my part. These may not apply to your code, but some of the inefficiencies I've encountered are:

- Since I couldn't store a tree as a native type in the L1 element, I had to store it as a variant. But since you can't convert a tree control directly into a variant and maintain the structure, I built a pair of vis to convert between a variant and a tree control. Any time I want to operate on a tree I have to convert the variant back into a tree first. Let's say I have a data file with 10,000 data points and there are 100 unique filter combinations in this data set. For each data point I have to search through my L1 elements to find the one this point should be added to. On average I will have to search through 50 L1 elements before I find the one I want. That means I have to convert a variant to a tree 500,000 times just to load that data.

- Every time the user changes a filter combo box I have to do a variant -> tree conversion for each L1 element as I search the data store for the right data. (My initial implementation attempt stored the data hierarchically instead of as an array with a unique filter combination. That would have improved data searching efficiency but it got very confusing very quickly.)

- All the operations I'm interested in require a reference to the control. As I understand it, reference operations are inherently slower than operating directly on data. I might have to build thousands of individual tree branches for a given data set, and it appears to add up quickly. Simply deleting all the nodes in a tree seems to take an unusually long time. (Which I have to do often, since 'Reinit to Default' only changes the selected value in the tree and doesn't alter the structure at all.)

- My tree nodes are defined by the path, not by the node value. Due to my kissing cousins (cousins with the same name) knowing a node string doesn't do me any good unless I know the entire path. Because the tree control doesn't allow identical tags they have no value to me beyond using them for add/delete/etc. operations. I spend a lot of time finding the tag <-> string relationships, especially when checking a tree to see if a branch exists.

I'll be the first to admit I didn't spend a lot of time looking through your API. Perhaps I dismissed it too quickly without giving it a fair shake. I'll take another look at it. With the unique requirements for my tree, my hunch is that a simple, application-specific tree will work better than shoehorning it into a multipurpose tree API. (Of course, my hunches often get me in trouble...)

QUOTE (jdunham)

Don't be scared. You don't have to do anything with the classes.

I have looked at it since my last post and it clearly belongs in the deep end of the pool. (Where'd I put my floaties?) Assuming I could decipher it enough to use it, I'm not sure that implementation would work, since it appears to be a binary tree. Features like auto-balancing would also really mess me up.

QUOTE (jlokanis)

Let us know how you end up solving the problem!

This is what I've cooked up so far. It is far from a general tree implementation but I think it will do what I need. I haven't tested it much nor benchmarked it yet, so I don't know if it is better than what I've got. Changing the tree implementation is fairly major application surgery; I'll need to test the various solutions before wielding the knife.

-

QUOTE (Aristos Queue @ Mar 26 2008, 01:18 PM)

Swap the order of your numeric and your string in the cluster, and I'll bet the Search 1D Array prim slows down to be a lot closer to your for loop.

That's a good tip. Thanks! I hadn't thought about the order in which the cluster elements would get searched. Kind of a moot point in this particular case though, as the search prim doesn't work for what I'm trying to do.

(I coded it up as an example of how I imagine it would work.)

-

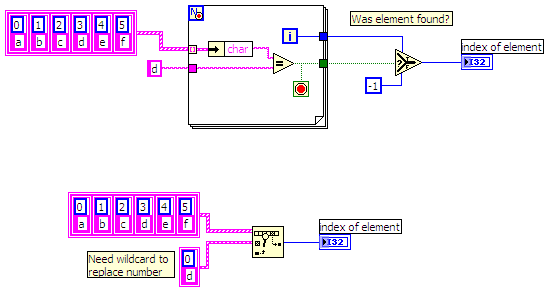

In my code I often have arrays of clusters in which I need to find the element that matches one piece of data in the cluster but the other pieces of data don't matter. For example, if I have an array of clusters in which each cluster contains a number and a string, I'll need to find the element in which the string matches a specific value but the number doesn't matter. (Often I won't even know the value of the number.)

I've always handled this by iterating through the array, unbundling each cluster, and comparing the strings. (Upper code line.) My benchmarking shows the search array prim to be ~20% faster than iterating for arrays that don't use clusters. Is there a way to use a wildcard in place of a value in a cluster that would allow me to use the search array prim? (Lower code line.)
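Text languages generally have no wildcard equality either; the usual equivalent of "search on one field, ignore the rest" is a predicate search, which is essentially the loop in the upper code line. A minimal Python sketch, with Entry standing in for the number/string cluster (the names are illustrative):

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Entry:
    """Stand-in for a cluster holding a number and a string."""
    number: int
    name: str


def find_by_name(entries: List[Entry], target: str) -> int:
    """Index of the first entry whose name matches, ignoring the number; -1 if none."""
    return next((i for i, e in enumerate(entries) if e.name == target), -1)
```

Whole-record equality on a dataclass compares fields in declaration order, much like the cluster comparison described in the reply above, so field order matters there but not in this single-field search.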

-

QUOTE (krypton @ Aug 8 2006, 12:05 AM)

As far as job hunting is concerned, it is better to get a wider range of selection. Get a certificate to get a job, or get a job and then get a certificate, which is better?

Certificate before a job, or job before a certificate? My personal experience is there's no way to predict what will impress a prospective employer, especially coming right out of college. For instance, when I graduated from college my first boss hired me in part because I had earned my private pilot license after 11 months of training. He thought that demonstrated perseverance and dedication. He was primarily looking for someone with good work habits and certain personality characteristics. Everything I needed to know I learned on the job.

Once you've been in the industry for a while, certificates may be useful or even expected if you are looking for a Labview-specific job, but I think they have limited value when looking for your first job. Rather than getting a certificate, I'd do projects, write a one-page summary about each one, and give those to your prospective employers. When documenting your projects be sure to include what problems you had and how you solved them. *Every* interview I have been on has asked me about how I overcame challenges.

My $.02

[Edit]

However, there's nothing wrong with getting the CLAD if the cost is not prohibitive. You can also purchase the course kits for intermediate training. They are expensive for a student budget but much more affordable than instructor-led training.

-

QUOTE (Aristos Queue @ Mar 24 2008, 07:08 PM)

-

QUOTE (crelf @ Mar 20 2008, 04:11 PM)

I did see that and looked at it a bit, but it also uses the tree control and carries the overhead associated with it that I'm trying to avoid. (I wish I had seen that before I cooked up my version though.)

QUOTE

Have you checked out the?

Interesting, but...

QUOTE (AQ)

THE CODE IN THIS .zip FILE IS REALLY ADVANCED USE CASE OF LabVIEW CLASSES. Novices to classes may be scared off from LabVIEW classes thinking that all the code is this 'magickal'.

...I'm scared. I understand the concepts of classes but have little practical experience with them. The discussion alone is over my head, much less the code. Maintaining it could be difficult. I'll have to play around with it when I'm ready to rewrite the tree section.

QUOTE (jlokanis)

The variant tree structure is fast for reading but slow for writting. So, if you build the data tree once and then read from it often, this is a good approach.

Lots of reading and writing. I guess I'll either have to go with AQ's map class or an array of clusters.

-

For my current project I have string data that I need to have organized into a tree structure. Since there is already a tree control I decided to use that and created a bunch of support VIs to do the operations I need, including decomposing the tree into a variant and vice versa. Now that I've done that, I've discovered it's much too slow. I suppose it shouldn't surprise me; there is an awful lot of overhead that isn't really necessary for my simple needs and there are tons of operations using references.

So my question is, what is a good way to simulate a tree structure without using the tree control? Two solutions immediately come to mind:

Store the Tree as an Array of Clusters - Each cluster in the array corresponds to a single tree node. The cluster contains the data string, the index to the parent node, and an array of indices to the child nodes. It seems like there are lots of advantages to using this method over the tree control: easier (and faster?) conversions between the data type and variants (with an array I might be able to skip using variants altogether), no jockeying with tags, the ability to act on the data directly instead of through references, etc.

Store the Tree as Variant Attributes - I found an .llb in the Code Repository written by John Lokanis that uses this type of implementation, although I haven't looked close enough to see how well it will fit my needs.
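A minimal Python sketch of the array-of-clusters option described above: each list element holds the data string, the parent index, and the child indices, and lookups walk the path from the root so cousins with the same name never collide. ArrayTree and its methods are illustrative names, and node removal is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One 'cluster' in the array: data string, parent index, child indices."""
    data: str
    parent: Optional[int]                      # None for the root
    children: List[int] = field(default_factory=list)


class ArrayTree:
    """Tree stored as a flat list of nodes; indices act as links."""

    def __init__(self, root: str = "") -> None:
        self.nodes: List[Node] = [Node(root, None)]

    def add_child(self, parent: int, data: str) -> int:
        self.nodes.append(Node(data, parent))
        index = len(self.nodes) - 1
        self.nodes[parent].children.append(index)
        return index

    def find_child(self, parent: int, data: str) -> Optional[int]:
        for child in self.nodes[parent].children:
            if self.nodes[child].data == data:
                return child
        return None

    def find_path(self, path: List[str]) -> Optional[int]:
        """Walk from the root; identical names under different parents stay distinct."""
        index = 0
        for name in path:
            found = self.find_child(index, name)
            if found is None:
                return None
            index = found
        return index

    def ensure_path(self, path: List[str]) -> int:
        """Return the node at `path`, creating any missing branches."""
        index = 0
        for name in path:
            found = self.find_child(index, name)
            index = self.add_child(index, name) if found is None else found
        return index

    def path_of(self, index: int) -> List[str]:
        """Rebuild the full path of a node by following parent indices."""
        path: List[str] = []
        parent = self.nodes[index].parent
        while parent is not None:
            path.append(self.nodes[index].data)
            index = parent
            parent = self.nodes[index].parent
        return path[::-1]
```

Checking whether a branch exists is just find_path(...) is not None, and no tags or control references are involved.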

Additional stream-of-thought questions:

- If I use an array, what's the best way to manage it? If I fill it sequentially I imagine it will get rather large and sparse after repeated add/remove operations. Hashing seems a bit overkill, assuming I could even come up with a decent algorithm. Perhaps preallocating an array and having it run through a compression routine when it fills up? Hmm, if I preallocate an array with default clusters how would I keep track of which element is the 'next empty' element as the array gets passed around? Reserve element 0 as a pointer? Encapsulate the whole thing as a variant and keep track of the pointer using an attribute? (ugh)

- If I implement it as variant attributes I'll have to convert it every time I want to do an operation on the tree. How does variant conversion compare to array access in terms of speed?

- Are there any other inherent advantages to using arrays or variants that make one "better" than the other for this?
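One common answer to the "next empty element" question is a free list: keep a stack of unused indices next to the array, pop from it on add, push onto it on remove, and grow the array only when the stack is empty. A minimal Python sketch under that assumption (SlotArray is a hypothetical name; in LabVIEW the array and the free-index stack could be bundled into one cluster). Because indices never move, any parent/child indices stored in nodes stay valid without a compression pass.

```python
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class SlotArray(Generic[T]):
    """Array that reuses freed slots via a free list.

    None marks an empty slot, so stored items must not be None.
    """

    def __init__(self, capacity: int) -> None:
        self.slots: List[Optional[T]] = [None] * capacity
        # Stack of free indices; pop() hands out the lowest index first.
        self.free: List[int] = list(range(capacity - 1, -1, -1))

    def add(self, item: T) -> int:
        if not self.free:
            self._grow()
        index = self.free.pop()
        self.slots[index] = item
        return index

    def remove(self, index: int) -> None:
        if self.slots[index] is None:
            raise KeyError(f"slot {index} is already empty")
        self.slots[index] = None
        self.free.append(index)

    def _grow(self) -> None:
        """Double the capacity; existing indices stay valid."""
        old = len(self.slots)
        new = max(1, old * 2)
        self.slots.extend([None] * (new - old))
        self.free.extend(range(new - 1, old - 1, -1))
```

The trade-off against preallocate-and-compress is a little extra bookkeeping per operation in exchange for never having to renumber nodes.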

Thanks.

-

Given that we've only been using Labview in my group for a little under two years, all the projects I can think of are either in 8.2 or 8.5. Any new projects I create are done in 8.5 although that is not the case for everyone here. Keep in mind, however, that I am the local "expert," having used Labview for all of 1.5 years.

Most of the users here have not heard of OpenG nor would know what to do with it if I sent them a link.

-

Since we're all professional programmers and do things strictly by the book, such as designing the application before coding, I'm curious what tools you use during the design process. On my current project I started with Excel but switched to Visio when it became apparent the application complexity (~100 VIs) wasn't easily captured in Excel. By using the shape data fields I can get Visio to sort of work, but it's difficult to model nested structures, variant attributes, and a few other things.

What I have now is a flowchart layout with blocks for each event case and each processing loop state. I'd like to be able to easily see notes associated with each block, such as what data it generates or changes. I'd also like to easily see notes describing the data types I'm passing between blocks, including variant attributes, type of variant, nested structures, etc.

[As I typed up this post I realized writing an architecture.vi would work much better than Visio. I don't think it's quite what I'm looking for but it's definitely a step up.]

-

Thanks for all the info and tips. I understand Labview references much better now.

-

(Apologies if I misuse the terminology. I'm still a little fuzzy on the language of Labview references.)

Labview documentation implies that you only need to close references that you specifically open using the Open VI Reference primitive. Is this acceptable programming?

I tend to use a lot of VI Server references to front panel objects in my code. (i.e. I right click on the control and create a reference, property node, or invoke node.) What are the implications of the following:

- Branching the reference. When I branch should I close each branch?

- Sending the reference to a sub vi. Should the sub vi send the reference back out for the calling vi to close? Is it okay to have the sub vi close it or simply leave it dangling?

- References within references. If I use a reference to a cluster to get an array of references to all the controls in the cluster, should I close the references in the array as well as the cluster reference?

- Aborting a vi. What happens to references during application development when I abort the vi rather than gracefully closing it?

Reading over my questions I guess it comes down to how Labview deals with copying references and garbage collection. I understand the concept of pointers in text languages but I'm not sure how Labview handles it.

How to get a control or indicator for an 8451 I2C Configuration Reference?

in Hardware

Topic says it. When I right click the NI 8451 vis or I2C Configuration property node, the options for creating a control, indicator, or constant are grayed out. The only way I've been able to get a Configuration Refnum as a control or indicator is to open NI's 8451 vis and copy from there.

Isn't there an easier way?