
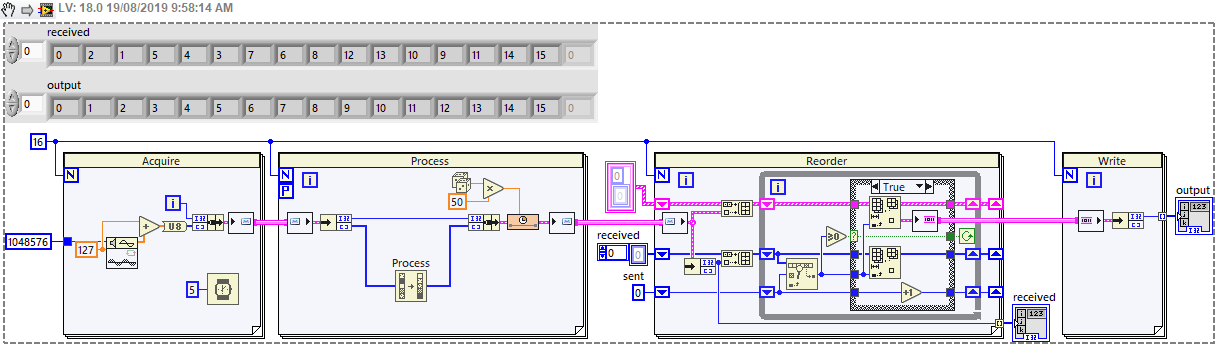

The Parallel For Loop is perfect for processing an input array in parallel and reassembling the results in the correct order; however, this only works if the array is available before the loop starts. There is no equivalent "Parallel While Loop" that could process a data stream, so what is the best architecture for doing this?

In my case, I'm streaming image data from a camera via an FPGA, acquiring 1 MB every ~5 ms (call this a "chunk" of data), and I know I will acquire N chunks (N could be 1000 or more). I then want to process (compress) this data before writing it to disk. The compression time varies, but it is longer than the acquisition time. So I'd like to have a group of tasks that each take chunks and return the results. However, the results are then no longer guaranteed to come back in the same order, so there's a bit of housekeeping to handle that.

I have a workable architecture using channels, but I'd be interested in any better options. It's easiest to explain with a simplified version that mimics the real program (image above). The processing has to use a Messenger channel (i.e. a queue), because a Stream channel cannot be used in a Parallel For Loop, but a Messenger channel doesn't maintain order. The reordering is also a little messy; perhaps it could be tidied up using Maps, but I don't have LabVIEW 2019 at the moment.

The full image is too large to keep in memory (I'm restricted to 32-bit because the acquisition is from an FPGA card), so I need to process and write the data as it becomes available. I've considered writing a separate file for each chunk, but writing millions of small files a day is not particularly efficient or sustainable.

Is there a better approach? Have I missed something? I feel like this must be a solved problem, but I haven't come across an equivalent example. Could there be a Parallel Stream Channel that maintains ordering, or a Parallel While Loop that handles a defined number of tasks?

Thanks,
Greg
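Since LabVIEW code is graphical, here is a minimal text sketch in Python of the same pattern, under stated assumptions: each chunk is tagged with its index, a bounded queue feeds a fixed pool of workers (the role the Messenger channel plays), and a reorder buffer keyed by chunk index (the role a Map would play) releases results to the writer strictly in acquisition order. All names are hypothetical: `chunks` stands in for the FPGA reads, `zlib.compress` stands in for the real compression, and the `write` callback stands in for the file write. Python threads are only a stand-in for the parallel loop instances.

```python
import queue
import threading
import zlib
from typing import Callable, Iterable


def ordered_parallel_compress(
    chunks: Iterable[bytes],
    n_chunks: int,
    write: Callable[[int, bytes], None],
    n_workers: int = 4,
    queue_depth: int = 8,
) -> None:
    """Compress chunks on a worker pool, but call write() in acquisition order.

    Hypothetical helper: assumes `chunks` yields exactly n_chunks items
    (N is known up front); if it yields fewer, the reorder loop blocks forever.
    """
    work_q: queue.Queue = queue.Queue(maxsize=queue_depth)  # bounded: throttles the producer
    result_q: queue.Queue = queue.Queue()

    def producer() -> None:
        # Tag each chunk with its index so the order can be restored later.
        for index, data in enumerate(chunks):
            work_q.put((index, data))
        for _ in range(n_workers):
            work_q.put(None)  # one stop sentinel per worker

    def worker() -> None:
        while (item := work_q.get()) is not None:
            index, data = item
            # Workers finish in any order; the index travels with the result.
            result_q.put((index, zlib.compress(data)))

    threads = [threading.Thread(target=producer)]
    threads += [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()

    # Reorder buffer: park out-of-order results in a dict keyed by chunk index,
    # then flush every consecutive result starting at next_index.
    pending = {}
    next_index = 0
    while next_index < n_chunks:
        index, compressed = result_q.get()
        pending[index] = compressed
        while next_index in pending:
            write(next_index, pending.pop(next_index))
            next_index += 1

    for t in threads:
        t.join()
```

For example, `ordered_parallel_compress(data, len(data), lambda i, c: out.append((i, c)))` appends the compressed chunks to `out` in index order, however the workers happen to finish. One design caveat: the bounded work queue limits how far acquisition can run ahead, but the `pending` dict can still grow if a single chunk is very slow; a hard memory cap would need an extra limit on chunks in flight (for instance a semaphore acquired per chunk and released in `write`).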