Search the Community

Showing results for tags 'large dataset'.

-

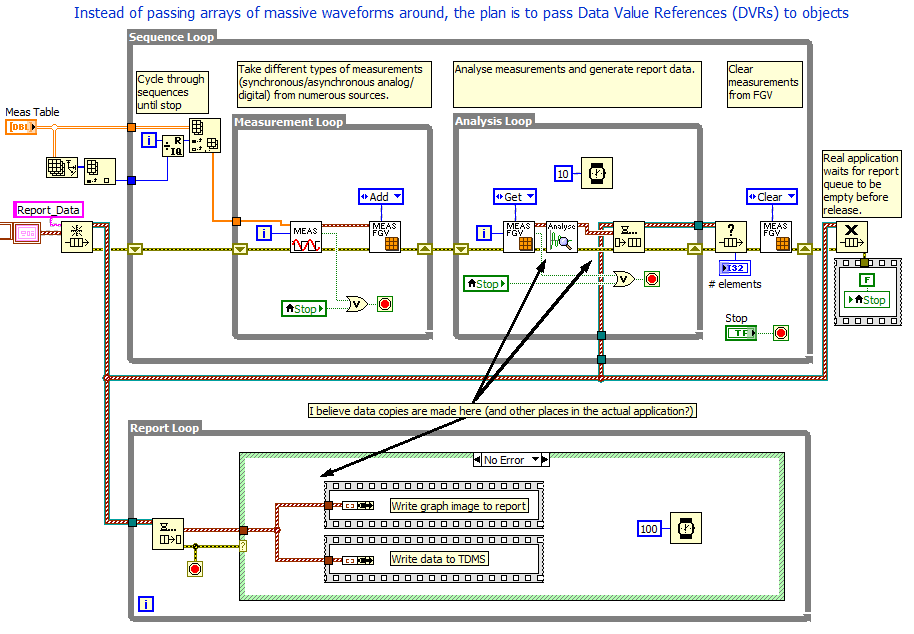

[cross posted to forums.ni.com] Greetings LVOOP masters. I realise this is not a simple question but I would really appreciate your opinion please. I'm trying to make some data handling improvements to a test sequencer application that I've written. User defined tables determine how much data is acquired. Long tests can easily generate 100's of MB's of data. Collecting, analyzing, reporting and saving that data tends to create copies and we can easily run out of memory. It's currently written without any LVOOP methodology but that is about to change. I'm new to OOP but have been reading alot. I have 2 objectives in refactoring the data handling VIs: 1) Enable different measurement types to be collected, analyzed and saved according to their unique properties. Presently any measurement type that isn't a dbl waveform is shoehorned into one and treated exactly the same. XY style data is resampled losing resolution and digital data is converted to dbl. This results in a lot of extra data being generated for no good reason other than to fit in a waveform. 2) Reduce data copies. Because the measurements are shuffled around from one module to another the copies become a problem. I'm hoping to use DVRs to the objects and pass around the references instead, reducing copies. I'm quite comfortable with objective 1, it's number 2 I'm not sure about. After reading this excellent whitepaper here I feel I need to get some expert advice on whether my approach is correct. In particular these two statements have raised questions in my mind: "Be aware: Reference types should be used extremely rarely in your VIs. Based on lots of looking at customer VIs, we in R&D estimate that of all the LabVIEW classes you ever create, less than 5% should require reference mechanisms." "LabVIEW does not have "spatial lifetime". LabVIEW has "temporal lifetime". A piece of data exists as long as it is needed and is gone when it is not needed any more." Q1. With that in mind, will all my effort to use DVRs actually reduce data copies significantly in my case? I plan to have a single FGV VI where the objects are created and stored in an array. Then when other modules need access to the data, a DVR is created and handed out. Once these modules are finished with the captured data, they flag it as deletable and the measurement FGV deletes the DVR and removes the object from the array. Q2. Is that the right way to go about it or am I missing the point of LVOOP and DVRs? Below is a simplified mockup of the way measurement data is currently used in the application. LVOOP Experts: Your feedback/suggestions are much appreciated. LVOOP DVR Measurement Optimization.zip