
-

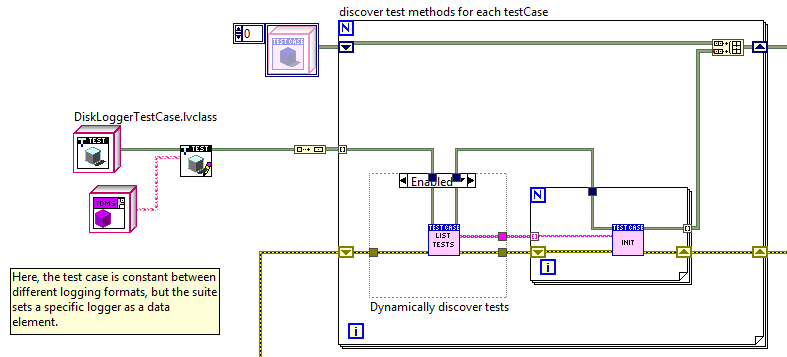

Hi, I'm writing unit tests for some logging code (OK... so I suppose testing the actual logging class would be integration testing, but...) and have (at present) two disk logging classes and an in-memory test implementation. My test suite/case arrangement is currently a bit of a mess (understatement), and what I really want to do is run the same set of tests against each logging implementation. Obviously I don't want to code up the same tests repeatedly (and have to maintain multiple copies).

The solution as I understand it is to store an object of the class under test in either the Suite or the Case (or both?) and then always read it from private data when running a test. Writing the individual tests in this way is fine. My confusion lies in the best way to arrange the Suites and Cases to do this.

In particular, if I have some DiskLoggerTestCase containing a collection of tests (for simplicity, all of my tests), with the Logger object as private data of the Case, then I can create a Suite with code like below in New.vi (duplicatedCasePerSuite.png). Here, I build an array of the Logging objects, then use a Write accessor and an autoindexing For loop to build the Suite. Problem: in the VI Tester UI, I see "DiskLoggerTestSuite:DiskLoggerTestCase, DiskLoggerTestSuite:DiskLoggerTestCase". There appears to be no way to distinguish the entries or name them separately (if this is incorrect, I'd love to know how to change it).

My second attempt (SuitePerImplementation.png) was to create a new Suite for the single TestCase, with each Suite having a new name but the same TestCase (using the Write accessor, without a For loop). This works, but means I now need to create a new Suite for each additional implementation. It's not so terrible, but changes have to be reproduced in each Suite if I want to modify something.
Further, if I have more than one TestCase, it isn't possible to place them in a loop without reorganising them to inherit from a shared class that provides the "Write Logging Object" VI as Dynamic Dispatch. Adding cases that don't use this requires a new loop to discover their test methods. Duplicate code again rears its head.

My next attempt will be to create one Suite of TestCases, in which the Suite has a Logging object and passes it to the necessary cases, whilst allowing me to then create a set of owning Suites that each contain the first Suite. This is probably more or less the same as the second approach, but should at least compartmentalise some of the changes needed when adding Test Cases, and separate adding Test Cases (a change to the first Suite) from adding implementations (adding a new Suite of the second type).

Does anyone know the way this should really be done? I feel I'm missing a good solution and just creating difficult-to-maintain messes.
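For comparison, here is how the same "one set of tests, many implementations" arrangement is often handled in a text-based xUnit framework. This is only a sketch in Python's `unittest`, not VI Tester code: `MemoryLogger`, `FileLogger`, `LoggerContract` and `build_suite` are hypothetical names invented for illustration. The shared tests live in one class, and a loop generates a separately named test case per implementation, which is the naming behaviour the duplicated-case approach above was missing.

```python
import os
import tempfile
import unittest


class MemoryLogger:
    """Hypothetical in-memory logger (stands in for the test implementation)."""

    def __init__(self):
        self._lines = []

    def write(self, message):
        self._lines.append(message)

    def read_all(self):
        return list(self._lines)

    def close(self):
        pass  # nothing to release


class FileLogger:
    """Hypothetical disk logger (stands in for one of the disk classes)."""

    def __init__(self):
        fd, self._path = tempfile.mkstemp(suffix=".log")
        os.close(fd)

    def write(self, message):
        with open(self._path, "a", encoding="utf-8") as handle:
            handle.write(message + "\n")

    def read_all(self):
        with open(self._path, encoding="utf-8") as handle:
            return handle.read().splitlines()

    def close(self):
        os.remove(self._path)


class LoggerContract:
    """Shared tests. Not a TestCase itself, so it is never collected alone."""

    logger_factory = None  # filled in by each generated subclass

    def setUp(self):
        self.logger = self.logger_factory()
        self.addCleanup(self.logger.close)

    def test_starts_empty(self):
        self.assertEqual(self.logger.read_all(), [])

    def test_preserves_order(self):
        self.logger.write("first")
        self.logger.write("second")
        self.assertEqual(self.logger.read_all(), ["first", "second"])


def build_suite(implementations):
    """Create one distinctly named TestCase class per implementation."""
    suite = unittest.TestSuite()
    loader = unittest.TestLoader()
    for factory in implementations:
        name = f"{factory.__name__}ContractTest"
        # staticmethod stops the factory being bound as an instance method.
        case = type(name, (LoggerContract, unittest.TestCase),
                    {"logger_factory": staticmethod(factory)})
        suite.addTests(loader.loadTestsFromTestCase(case))
    return suite


if __name__ == "__main__":
    unittest.TextTestRunner(verbosity=2).run(
        build_suite([MemoryLogger, FileLogger]))
```

Adding an implementation means adding one entry to the list passed to `build_suite`, and adding a test means adding one method to `LoggerContract`. Whether VI Tester allows an equivalent per-instance name is exactly the open question in the post.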

-

Hi, First, I think there should be a main topic called testing instead of, or alongside, TestStand.

I implemented a Hardware Abstraction Layer as described by NI and I think it is poorly designed for the following reasons: the ASL (application separation layer - API for general HW access) is not OO and lacks logic, error handling and stubs/mocks for simulation. I found that during my app development I waste a lot of time, since my HAL has no good testing framework with code reuse and each test unit needs the whole system to be configured and running.

Besides that, if I have no access to the hardware it is very hard to develop, since I need to write code, compile it, move it to a test machine, run and debug, then come back to LabVIEW and try to find the bug with no live input for the LV debug tools and without being able to exactly reproduce the scenario from the test machine. This process repeats until I build an ad hoc recording mechanism, which is not reusable for other features I develop.

Thus, I wonder, do you guys have an agile solution to this bad methodology? Is there a tool that will automatically create some mocks/stubs for my code? (A stub is a recording of the application's actions and responses. It can be used to virtualize the environment while actually working on a standalone application, so testing and development time is reduced.) I wrote such a recording mechanism for some of my features, but it is not general or integrated into the HAL design I copied from NI.

Thanks in advance, GOOfy Wires.
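To make the record-and-replay idea concrete, here is a minimal sketch in Python rather than LabVIEW. It is not part of NI's HAL reference design, and `FakeVoltmeter`, `RecordingProxy`, `ReplayStub` and `measure_average` are hypothetical names made up for this example. The proxy wraps any driver object on the machine that has the hardware and logs every call and its result. The stub then replays that log on a development machine with no hardware attached.

```python
import json


class FakeVoltmeter:
    """Hypothetical driver; stands in for real hardware on the test machine."""

    def __init__(self):
        self._readings = iter([1.0, 2.0, 3.0])

    def set_range(self, volts):
        return None

    def read(self):
        return next(self._readings)


class RecordingProxy:
    """Forwards calls to a real device and records (method, args, result)."""

    def __init__(self, device):
        self._device = device
        self.calls = []

    def __getattr__(self, name):
        if name.startswith("_"):
            raise AttributeError(name)
        target = getattr(self._device, name)

        def wrapper(*args):
            result = target(*args)
            self.calls.append(
                {"method": name, "args": list(args), "result": result})
            return result

        return wrapper

    def dump(self):
        """Serialise the recording so it can be moved between machines."""
        return json.dumps(self.calls)


class ReplayStub:
    """Answers calls from a recording, failing on any deviation from it."""

    def __init__(self, recording):
        self._pending = json.loads(recording)

    def __getattr__(self, name):
        if name.startswith("_"):
            raise AttributeError(name)

        def wrapper(*args):
            if not self._pending:
                raise AssertionError(f"unexpected call to {name}: log exhausted")
            expected = self._pending.pop(0)
            if expected["method"] != name or expected["args"] != list(args):
                raise AssertionError(
                    f"expected {expected['method']}{expected['args']}, "
                    f"got {name}{list(args)}")
            return expected["result"]

        return wrapper


def measure_average(meter, samples):
    """Application code under test; it only sees the driver interface."""
    meter.set_range(10)
    return sum(meter.read() for _ in range(samples)) / samples


if __name__ == "__main__":
    proxy = RecordingProxy(FakeVoltmeter())   # on the machine with hardware
    measure_average(proxy, 3)
    stub = ReplayStub(proxy.dump())           # on the development machine
    print(measure_average(stub, 3))
```

Because the proxy and the stub are generic over method names, the same two classes work for any driver whose arguments and results can be serialised as JSON, which addresses the "not reusable for other features" problem. A strict replay like this fails as soon as the application changes its call sequence, so it suits reproducing a captured scenario more than exploratory development.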