Louis Manfredi

Posts posted by Louis Manfredi

-

Hi Geeth:

I don't know offhand if the issues related to table navigation referred to in the 1998 message have been addressed or not-- I'm pretty sure not.

There is, however, a new tool that might be very helpful for working around the problem: the event structure can be used to capture the keystrokes and handle them as desired. I think this is new since the 1998 message was written.

Perhaps someone has already written an example that they could share :question: But if not it would be fairly straightforward (but somewhat time consuming) to write the code to trap all the arrow keys and handle them using the event structure.
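The arrow-key handling inside such an event case is not much logic. LabView code is graphical, so here is a rough Python sketch of what the key-handling case might compute. It assumes the event hands you a key name and the currently selected cell; the key names and the clamp-at-the-edges behavior are assumptions for illustration, not how the table control itself behaves:

```python
# Hypothetical key names; the real event data will use its own key codes.
MOVES = {
    "Up": (-1, 0),
    "Down": (1, 0),
    "Left": (0, -1),
    "Right": (0, 1),
}


def handle_key(key, cell, n_rows, n_cols):
    """Return the new (row, col) selection after an arrow key, clamped to the table."""
    if key not in MOVES:
        return cell  # not an arrow key: leave the selection alone
    d_row, d_col = MOVES[key]
    row = min(max(cell[0] + d_row, 0), n_rows - 1)
    col = min(max(cell[1] + d_col, 0), n_cols - 1)
    return (row, col)
```

Clamping at the edges is only one choice; wrapping to the next row would be just as easy to add in the same place.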

It might also be possible, and perhaps easier, to embed an Excel spreadsheet into the vi. I'm not a heavy user of OLE, but perhaps someone else has done that too.

Hope you get a more helpful response from others, but if not, think about using the event structure.

Best Regards, Louis

-

Hi Robert:

I agree with Michael, not just because of moving extra data around (a valid concern for big clusters), but also because providing the correct values for all the items you don't want to change ends up adding a lot of clutter to the calling vis, which can only make your program harder to read (never mind write).

However, for really simple clusters-- say something less complex than an error cluster-- you might want to do things the other way, for the sake of readability.

I've done it both ways, but more often the way Michael suggests. I'm interested in hearing other opinions.

Best regards, Louis

-

Hi Nexus:

The attached zip file contains some vi's for a different communications protocol you might find useful as a clue.

I think I've included enough for you to see the drift of things; let me know if the vi's arrive with a broken arrow or are otherwise unusable.

They are provided as-is and with no warranty or guarantee of anything.

Download File:post-1144-1112373848.zip

They were designed for a slightly different communications protocol: Variable message length, eight bit data. Shared comm. channel for multiple units with differing addresses.

Every message is of the form:

<DLE><STX><MESSAGE BODY><DLE><ETX><CHECKSUM>.

Typically, <MESSAGE BODY> contains <ADDRESS BYTE><MESSAGE TYPE BYTE>[<DATA BYTE(S)>]

If <MESSAGE BODY> contains an embedded <DLE> it is replaced with <DLE><DLE> (byte stuffed) so it isn't confused with the beginning or end of a message.
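The framing and byte stuffing just described can be sketched in a few lines. This is a Python illustration, not the attached vi's. DLE, STX and ETX are the standard ASCII control codes (0x10, 0x02, 0x03). The checksum is an assumption: the post doesn't say how it is computed, so this sketch uses an 8-bit sum of the unstuffed body and does not stuff the checksum byte itself.

```python
DLE, STX, ETX = 0x10, 0x02, 0x03  # standard ASCII control codes


def checksum(body: bytes) -> int:
    # ASSUMPTION: 8-bit sum of the unstuffed body; the real protocol may differ.
    return sum(body) & 0xFF


def stuff(body: bytes) -> bytes:
    """Double every DLE in the body so it can't be mistaken for a frame delimiter."""
    return body.replace(bytes([DLE]), bytes([DLE, DLE]))


def build_frame(address: int, msg_type: int, data: bytes = b"") -> bytes:
    """<DLE><STX><stuffed body><DLE><ETX><CHECKSUM>, body = address, type, data."""
    body = bytes([address, msg_type]) + data
    return bytes([DLE, STX]) + stuff(body) + bytes([DLE, ETX, checksum(body)])
```

For example, a message type of 0x10 happens to equal DLE, so `build_frame(0x01, 0x10, b"\x05")` sends that byte twice: `10 02 01 10 10 05 10 03 16`.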

Although different from your protocol, the examples should provide some help. Pointers to note:

1. Both my knowledge and LabView itself have progressed since these were written. There are some stylistic faults (use of globals, etc.) that are out of style now (or always were), but I haven't bothered to change them since the routines work for me.

2. The message receiver is a state machine. Error-tolerant communications receivers are a good example of a place where a state-machine architecture is a really helpful approach. (Some programmers will claim that everything should be done with state machines. I'm not so sure, but this is a pretty clear-cut case where it is useful.)

3. It's best to use a single message transmitter vi and a single receiver vi, called from all the places where the program communicates with the remote thingies. That way you can keep track of port use and don't end up using the port for two different messages at once. If messages are short and timing isn't super critical, make a single vi that calls the transmitter, waits for the response, then calls the receiver, and make it non-reentrant. It might not be the most efficient use of time, but it saves all kinds of headaches with sending two messages and getting two (scrambled) responses.

4. Generally, you only need to call the initialize routine once, when you boot your program.

5. There seem to be about a billion different CRC routines out there. A number of other forum members and I have posted lots of information on some of them in a forum thread that should show up in a search.
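To make point 2 concrete, here is a rough Python sketch of a state-machine receiver for the `<DLE><STX>...<DLE><ETX><CHECKSUM>` framing. It is an illustration, not a translation of the attached vi's. As with any sketch of this protocol, the checksum rule (8-bit sum of the unstuffed body) is an assumption. Each incoming byte moves the machine one step. Garbage outside a frame is ignored, a doubled DLE inside the body is unstuffed, and a bad checksum or illegal DLE sequence drops the frame and goes back to hunting for the next start.

```python
from enum import Enum, auto

DLE, STX, ETX = 0x10, 0x02, 0x03


class State(Enum):
    IDLE = auto()      # hunting for the leading DLE
    GOT_DLE = auto()   # saw DLE, expecting STX
    BODY = auto()      # collecting message body bytes
    BODY_DLE = auto()  # saw DLE inside the body
    CHECKSUM = auto()  # next byte is the checksum


class Receiver:
    def __init__(self):
        self.state = State.IDLE
        self.body = bytearray()
        self.messages = []  # complete, checksum-verified bodies
        self.errors = 0

    def feed(self, data: bytes):
        for b in data:
            self._step(b)

    def _step(self, b: int):
        s = self.state
        if s is State.IDLE:
            if b == DLE:
                self.state = State.GOT_DLE
        elif s is State.GOT_DLE:
            if b == STX:
                self.body.clear()
                self.state = State.BODY
            elif b != DLE:  # another DLE could itself be a frame start
                self.state = State.IDLE
        elif s is State.BODY:
            if b == DLE:
                self.state = State.BODY_DLE
            else:
                self.body.append(b)
        elif s is State.BODY_DLE:
            if b == DLE:  # stuffed DLE: keep one copy
                self.body.append(DLE)
                self.state = State.BODY
            elif b == ETX:
                self.state = State.CHECKSUM
            elif b == STX:  # new frame began mid-message: resync
                self.errors += 1
                self.body.clear()
                self.state = State.BODY
            else:  # illegal sequence: drop the frame
                self.errors += 1
                self.state = State.IDLE
        elif s is State.CHECKSUM:
            # ASSUMPTION: 8-bit sum of the unstuffed body.
            if b == sum(self.body) & 0xFF:
                self.messages.append(bytes(self.body))
            else:
                self.errors += 1
            self.state = State.IDLE
```

Feeding it bytes in whatever chunks the serial port delivers works, because all the progress is kept in `state` and `body` between calls.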

Hope this is at least a bit of a help, feel free to ask more questions if the examples aren't clear.

Best Regards, Louis

-

Hi Nexus:

I've done a fair amount of messing around with serial ports, as have many others in the forum.

Much of my work has been with byte stuffed protocols for eight bit data transmission & also with tricks to bypass Windows so I can get better timing control on outbound trigger messages.

Others in the forum can probably help better with other kinds of comm. protocols & issues.

Tell us a little more about your application, and I'm sure the right person will jump in.

Best Regards & good luck, Louis

-

Hi Michael:

I must be missing something, but I can't see why you need the two loops and the local variables.

Can't you just move the contents of the I/O loop into the main loop and wire the outputs of the sub-vi's directly into the add node? Then once the values for all the sub-vi outputs are updated (once every 100 ms) and ready, they will be posted out by the port output vi.

There is no point in running a separate port output loop more often than the sub-vi's provide new inputs. On the other hand, if you expect the various sub-vi's to provide output too transient to be detected by a 100 ms update, then they too need to be in a loop that is executed more often.

Hope I've helped at least a little,

Best Regards, Louis

-

Hi All:

I'd add a beep within the case structure of DAK's suggested vi, so the user knows the input is being truncated. I've used something similar in user interfaces in the past, and it seems to work OK.

Best Regards, Louis

-

Hi lambiek:

Here is a possible clue: I think that once the program enters the formula node, it will use the data that existed at the time it entered the node (unless it is changed within the node) until it exits. So your program will use the original value of cnt over and over, and depending on the initial value of cnt, it might never exit the formula node. I suspect that isn't what you intended? (It would also never exit the formula node to execute the wait till next ms operation.)

Also, once you get past that point, double check to see that the else if (cnt = 16000) comparison gets made when you want it to-- I suspect the cnt>5300 case will always block it.
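The blocking problem is easy to see in any C-like if/else-if chain. Here is a small Python sketch. The thresholds come from the post, but the function names and return values are made up for illustration. It is also worth checking the formula node code itself: in its C-like syntax, `==` is the comparison, and a single `=` is assignment.

```python
def classify(cnt):
    # Order as described: the broad test comes first...
    if cnt > 5300:
        return "above 5300"
    elif cnt == 16000:  # ...so this branch can never be reached
        return "exactly 16000"
    return "other"


def classify_fixed(cnt):
    # Test the specific case before the broad one that contains it.
    if cnt == 16000:
        return "exactly 16000"
    elif cnt > 5300:
        return "above 5300"
    return "other"
```

With 16000, the first version returns "above 5300" and the second returns "exactly 16000".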

Hope to have helped, Louis

-

Hi Ben:

I also find myself struggling with the simplest things in LabView. Funnily enough, I had the exact same problem on Friday, except on my front panel. Even though I've solved that problem many times before, I keep forgetting the answer, and it's always painfully obvious when I remember...

Click on the paintbrush in the tool palette, then click on the black (foreground) rectangle next to the paintbrush. When the pick color dialog box shows up, click on the transparent choice (the T box in the upper right corner). Then use the paintbrush to paint the box, and the edge of the box, which is a foreground item, will be changed to transparent. (And so forth to change the color of the text box or its border to whatever you prefer.)

The Tools>Options>Block Diagram>Use transparent name labels checkbox will change the default for new items you add, but it won't change what is already there, and I'm not even sure that everything you add will always follow the default.

Hope to have been of help.

Best Regards, Louis

-

Hi Dave:

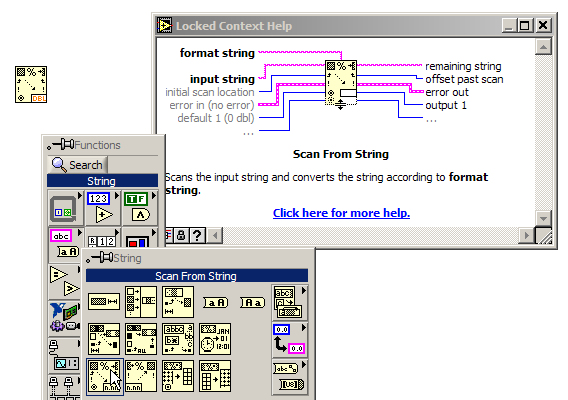

Assuming that you have the load cell data available within LabView in string form, Scan from string should help you convert the load cell data into a numeric form:

You'll need to merge that data with the other data. The easiest way might be to edit the routine you already have for recording the TC data and add the load cell data into it.

I can't hope to solve the whole problem, but I hope I've helped a little with at least one key part of it.
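For anyone doing the same string-to-number step outside LabView, a rough Python analogue of what Scan From String does here is to pull the first decimal number out of the reply. The reply format (for example `"LC  +123.45 lbf\r\n"`) is an assumption, since the instrument's actual output isn't shown. If its prefix contains digits, the pattern would need adjusting.

```python
import re

# ASSUMPTION: the reply contains one decimal number and no other digits before it.
NUMBER = re.compile(r"[-+]?\d+(?:\.\d+)?(?:[eE][-+]?\d+)?")


def parse_load(reply: str) -> float:
    """Return the first number found in a load cell reply string."""
    match = NUMBER.search(reply)
    if match is None:
        raise ValueError(f"no number in load cell reply: {reply!r}")
    return float(match.group())
```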

Best Regards, Louis

-

Hi John:

Thanks for the info--

I'm running 7.1.1 now, but come to think of it, this seems to have been going on for a long time... perhaps off and on since I switched from Macs to Windows :ninja: Perhaps I should check with NI support.

Anyone else having the problem currently :question:

Thanks, Lou

-

Hello All:

Sometimes when I'm working in LabView, it starts acting as if the Ctrl key on my keyboard is stuck.

Sounds an awful lot like a hardware problem, doesn't it :question:

But it only seems to happen with LabView.

Sometimes clicking the Ctrl key a lot makes the problem go away; sometimes I have to exit LabView to get rid of it.

Anyone else see anything like this?

Best Regards, Louis

-

Hi All:

Jeff's description of how the effect occurs seems very plausible to me.

I'm afraid I'm not at all comfortable with classifying this event as a non-bug, however.

My mental image of LabView is that anything (like a button) that can be visualized as a direct analog to a physical piece of electrical hardware should behave more or less identically to an idealized version of that hardware. So, when I push a hardware button it clicks, and for all intents and purposes the circuit closes at the same instant.

No matter what electronic circuits I use to observe the state of the button, or its transition from one state to another, the contacts on the button should change state at the same moment the button is pushed. And if a transition from not-pushed to pushed occurs, any observation of the switch contacts triggered by that transition should correctly indicate that the transition has, in fact, occurred: that the button is now in the pushed state.

To my thinking, once the LabView kernel process (or whatever) starts to process the mouse-down event it should inhibit all interrupts until both the event firing and the new state of the button are set to the correct values.
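The guarantee being asked for, that the new state is in place before anyone can react to the event, corresponds to the ordinary "update shared state, then publish, under a lock" pattern. Here is a minimal Python sketch of that idea. It illustrates the requested behavior only and makes no claim about how LabView works internally.

```python
import queue
import threading


class Button:
    """Button whose state change and event are published as one atomic step."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pressed = False
        self.events = queue.Queue()

    def press(self):
        # Set the state *before* posting the event, both under the lock, so any
        # handler that reacts to the event already sees the new state.
        with self._lock:
            self._pressed = True
            self.events.put("pressed")

    @property
    def pressed(self):
        with self._lock:
            return self._pressed
```

A handler that calls `events.get()` and then reads `pressed` can therefore never see the event paired with the old state.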

I'm getting perhaps a little too philosophical, don't want to get into the angel-on-the-head-of-a-pin thing, but it seems to me that once you accept unintuitive and undocumented behavior of LabView objects, the language quickly begins to lose value-- Isn't one of the wonderful things about a virtual instrument that you usually don't have to build debounce circuits for every switch on the panel?

Hope I'm not being too nitpicky.

Very curious to hear others' thoughts on this. :question:

Best Regards, Louis

-

Hi Ale914:

There must be an easier, and more elegant way to do this, but, if worst comes to worst, and if both systems can read and write to a common directory location, you can use a small dummy file as a semaphore.

When application #1 wants to grab the hardware, it first opens the dummy file for edits and prohibits access by other applications. Application #2 then would get a file error when it tried to open the dummy file, and would have to wait its turn, until App #1 released the hardware, and closed the dummy file.
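Here is a minimal Python sketch of the file-as-semaphore idea. It uses a slightly different variant from the one described: instead of opening an existing dummy file with exclusive access, it treats the *existence* of a lock file as the lock. It creates the file with `O_CREAT | O_EXCL`, which fails if the file is already there. The path and timing values are placeholders. The usual caveats apply: a crashed holder leaves a stale lock file behind, and exclusive-create semantics can be unreliable on some network file systems.

```python
import os
import time


def acquire(lock_path, timeout=10.0, poll=0.1):
    """Create lock_path exclusively, waiting until it is free or timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # O_CREAT | O_EXCL fails with FileExistsError if the file exists.
            fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
            os.close(fd)
            return True
        except FileExistsError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(poll)


def release(lock_path):
    """Give up the hardware by deleting the lock file."""
    os.remove(lock_path)
```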

I know this is rather kludgey, but we used it a lot in the bad old days, and if no one suggests something more elegant, it will probably still work today.

Hope to have been of help & Best Regards, Louis

-

Hi Shazza:

Report systems are not my gig, but I can guess that you need to ask yourself the same questions I ask myself when setting up storage for a data acquisition system:

Who needs the data? What data do they need? What questions are they trying to answer with the data?

In what form do they need the data? Is a table of numbers enough? A simple plot? Does the user need to be able to manipulate the data? Should the data be manipulated and reduced before it is stored? If so, should it also be saved in its raw form?

How big is the data set? Does the data always arrive in the same format? If not, are the formats similar enough that they can be converted to a uniform format? In what ways (chronological, geographic, topical, etc.) does it need to be indexed or cross-referenced?

How widely available should the data be? What are the consequences if someone unauthorized gains access to the data? What are the consequences if the data is accidentally or maliciously destroyed or altered? What is the likelihood that someone will try?

How often and how quickly must the data be accessed? Frequently, quickly and repetitively? Or rarely and without urgency?

And there must be other questions I'm missing... :question:

After I've done that I can start to answer my questions about what the data storage format should look like, what medium the data is stored on, whether I can use a storage format similar to one I've used before for a similar need-- Or whether, just perhaps, I'm so lucky that I can use one of the pre-defined storage formats of LabView.

I'm not at all sure I-- or anyone else on this forum -- will be able to help you if you answer these questions, but even if you need to design the system completely on your own, you'll be ahead of the game once you give some critical thought to what the reporting system will be used for. While there may not be international standards for your report system, perhaps there's a somewhat widely used format for storing similar information.

Hope I have been at least enough help to justify the time it took to read my rather wordy response.

Best Regards, Louis

-

Hi Levietphong:

The "express" vi's are pre-written LabView programs which help to get you started quickly if you are new to LabView. They aren't really different from LabView 7.1, they are a part of it. Depending on who you talk to, they are either a useful addition to the language, or just a marketing tool NI uses so they can pretend you don't need to be a programmer to use the language.

If you are new to LabView and have not yet had time to learn it, and if there is an express vi that does exactly what you need to do, and if it operates efficiently enough for your needs, go ahead and use it.

In the long run, to truly use the versatility of LabView you will end up learning the language and writing your own vi's from scratch. The express vi's only do certain things, and what versatility they do have comes from complexity inside the vi's, which puts unnecessary memory and execution-time demands on the system.

You can also start with an express vi, open its diagram, throw away the portions of the code you are not using, perhaps modify what is left a little to do exactly what you want. If what you want to do is only a little different from what the express vi is designed to do, this can be a good approach.

Even with 10 years experience with LabView, I find the express vi's useful. I have used them to learn how to work with hardware and LabView features that I've never used before. I simply use them as examples, copying key bits of code related to the feature I want to use into my own program.

Hope this helps-- also there's been other discussions of express vi's in the past, with comments by myself and others. Try searching past posts in the forum, there may be some helpful information there.

Good Luck and Best Regards, Louis

-

Hi Michael:

For a similar problem (lots of different Vi's running sort of independently, but all reading data from the same source) I've been very happy using Notifiers. Notifiers are sort of like a broadcast equivalent of a queue.

Check: labview\examples\general\notifier.llb, as well as the help system discussion of notifiers. If this doesn't answer the question, let me know & I'll try and strip down one of my applications to serve as an example for you.
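To show the "broadcast" part: a notifier holds only the latest value, every waiting reader wakes up and sees it, and nothing queues up behind it. Here is a rough Python analogy built on `threading.Condition`. It is not NI's implementation, and the version counter is simply this sketch's way of letting each reader tell whether it has already seen a value.

```python
import threading


class Notifier:
    """Broadcast the latest value to any number of waiters (no queue, no history)."""

    def __init__(self):
        self._cond = threading.Condition()
        self._value = None
        self._version = 0

    def send(self, value):
        with self._cond:
            self._value = value  # overwrite: older unread values are lost
            self._version += 1
            self._cond.notify_all()  # wake every waiter, not just one

    def wait(self, last_seen=0, timeout=None):
        """Block until a value newer than version last_seen arrives.

        Returns (value, version), or None on timeout."""
        with self._cond:
            if not self._cond.wait_for(lambda: self._version > last_seen, timeout):
                return None
            return self._value, self._version
```

Each reader keeps the version it last received and passes it back in, so a slow reader skips straight to the newest value instead of working through a backlog. That is the main practical difference from a queue.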

Best Regards & Hope I've been of help, Louis

-

Hi Ashmex:

Good to see you've got attachments to bulletin board posts worked out! It is a little confusing, since they don't seem to appear in the message preview for me either. :question: Is there something we are both doing wrong? (I also got your email, but, as I said, the bulletin board is better.)

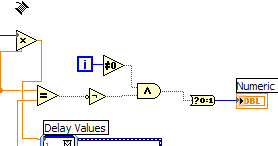

The problem is that the inegrate operation returns an array, while the formula node wants a scalar. I'm guessing that what you want for the formula node is the most recent value of the integral-- If that's what you want, the following change to your vi should work. If not, it should at least give you a clue for how to get what you want.
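The array-versus-scalar point in plain code: a running integral produces one value per input sample, and the "most recent value" is simply the last element. In LabView that corresponds to indexing the output array at length minus one. Here is a Python sketch, assuming evenly spaced samples and trapezoidal integration. The actual integration rule in the vi may differ.

```python
def cumulative_trapezoid(y, dt):
    """Running integral of evenly spaced samples y; returns one value per sample."""
    out = [0.0]
    for prev, cur in zip(y, y[1:]):
        out.append(out[-1] + 0.5 * (prev + cur) * dt)
    return out


samples = [0.0, 1.0, 2.0, 3.0]
integral = cumulative_trapezoid(samples, dt=0.5)  # an array, like the integrate output
latest = integral[-1]  # the scalar to wire into the formula node
```

Here `integral` is `[0.0, 0.25, 1.0, 2.25]` and `latest` is `2.25`.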

Hope I've been of some help.

Best regards, Louis

-

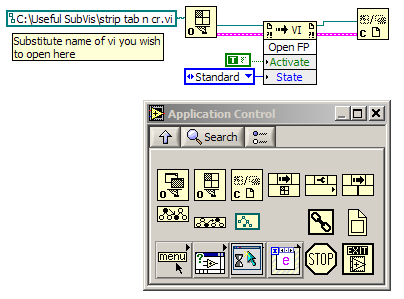

Hi Ravi:

Perhaps application control menu>Invoke Method>Open FP will do what you want.

It isn't really possible to "call" a sub-vi without executing the code, but this will open a vi's front panel from another vi, without actually calling the vi whose panel you open.

Hope this helps.

Best Regards, Louis

-

Hi Ashmex:

I can't duplicate your problem, or perhaps I don't quite understand the problem you are having. If you can post a simple vi showing the problem, or even a screen-shot of your diagram, we might be better able to help.

LabView is pretty tolerant about doing type conversions automatically when it needs to. Maybe the problem is something other than the type conversion. (It is better programming style to avoid unnecessary type conversions, since they cost time and memory, but usually type conversions won't make a program crash.)

Hope to be able to help, & Best Luck, Louis

-

Hi Ed-

Using the vi you posted, I can duplicate the problem (also using 7.1.1), and IMHO it shouldn't be happening.

Not much help, I know, but at least you're not the only one seeing it.

Louis

-

Hi Robert:

I think the express vi's are bulkier than they need to be for any single application because they include code, or hooks for code, for a variety of different uses.

It seems like the express vi's were developed for new users, to make it easy for them to get their feet wet. They don't seem to offer all that much to those of us who are already familiar with LabView. I do find them very useful for learning how to use some feature or hardware that is new to me: I use the express vi for the new feature, then open it, see which portions of the code I really need for my particular job, and delete the rest, or, more often and perhaps better, write clean code from scratch using the techniques exposed in the express vi as a model.

That's just my opinion however, perhaps I'm wrong, and the unused portions of the express vi's don't really cost that much in time or memory. I'll be interested to hear if the rest of the forum agrees. :question:

Best Regards, Louis

-

Congratulations Michael! :thumbup:

...and good luck with the job hunting. I'm certain something will come along.

Many companies here in the US will go to the trouble of pushing through an H-1B work visa for someone young, bright & ambitious. (Sad for an old fart like myself to admit, but they can get a lot of brain for the buck from you, even if you lack the old timers' experience.)

Also, don't be shy about getting the engineering degree if you really want it. I don't know about the U.K., but here in the U.S. it is very common to change fields between undergrad and graduate school. My undergraduate degree is in architecture (at my alma mater considered a branch of fine art, like painting & sculpture). I never did anything with that and went straight to graduate school for Ocean Engineering (ship and submarine design). I had to take the prerequisites for the graduate courses, but other than that there was no requirement for me to take any undergraduate engineering courses. After 28 years I have yet to do much ocean engineering; my career so far has been mostly designing & testing windmills, as well as doing test engineering and test programming for other types of hardware.

A close friend started as a mechanical engineer, took a break to do sailing and commercial baking, then became a P.E. (equivalent to a "chartered engineer" in the U.K.?), then spent some time doing test engineering & LabView programming, and ended up working on a pearl farm in Australia. A while back, he decided he'd rather work with people than machines (or oysters), and he's now about to graduate as a medical doctor from the same university where fifteen years earlier he earned his BSc in engineering. (That wasn't an easy switch, but he did it.) So, the point is: don't let yourself be trapped into a life as a programmer if you are really interested in something else. The logic, maths & basic science courses you took for the computer science degree would be transferable to any engineering discipline, & your programming experience would be a great benefit for a career in many branches of engineering.

Good luck, we'll let you know if we hear of any job leads.

-

LV 7 CPU performance (in Hardware)

Hi Michal:

I suspect that CPU performance is at most part of the problem. More likely the real issue is the weakness of Windows as a real-time operating system.

Take a look at the discussion by myself and others in this thread:

http://forums.lavausergroup.org/index.php?...976&hl=priority

and see if perhaps it helps a little. (Of course, faster processors are always better, but my bet would be that the fastest processor around won't fully solve the problem, which I suspect lies in W98. Windows XP might be a little better, but it is still weak for real-time work.)
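One quick way to check whether the operating system's scheduling, rather than raw CPU speed, is the bottleneck is to ask for a fixed wait many times and measure how late each wake-up actually is. Here is a rough Python sketch. The period and iteration count are arbitrary choices. If the maximum lateness is far larger than the mean, the scheduler is a likely culprit, and a faster processor alone probably won't fix that.

```python
import statistics
import time


def measure_jitter(period_s=0.01, iterations=200):
    """Request a fixed sleep repeatedly and record how late each wake-up is."""
    lateness = []
    for _ in range(iterations):
        start = time.perf_counter()
        time.sleep(period_s)
        lateness.append(time.perf_counter() - start - period_s)
    return {
        "mean_ms": statistics.mean(lateness) * 1e3,
        "max_ms": max(lateness) * 1e3,
    }


if __name__ == "__main__":
    print(measure_jitter())
```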

Hope to have helped a little, Best Regards, Louis

Download File:post-949-1160069447.vi