Louis Manfredi

-

Posts

274 -

Posts posted by Louis Manfredi

-

-

Hi Michael:

Happy Holidays

You'll get used to it. Having grown up in New England, my first few years in the Bay Area it always seemed really strange to see Santa Claus in Bermuda shorts (even though they were red with conventional white fur cuffs). However, after a few more years there, I began to take it for granted that I could do outside Christmas decorations without freezing my fingers, and I really learned how to get into the holiday spirit without the benefit of soaking my shoes in salty, sandy slush in some dreary mall parking lot.

Now returning to New England after a decade in California-- that is a challenge to the spirit, be forewarned!

Best Regards for the New Year, Louis

-

Hi MKS-

Just a half-thought-out idea-- but perhaps you could have a VI in one PC serve as the sole interface to the GPIB gadget, and have it set up as a server so that other VIs on the other PCs can access the gadget through it. This just might be easier than dealing with the GPIB environment and figuring out how to pass the CIC baton back and forth between a number of machines.

As I said, I haven't thought deeply about this, but perhaps it's a useful approach.

Best Regards, Louis.

-

Hi Temp:

Try "Hexadecimal String To Number" (easiest) or "Scan From String" (more versatile). Both of them are in the String menu. Let us know if you need further clues.
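For readers more used to text languages, here is a rough Python analogue of the two approaches: a direct hex-to-number conversion, and a scan that pulls a hex field out of a longer string. It is only a sketch; the function names `hex_to_int` and `scan_hex_field` and the `ADDR=` prefix are made up for illustration and are not LabVIEW functions.

```python
def hex_to_int(text: str) -> int:
    """Convert a hexadecimal string such as '1A' or '0x1A' to an integer."""
    return int(text.strip(), 16)


def scan_hex_field(line: str, prefix: str) -> int:
    """Extract a hex number that follows a known prefix (hypothetical format)."""
    if not line.startswith(prefix):
        raise ValueError(f"expected line to start with {prefix!r}")
    return int(line[len(prefix):].strip(), 16)


print(hex_to_int("1A"))
print(scan_hex_field("ADDR=1F", "ADDR="))
```

The first function corresponds to the simple conversion; the second shows why a scan-style approach is more versatile when the number is embedded in other text.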

Best Regards, Louis

-

Hi Folks:

Just my two cents, but it seems to me that there is already a simple, effective means of keeping posts by those too lazy to think on their own from wasting our time.

--We can, as individuals, simply ignore those posts. Very effective, and not a very significant downside.

I've asked some pretty dumb questions on my own sometimes-- and not through laziness, or through being a one-sided taker, but as Michael suggested, sometimes it's been 3 AM and I was in a bind.

I've also answered a few questions where my first impression was perhaps the asker was being lazy about taking their own initiative to use the built-in help tools.

Seems like in many of those cases, my first impression was right, and I was wasting my time. Other times, the questioner's subsequent response to my suggestion made it clear that the questioner just needed a little help to get to first base, and that I'd helped out someone who really was willing to try and help himself.

So, if the clutter of lazy questions on the site were so bad that it was making the site unusable for me, I'd agree that something, perhaps an admission quiz, would be OK. But I don't think it's that bad yet, and I think we're better off keeping things simple. A few obnoxiously persistent questioners seem to get dealt with by the moderators, but it seems like most of the really lazy ones don't take the effort to persist if we ignore them for a bit.

If I had had to take a quiz to become a member, I don't know if I'd have bothered.

Just my two cents; as usual, I seem to have used about 50 cents' worth of words to get it across.

Best Regards, Louis

-

By the way, i used couple of variables on arrays in my program. Does it effect the memory? If it does, how to free it?

Hi Abe:

If you assign an array to a global variable (either to a true global or to a LV2 style global) that data will remain in memory until all the vis that call it are closed. So if you are storing a big bunch of data in a global, and not doing something specific to clear it out when you are through with it, that might be the problem.

If you can, try to do the project with real dataflow wiring-- that way LabView should know when it's through with the data. If not, try writing an empty (or very small) array to the global after you are through with it. That should at least make the memory available to your LabView application for other uses if it needs it.

Not sure if your problem is releasing memory for other programs to use, or releasing memory used in LabView for use elsewhere in LabView, but if you need the memory for LabView, the above suggestions should work. It's less likely, but they might even work if you need the memory elsewhere in Windows.

Hope this helps, Louis

-

Of course, there is something that is going to be quite difficult. In about a month, I'll be taking a class in JAVA. So, I have a month to set aside my text-based mindset, then 3 months of setting THAT down, and then coming back to work ready to dive into LabVIEW again.

Hi Mule:

Don't worry too much about the differences between text languages and LabView-- At the heart of it all, computers is computers--

The most important aspects of programming in any language are common to all languages--

Be sure to understand, and document, what the user wants before you begin (even if the user is you).

Spend some time understanding what the inputs and outputs should be.

Spend time breaking the project down into logical chunks (subroutines, subvi's, modules, whatever) that each perform a certain task, and that can be easily tested individually before assembly into the whole (That's easier with LV than with most text languages).

Look around to see what tools are available in the language core, in your own past work, or in the work of others which you can apply to the problem to avoid re-inventing the wheel. ('cept of course don't cheat on your homework or steal stuff you haven't the right to use...)

Test and document as you go along. LabView is not self-documenting; no computer language is! Once you try to do anything more than the most trivial task, in any language, you need to put some up-front effort into explaining what you're doing. Otherwise you'll be scratching your head trying to remember what the heck you had in mind when you go to modify it a few months down the road. (This happens to me all the time.)

Perhaps most important, remember that computers (almost) always do what you tell them to do, not what you want them to do. Once you accept the idea that it's much more likely that problems are caused by differences between what you want and what you told, not by differences between what you told and what the darn thing actually did, debugging gets a lot less time-consuming.

At any rate, good luck in the studies, Louis

-

However, to the suggestion - are there specific limitations in LabVIEW itself that say "No you can't do this?" Obviously in the current versions there are, but why, I wonder. Why must EVERY tunnel be connected in EVERY case? That just doesn't seem flexible to me. Any reasons why not to make the program more flexible?

Hi Mule:

LabView is really quite flexible as it is, but like any programming language, it has a few invariant rules that are necessary for the logical structure of the language.

In the graphical dataflow model of programming on which LabView is based, every wire has to have a source for its data in every possible state of the program. It's central to the very core of what the language is. I can't take the time to go into all the details here-- but, for example, if a wire doesn't have a source, the language can't even know when to execute the code connected to it.

It works out that this isn't a real burden once you get used to dataflow programming. Spend some time looking at the examples that ship with LabView, and try not to think in terms of using the language in the same way you might use a text based higher level language, or even how you might use assembler for that matter.

As a convenience, you can choose that all unwired cases are set to the default for the data type, but that is simply a shorthand in place of explicitly feeding a default value to the wire's terminal in all the cases where you want the default. Even that shorthand tool is a recent addition to the language, and I think you would be surprised how few cases there are where a well-organized LV program will use that capability. (Offhand, about the only place I use it is to feed a boolean value from the case structure to the loop stop button of a state machine.)

Best Regards, Louis

-

Hi Mule:

I'm not totally sure what you mean by a variable "dying." If a wire comes out of a case structure (or from any other source), it has to have a defined value. Whatever the wire is hooked up to needs to have some value associated with the wire. Much like a function in a text language always returns a value-- the value might be a default, or a random unknown, but there is a value.

There are, however, a couple of suggestions I can make:

1) If you want, you can right-click on the box where the wire leaves the case structure and select "Use default if unwired". If a case executes which doesn't have the box wired from inside, the output will be the default value for the variable type (zero, false, empty string, whatever...). This is occasionally useful, especially with state machine architectures, for example providing the value to the stop terminal of the loop.

2) If you really don't need a value for the wire except in one particular case of a case structure, you should be able to move the code that uses the wire to inside the case structure-- without the wire heading out of the case structure, there is no problem with what happens in other cases.

Hope this helps, Louis

-

I will say, I think I'm going to end up liking the new system a lot, but right now I wish I had waited till I was in a dead spot between gigs to make the transition...

Hi Folks-

A final note on this topic. (Unless others have something to add)

I am very happy with the new project system in LV8. It seems like a much more professional tool than the old .bld file based system. To me it now seems easier to use, and more versatile too. Nice to be able to have a variety of build specifications, e.g. one to make only the .exe file, and another to make the installer-- all within a single project. Also, I haven't made any careful comparisons, but it seems to build the .exe faster than the old version.

I never did go back and figure out what the problem was with translating from the .bld file to the .lvproj file. Seems unnecessary now that I understand how to work with the new system.

I'll stand by my statements from before:

Don't try to make the transition till you have some time to spare.

Do plan on printing out all 38 pages of the "Organizing and Managing a Project" help topic, and reading most of it before you accomplish anything very useful. This might not be necessary for users up to date with other modern software development environments, but it certainly was true for me.

Also, it seems to me (again, I haven't made careful comparisons) that the first installation of a LV8 based application --especially one that uses DAQmx-- on a virgin target machine takes much longer ("Now installing part 1 of 54") than a similar installation of LV7.x. I think there may have been references to that issue on another thread in the forum.

Summary: :thumbup: LV8 project builder, but takes some time and effort to get up to speed.

Best Regards, Louis

-

Hi Folks:

Here is an obscure little feature (bug?) with LV8.0.

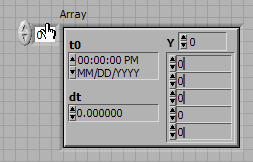

If you have a front panel control which is an array of Waveforms, and if you click to edit on one of the elements of the data array within one of the waveform elements, all the elements get a cursor displayed.

The cursor only blinks in the element you actually selected, and when you finish the edit, the cursor only goes away in the element you selected, see below.

A little obscure & harmless? It only causes slight confusion while manually editing a waveform control, which is only likely to happen when testing a sub-vi (I can't think of any other reason to manually edit an array of waveform type), but it's a bug nonetheless, and perhaps there's some less benign manifestation of the underlying problem.

Best Regards, Louis

-

I heartily agree-- and we should be able to search for bookmarks in all vis in memory, not just one.

(A kludgey trick I use to partly substitute for the "back" button: If I'm working on something that has a run arrow, or only a few errors, I'll plant an unwired add in a spot to which I plan to return. Then all I have to do is click on the broken arrow, and select that add from the list to get back to where I was.)

Regards, Louis

-

Hi Reinato:

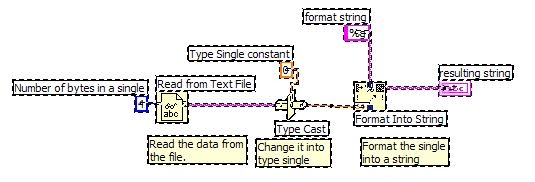

Can't go through the whole thing, but the basic idea is as follows:

1) Read four bytes from a file.

2) Type cast the four bytes as a single.

3) Format the single into a string.

I've left lots of details for you to work out-- put all this in a loop, with the file refnum wired to a shift register so you get more than just the first data point, might have to skip some header info in your file, might want to do something with the formatted data (Write to another file?), etc. but this should point you in the general direction.

For some types of files, there might be an easier way to do this, but the approach I've shown above is pretty general, you should be able to adapt it to whatever your needs are-- some of the easier approaches might be less versatile.

Hope this helps at least a little, Best Regards, Louis

One more point--

Some possibility, depending on where the data came from, that you might have to reverse the order of the bytes after you read them & before you type cast them as single. If so, look under String Functions > Additional String Functions > Reverse String.
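For comparison, the same three steps (read four bytes, reinterpret them as a single-precision float, format as a string) can be sketched in Python. This is a text-language illustration under assumptions, not the VI in the image: the names `bytes_to_single` and `format_singles` are hypothetical, and the `big_endian` flag stands in for the optional byte reversal (LabVIEW's Type Cast treats the bytes as big-endian).

```python
import struct
from typing import List


def bytes_to_single(raw: bytes, big_endian: bool = True) -> float:
    """Reinterpret exactly four bytes as an IEEE 754 single-precision float."""
    if len(raw) != 4:
        raise ValueError("need exactly 4 bytes")
    fmt = ">f" if big_endian else "<f"  # "<f" is the 'reversed bytes' case
    return struct.unpack(fmt, raw)[0]


def format_singles(data: bytes, big_endian: bool = True) -> List[str]:
    """Walk the data four bytes at a time and format each value as text."""
    out = []
    for offset in range(0, len(data) - 3, 4):
        value = bytes_to_single(data[offset:offset + 4], big_endian)
        out.append(f"{value:.6g}")
    return out


# Two sample values packed the way a big-endian binary file would hold them.
sample = struct.pack(">f", 1.5) + struct.pack(">f", -2.25)
print(format_singles(sample))
```

If the numbers come out as nonsense (huge exponents, NaN), trying the other byte order is usually the first thing to check. Any file header would still need to be skipped before the loop.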

Louis

-

Hi Avalanche:

I had good luck with a project where the data was published to an OPC server by the PLC, all I had to do was subscribe to the data with the controls of my input vi, otherwise pretty straightforward LV programming to do what needed to be done with the data.

If your sample rate requirements are not too high, and if the PLC can publish to an OPC server, this might be the most straightforward way to go. (Although I've done PLC work in the past, I didn't know how to program the PLC I was listening to. Didn't have to, just asked the PLC folks to publish the values I needed to the server and give me the datasocket URLs.)

Very neat and tidy, no need for the LabView programmer to fuss with the mysteries of how the PLC worked or vice-versa. If you've got high bandwidth requirements, it might be another issue, I don't know; I was doing some fairly pokey temperature measurements on that gig.

Hope this helps. I'm sure others will be able to chime in with some ideas if for some reason you need to communicate more directly with the PLC.

Best Regards, Louis

-

Hi Michael:

Either I'm misunderstanding what you're looking for, or the built-in indicator-- Controls>Numeric>Horizontal Progress Bar-- will do the trick. (Found in LV8 menus, don't recall it offhand in earlier versions, but then I haven't looked either.)

Best Regards, Louis

-

I'm taking it slow.

....A good plan, I think.

Best, L

-

Hi Folks:

Here is an interesting one. I was attempting to use the read text from file vi to skip a line in a text file. It didn't seem to work, so I wired up an indicator to its output as a diagnostic tool. When I did that, it worked.

Took the indicator out, didn't work again. Wired the output of the read text from file vi to a seemingly pointless concatenate string gadget, works again.

It makes perfect sense that wiring or not wiring an input might affect how a vi performs, but it's really counter-intuitive-- to the point of being a bug, I'd claim-- that a vi will behave differently depending on whether its outputs are wired.

See attached vi:

Download File:post-1144-1132158963.vi

Be curious to see what others think.

Ah well, serves me right for violating one of my firmest rules (never install a software version ending in .0 if you've got an earlier version that ends in .x that works.) Anyway, a good excuse to use that neat new smilie that I haven't tried yet:

Best Regards, Louis

(Edit-- replaced 'ouput are wired' with 'output is wired' to fix grammar.)

-

Hi Folks:

Seems odd to reply to my own post, but wanted to give a little update on this.

I haven't got time now for my usual long-winded discourse-- but the "Reader's Digest" version of the story is that we (myself and NI Support) are still working on the problem and making a good deal of progress, though I'm not fully out of the woods yet.

The new Project Manager seems powerful, but the transition from the old .bld file to the new .lvproj was far from automatic for me, and might be for you too.

My advice would be to not translate any critical applications for which you might need to provide executable files or application builders until you've got a good few days to focus on the task in case things get ugly. I ended up printing out all 38 pages of the "Organizing and Managing a Project" topic from the help file, and reading most of it, before I got a successful build. If you work often with similar projects in other programming languages, you might pick it up quicker than I did, but even so, I'd suggest some caution in making the transition :!:

I will say, I think I'm going to end up liking the new system a lot, but right now I wish I had waited till I was in a dead spot between gigs to make the transition...

Still curious to hear how others are faring with the transition...

Best Regards, Louis

-

Hi Steve:

I haven't been at the hiring end of such a telephone interview, but as a consultant, I've been the interviewee more than a few times. I find the best way to confirm my credentials is to talk about some of my more challenging programming projects. If the potential client doesn't volunteer it, I try to find out something about their application. From there, I steer the discussion to a past project of my own in some way similar to the client's project. This gives me the opening to discuss the specific technical challenges of those projects in a way that (I hope) demonstrates that I've got a good understanding of the relevant general programming principles.

So I'd suggest that you start by telling the interviewee a little bit about the potential assignment and its challenges, and ask them to discuss similar work they've done which has similar challenges. Keep steering the interviewee towards the nuts and bolts. Any real programmer-- any real engineer for that matter-- will be happy to chatter about the technical details of their prior work.

I think you'll get a far clearer picture of the interviewee from such an approach than you can hope for by asking a pre-planned canned list of questions. The canned questions are going to get you the canned answers. Motherhood, America, Apple Pie -- and State Machines. The discussion of the interviewee's specific prior assignments should give you a better feel for the person. Of course, there may be confidentiality issues related to prior work for some interviewees. In that case, try "O.K., without going into the details of what you were doing for XYZ company, perhaps you can discuss some of the programming techniques you've used (or hardware you have written interfaces for) in your past work?"

That said, there is a comprehensive list of questions which I feel does do a pretty decent job of evaluating a person's competence as a LabView programmer-- National Instruments' exam for Certified LabView Developer. Those of us who have taken the exam are not allowed to discuss the questions, but I think it is safe to say that the exam is fairly tough. There are still plenty of established and very competent programmers out there who haven't yet taken the exam whom you might not want to reject out of hand, but if someone has passed that exam, it's a good bet that he or she is at least a decent LabView programmer. (And you can check CLD status on the NI site; you don't have to take the interviewee's word for it.)

Hope my answer is at least a little help, Best Regards, Louis

-

Hi Folks:

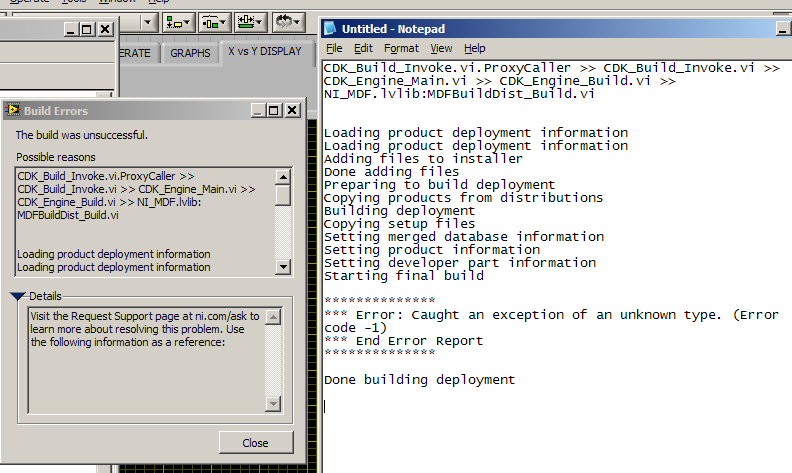

Got an error message when trying to build an application and create an installer in LV 8.0.

Translated from a .bld file which worked well in LV 7.1. Looks like LV 8.0 did well on building the .exe file, but got a problem with the installer.

Attached png file shows both the error message box which came up, as well as a .txt file showing the full message hidden by the scroll bar in the upper part of the message box.

Came up just like it shows, with nothing following the sentence "Use the following information as a reference:" Glad I kept a separate copy of my project in LV 7.1, but would like to make the transition to 8 as soon as practical.

I've sent this in to NI support, but meanwhile curious to see how others have fared making installers in LV8.0 :question:

Thanks, & Best Regards, Louis

-

Hi Labvu:

I'm not at all sure this is the easiest way to do it-- but the last time I had the same problem, which was years ago with an earlier version of LabView, it was the only way I could figure out:

Make two copies of the data.

In the first copy of the data, change all the values that are in saturation to NaN.

In the second copy of the data, change all the values that are NOT in saturation to NaN.

Plot both copies of the data on the same chart in different colors.
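The data-preparation part of those steps can be sketched in Python as follows. This is only an illustration: the function name `split_by_saturation` is hypothetical, and treating "saturation" as `abs(value) >= limit` is an assumption, since the thread does not say how saturation is detected.

```python
import math
from typing import List, Sequence, Tuple


def split_by_saturation(
    data: Sequence[float], limit: float
) -> Tuple[List[float], List[float]]:
    """Return (normal, saturated) copies of the data, same length as the input.

    Each copy holds NaN wherever the other copy holds a real value, so a
    plot that skips NaN points shows the two traces as one two-colored curve.
    """
    normal = [math.nan if abs(v) >= limit else v for v in data]
    saturated = [v if abs(v) >= limit else math.nan for v in data]
    return normal, saturated


normal, saturated = split_by_saturation([1.0, 10.0, -10.0, 2.0], limit=10.0)
print(normal)
print(saturated)
```

Because both copies keep the original length and indexing, they line up on the same x axis without any extra bookkeeping.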

Hope there's an easier way to do it that someone will share with us, but if not hope that this helps...

Best Regards, Louis

-

I know for sure that it is very difficult to establish and maintain synchronization between several data acquisition devices which talk to LabView over RS-232.

I'm pretty sure the same is true of USB-- Any synchronization message going through the Windows operating system is going to have an unpredictable delay-- usually less than a dozen milliseconds, but occasionally much, much, more. Also, the timebase of the various devices won't be exactly the same, so over time they will drift out of synchronization. Though for short data sets, or for longer sets if a means of checking and resynchronizing were available, you don't need to worry about this timebase drift.
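To put rough numbers on the timebase drift, here is a small Python sketch. The 50 ppm clock mismatch is purely an assumed figure for illustration (check the datasheets of the actual devices), and the function names are hypothetical.

```python
def drift_seconds(elapsed_s: float, ppm_difference: float) -> float:
    """Skew accumulated between two free-running clocks after elapsed_s."""
    return elapsed_s * ppm_difference * 1e-6


def time_until_drift(max_skew_s: float, ppm_difference: float) -> float:
    """How long two clocks can run before their skew reaches max_skew_s."""
    return max_skew_s / (ppm_difference * 1e-6)


# Assumed example: two devices whose clocks differ by 50 parts per million.
print(drift_seconds(3600, 50))      # skew after one hour, in seconds
print(time_until_drift(0.001, 50))  # seconds until the skew reaches 1 ms
```

Under that assumption the skew grows by 50 microseconds every second, which is why short records are fine but long ones need some means of resynchronizing.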

Consider a real-time operating system (LabView RT, etc.; even Linux should be much better than Windows). Also, depending on your installation, it may be possible to add time base references to each data acquisition device. I've used the time tick from GPS receivers to measure the synchronization of independently sampled data streams. (One microsecond accuracy, and pretty cheap, though sometimes difficult to integrate into the DAQ device.)

Best Luck, Hope to hear from others that there's an answer to your question that I didn't know about, since it often bothers me too.

Best Regards, Louis

-

Hi William:

Yes, you can use a LabView vi to create another vi. In one simple manner (a method I used years ago to create multiple display panels for a program) you can simply use file i/o vis to make multiple copies of an existing vi-- you can even call these vis up by file name after creating them, open them, and start them running.

Of course, it can get much more complicated than that-- see the whole section "Scripting" in this forum. Not something for the casual user, I think you've really got to invest some effort into it. But you can do some pretty complex stuff if you need to :!:

I'm not at all sure that I agree with you that LabView isn't object-oriented... but I'll leave that topic for others. As I haven't done a lot of OOP in C++ or VB, there are probably others in the forum better able to tackle the question, but my understanding is that both in its construction and in its use, you can think of LabView as an object-oriented language.

Best Regards, Louis

-

Hi LGK:

I agree with Ed Dickens-- Unless there's a reason not to (and I can't see one) you would be better off wiring directly to the terminal, rather than either the property node or the local variable.

I don't know offhand why the property node and the local variable are behaving so differently-- perhaps someone else can help with your original question. I do recall reading about differences in another thread in the forum, but I can't remember which one.

But if you can wire directly to the terminal, you will bypass the whole question, and probably have a routine that runs faster than either of the thumbnails you've shown.

Good Luck & Best Regards, Louis

-

Hi Mayur:

Have you tried Thorlabs? www.thorlabs.com.

Might be a tough one, a micron seems very skinny.

Good luck, Louis

-

Graphics sucking up processor... how to improve?

Hi JP:

One thing to try-- Looks to me like you've got a lot of things overlapping on the display-- Digital displays overlapping the gauges, etc.

I seem to recall I had really poor performance last time I made a display with overlapping graphic items-- that was some time ago, and perhaps LabView, Windows, and graphics cards have gotten better since then-- but I don't know; since my early experience with overlapping graphics things was so bad, I've got out of the habit of letting things that get updated overlap. (And, just as an opinion, I think it's easier for the user if the display is less crowded anyhow.) Might try eliminating the overlap (first try the actual visible overlaps; if that doesn't fix it, try eliminating the overlaps of the rectangles that bound the round dials too.)

Also, it's a bit of a hassle to program, but the temperatures probably change so slowly that you can update them once every couple of seconds-- I've owned cars where the temp. gauge moved almost as fast as the tach, but not for very long.
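The slow-update idea amounts to a simple throttle: only redraw an indicator when enough time has passed since its last redraw. A minimal Python sketch of that logic follows; the class name `ThrottledUpdate` and the 2-second interval are assumptions for illustration, and in LabView the same thing would be done with a tick count compared inside the display loop.

```python
import time
from typing import Callable, Optional


class ThrottledUpdate:
    """Answers 'is it time to redraw yet?' for a slowly changing indicator."""

    def __init__(
        self,
        min_interval_s: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._last: Optional[float] = None  # time of the last redraw, if any

    def due(self) -> bool:
        """Return True (and restart the timer) when a redraw is allowed."""
        now = self._clock()
        if self._last is None or now - self._last >= self.min_interval_s:
            self._last = now
            return True
        return False


temperature_gate = ThrottledUpdate(min_interval_s=2.0)
if temperature_gate.due():
    print("redraw temperature gauges")  # the fast tach display would skip this gate
```

The fast-moving indicators keep updating every loop iteration, while the temperature gauges are only touched when `due()` returns True.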

Anyhow, best luck & hope the suggestions help, Louis