-

Posts

410 -

Joined

-

Last visited

-

Days Won

13

Content Type

Profiles

Forums

Downloads

Gallery

Everything posted by Black Pearl

-

You are right in this simplified example. The compiler can optimize this code. But the conclusion (parallelism) is implying a branching (into multiple threads!). Propably I get's a bit clearer if I extend the example: j=i+1 foo(i) i=j Seems to be perfectly sane if i,j are ints. If they are pointer (e.g. to objects), we already have a race-condition desaster. Furthermore, I see no way a compiler could prevent this. The programmer will need to take care (e.g. foo(i) my_i=i.clone //this should happen before returning -> caller might change the object in the next line return ) Felix

-

Thanks for that positive story. Actually it is hard to believe the attitude of text-based programmers looking at a graphical code as a kind of 'toy'. I work in a very thight interdisciplinary team. When we talk about electronics, we look at the schematics and not at text. If we need a mechanical construction, we also look at drawings. And whenever we do design some optics, we have beams, surfaces and lenses drawn. And when something is measured/characterized, it's also graphically plotted. So really weired to do text-based software. Here I would disagree. There is a very important difference between data-flow languages and all other languages. At first, this is a bit hidden in the compiler (there are some good explanations in wikipedia, and of course some pretty detailed issues posted mainly by AQ): In a text based language I can write i=i+1 where the data before and after this operation is stored at the same memory location. In LV you can't. Each wire (upstream and downstream of the +1 prim) is pointing to a unique memory location. This data flow paradigm has sever consquences: Parallelism is nativ We have a duality of by-val (native) and by-ref, while text based compilers are limited to pointer :throwpc:/by-ref. Felix

-

I don't think both are really similar. Just to highlight some major differences: * Q's can be used as data structures: FIFO, stack (FILO) -> recursion, SEQ. * Events can be used as a one-to-many or many-to-many communication. Felix

-

Congrats from me as well. Felix

-

I asked the technical lead about JotBot's maintenance engineering and was shocked to learn that it is still under active main-line development. It currently has eight thousand six hundred and forty-four official features, and their feature database is currently tracking eleven hundred more to be added in future releases. Any feature that they've ever gotten even a single customer request for is on the "planned" list, which isn't actually planned at all. The feature additions go in whenever any engineer sees it in the database and likes the idea enough to go spend some time on it. That's how they "plan". Sounds like Idea Exchange. Felix

-

With my limited understanding of the compiler, here the exlanaition. When you wire both terminals (inside the subVI), the data is unchanged. If I don't wire the terminals in the SubVI, it actually changes whenever an error comes in. If I don't wire the SubVI in my benchmark VI, a new buffer must be allocated each iteration. Anyhow, I'm also more interested in the functionality aspects on error handling in this discussion. Felix

-

As expected changes the numbers (more than factor 2 in most cases). But again, in a recent LV version I don't see much difference between using an error case or running the wire through. So the performance improvement by not doing dogmatic error checking in each and any VI will only be measurable in the most critical sections. I'm going to do some more benchmarks when a subVI's error terminals are not wired at all. EDIT: Result: Bad impact on performance. Always wire the error wire to the SubVIs! Felix

-

Benchmarked in 7.1.: 1 million iterations straight wire through 186 ms error case structure 306 ms so the case structure costs about 120 ns. Hey, just checked without any wire (so a clear error vi). 424 ms! So this is most likely slower due to the buffer reuse in above cases. Test in newer version: takes more than 500ms and the difference between straight wire and error case struct is almost gone. Felix

-

Nugget by Darren on the NI forum. Destroy queue (and equivalents) are on that list as well. This is why I didn't expect the error hitting my in this case, as without error-handled getters for the queue, it works. Felix

-

I just threw away my old dogma. So still in the process of establishing a new paradigm on error handling. So for the Getter's, it is clear to me that I shouldn't do error checking here. The error checking will be done by the code that receives the objects properties. Furthermore, the error isn't indicating that anything is wrong with that data. But for setters, it's not so simple. An error might indicate that something is 'bad' about the incoming data, so I wouldn't want to overwrite 'good' data (from a previous call or the default value) with that, kind of corrupting the objects data. Still some more thoughts (or much better, just do some coding) on my side are required for the even bigger picture of parallel loops, communicating the errors and my simple case of a pure data queue (as opposed to a message queue which can transmit an error message). Felix

-

Well, this is the fun (I was talking about that before) you have when doing signal analysis. The Hilbert Transformation is the Phase Shifter of the Lockin (sin -> -cos = 90°). The multiplication is the demodulation (some more on this next). The mean is just a low-pass filter. Now you can look at the FFT at the different steps: The demodulation is frequency shifting your signal to |f1-f2| and f1+f2 (where f1,f2 are the frequencies of both signals). As both frequencies are equal f1-f2 is actually giving you a DC signal. Now just low-pass filter the reult to obtain the DC signal. Felix

-

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

Ok, I'll need to look into LapDog to see how you do it. Exactly. Felix -

Just to throw in my salt on OOP and high-performance apps, get rid of the OOP-vs-non-OOP concept. (LV)OOP is building organically onto the concepts (e.g. AEs, type'defed clusters) we used to do before. It's just adding architectural layers. The other way round, don't forget what you have learnd befor LVOOP, there are the same nodes still in the code! We need to take the same care when going into high-performance code as before. Having additional layers seems like a pain, if you ignore that they are vectors in a different dimension. To be a bit more practical than my introduction, let's take the example of a very big data set -> no copies allowed. So what did you do conventionally? You changed the code from wireing the data (passing SubVI boundaries, branching the wire) to encapsulating the data in an AE. Now let's don't make the mistake of thinki think that an LV-Object is just an AE with a wire. Of course, now we get the encapsulation-possibilities of AEs, but not the inplaceness of AEs. Going back to step 1 again, why the f*** are we 'required' to place all data of the class in the 'private data cluster'. Nothing forces us. There is a thread by AQ discussing a WORM-global as being acceptable if it would be private to a library. To be more specific, when you don't have any access to the class private data, you won't be able to tell weather it's stored in the class private data, a global or an AE. Now let's even go a step further, the public interface (Accessors + Methods operating on that data) are the sole place you need to change to switch from a too-slow in-the-cluster datastorage to a faster in-the-AE datastorage. On the architecture level (thinking in uml), this would be just the use of a 'nested classifier' (e.g. a class which scope is limited to the containing class; and as an AE missing functions like inheritance -> you really do not need that if it's private anyhow!). Just to summarize, I can't really see a big conflict between OOP and before-OOP (OOP is just adding a set of new features, albeit I considere them so important that I like to call them dimensions). It's all data-flow. Felix Lost thought (I don't know where to insert it above): If a C++ programmer was doing a high performance app, what would he/she do? Make as class data a static array for that data and shoot with pointers at it. How OOP is that?

-

No, I'd say you need to calculate the phase for each full circle. Then average the results. But there are certainly exceptions to this. If you had a constant sine (you actually did exclude this) with a lot of noise (or very bad SNR, averaging dX and dY first and then calculating the phase would be better. I have not a mathematical model for this, but I'd say a very important guidline is to look at the dividers in any formula. If they are close (in the distance of the noise, e.g. 3sigma) to zero, the noise can produce values close to 0 -> the whole term becomes inifinite and dominates any averaging. To my experience, doing signal analysis is a fascinating experimental science. In case you don't have a real measurement ('cause the setup is unfinished), use some simulated signals. At various processing steps, just plot time signal and a FFT. It will always be a good learning experience. Sea-story: My latest setup I showed to a PhD'ed physicist and got the response: 'wow, like in the textbook'. And the student writing his master-thesis got very-very big eyes when we didn't see any signal anymore in the FFT and I recovered it using lockin technique. Felix

-

Good luck & the best for you. Felix

-

Just a rough scetch of a different idea. Using an anlog oszilloscope, you would use the 1st signal for the X and the 2nd signal for the Y channel. If both are sine signals of the same frequency with a phase difference, you'd get an ellipsis. Then get the distance between the crossings on the X-axis and the Y-axis respectively. I don't remember the exact formula, but I think the phase was simply sine of the ratio of both, easy math I did at age 17. If you apply this to the signals you have, just get all crossings of the signals at 0A and take the value of the other signal. Using lockin-technology (mentioned above) is also nice. You need to shift 1 of the signals by 90° (so you actually need to measure the frequency first). Then multiply the 2nd signal by the unshifted as well as the shifted signal. Sum both signals. Then do the arctan (?) on the ratio to obtain the phase. I'd also search for phase-locking, phase-(de)modulation and phase-measurement on wikipedia. There might be some better techniques than my first ideas ('cause in both situations I was more/also interested in the amplitude). Felix

-

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

Just ran into some error handling issues and reviewed this document. How do you handle errors? Specifically, when in the master loop an error occures, I would guess the ExitLoop-Message won't be send. My own problem was that an error in my producer loop did make the Accessor VI for the queue return a 'not a refnum'-const so the Destroy Queue didn't stop the consumer. Felix -

Best Practices in LabVIEW

Black Pearl replied to John Lokanis's topic in Application Design & Architecture

Just on the two (four) topics I have experience with. LVOOP & uml: I would take any chance to use LVOOP. I found it not really complicated using the simple equation 'type def cluster'(+bonus power-ups)=object. Once you are using LVOOP, you will want to look into common OOP design patterns, also they not always translate into the by-val paradigm. I think for any larger OOP app, UML is a must. Just reading OOP design patterns will confront you with this, and if you are already a graphical programming freak using LV, why for hell skip UML. On GOOP I'm not really sure. It's the tool that integrates with LV. But if I understood it right, it's favoring by-ref classes. I find by-val more native. On free alternatives, I found eclipse/papyrus to be a very powerful uml modelling tool (coming from the java world). Reuse, SCC and VIPM (+ Testing): I had great fun of getting a good process in place to distribute my reuse code using VIPM. It's paying off in the 2nd project. I think it's important to design the reuse-code deployment also with your SCC in mind. As I don't use perforce, I can't really say much about the specifics. One of the places I really would like to have automated testing is my reuse code. As it's used frequently, this should very much be worth the effort. To that complex I'd also put in a new tool: bug-trackers. You can misuse them as feature trackers as well. Integrate them with the SCC seems important. Sadly I gave up my own use of mantis (lack of time to maintain the IT infrastructure) Felix -

Bug - PM member link in friends list

Black Pearl replied to Daklu's topic in Site Feedback & Support

I also join that exclusive club. Felix -

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

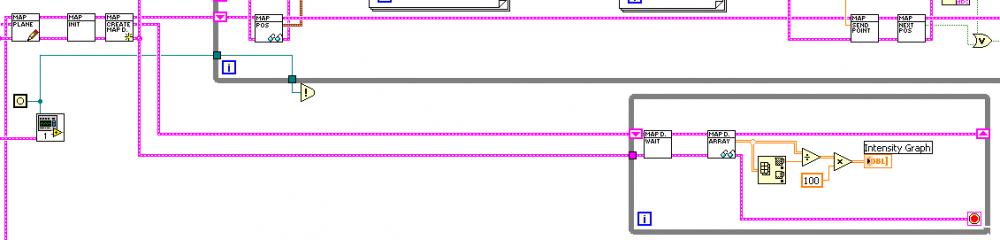

This shows the top level implementation. The main object is the 'MAP'. The slave is the 'MAP D.' Felix -

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

Thanks for the input, but I'm unsure if this will work. There is no written specs for this app, and I even plan to use in in a whole family of setups. That's why I dedicate a layer to the 'componentizing'. One worst case estimate would be running the meaurement over the weekend at 500kS/s. With processing I get an estimate 1S/s (from literature, my processing is better, so I'll certainly hit other limitations; well they can be overcome as well and then ....). But a hybrid solution could work, do the signal pre-processing with producer-consumer pattern and push the reduced data into the DB. Then do the physical calculations as a post-processing. The more I think about this, the more I like it to use a DB... I'll add it to the feature list. Felix -

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

The DUT is excited by n-channels. I only measure a single response signal. Each of the excitation channels is modulated by a unique frequency. Each of those n processing loops will filter out the response at one of these frequencies. Each of these channels will be also presented seperatly. Ahh, things will even be more complicated, as I measure other channels as well, do the same kind of processing. With all this data, now I'll do some physical calculations. Now I get some more data sets to present, both n-channel and 1-channel. But I have a clear directional data flow, as opposed to a messageing system where the objects talk in both directions. So certain stages of the processing could be done better by traditional procedural chain wireing of vi's with some for-loops. But then again this is tricky when it comes to the filters, as they need to keep the history of the signal. So I'll need to keep their state seperately for the different channels. Well, nothing I really want think too much about sunday morning. Felix -

I just realized that one of the most outstanding members of this community has passed the 1k post limit. Thank you Daklu for all the discussions we had. I'm looking forward to read the next 1k posts! Felix

-

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

Yes, that's how I do it. Creator is a better term than factory in this case. The creator-object knows (directly or indirectly) all information that is needed to initalize the object-to-be-created. Using a create-method, there is no need to expose this information publicly. Interesting point. I guess, to use 'roles', I'd need inheritance. But the requirements are really simple for that section of code. Aquire->Analyze/Process->Present. So it could even be done with 3 VIs wired as a chain inside a single loop (for now, aquire and process are still together). There is no need to call back to the aquisition loop. But the code needs to scale well and be modular, as I have to implement more features (from single channel-measurement to multi-channel measurement, this will lead to n Process-loops; changes in the Aquisition code should not affect processing). I can make a screenshot the next time I develope on the target machine. But it's just prototype-code that gives us a running setup where I and others (non-software people) can see the progress of our work/test whatever we do. Other modules are not clean-coded yet, but just a fast hack on top of the low-level drivers. Felix -

Techniques for componentizing code

Black Pearl replied to Daklu's topic in Object-Oriented Programming

I was contemplating about producer/consumer design pattern and LVOOP. Well, it's a real world app I'm coding in LV7.1, so I don't really have OOP. I use type def'ed clusters and accessor VIs, organization 'by-class' in folders and Tree.vi, marking Accessors as 'private' by the Icon. Pretty primitive, no inheritance and dynamic dispatching. But as this is all self-made rules to mimic some of the advantages of OOP, I need to do a lot of thinking about such design decisions. Now the code is simple DAQ/presentation. A super-cluster/über-class (think of it as a composition of objects for all kind of settings made for the measurement) is coming into that VI. Then it's 'split' in a 'method' of that super-cluster/über-class. The super-cluster/class continues to the DAQ/producer loop with all the settings for the actual DAQ measurement and the signal processing (might be split into more loops later). A kind of factory method is creating a new object that contains the display-settings and the pre-initialized data. This goes down into the consumer loop. At this stage, I also create the P/C queue and it goes into both clusters/classes. To compare this to your implementation: * I use a factory method to create the slave-classes. This is where I pass the queue (in this case I also create it) and other information (*), the queue is invisible on the top level. * As this is a pure data P/C and not a message passing, I stop the consumer by destroying the queue. * Because the consumer is writing to an indicator, the 'loop' is top-level, but only contains a single VI+the indicator. (*) I'm unsure how this will evolve. Maybe all the settings needed in the consumer are just a pseudo-class on their own. But queue and those settings would be a nested-classifier only shared between the über-class and the consumer class. Now, just some thinking I had about this design: The main concern is merging the data from producer and consumer. The measurement report will need the settings from the über-class and and the measurement data. So several options I have: * Merge both loops clusters, kind of brute-force. * Modify the 'Send data' method to both call an (private) accessor of the über-class and hold the measurement data in both wires. Then I just 'kill' the consumer/display object. * Just put everything into the über-class. Then I call a parallelize method that creates the queue, branch the wire in both loops. Combine with above method. When the producer/consumer part is finished, actually both classes should be equal. Then I can reconsider everything when moving onto the next task. So, I was really planning to post the question here. But part of this projects challenges is, that I always get distracted by something more pressing. So it's hunting me since more than 5 years. And of course, each time I rewrite all from scratch because I know a much better way of doing it. Felix