Posts posted by GregFreeman

-

It's just basically this idea here posted by Ben over on the darkside. Thanks for your comments and suggestions; I'll play around with some of these ideas.

-

In general using "standard" G, I have a GUI manager FG that has all my references so my UI updates (basically anything that requires writing/reading from a property node) are encapsulated. But, if I need to write values to indicators on the front panel, I just wire directly up to them rather than having an action in my FG for something like "Update Control Value" that writes to the value property of the specific property node. In OOP, is it still kosher to do the writing to indicators on a main BD, or should even values be updated from within, say, the GUI Manager class?

-

Here is an example. Unzip this to <LabVIEW>/projects. Create a new project and a new VI. You will need to add a comment on that new, unsaved VI's block diagram that says "test" (omit the quotes). Then run my tool. It should find the VI, but when it tries to open a reference to it, it returns an error.

-

The application reference I have wired up is the one returned from "menu launch VI information.vi"

-

I have a tool I'm creating that recursively searches the active project for all VIs. I have found that if I try to use Open VI Reference on an unsaved VI, it fails (I am wiring up just the VI name as a string). It gives me error 1004, VI not in memory.

However, if I go to that specific project, create a new VI, and call Open VI Reference with "Untitled x" wired as a string, it opens the reference fine. Does the tools menu somehow run in a different context where it can't open a reference to an unsaved VI? Is there a way to make this work?

I should add, there are no problems when it's saved to disk and wired up as a path (somewhat obviously).

-

pretty awesome but questionably useful.

Agreed. Not very useful, I just thought it would be cool! There is a notify icon API someone wrote over at NI.com. It is set up very nicely, and uses dynamic and user events to register all the callbacks to be utilized in the event structure. If you can't find it let me know and I will dig it up and send you the zip or forward the link. It's always a bummer when I want to do something like a notify icon but realize someone has already done it, and done it well. Then I get torn between doing it for my own use, enjoyment and learning, but always have in the back of my mind that I'm just reinventing the wheel and as an engineer that pains me!

rolf, thanks for the suggestion. I may see what I can do, but as I said, since this is for my own enjoyment and not a top priority, I have a feeling writing this wrapper will fall by the wayside!

Edit: you said shell notify, I got mixed up! Either way, the notify icon is pretty cool, so I'll leave my spiel even though it is a bit unrelated!

-

I need to read up on this more. A perfect example of where this would be valuable: we had handshaking in a system where one loop was managing the DAQ and another managing the logging. We had to handshake with a PLC using relays controlled in the DAQ loop, based on different logging conditions (the log file was opened successfully, closed successfully, FTP'd successfully, etc.). Right now the code queues up a command to the logging loop to FTP a file, but then sits waiting for a response (success or fail) and does not move on! Talk about possibilities for locking up. Then, after the file is FTP'd to the host, the buffer has overflowed on the DAQ and needs to be reconfigured. For this application it's not a big deal because the file is only FTP'd after the test is completed. But for future reference it may be beneficial.

Edit: After reading through everything more in depth, maybe I did not have a great grasp on it at first. Seems to me that in my case I don't need some current data at some time in the future. There may be better methods for doing what I need to do, but I'm not sure if this solution is necessarily what I want. I'll keep reading and rereading to make sure I have a full understanding of everything and whether this will "fit the bill".
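For what it's worth, the "sits waiting for a response and does not move on" part can be sketched in text. This is a rough Python sketch, not the actual LabVIEW code: the loop names, the "ftp"/"stop" command strings, and the 10 ms timeout are all made up for illustration. The idea is that the DAQ loop waits on the reply with a short timeout and keeps reading in between, instead of waiting forever.

```python
import queue
import threading


def logging_loop(commands: queue.Queue, responses: queue.Queue) -> None:
    """Logging loop: handle commands until told to stop."""
    while True:
        command = commands.get()
        if command == "stop":
            break
        if command == "ftp":
            # Placeholder for the real transfer; it always reports success here.
            responses.put(("ftp", True))


def daq_loop(commands: queue.Queue, responses: queue.Queue) -> tuple:
    """DAQ loop: request the FTP, then keep acquiring while polling for the reply."""
    commands.put("ftp")
    reads_done = 0
    while True:
        reads_done += 1  # placeholder for one DAQ read, so the buffer keeps draining
        try:
            # Short timeout (assumed value) instead of waiting forever on the reply.
            _name, succeeded = responses.get(timeout=0.01)
        except queue.Empty:
            continue
        return reads_done, succeeded
```

To try it, start `logging_loop` in a `threading.Thread`, call `daq_loop` with the same two queues, then put "stop" on the command queue. In LabVIEW terms this is roughly a Dequeue Element with a timeout wired instead of -1.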

-

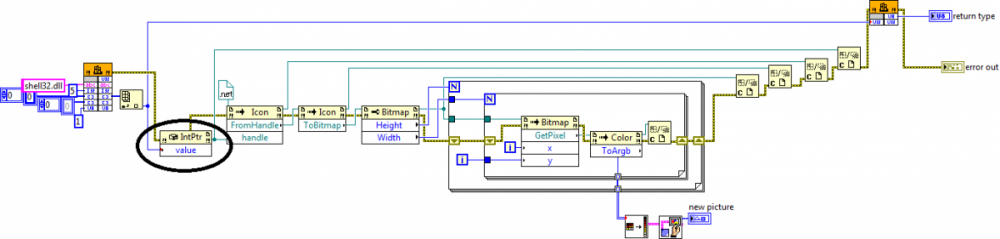

I was thinking it would be cool to write an API that would allow programmers to customize the thumbnail popups in Windows 7, especially with the ability to add buttons (see http://dotnet.dzone....ndows-7-taskbar and attached image). From what I can tell, even after taking the knowledgebase steps on NI's site to make .NET 4.0 available in LabVIEW, the necessary namespaces are not available in LabVIEW. Even if they were, I'm not sure how I'd get this to work because it requires XAML files. I suppose I would have to write a .NET wrapper, but then I don't think this would be dynamic enough to make into a standard API for LabVIEW applications that need more or fewer buttons without modifying the wrapper text code. Unless, somehow, you had all buttons fire a single event to be captured by LabVIEW, in which the event data had the name of the button pressed, allowing you to determine what to do on that button press. Suggestions, or is this just not going to be possible?
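To make the "single event carrying the button name" idea concrete, here is a minimal Python sketch. It says nothing about the real Windows 7 taskbar API or any .NET wrapper; the class and method names are hypothetical, and it only shows the dispatch side that the LabVIEW event case would play.

```python
from typing import Callable, Dict


class ThumbnailButtonEvents:
    """Hypothetical dispatcher: every button raises the same event, keyed by name."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], None]] = {}

    def register(self, button_name: str, handler: Callable[[], None]) -> None:
        """Associate a handler with a button name; no wrapper change is needed."""
        self._handlers[button_name] = handler

    def on_button_pressed(self, button_name: str) -> bool:
        """Single entry point for all buttons; returns False for unknown names."""
        handler = self._handlers.get(button_name)
        if handler is None:
            return False
        handler()
        return True
```

With this shape, adding or removing a button only changes what gets registered, not the wrapper that fires the event.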

-

Thanks for your help guys. It seems to be working OK. I changed my code so I read from TDMS and write to a CSV file in chunks, rather than reading the whole TDMS file, building a large array, converting it to a spreadsheet string, and doing one file write. Not only does this seem to help the memory management, but I think you were right about LabVIEW being lazy but smart enough to reuse that same buffer: as I exited each subVI there was some deallocation done, and after the first subVI was run, although this allocated memory hung around, it didn't seem to increase. Unfortunately I chased what seems to be a nonexistent problem (i.e. "It's easy to theorize what it should be doing and convince ourselves what it's probably doing, but that's not always the case. Usually, it does make more sense to just test it out and see what happens."). So anyway, good to know for future reference!
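The chunked approach looks roughly like this in text form. This is a Python sketch only: `read_in_chunks` is a stand-in for the chunked TDMS read (it slices an in-memory list rather than touching a real TDMS file), and both function names are made up for illustration.

```python
import csv
from typing import Iterable, Iterator, Sequence


def read_in_chunks(samples: Sequence[float], chunk_size: int) -> Iterator[Sequence[float]]:
    """Stand-in for a chunked TDMS read: yield chunk_size samples at a time."""
    for start in range(0, len(samples), chunk_size):
        yield samples[start:start + chunk_size]


def write_chunks_to_csv(chunks: Iterable[Sequence[float]], csv_path: str) -> int:
    """Write each chunk to the CSV as it arrives; return the number of rows written."""
    rows_written = 0
    with open(csv_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        for chunk in chunks:
            # Only one chunk is held in memory at a time.
            writer.writerows([value] for value in chunk)
            rows_written += len(chunk)
    return rows_written
```

The point is just that peak memory scales with the chunk size rather than with the size of the whole file.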

-

I may try posting it to the darkside if it will fit, and attach a link. There's probably some Rube Goldberg code in there and some things that could be improved, but I will go back and optimize if need be, so feel free to offer suggestions. I should also add the "other issues" I had were in different VIs, not the one I have posted. This one shows the issue with the memory freeing.

Edit: couldn't get it to fit, but here is a Dropbox link. Sorry if this method isn't "kosher" but I was tired of battling it!

-

Well, I ran into some other memory management issues in the meantime (i.e. tried to read all of the sections I cared about and put them in a 2D array, then write that array at once...fail, not enough memory to make that large a 2D array). So I'm going to have to write in chunks, but that's a different topic.

I was going to try adding my attachment again, but it's larger than the 10 MB I have available due to the TDMS file. I'll try to recreate a smaller one later so I can put it up. You may be correct that it will just hold this memory and reuse it. I am going to try to get everything working and see if it's an issue (I have to fix what I mentioned in the first paragraph). It still worries me that closing the VI doesn't free the memory. Yet, if you make a VI, just allocate a 100 million element array of doubles, then close the VI, the memory in that situation is freed when the VI is closed. In my VI using TDMS, there are still 400,000 KB that are not freed until LabVIEW is actually exited. I have worked with an AE a bit on this, who suggested using Request Deallocation, which I found doesn't work in this situation.

-

I am having an issue with memory when reading a TDMS file and converting it to an array to write to a spreadsheet file. If I look in Task Manager when I run the VI the first time, it allocates a bunch of memory, and only a small fraction of that memory is freed after the VI is run. Even closing the VI and project doesn't free the memory; I have to exit LabVIEW before the memory is freed.

Basically what happens is I open the VI and in task manager I see the memory increase (duh). Then I run the VI and it increases greatly (up to around 400,000 K), but when it finishes running only about 10 k is freed. Then every subsequent time I run, there is only about 10 k allocated then freed. So, the memory stops increasing, but it still holds on to a big chunk. The reason I worry this could be an issue is that I have many of these subVIs running one after the other, and if each one is hanging onto 300,000 K even after it's done running, I will begin to have memory issues. I am wondering if part of it could be that I'm in the dev environment, so I may build an exe to check. All my SubVIs do not have the panels opened, so I don't believe I should have a big array indicator showing data and taking up all that memory. Any thoughts or suggestions are appreciated.

Edit: Is my attachment there? My internet is going super slow so I'm not sure...

-

Got the news that I'm being sent to my first NIWeek! I'll be there walking around clueless, so someone save me from myself.

-

Found getUserAppReference.vi in LabVIEW 2011\vi.lib\Utility\allVisInMemory.llb

-

When a new VI is created, what is the best method, from within lv_new_vi.vi, for determining which project is owning this new VI?

-

Well, as so often happens when learning something new, I either:

1) think I get it but actually don't get it

2) when it comes to implementing it I get overwhelmed.

I believe this is a case of both. Part of my confusion is that Wikipedia seems to show delegation as a class with a member object that may be set, where this object is a parent of multiple different children. The member can then be set to a child object, and the programmer can always call delegate.method, which will call childthatwasset.method. Because each childthatwasset.method overrides parent.method, the class can delegate based on the object. The result is that only the member object set in the delegator changes, while the function calls exposed to the programmer don't change. A wrapper of sorts (which just sounds to me like inheritance with a wrapper layer, so maybe I'm reading the C++ code example wrong). Then, the NI example seems to show calling the same code from within different classes. Maybe I am misreading Eli's description. So, any clarification is appreciated.

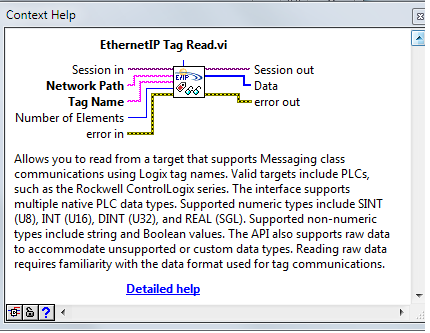

Now, the second issue I have is this. When setting this up, I'm not exactly sure how to handle everything. I basically have 3 different classes that all do the same thing: manage some handshaking. One will use DAQmx to read DI, one EtherNet/IP, one TCP/IP. I suppose each could still have an open, read, and close method which are delegated. A simple example would be much appreciated, as I seem to be making minimal progress!

Sorry for any typos or confusing descriptions. Wrote this from my phone.

Edit: Wikipedia I referenced http://en.m.wikipedia.org/wiki/Delegation_pattern#section_1
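As a rough picture of what I'm describing, here is how the three-transport setup might look in a text language. This is a Python sketch under my own assumptions, not the NI example or Eli's code: every class and method name below is hypothetical, and the transports return canned values instead of talking to DAQmx, EtherNet/IP, or a socket.

```python
from typing import Protocol


class HandshakeTransport(Protocol):
    """What the delegator expects; the transports need no common parent class."""

    def open(self) -> None: ...
    def read(self) -> bool: ...
    def close(self) -> None: ...


class DigitalInputTransport:
    """Placeholder for a DAQmx digital-input read."""

    def __init__(self, line_state: bool) -> None:
        self._line_state = line_state
        self.is_open = False

    def open(self) -> None:
        self.is_open = True

    def read(self) -> bool:
        return self._line_state

    def close(self) -> None:
        self.is_open = False


class EthernetIpTransport:
    """Placeholder for an EtherNet/IP tag read; open and close are no-ops."""

    def __init__(self, tag_value: bool) -> None:
        self._tag_value = tag_value

    def open(self) -> None:
        pass

    def read(self) -> bool:
        return self._tag_value

    def close(self) -> None:
        pass


class TcpSimulatorTransport:
    """Placeholder for the TCP/IP simulator."""

    def __init__(self, simulated_value: bool) -> None:
        self._simulated_value = simulated_value
        self.is_open = False

    def open(self) -> None:
        self.is_open = True

    def read(self) -> bool:
        return self._simulated_value

    def close(self) -> None:
        self.is_open = False


class Handshaker:
    """Delegator: callers always use plc_ready(); the member object does the work."""

    def __init__(self, transport: HandshakeTransport) -> None:
        self._transport = transport

    def set_transport(self, transport: HandshakeTransport) -> None:
        self._transport = transport

    def plc_ready(self) -> bool:
        self._transport.open()
        try:
            return self._transport.read()
        finally:
            self._transport.close()
```

Switching systems is then one `set_transport` call, and the code calling `plc_ready()` never changes.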

-

I currently have 3 monitors (two up top, one in the center below). Hope to get a 4th soon.

I see what Darren is going for...

-

Just wanted to follow up. This actually should work really well because one system has digital inputs for handshaking, another has E/IP tags, and the third would be my simulation with TCP/IP. This should allow for easy switching between them.

-

"To have two independent classes share common functionality without putting that functionality into a common parent class."

Sounds familiar

-

I have a question relating to inheritance in one of my programs. When at a customer's site we have EtherNet/IP available, which will ultimately be what is used for communication between a PLC and our application upon delivery. However, because I don't have EtherNet/IP available elsewhere, I was hoping to use TCP/IP when in the office and write a simulator to substitute for E/IP when I don't have it available. The issue is, it doesn't really make sense to have TCP/IP and EtherNet/IP classes both inherit from the same base class (say, a communication class), because the EtherNet/IP drivers don't really expose functionality similar to TCP/IP. With EtherNet/IP, although it uses TCP/IP underneath, the API is just a polymorphic VI with an input for the address and tag name. You don't need to open anything, hold a reference to the session, etc. I am wondering if there is some way to set up the class structure so I can easily switch out EtherNet/IP with TCP/IP and vice versa, even if it does not make sense for them to both inherit from the same base class.

-

Yeah, I am not actually using this (luckily); it was just a thrown-together example. But I will remember that in the future, because the speed (or lack thereof) didn't even cross my mind. Not to mention, I didn't realize that library existed.

-

Not all dlls contain .NET metadata so they can't be loaded as .NET assemblies. I believe shell32 would be one of those. You would need to use LoadLibrary from the Win32 API to load it. Also you should just load it by name. The system will locate the appropriate copy.

Thanks, I will go the CLFN route.

-

Yes, those events will always come in the predictable order, as asbo postulated.

asbo: Never forget that "for" can be abused as "while" in a lot of C-like languages. Read it as "for(;imstuck;)" where imstuck is true. Semicolons would be needed in C++, but there are other similar languages where you wouldn't need them.

Makes me wonder if we're allowed semicolons in our usernames...

...although, this actually stops fairly quickly.

CLD Exam Traffic Controller. My Attempt

in Certification and Training

I took the CLD two years ago. It was a 4-hour exam, we had these same examples, and if I remember correctly the time limit for them was also 4 hours. I believe the CLA used to be a 2-hour coding plus 2-hour written exam, so you may be thinking of that. But the CLA example posted now is definitely a 4-hour example! I will say, the CLD sample exams were all slightly shorter than the actual one I took.