GregFreeman

Posts: 323
Days Won: 6 (last won the day on January 9)

Profile Information

Gender: Male
Location: Texas

LabVIEW Information

Version: LabVIEW 2013
Since: 2008

-

Strange Behavior Checking Running State of VI

GregFreeman replied to GregFreeman's topic in LabVIEW General

I don't believe there are any DVRs, but I can't be certain. There are, however, various reference types everywhere. That being said, for now I have just extended the timeout and it seems to be fine. I am going to assume something akin to what you mentioned is going on in some way, shape, or form. The code base is a tangled mess, and trying to get to the root cause may just be a futile exercise.

-

I am working with some legacy code that I didn't write and am seeing a strange issue, but I'm really not sure where it has come from. In the year I have worked with this code, this hasn't come up, and I haven't changed anything that I think would affect it.

In this code, VIs are launched dynamically from a launcher loop using the Run VI method. When the dynamic VI is finished, it sends an event back to the launcher loop, which is then responsible for dynamically launching the next VI. When the launcher loop receives the message that the dynamically launched VI is finished, it polls that VI's Running state until the state changes from Top Level VI to Idle before launching the next VI. It does this polling with a two-second timeout.

This all works fine most of the time, but then randomly the state of the launched VI will continue to be returned as Top Level VI, not Idle, even though from what I can tell the VI has stopped. I checked this by logging a timestamp to a log file as the very last thing my dynamically launched VI does. I then logged a timestamp after the timeout, and the two are 2 seconds apart. So this tells me that even though I think the launched VI is finished, its Running state as seen by LabVIEW is not changing to Idle.

I am aware this is not the best way for this to be implemented, but as I mentioned this is a legacy system and I really don't have the ability to refactor the entire application. I'm just trying to understand what may be going on. For now, I've just increased the timeout, hoping this will mitigate the issue in the random cases where it pops up. But I'd really like to find the root cause.
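To help narrow this down, the poll loop can report how long it actually waited instead of only pass/fail, so the log shows whether a longer timeout would have caught the transition. The following is a minimal sketch in C rather than LabVIEW; the state enum, the callbacks, and the `fake_vi_t` test double are all hypothetical stand-ins for reading the VI's state, not LabVIEW APIs.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins for the VI state read and the wait function. */
typedef enum { VI_IDLE, VI_RUNNING_TOP_LEVEL } vi_state_t;
typedef vi_state_t (*get_state_fn)(void *ctx);
typedef void (*sleep_ms_fn)(uint32_t ms);

typedef struct {
    bool     became_idle; /* false: the timeout expired first */
    uint32_t waited_ms;   /* nominal wait (sum of requested sleeps) */
    uint32_t polls;       /* number of state reads performed */
} poll_result_t;

/* Poll until the state reads idle or the nominal wait reaches timeout_ms. */
poll_result_t wait_until_idle(get_state_fn get_state, void *ctx,
                              sleep_ms_fn sleep_ms,
                              uint32_t interval_ms, uint32_t timeout_ms)
{
    poll_result_t r = { false, 0, 0 };
    for (;;) {
        r.polls++;
        if (get_state(ctx) == VI_IDLE) {
            r.became_idle = true;
            return r;
        }
        if (r.waited_ms >= timeout_ms)
            return r;
        sleep_ms(interval_ms);
        r.waited_ms += interval_ms;
    }
}

/* Test double: reports "running" for a fixed number of reads, then idle. */
typedef struct { uint32_t reads_until_idle; } fake_vi_t;

vi_state_t fake_get_state(void *ctx)
{
    fake_vi_t *vi = (fake_vi_t *)ctx;
    if (vi->reads_until_idle > 0) {
        vi->reads_until_idle--;
        return VI_RUNNING_TOP_LEVEL;
    }
    return VI_IDLE;
}

/* No-op sleep so the sketch runs instantly. */
void no_sleep(uint32_t ms) { (void)ms; }
```

Logging `waited_ms` and `polls` on every launch, not only on failures, would show whether the slow cases are rare outliers or the tail of a gradual drift.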

-

I agree and I didn't mean to imply they are mutually exclusive.

I assume you mean the fact that you have to create many messages or many refnums, which results in a lot of extra classes/code? If so, I do somewhat agree with this. If not, maybe you can provide some more detail on the "why?"

I also completely agree with this. I am just partial to leveraging built-in mechanisms where I can, and I think it makes for clean, readable code with native structures. That's not to say there aren't other viable, valuable solutions. But it is the reason why I tend to lean towards user events.

I guess if I were to summarize, the thing I have trouble with can be boiled down to two points:

1) I want to limit the amount a developer has to know about how to create additional user events for a particular process as a regular part of a framework, and how to pass them back to the caller when necessary. I just used the AF as an example here because I think it handles the ownership of the queues nicely. But if you do want to add additional queues or events, for example, you have to write that same boilerplate to create the communication mechanism and pass it back to the caller. I want to enforce this, with say a must-override VI to create child events and a must-register VI in the caller to register for them, but the strict typing of events makes this difficult due to connector pane differences. I have tried leveraging malleable VIs to limit some of the boilerplate code but haven't come up with an ideal solution yet.

2) I don't like that I have to expose raw refnums to other processes in order to register for events. But I suppose this is something I have to live with for now.

-

Thanks! The mediator pattern may be a good option. The main drawback I have with any sort of command pattern is that I can't leverage a huge benefit of user events, which is that you can have multiple processes register and only have to send the message out once for them all to receive it. It's great to be able to send a single message and have 3 different windows receive it, my logger receive it, etc., and all handle it differently.

The command pattern, I believe, would require you to dynamic dispatch on the message's Do.vi to get a concrete message type, and then immediately pass that concrete type into an Actor's dynamic dispatch "Handle <xyz> Message" VI, which you could override for each child actor. The problem here is that you're bumping the "Handle Message" into the base class of the Actor, which may not be applicable to many of its children.

It seems the correct answer is that everything has its tradeoffs, and it's just a matter of which you want to work with.
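The "send once, many receivers" property described above can be sketched outside LabVIEW as a small registration list. This is only an analogy in C with hypothetical names (`broadcast_t`, `window_t`, `logger_t`): it calls listeners synchronously, whereas LabVIEW user events queue the data separately for each registration.

```c
#include <stddef.h>
#include <string.h>

#define MAX_LISTENERS 8

typedef void (*listener_fn)(void *state, const char *msg);

/* One event with any number of registered listeners (up to MAX_LISTENERS). */
typedef struct {
    listener_fn fn[MAX_LISTENERS];
    void       *state[MAX_LISTENERS];
    size_t      count;
} broadcast_t;

/* Returns 0 on success, -1 if the arguments are invalid or the list is full. */
int broadcast_register(broadcast_t *b, listener_fn fn, void *state)
{
    if (!b || !fn || b->count >= MAX_LISTENERS)
        return -1;
    b->fn[b->count] = fn;
    b->state[b->count] = state;
    b->count++;
    return 0;
}

/* The sender fires once; every listener receives the same message.
   Returns the number of listeners that were called. */
size_t broadcast_send(const broadcast_t *b, const char *msg)
{
    size_t i;
    for (i = 0; i < b->count; i++)
        b->fn[i](b->state[i], msg);
    return b->count;
}

/* Two listeners that handle the same message differently. */
typedef struct { char last_text[64]; } window_t;
typedef struct { size_t bytes_logged; } logger_t;

void window_on_msg(void *state, const char *msg)
{
    window_t *w = (window_t *)state;
    strncpy(w->last_text, msg, sizeof w->last_text - 1);
    w->last_text[sizeof w->last_text - 1] = '\0';
}

void logger_on_msg(void *state, const char *msg)
{
    logger_t *l = (logger_t *)state;
    l->bytes_logged += strlen(msg);
}
```

The sender never learns who is listening, which is the property a per-receiver command message gives up.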

-

Yes, and not only this, but you are essentially required to expose a refnum to a non-owner in order for it to register, which has never sat right with me. I think I should just live with it, since I've revisited this what seems like hundreds of times.

I've tried wrapping the logic up in a class with register methods exposed, but it becomes more difficult if a VI is launched asynchronously. In this case you want the parallel VI to create its references so they are tied to the lifetime of that VI, not the caller. Because classes are by value, if you do this you have to pass back all the created references, à la Actor Framework and its queues. AF does this well, but I find the overhead of implementing this paradigm with multiple user events in child classes a lot more tedious.

In some old thread I saw the idea pitched for "registration only" user event refnums, which I think would be a fine compromise and something I could live with.
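As a rough illustration of what a "registration only" reference could look like, here is a sketch in C. It is not a LabVIEW feature and every name in it is hypothetical: the owner keeps the full event object, and other parties receive a token whose only operation is subscribing.

```c
#include <stddef.h>

#define MAX_HANDLERS 4

typedef void (*handler_fn)(void *ctx, int value);

/* Owner-side object: the owner may fire it. */
typedef struct {
    handler_fn fn[MAX_HANDLERS];
    void      *ctx[MAX_HANDLERS];
    size_t     count;
} owned_event_t;

/* Token handed to non-owners. The API below offers it only "subscribe".
   In a single file this is a convention; declaring owned_event_t as an
   opaque type in a public header would let the compiler enforce it. */
typedef struct { owned_event_t *ev; } reg_only_t;

reg_only_t owned_event_share(owned_event_t *ev)
{
    reg_only_t token;
    token.ev = ev;
    return token;
}

/* Returns 0 on success, -1 if the token is invalid or the list is full. */
int reg_only_subscribe(reg_only_t token, handler_fn fn, void *ctx)
{
    owned_event_t *ev = token.ev;
    if (!ev || !fn || ev->count >= MAX_HANDLERS)
        return -1;
    ev->fn[ev->count] = fn;
    ev->ctx[ev->count] = ctx;
    ev->count++;
    return 0;
}

/* Only code holding the owned_event_t itself is meant to call this.
   Returns the number of handlers that were called. */
size_t owned_event_fire(owned_event_t *ev, int value)
{
    size_t i;
    for (i = 0; i < ev->count; i++)
        ev->fn[i](ev->ctx[i], value);
    return ev->count;
}

/* Example handler: accumulates every value it receives. */
void sum_handler(void *ctx, int value)
{
    *(int *)ctx += value;
}
```

A subscriber can pass the token along freely, but nothing in the token's API lets it generate or destroy the event.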

-

I have been going back and forth on the best method for handling shared data between two processes which may or may not exist at the same time. Assume you have processes A and B, and process A is running while B is not. At some point in the future, when B begins running, A needs to register for some events from B. I see two ways to handle this.

The first way is aggregation. Some parent process that has visibility of both A and B is responsible for creating the user event refnum and passing it to both processes if/when they are created. This is a simple solution but can be a bit ugly in my opinion, since the refnum will exist even in scenarios where it is unused, among some other issues with encapsulation and the like.

The second option is to use composition: create the refnum in process B when it is launched, and pass it to process A. This would be done by sending a message to process A that gives it the refnum to register with. This seems to be the cleanest method from an encapsulation standpoint, but it also seems to add a lot of additional code to the framework compared to the aggregation method above.

There are of course other pros and cons to each of these, but those are the most general ones I see. I just want to get a feel for what other people out there are doing to solve this.

-

Static VI References with Class, in Class not allowed?

GregFreeman replied to hooovahh's topic in Object-Oriented Programming

FWIW, I have always just stored this in a variant in the class private data, and written a private wrapper VI to convert to and from the reference/variant. It makes me twitch slightly every time I do it, but it's functional, so that's good enough for me.

It's ironic because I just used this the other day, and was debating posting a question on this subject -- whether or not anyone else was using Start Asynchronous Call nodes with Wait On Asynchronous Call to run things like dialogs. I have been using this to manage the shutdown sequence of windows or processes that run dynamically, even if I don't need data back, ensuring they finish before I close references etc. Each dynamic process keeps a reference to any child processes it launches, and when my app shuts down, each layer is responsible for waiting for all of its async calls to finish before it finishes itself. It seems to propagate nicely up the stack. Just here waiting for someone to tell me about some caveat I didn't consider.

-

Reusable Events Between Parent and Child Classes

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Sounds good. I have decided to just register for the events scoped to the parent in the children's event structures. It does result in some duplicate event handling code between children, which I'd like to live in the parent, but it's not really that big a deal. I may refactor to use something more like the Actor Framework for this class, but with the minimal number of events I don't think I'd get a whole lot of lift from refactoring.

-

Reusable Events Between Parent and Child Classes

GregFreeman replied to GregFreeman's topic in Object-Oriented Programming

Yes, this is the solution I keep wanting to land on, except it doesn't cover the case where Event C wants to do something with parent state which is updated from an event. Using your example, assume event A is triggered and the string value becomes state that belongs to the parent. Now assume event C triggers writing that string to a file. The child event handler loop will not have access to the string that was updated in the parent event handler, since the wire was split. I am starting to think that I am trying to fit what is more of a by-reference paradigm into a by-value language, and that the right solution, if this is really needed, is to use a by-reference class.

-

I have struggled with this for a long time, but haven't really come up with a solution. I actually found an old message I sent to AQ about 6 years ago (wow) which is related to this, but his response fell by the wayside and I want to revisit it. In that case, it was about dynamic events to reuse code that is part of a Dialog, much like Windows would use OnOK and OnCancel callbacks that were not coupled to a specific dialog, but instead took a dynamic reference to controls on the child dialog.

Assume you have a parent class Base and a child class Child. Base has events A and B; Child has event C. You have dynamic dispatch VIs as event handlers in your Base class -- OnA and OnB. What I haven't figured out is: can I reuse OnA and OnB without having to register for Event A and Event B in the child class's event structure?

It seems the general methodology would be to have your child class have an event structure, your parent class have a method called "RegisterForEvents" which returns a registration refnum for the A and B events, and then your child class register for these events in its own event structure. Inside the child's event structure, you would then drop the OnA and OnB methods in their respective event cases.

Now assume you have Child1, Child2, and Child3, which all inherit from Base. They all have this duplicate registration code, duplicate event cases, and duplicate handler VIs dropped in those event cases. I would like to be able to pull that duplicate logic out. But the only way I can figure out to do that is to have a VI with an event structure in the Base class to encapsulate all the parent event logic, then another in the child class to encapsulate the child event handling. But now I end up needing two event handling loops on a block diagram, and because classes are by value, I can't easily share state.

Has anyone else battled with this problem? Hopefully this makes sense. I can draw up an example if needed.

-

DLL Linked List To Array of Strings

GregFreeman replied to GregFreeman's topic in Calling External Code

Got it, this makes sense. Thanks! I suppose I just didn't correctly reverse engineer the data structures LabVIEW wanted from the output I got when I created the .c file. TD1 has a TD2, not a TD2Handle. Looking at it again with your clarification, it makes sense:

```c
typedef struct {
    int32_t numPorts;
    LStrHandle Sn;
} TD2;

typedef struct {
    int32_t dimSize;
    TD2 elt[1];
} TD1;
typedef TD1 **TD1Hdl;
```
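To make the "TD2, not TD2Handle" point concrete, here is a small self-contained sketch of that layout. It does not use LabVIEW's `extcode.h` or memory manager: the `LStr` typedef is a mock of the usual length-prefixed layout, and `malloc`/`calloc` stand in for real handle allocation, so all `mock_` functions are hypothetical. A real DLL would resize the handles through the LabVIEW memory manager and would also need to respect LabVIEW's structure packing for the target platform.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Mock of the LabVIEW string layout: length-prefixed, not NUL-terminated. */
typedef struct { int32_t cnt; unsigned char str[1]; } LStr, *LStrPtr, **LStrHandle;

typedef struct { int32_t numPorts; LStrHandle Sn; } TD2;
typedef struct { int32_t dimSize; TD2 elt[1]; } TD1;
typedef TD1 **TD1Hdl;

/* Bytes needed for one block holding n clusters stored inline. */
size_t td1_size(int32_t n)
{
    return offsetof(TD1, elt) + (size_t)n * sizeof(TD2);
}

/* Stand-in for allocating a LabVIEW string handle. */
static LStrHandle mock_new_lstr(const char *s)
{
    size_t len = strlen(s);
    LStrPtr p = malloc(sizeof(LStr) + len);
    LStrHandle h = malloc(sizeof *h);
    if (!p || !h) { free(p); free(h); return NULL; }
    p->cnt = (int32_t)len;
    memcpy(p->str, s, len);
    *h = p;
    return h;
}

/* Build the array: one block for all clusters, one handle per string. */
TD1Hdl mock_build_array(const char *const sn[], const int32_t ports[], int32_t n)
{
    TD1 *block;
    TD1Hdl h;
    int32_t i;
    if (n < 0) return NULL;
    block = calloc(1, td1_size(n > 0 ? n : 1));
    h = malloc(sizeof *h);
    if (!block || !h) { free(block); free(h); return NULL; }
    block->dimSize = n;
    for (i = 0; i < n; i++) {
        TD2 *e = block->elt + i;   /* the element itself, not a handle to it */
        e->numPorts = ports[i];
        e->Sn = mock_new_lstr(sn[i]);
    }
    *h = block;
    return h;
}

/* Release the strings first, then the cluster block and its handle. */
void mock_free_array(TD1Hdl h)
{
    int32_t i;
    if (!h) return;
    for (i = 0; i < (*h)->dimSize; i++) {
        LStrHandle s = (*h)->elt[i].Sn;
        if (s) { free(*s); free(s); }
    }
    free(*h);
    free(h);
}
```

The only per-element allocation is the string handle inside each cluster; the clusters themselves live back to back in the array's single block.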

DLL Linked List To Array of Strings

GregFreeman replied to GregFreeman's topic in Calling External Code

I've taken this one step further, because I realized I will need to return more than just an array of strings: I need to return an array of clusters. I have modified the DLL, and the sprintf statement seems to output the correct values, but I'm getting garbage back in LabVIEW. My best guess is it has something to do with what my handles are pointing at, but I haven't been able to figure out the issue.

```c
/*Free an enumeration Linked List*/
void EXPORT_API iir_usb_relay_device_free_enumerate(IIR_USB_RELAY_DEVICE_INFO_T* info)
{
    //usb_relay_device_free_enumerate((struct usb_relay_device_info*)info);
    IIR_USB_RELAY_DEVICE_INFO_T *t;
    if (info)
    {
        while (info)
        {
            t = info;
            info = info->next;
            free(t->serial_number);
            free(t);
        }
    }
}

static MgErr resize_array_handle_if_required(DevInfoArrayHdl* hdl, const int32 requestedSize, int32* currentSize)
{
    MgErr err = mgNoErr;
    if (requestedSize >= *currentSize)
    {
        if (*currentSize)
            *currentSize = *currentSize << 1;
        else
            *currentSize = 8;
        err = NumericArrayResize(uPtr, 1, (UHandle*)hdl, *currentSize);
        for (int i = 0; i < *currentSize;i++)
        {
            *((**hdl)->elm + i) = (DevInfoHandle)DSNewHClr(sizeof(DevInfo));
        }
    }
    return err;
}

static MgErr add_dev_info_to_labview_array(DevInfoHandle* pH, const IIR_USB_RELAY_DEVICE_INFO_T* info)
{
    MgErr err = mgNoErr;
    int len, i = 0;
    (**pH)->iir_usb_relay_device_type = info->type;
    len = strlen(info->serial_number);
    err = NumericArrayResize(uB, 1, (UHandle*)&((**pH)->elm), len);
    if (!err)
    {
        MoveBlock(info->serial_number, LStrBuf((*(**pH)->elm)), len);
        LStrLen(*(**pH)->elm) = len;
    }
    return err;
}

static void free_unused_array_memory(DevInfoArrayHdl* hdl)
{
    int n, i = 0;
    DevInfoHandle* pH = NULL;
    if (*hdl)
    {
        /* If the incoming array was bigger than the new one, make sure to deallocate
           superfluous strings in the array! This may look superstitious but is a very
           valid possibility as LabVIEW may decide to reuse the array from a previous
           call to this function in any Call Library Node instance! */
        n = (**hdl)->cnt;
        for (pH = (**hdl)->elm + (n - 1); n > i; n--, pH--)
        {
            if (*pH)
            {
                DSDisposeHandle(*pH);
                *pH = NULL;
            }
        }
    }
}

IIR_USB_RELAY_DEVICE_INFO_T EXPORT_API * iir_usb_relay_device_enumerate(void)
{
    //return (IIR_USB_RELAY_DEVICE_INFO_T*)usb_relay_device_enumerate();
    IIR_USB_RELAY_DEVICE_INFO_T* ptr = NULL;
    IIR_USB_RELAY_DEVICE_INFO_T* deviceInfo = NULL;
    IIR_USB_RELAY_DEVICE_INFO_T *prev = NULL;
    int len = 0;
    const char* sn[] = { "abcd", "efgh", "ijkl", NULL };
    IIR_USB_RELAY_DEVICE_TYPE deviceType[] = { IIR_USB_RELAY_DEVICE_ONE_CHANNEL, IIR_USB_RELAY_DEVICE_TWO_CHANNEL, IIR_USB_RELAY_DEVICE_FOUR_CHANNEL };
    for (int j = 0;sn[j];j++)
    {
        IIR_USB_RELAY_DEVICE_INFO_T* info = (IIR_USB_RELAY_DEVICE_INFO_T*)malloc(sizeof(IIR_USB_RELAY_DEVICE_INFO_T));
        len = (int)strlen(sn[j]) + 1;
        info->serial_number = (unsigned char*)malloc(len);
        info->type = deviceType[j];
        memcpy(info->serial_number, sn[j], len);
        info->next = NULL;
        if (!deviceInfo)
        {
            deviceInfo = info;
        }
        else
        {
            prev->next = info;
        }
        prev = info;
    }
    return deviceInfo;
}

int EXPORT_API iir_get_device_info(DevInfoArrayHdl *arr)
{
    MgErr err = mgNoErr;
    IIR_USB_RELAY_DEVICE_INFO_T* ptr = NULL, *prev = NULL;
    DevInfoHandle* pDevInfo = NULL;
    IIR_USB_RELAY_DEVICE_INFO_T* deviceInfo = (IIR_USB_RELAY_DEVICE_INFO_T*)iir_usb_relay_device_enumerate();
    int i = 0, n = (*arr) ? (**arr)->cnt : 0;
    for (ptr = deviceInfo; ptr; ptr = ptr->next, i++)
    {
        err = resize_array_handle_if_required(arr, i, &n);
        if (err)
            break;
        pDevInfo = (**arr)->elm + i;
        err = add_dev_info_to_labview_array(pDevInfo,ptr);
        if(err)
            break;
    }
    iir_usb_relay_device_free_enumerate(deviceInfo);
    free_unused_array_memory(arr);
    DevInfoHandle* hdl2;
    char buf[1024];
    (**arr)->cnt = i;
    for (hdl2 = (**arr)->elm,i=0; i<(**arr)->cnt ;i++,hdl2++)
    {
        sprintf_s(buf, 1024, "%s: %d", (*(**hdl2)->elm)->str, (**hdl2)->iir_usb_relay_device_type);
    }
    return err;
}
```

-

DLL Linked List To Array of Strings

GregFreeman replied to GregFreeman's topic in Calling External Code

Very helpful, now it's working. Another big problem I realized is that I had the CLFN set to WINAPI, not C, calling convention 🤦‍♂️
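For context on why that setting matters: on 32-bit Windows, stdcall (WINAPI) and cdecl disagree about which side cleans up the stack after a call, so a mismatch between the DLL and the Call Library Function Node can corrupt the stack; on 64-bit Windows there is a single convention and the setting makes no difference. One way to make the DLL side explicit is sketched below. The macro and function names are hypothetical, since the posts do not show how `EXPORT_API` is defined.

```c
/* Hypothetical macros that spell out both the export and the calling convention. */
#if defined(_WIN32)
#  define DEMO_EXPORT __declspec(dllexport)
#  define DEMO_CDECL  __cdecl   /* pairs with the "C" setting on the CLFN */
#else
#  define DEMO_EXPORT __attribute__((visibility("default")))
#  define DEMO_CDECL            /* no calling-convention keyword needed here */
#endif

/* Example export: counts the non-zero entries in an array of device types. */
DEMO_EXPORT int DEMO_CDECL demo_count_devices(const int *types, int n)
{
    int i, count = 0;
    for (i = 0; i < n; i++) {
        if (types[i] != 0)
            count++;
    }
    return count;
}
```

With the convention written into every prototype, the CLFN configuration can be checked against the header instead of being guessed.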

DLL Linked List To Array of Strings

GregFreeman replied to GregFreeman's topic in Calling External Code

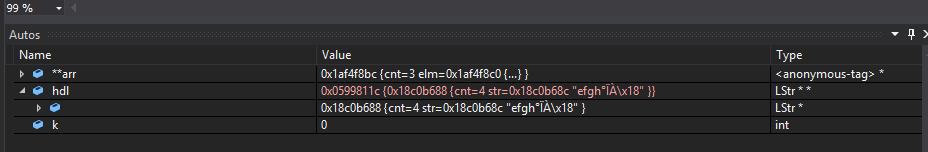

Ah, that makes sense. Needs to be a pointer to the handle... I am no longer crashing mid-function, but I do crash when it reaches the end. I have attached the code as it stands now. I'm creating a linked list myself since I don't have access to the hardware.

I am seeing something strange in the Visual Studio debugger. Notice the value of the str variable in hdl has some junk after "efgh". It's making me think something in the resize and MoveBlock isn't quite right, but I can't figure out what.

```c
int EXPORT_API iir_get_serial_numbers(LStrArrayHandle *arr)
{
    MgErr err = mgNoErr;
    IIR_USB_RELAY_DEVICE_INFO_T* ptr = NULL;
    IIR_USB_RELAY_DEVICE_INFO_T* deviceInfo = NULL;
    IIR_USB_RELAY_DEVICE_INFO_T *prev = NULL;
    LStrHandle *pH = NULL;
    const char* sn[] = { "abcd", "efgh", "ijkl" };
    int len, i = 0, n = (*arr) ? (**arr)->cnt : 0;

    for (int j = 0;j < 3;++j)
    {
        IIR_USB_RELAY_DEVICE_INFO_T* info = (IIR_USB_RELAY_DEVICE_INFO_T*)malloc(sizeof(IIR_USB_RELAY_DEVICE_INFO_T));
        info->serial_number = (unsigned char*)malloc(5*sizeof(unsigned char*));
        strcpy_s(info->serial_number,5*sizeof(unsigned char*),sn[j]);
        info->next = NULL;
        if (!deviceInfo)
        {
            deviceInfo = info;
        }
        else
        {
            prev->next = info;
        }
        prev = info;
    }

    //IIR_USB_RELAY_DEVICE_INFO_T* deviceInfo = (IIR_USB_RELAY_DEVICE_INFO_T*)iir_usb_relay_device_enumerate();

    /* This only works reliably if it is guaranteed that the deviceInfo linked list
       won't change in the background while we are in this function! */
    for (ptr = deviceInfo; ptr; ptr = ptr->next, i++)
    {
        /* Resize the array handle only in power of 2 intervals to reduce the potential
           overhead for resizing and reallocating the array buffer every time! */
        if (i >= n)
        {
            if (n)
                n = n << 1;
            else
                n = 8;
            err = NumericArrayResize(uPtr, 1, (UHandle*)arr, n);
            if (err)
                break;
        }
        len = strlen(ptr->serial_number);
        pH = (**arr)->elm + i;
        err = NumericArrayResize(uB, 1, (UHandle*)pH, len);
        if (!err)
        {
            MoveBlock(ptr->serial_number, LStrBuf(**pH), len);
            LStrLen(**pH) = len;
        }
        else
            break;
    }

    if (deviceInfo)
    {
        IIR_USB_RELAY_DEVICE_INFO_T *t;
        while (deviceInfo != NULL)
        {
            t = deviceInfo;
            deviceInfo = deviceInfo->next;
            free(t);
        }
    }

    /* If we did not find any device AND the incoming array was empty it may be NULL
       as this is the canonical empty array value in LabVIEW. So check that we have
       not such a canonical empty array before trying to do anything with it! It is
       valid to return a valid array handle with the count value set to 0 to indicate
       an empty array! */
    if (*arr)
    {
        /* If the incoming array was bigger than the new one, make sure to deallocate
           superfluous strings in the array! This may look superstitious but is a very
           valid possibility as LabVIEW may decide to reuse the array from a previous
           call to this function in any Call Library Node instance! */
        n = (**arr)->cnt;
        for (pH = (**arr)->elm + (n - 1); n > i; n--, pH--)
        {
            if (*pH)
            {
                DSDisposeHandle(*pH);
                *pH = NULL;
            }
        }
        (**arr)->cnt = i + 1;
        char buf[1024];
        LStrHandle hdl;
        for (int k = 0; k < ((**arr)->cnt);++k)
        {
            hdl = (**arr)->elm[k];
        }
    }
    //iir_usb_relay_device_free_enumerate(deviceInfo);
    return err;
}
```
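One likely explanation for the junk shown after "efgh" in the debugger is that LabVIEW strings are length-prefixed and carry no NUL terminator, so any viewer that treats `str` as a C string reads past the `len` bytes that were copied. That alone would not indicate a bad resize or MoveBlock. A minimal sketch of a debugging helper that respects the length field follows; the `LStr` typedef is a mock of the usual layout and `lstr_to_cstr` is a hypothetical helper, not a LabVIEW function.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Mock of the LabVIEW string layout: cnt bytes of data, no terminator. */
typedef struct { int32_t cnt; unsigned char str[1]; } LStr;

/* Copy the string into a caller-supplied buffer and NUL-terminate it so it
   can be printed or inspected as a C string. Copies at most cap - 1 bytes
   and returns the number of bytes copied. */
size_t lstr_to_cstr(const LStr *s, char *out, size_t cap)
{
    size_t n;
    if (!out || cap == 0)
        return 0;
    n = (s && s->cnt > 0) ? (size_t)s->cnt : 0;
    if (n > cap - 1)
        n = cap - 1;
    if (n)
        memcpy(out, s->str, n);
    out[n] = '\0';
    return n;
}
```

For a quick look without copying, a length-limited format such as `printf("%.*s", (int)len, buf)` achieves the same effect.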