Everything posted by AlexA

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

Ahhh, interesting! Thanks for that take on things. I'll think a little further about what's going on.

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

This is getting a little abstract for me. To concretize the discussion a little, @smithd, from what you say I'm visualizing something like: a wrapper (subVI) around TDMS files (for discussion's sake) which internally looks like a message handler with messages for open/close/write. I assume it would be a re-entrant VI that you drop on the block diagram of anything that needs file IO and hook up internally. Is this what I should infer from your 1 file/qmh statement?

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

That's my point, I guess. Why manage a central repository of file references for streaming files from different modules when the OS can handle the scheduling better than I can anyway? Why not just let the individual modules open and close their own files as they require?

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

Ok, thanks very much for the clarification. I guess my original question boils down to "how much can we lean on the OS file handling code for handling multiple streaming files?" I acknowledge your point about someone having to write the code. If the OS (Windows) can be trusted to handle multiple open file handles, absorbing multiple streams of data gracefully, then it makes more sense to let people write their own file IO stuff local to their module. There's actually not much difference between letting the OS handle it completely and what I'm currently doing. There's no effort to schedule writes in my current architecture, so it might as well be the same thing as everyone just trying to write their own stuff independently.

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

So you're saying if someone comes along with a new module and a new way of saving data, the standard way to interop is for them to implement their own File IO? The workflow for me adding something to your application is to roll my own file IO inside my module?

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

Ok, I think I understand. What do you do if a new module someone is developing wants to use a core service in a different way? I.e. wants to save its data differently?

-

Should File IO be handled by a central actor?

AlexA replied to AlexA's topic in Database and File IO

Interesting, let me see if I understand you correctly, as there are a number of implementation differences which I might get hung up on. You would copy and paste that File subVI into every module that may want to do file IO. You access it using the named queues functionality, rather than maintaining queue references or anything like that. I note that the File IO is non-reentrant. What does this mean if there are multiple "plugins" which have it on their block diagrams? Or are you proposing that each plugin has essentially its own version of that File IO subVI?

-

Following on from the idea of division of responsibility, I designed my application such that each individual process in charge of controlling and acquiring data from a specific piece of hardware would send its acquired data to another actor, which maintained all file references (TDMS files) and was responsible for opening and closing files, as well as saving the data to disk.

A consequence of this decision is that someone wanting to introduce a new piece of hardware plus its corresponding control code must go further than just dropping a plugin that meets the communication contract into a directory. They must implement all the file IO stuff in the file IO process.

The thought has entered my mind that perhaps it would be better to make File IO the responsibility of the plugin that wants to save acquired data. So each individual process would implement a sub loop for saving its own data. The template for plugins would then include the basic file IO stuff required, so it would be much easier for someone to just modify the data types to be saved.

My goal here is ease of maintenance/extension. The most important consideration for me, apart from ease of extension, is whether having a bunch of independent processes talking to the OS will be more CPU heavy than one single actor (who is an intermediary between the OS and all the other processes). Does anyone have any experience in this area?
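To make the per-plugin option a bit more concrete, here's a rough text sketch of the "sub loop for saving its own data" idea. It's in Python only because a block diagram can't be pasted as text. Everything in it is an assumption for illustration: the `FileHandler` name, the `open`/`write`/`close` message names, and the use of a plain binary file in place of a TDMS file.

```python
import queue
import threading


class FileHandler:
    """One message-handling loop per file; callers only post messages."""

    def __init__(self):
        self._inbox = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        handle = None
        while True:
            command, payload = self._inbox.get()
            if command == "open":
                # Append mode so repeated sessions keep earlier data.
                handle = open(payload, "ab")
            elif command == "write" and handle is not None:
                handle.write(payload)
            elif command == "close":
                if handle is not None:
                    handle.close()
                return

    def open(self, path):
        self._inbox.put(("open", path))

    def write(self, data):
        self._inbox.put(("write", data))

    def close(self):
        # Queue the close request, then wait for pending writes to finish.
        self._inbox.put(("close", None))
        self._thread.join()
```

Each plugin would create its own `FileHandler`, so no central actor has to know about the plugin's data types. Whether several of these loops cost more CPU than one central loop is exactly the open question above; this sketch doesn't answer it.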

-

Hi guys, I've just started to play around with Haskell and learn about functional programming paradigms and I was wondering, as per title: Can a typical LV application be constructed along functional paradigms? Hypothetical application: 2 Analog Inputs and 2 Analog Outputs connected to some process, some sort of controller, a UI for interacting with the controller, and file IO. I typically don't see functional paradigms applied to hardware control. I don't know if this is just something that people have avoided doing or whether there just hasn't been any interest. In any case, I'm trying to imagine first of all what LV native mechanisms could be used to construct a "functional" type application, and secondly, whether there would be any advantages in doing so, particularly in the ability to reason about behaviour. Hope this sparks some interesting discussion. Kind regards, Alex
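As a starting point for discussion, here's one way the controller part might look in a functional style: a pure step function from (old state, input sample) to (new state, output), folded over the inputs, with hardware and file IO kept at the edges. This is a sketch in Python rather than Haskell or LV, and the PI controller, the gains and all the names are assumptions made up for illustration.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class ControllerState:
    """Immutable controller state; a new instance is made on every step."""
    integral: float = 0.0


def step(state, sample, setpoint=1.0, kp=0.5, ki=0.1, dt=0.01):
    """Pure PI step: (old state, input sample) -> (new state, output)."""
    error = setpoint - sample
    integral = state.integral + error * dt
    return ControllerState(integral), kp * error + ki * integral


def run(samples, initial=ControllerState()):
    """Fold step over a list of samples, collecting the outputs."""
    def fold(acc, sample):
        state, outputs = acc
        new_state, out = step(state, sample)
        return new_state, outputs + [out]
    return reduce(fold, samples, (initial, []))
```

Because `step` has no side effects, the same state and sample always give the same result, which is the "ability to reason about behaviour" part. In LV terms the state would presumably travel around a loop on a shift register.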

-

Lots of fantastic info, thanks guys! @Shoneill How does your proposed solution deal with the potential situation where the process (Signal Generator) is running remotely from the control software (UI), over TCP or whatever? I can't see a graceful way of passing a UI type object around. If the UI is a part of the object, then you have a static dependency between the control UI (which contains the subpanel) and the process code, don't you?

-

Hi guys, I thought I'd throw this out there to see if anyone has any opinions on a hypothetical (which cartoons my current situation). Say I have some process implementing some functionality, for example a signal generator which can generate triangles, sines, ramps and steps. Now I want the process to also generate band limited white noise. The problem is, the parameters to the generator don't accommodate the idea of a frequency cut-off. The ways I can see of dealing with this are:

- Extend the interface to include a frequency cut-off parameter (redundant for 90% of messages).
- Break down the interface and expose different sub-interfaces (pass complexity on to calling code...).

Any other ways? Thanks in advance for your insights!
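To cartoon the two options in text form, here's a small Python sketch. The field names (`amplitude`, `frequency_hz`, `cutoff_hz`) and the message type names are assumptions for illustration, not the real interface.

```python
from dataclasses import dataclass
from typing import Optional, Union


# Option 1: one flat message; cutoff_hz is unused by most waveforms.
@dataclass
class FlatRequest:
    waveform: str
    amplitude: float
    frequency_hz: float
    cutoff_hz: Optional[float] = None


# Option 2: one message type per family of waveforms.
@dataclass
class PeriodicRequest:
    waveform: str  # "sine", "triangle", "ramp" or "step"
    amplitude: float
    frequency_hz: float


@dataclass
class NoiseRequest:
    amplitude: float
    cutoff_hz: float


Request = Union[PeriodicRequest, NoiseRequest]


def describe(request: Request) -> str:
    """Dispatch on message type, standing in for the generator's handler."""
    if isinstance(request, NoiseRequest):
        return f"noise below {request.cutoff_hz} Hz"
    return f"{request.waveform} at {request.frequency_hz} Hz"
```

With option 2 the calling code has to pick the right message type, which is the "pass complexity on to calling code" cost, but no message carries a parameter it doesn't use.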

-

MCLB movable column separators and header click events.

AlexA replied to John Lokanis's topic in User Interface

Shaun, I have to disagree. The standard Windows interaction is single click to sort. It's what's intuitive to most people, so if the expectation isn't met I think it becomes a minor user gripe.

-

Hi Ned, Thanks, you've eased my mind a bit. I think this was the discussion http://lavag.org/topic/16908-q-what-causes-unresponsive-fpga-elements-after-restart/ but after re-reading it, I think I read something into it that wasn't there. Or perhaps I'm misrecalling where or if I saw that piece of information. Either way, from what you say I'm not worried about it anymore. Cheers, Alex

-

Hi Shaun, Thanks for all that info! Would it be correct to say the distinction between a state machine with message injection and a message driven action engine (AE) is how the actions map to commands? I.e., a message driven AE gets you an action with a strictly defined end point; in other words, it's guaranteed to complete. Whereas, for a state machine with message injection, a single message could just result in a different continuous state? A long time ago I looked at Actor Framework but there were so many layers of abstraction I found it impossible to get started, let alone port my code to it. I'm pretty happy with what I've got at the moment. I've slowly improved it but I'm still wondering if I'm maintaining too much state in my message handlers. For example, I have an FPGA which is driving a number of pieces of hardware (motors, electrodes etc.). The VI in charge of the interface to that FPGA consists of a continuous loop which basically just listens to a Target-To-Host DMA FIFO, as well as a message handler which takes requests like "Update Setpoint Profile" and computes a new profile before uploading it to the FPGA. Should everything be done in a single state machine loop with message injection (i.e. listening is done in the timeout case)? My uncertainty stems from something someone said to me a long time ago on LAVA, that it was very strange that I branched the FPGA reference to two different loops, and that they had never needed to do that. Thanks again for sharing your experience! Edit: I get 403 Forbidden errors when trying to follow your links.

-

Hi Shaun, Yeah, that looks very much like what I use, but I base it around Daklu's LapDog queue based system. The question I'm asking is targeted at the scenario where the "module" loop is intended to act as an interface for some piece of hardware which has a continuous operating state (constantly filling some sort of buffer with data), but also other states like "setting control parameters" or "updating setpoints". Do you handle that sort of thing inside a timeout?

-

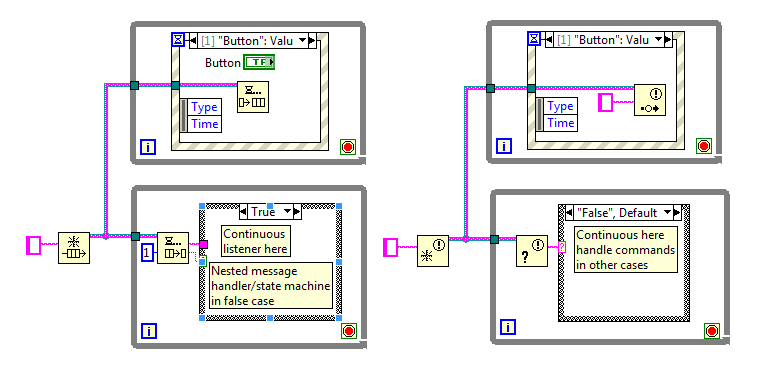

Hey guys, It's been a long time since I've visited this topic but I thought I'd resurrect this rather than start a new one. I'm still uncertain about best practises. Specifically, what is the best way to control a parallel loop that has some "continuous" type behaviour, plus config, init, reinit, stop, etc? I've cartooned up a couple of the ways I'm aware of doing things (the notifier one I kind of made up, but it should work I think EDIT: the case should just read Default, not "False", Default). At the moment, I'm working with a bunch of FPGAs, and our standard approach is to listen to them for data, continuously in a parallel loop, and write config style messages in a separate message handler loop which has direct access to the FPGA reference, so effectively, we're branching the FPGA ref. This has worked fine so far, but I'm always curious if there's a more robust way? In general, how do we feel about handling the "main" continuous function of a loop in the timeout case of some communication system? Regards all, Alex
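For anyone who can't see the image, the "continuous work in the timeout case" variant looks roughly like this in text form. It's a Python sketch only; `read_hardware`, `handle_data` and the `configure`/`stop` message names are placeholders I've assumed for illustration, not anything from the FPGA interface.

```python
import queue


def module_loop(inbox, read_hardware, handle_data, timeout_s=0.01):
    """Message handler whose timeout case performs the continuous work."""
    config = {}
    while True:
        try:
            command, payload = inbox.get(timeout=timeout_s)
        except queue.Empty:
            # Timeout case: no message pending, so service the hardware.
            handle_data(read_hardware(), config)
            continue
        if command == "configure":
            config = dict(payload)
        elif command == "stop":
            return
```

Only one loop ever touches the hardware, so there's no need to branch the reference. The trade-off in this sketch is that the hardware is only serviced when the inbox is empty, so a burst of messages delays the continuous work.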

-

Ok, with red face I must admit to having discovered the real source of the problem. It was an unforeseen race condition (of course), which was actually happening due to the computer moving faster than the hardware it was controlling. The hardware control process would enable the controller and then immediately check the FPGA status (a "control enabled?" indicator which depends on more than just the user's input). As the FPGA control loop only runs at 20 kHz, I believe that the "control enabled?" indicator wasn't being updated before the check. Sorry for the runaround!
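One common way to avoid this kind of race is to poll the status with a timeout instead of checking it once straight after the enable. Here's a Python sketch of the idea; `wait_until`, the default timings and the `predicate` callback (standing in for reading the "control enabled?" indicator) are assumptions for illustration.

```python
import time


def wait_until(predicate, timeout_s=0.1, poll_s=0.001):
    """Poll predicate until it returns True or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)
```

The caller would enable the controller, then treat a `False` return as a genuine failure rather than a status that simply hadn't been updated yet.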

-

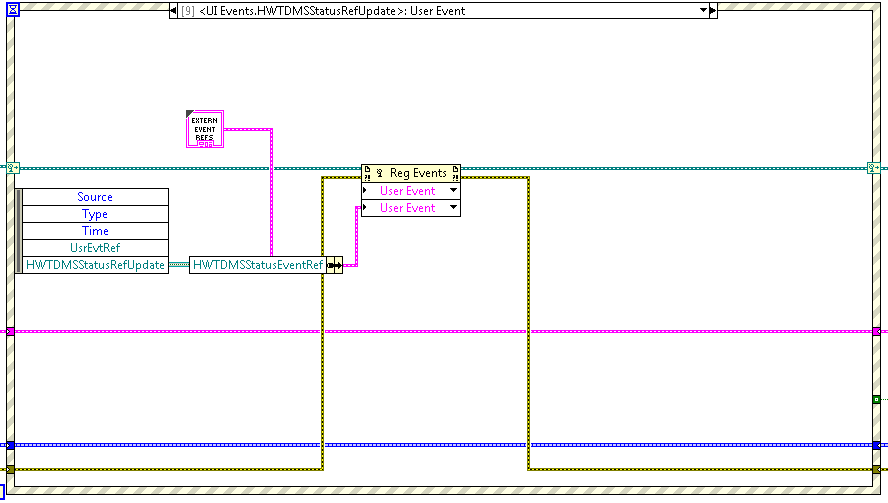

Not for User Events i.e. the ones which you fire by calling Generate Event with some data. Edit: I hunted extensively for such an option, and checking again, I can confirm it's not there. See attached screen shot

-

So after much modification of code I've run into the following phenomenon. Even for relatively low data update rates (20 Hz, 4.8 kB/s) the front panel becomes unresponsive. Buttons are sometimes handled, sometimes not, and sometimes they seem to be handled behind the scenes (control is enabled) but the boolean control in question remains in the false state. As I understand it, dynamically registered events cannot be configured such that the front panel remains unlocked while they're being handled. Am I correct? If so, this is a big caveat that I wish I'd been aware of before starting down this path. The paradigm seems really nice, but with some fundamental flaws lurking below the surface. Bugger.

-

Hey guys, The DVR choice was made a while ago due to resource constraints on the system. Specifically, I use the DVR with the "TDMS Advanced Asynchronous Write (Data Ref)" function. We've since moved to a bigger system but I'd obviously prefer to keep the execution tight if I can. The way that "Acquire Read Region" method works, you can configure the FIFO buffer size to be any multiple of your data chunk-size (for me, 1024 x 1024 U8 pixels). The method then iterates over the available buffer, generating a new DVR for each chunk. This DVR must be destroyed so that chunk can be used again. Things I'm not sure of: whether all the DVRs are assigned at start-time or generated on the fly. Since the above post, I've implemented a simple lossy queue circular buffer in the producer. The lossy queue size is "Size of FIFO Buffer (in chunks) minus one"; when the Lossy Enqueue pushes a DVR onto the queue, I delete the DVR that comes off the end. I push raw pixel data to the UI by copying the data out of the DVR and generating a pixel data event. I push the DVR to the File IO by generating a DVR event. I've made a number of assumptions: 1) That copying the data out of the DVR and generating an event doesn't actually do anything if there are no listeners subscribed. 2) Furthermore, if the listener is subscribed but doesn't do anything with the data (a conditional structure eats the data to prevent the UI updating too quickly), then I assume that doesn't perform an unnecessary copy. This is a big assumption and may be giving too much credit to the LV compiler. 3) The consumer of the DVR (File IO) can operate faster than the producer can fill the circular buffer (I've yet to see this fail, though I definitely need to check this explicitly). The reason it's crucial to delete the DVR outside the File IO is that the user can be using the UI to observe the image without requesting that the images be streamed to disk. Thus the File IO is not subscribed to the DVR Event. 
In all honesty, I'm hacking this Events based paradigm over a number of old decisions. So I'm really not sure if I'm papering over some redundancy. Edit: Interesting behaviour that might be useful is that "Delete DVR Reference" has an output terminal for the data that the reference was linked to, I haven't thought if I can take advantage of this somehow to update the UI.
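In text form, the lossy queue arrangement described above is roughly the following. This is a Python sketch, not the LV code: `LossyRefQueue` and the `release` callback (standing in for "Delete DVR Reference") are names I've assumed for illustration.

```python
from collections import deque


class LossyRefQueue:
    """Fixed-size queue that releases the oldest reference when full."""

    def __init__(self, capacity, release):
        self._items = deque()
        self._capacity = capacity
        self._release = release

    def push(self, ref):
        # When full, the oldest reference falls off the end and is released.
        if len(self._items) == self._capacity:
            self._release(self._items.popleft())
        self._items.append(ref)

    def drain(self):
        """Release everything still held, e.g. at shutdown."""
        while self._items:
            self._release(self._items.popleft())
```

Here `capacity` would be the FIFO buffer size in chunks minus one. Note the sketch has the same weakness as assumption 3: nothing stops a reference being released while a slow consumer is still using it.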

-

Good point, the DVR is generated by the "Acquire Read Region" method on a Target-to-host DMA FIFO from an FPGA. As I understand it, a new DVR is generated on each call to the method. This DVR must be destroyed so that the method can place data into that region the next time it cycles around to it. The thing is, I can't think of a way for this producer to elegantly destroy the DVR. The key being, when does it make the decision to destroy the reference? My thoughts are below: The producer can't destroy the DVR on the same cycle it generates it, so it must store it to be destroyed later. The obvious storage structure is an array (circular buffer). I guess you could have a destruction operation acting some indices ahead of the current one; for argument's sake, let's say 5. On the first cycle through, you get a whole bunch of errors pertaining to invalid refs, but you just squash them. Then the next time through you're destroying refs ahead of your current index (wrapping) so they're guaranteed to be free. But if the consumer (File IO) is slower than the producer, eventually you're going to catch up and destroy a reference that the consumer is still working on (writing to disk). I guess this is a problem regardless of whether it's a DVR or actual data, the symptom being a memory leak if it's actual data. I don't know if I'm missing some piece of information/subtlety with DVRs or with that particular method which would inform a cleaner solution?

-

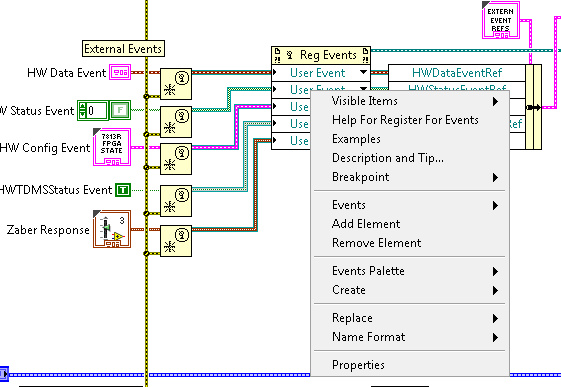

Ok, further question: If I have a buttload of events, and I register them as a cluster, it doesn't seem to be possible to re-register specific elements of that cluster? Here's what I'm doing currently but it doesn't work: There's no way that I can see to make the Event Registration terminal accept a sub-element of a cluster. Attempting to re-register as I am in the picture will destroy the other event references in that cluster (as you would expect). Is this just not possible, or am I missing something obvious?

-

@Hooovahh I've been thinking about this, and it's not obvious to me how pushing a DVR through an Event makes any sense. Specifically, if multiple processes subscribe to a DVR event, who is responsible for destroying the DVR? My specific use case is as follows: I have a process producing high speed image data via DVR. I have a UI which needs relatively slow updates (25-30 FPS, from Cam Data at 100 FPS+). I have a File IO process which needs to get all that data. To me, the File IO is the obvious place to delete the DVR as it's the only process guaranteed to receive all the data (at least in my current architecture). If both processes subscribe to the event, it's possible that the File IO process clears the DVR before the UI has a chance to access it, resulting in an error at the UI. Do you just handle that error and ignore it? I guess that's one way I could do things.

-

Thanks for the info guys!