alukindo

Members

Posts: 113


Everything posted by alukindo

-

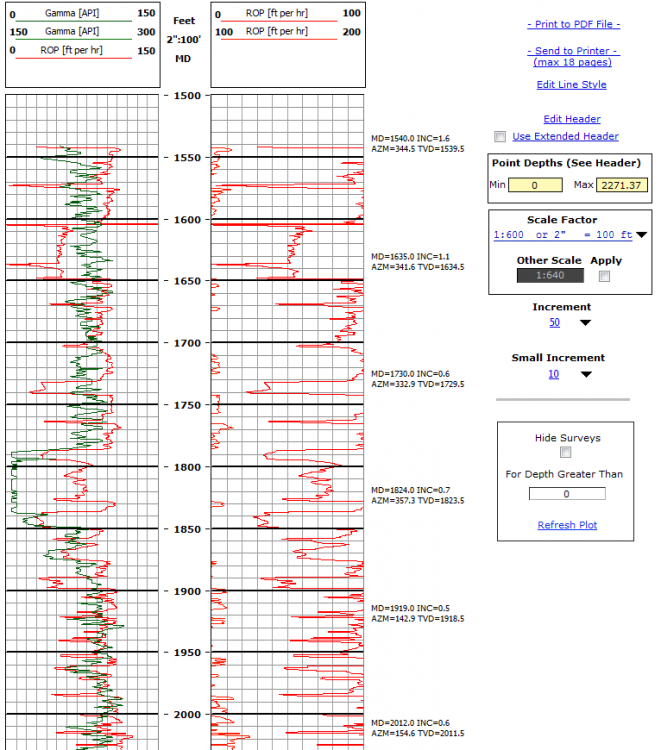

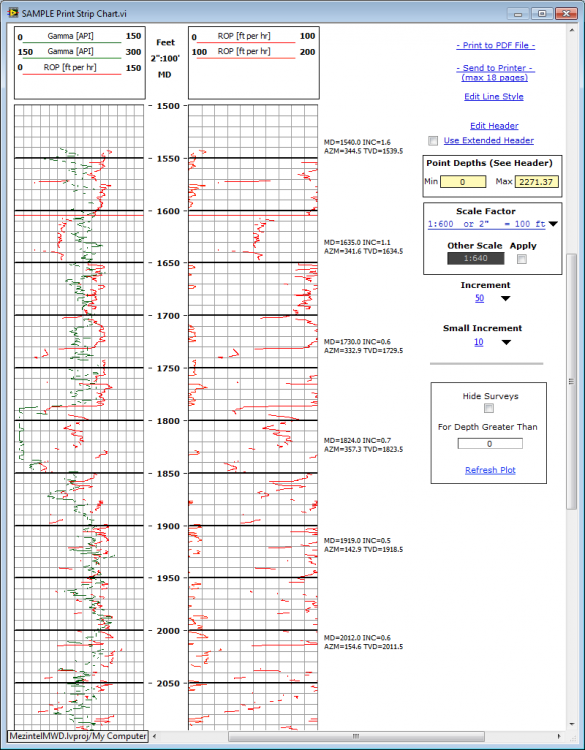

Hi: The LabVIEW 'Print Front Panel' function outputs highly pixelated vertical text, i.e. the vertical text created via control captions. Even when the printout is converted to PDF, the vertically oriented text cannot be edited; it is printed as an image. Question: has anyone found a way to minimize vertical-text pixelation? The text looks OK on screen; it only deteriorates when printed. I have attached an example printout with both vertical and horizontal text. Regards, Anthony L. PDFPlot_View2013-02-17_16-05-40.pdf

-

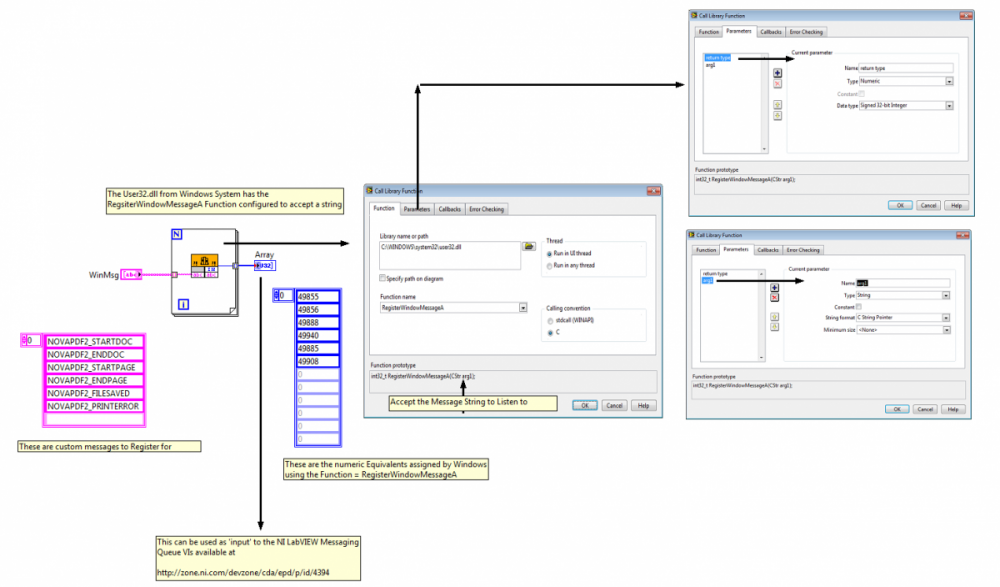

OK: I researched further and found that user32.dll has a function that assigns the integer IDs for registered messages, so that they can be listened for. I have attached a tip doc on how this can be done. Perhaps this will help others looking for a way to register custom window messages and listen for them via the Windows Message Queue VIs. Anthony L.
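The user32.dll function in question is presumably RegisterWindowMessage: every process that registers the same string receives the same message ID, in the range 0xC000 through 0xFFFF, and a return value of 0 signals failure. The sketch below calls the wide-character variant RegisterWindowMessageW from Python via ctypes, as a stand-in for what a LabVIEW Call Library Function Node would do. The helper names and the message string are hypothetical, and the call only works on Windows.

```python
import ctypes
import sys

# Range documented for IDs returned by RegisterWindowMessage.
REGISTERED_MSG_MIN = 0xC000
REGISTERED_MSG_MAX = 0xFFFF


def is_registered_message_id(msg_id: int) -> bool:
    """True if msg_id lies in the range reserved for registered messages."""
    return REGISTERED_MSG_MIN <= msg_id <= REGISTERED_MSG_MAX


def register_custom_message(name: str) -> int:
    """Return the system-wide message ID for `name` (Windows only).

    Any process that registers the same string receives the same ID,
    which is how a broadcasting application and a listener agree on a
    custom message without hard-coding an integer.
    """
    if sys.platform != "win32":
        raise OSError("RegisterWindowMessageW is only available on Windows")
    user32 = ctypes.WinDLL("user32", use_last_error=True)
    register = user32.RegisterWindowMessageW
    register.argtypes = [ctypes.c_wchar_p]
    register.restype = ctypes.c_uint
    msg_id = register(name)
    if msg_id == 0:  # 0 means the registration failed
        raise ctypes.WinError(ctypes.get_last_error())
    return msg_id
```

On Windows, `register_custom_message("MyApp.DataReady")` (a hypothetical name that the other application would also have to use) returns the integer a message-queue loop would then filter on. Standard messages such as WM_MOUSEMOVE (0x0200) fall outside the registered range.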

-

Hi: The NI website has VIs for registering standard Windows messages and capturing them by polling a message-queue loop. Messages such as Mouse Move, Mouse Down, etc. are converted to their known integer equivalents; a Windows message queue is then polled to read those messages when they are broadcast by another application. In my case I need to register custom messages and listen for (poll) them in the same manner in LabVIEW. The challenge is how to derive the unique integer value for each message. Does anyone have a wrapper for this? I am not a C++ programmer by any stretch and can only use LabVIEW. Here is the link to the LabVIEW VIs for Windows message registration and polling, which handle only standard Windows messages: http://zone.ni.com/devzone/cda/epd/p/id/4394 Regards, Anthony L.

-

Consulting Help to Speed Up a Curve Smoothing VI

alukindo replied to alukindo's topic in LabVIEW General

Hi Greg: There is code in your routine that forces the ratio to something other than zero. I later found out that I need to input a higher coefficient than the ones I have been using with the original routine. So your revised routine does work; I just need to adjust the smoothing factor after I have tried it on several logs. Thanks and Regards, Anthony L.

Consulting Help to Speed Up a Curve Smoothing VI

alukindo replied to alukindo's topic in LabVIEW General

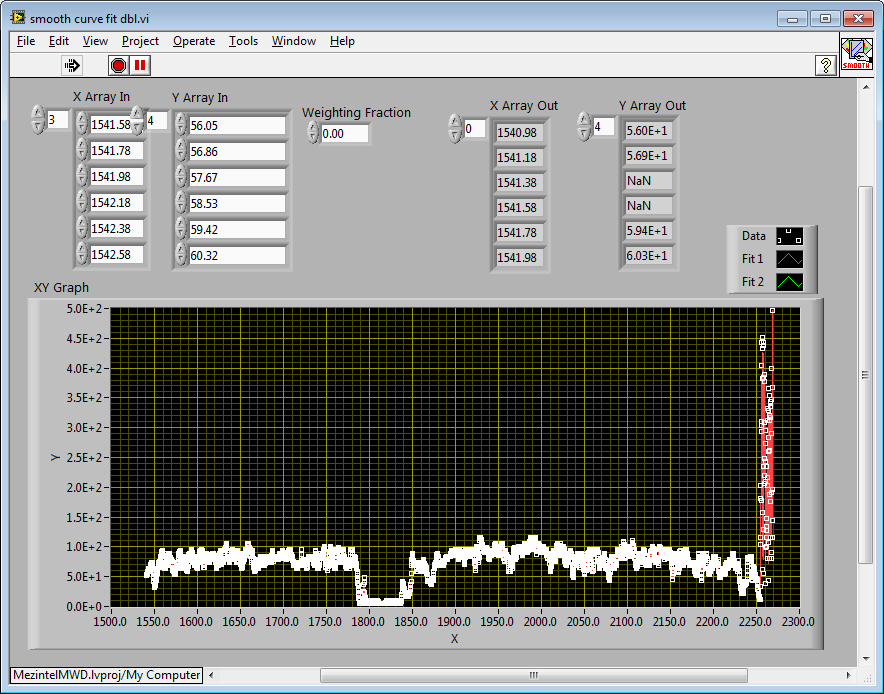

Hi Greg Sands: I tried this on the logs and the smoothing assigned NaN values to some of the Y points. Do you know what could be causing this? The end result is that the output curve has many broken regions. I have attached various screenshots that show this. Regards, Anthony L.

Consulting Help to Speed Up a Curve Smoothing VI

alukindo replied to alukindo's topic in LabVIEW General

Greg Sands: Wow! 15x faster. I owe you a beer, or a soda just in case you don't drink beer. I have been searching for something more efficient, and I really appreciate the wizardry that went into speeding up this routine. Will test and get back. Thanks and Regards, Anthony

drjdpowell: I like the idea of interpolation to attain uniform X spacing, because we have to do that anyway to conform to the LAS file export standard. I will try the Savitzky-Golay filter when going through the interpolation algorithm. The only thing is that the interpolation is done only during final reporting, to conform with the Log ASCII Standard (LAS) export requirements for reporting drilling info. In all other cases the data needs to be presented as-is, but smoothed. Thanks for all these great suggestions. Amazing what can be learned from these forums when minds from around the world come together to contribute solutions. Anthony

Consulting Help to Speed Up a Curve Smoothing VI

alukindo replied to alukindo's topic in LabVIEW General

drjdpowell: I checked that Savitzky-Golay filtering algorithm. I like the fact that it leaves in all the peaks and valleys while smoothing, but how can I use it with arrays of X and Y values where the X values are not collected at a uniform X interval? The VI connector pane shows that it accepts only one X value at a time. Note that the Lowess VI in the example I provided above already has array inputs for pairs of X and Y values. Or am I missing something? Anthony L.
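One way to reconcile Savitzky-Golay with irregular X spacing, along the lines of the interpolation idea in this thread, is to resample the log onto a uniform X grid first and then filter. The sketch below is a pure-Python illustration, not the NI VI: it assumes linear interpolation and the standard 5-point quadratic Savitzky-Golay weights (-3, 12, 17, 12, -3)/35, and it leaves the first and last two samples unsmoothed for simplicity. The function names and the `step` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Standard 5-point Savitzky-Golay smoothing weights (quadratic fit).
SG5 = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG5_NORM = 35.0


def resample_uniform(xs: Sequence[float], ys: Sequence[float],
                     step: float) -> Tuple[List[float], List[float]]:
    """Linearly interpolate (xs, ys) onto a uniform grid starting at xs[0].

    Assumes xs is strictly increasing and has at least two points.
    """
    if len(xs) < 2 or len(xs) != len(ys) or step <= 0:
        raise ValueError("need >= 2 matching points and a positive step")
    n = int(math.floor((xs[-1] - xs[0]) / step + 1e-9)) + 1
    out_x, out_y = [], []
    j = 0
    for k in range(n):
        x = xs[0] + k * step  # computed directly to avoid accumulated rounding
        # Advance to the segment [xs[j], xs[j+1]] that contains x.
        while j < len(xs) - 2 and xs[j + 1] < x:
            j += 1
        t = (x - xs[j]) / (xs[j + 1] - xs[j])
        out_x.append(x)
        out_y.append(ys[j] + t * (ys[j + 1] - ys[j]))
    return out_x, out_y


def savgol5(ys: Sequence[float]) -> List[float]:
    """Apply the 5-point Savitzky-Golay filter to uniformly spaced samples.

    The first and last two samples are copied unchanged (a simplification).
    """
    out = list(ys)
    for i in range(2, len(ys) - 2):
        window = ys[i - 2:i + 3]
        out[i] = sum(c * v for c, v in zip(SG5, window)) / SG5_NORM
    return out
```

Because these weights reproduce any quadratic exactly, peaks and valleys are flattened less than with a plain moving average. If the as-is X positions are needed for display, the smoothed curve could be interpolated back onto them in the same way.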

Hi: This link points to a great utility for curve smoothing: http://zone.ni.com/d...a/epd/p/id/4499 The challenge, though, is that the VI is really slow when smoothing a long curve. I am using it on geological logs that happen to be ~45 pages long. Question: would anyone be willing to optimize the code to make the VI run faster? I need it to run more than 10 times faster. Let me know what the consulting effort would cost. NOTE: While I will pay for the effort to optimize the code, the VI will remain available for others to use, and the original author will be acknowledged. Regards, Anthony
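For reference, the core of a LOWESS smoother is a tricube-weighted local linear fit around each X value. The sketch below is a pure-Python illustration, not the NI VI or the optimized version discussed in this thread, and it assumes a single pass without the robustness iterations. Two details relate to this thread. First, because the X values are sorted, the r nearest neighbours of each point form a contiguous window that only slides forward, so each fit costs O(r) rather than a scan of the whole log. Second, each division is guarded, since a zero X variance inside a window (for example, repeated X values) is one plausible source of NaN outputs like those reported above. The parameter name `frac` (fraction of points per window) is an assumption.

```python
import math
from typing import List, Sequence


def lowess(xs: Sequence[float], ys: Sequence[float],
           frac: float = 0.3) -> List[float]:
    """Single-pass LOWESS (local linear fit, tricube weights).

    Works with irregular X spacing; returns fitted values in the input order.
    """
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("xs and ys must be non-empty and the same length")
    order = sorted(range(n), key=lambda k: xs[k])
    x = [float(xs[k]) for k in order]
    y = [float(ys[k]) for k in order]
    r = min(n, max(2, int(math.ceil(frac * n))))  # points per window
    fitted = [0.0] * n
    lo = 0
    for i in range(n):
        # Slide the r-point window right while that brings it closer to x[i].
        while lo + r < n and x[i] - x[lo] > x[lo + r] - x[i]:
            lo += 1
        idx = range(lo, lo + r)
        h = max(x[i] - x[lo], x[lo + r - 1] - x[i])  # window half-width
        w = []
        for j in idx:
            u = abs(x[j] - x[i]) / h if h > 0 else 0.0
            w.append((1.0 - u ** 3) ** 3 if u < 1.0 else 0.0)
        sw = sum(w)
        if sw == 0.0:  # defensive guard: no usable neighbours
            fitted[i] = y[i]
            continue
        mx = sum(wk * x[j] for wk, j in zip(w, idx)) / sw
        my = sum(wk * y[j] for wk, j in zip(w, idx)) / sw
        sxx = sum(wk * (x[j] - mx) ** 2 for wk, j in zip(w, idx))
        sxy = sum(wk * (x[j] - mx) * (y[j] - my) for wk, j in zip(w, idx))
        if sxx <= 0.0:  # guard: all weighted X equal, slope undefined
            fitted[i] = my
        else:
            fitted[i] = my + (sxy / sxx) * (x[i] - mx)
    result = [0.0] * n
    for pos, k in enumerate(order):  # restore the caller's ordering
        result[k] = fitted[pos]
    return result
```

A local linear fit reproduces exactly linear data, which makes a quick sanity check; with duplicate X values the guarded branch returns the weighted mean instead of dividing by zero.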

-

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

Mikael: Thanks! That worked. Anthony L.

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

Ton P: Your format-date message arrived after I had posted the above example showing how the parsing is done now. I could not figure out a way to scan using your date format specifiers. Any help will be greatly appreciated. Thanks, Anthony L.

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

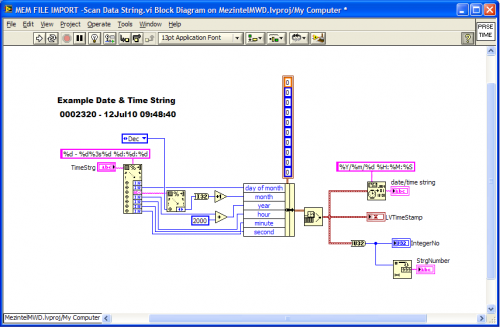

Hi: Attached is how the parsing is done now. Still looking for a more efficient alternative. Scan Data String.vi Regards, Anthony L.

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

Yes, I thought about that, but what about when the month abbreviation changes from, say, Jul to Aug? Or are you suggesting catching the scan error and incrementing the month name until no error is encountered? Regards, Anthony

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

Hi: I am passing the array into a For Loop and parsing the 3rd element to get the 3-character month name; I then convert the date and time into LabVIEW time. Performance is really important because the array I work with has 50,000+ elements. The sad thing is that this data is read from a text file in less than 2 seconds, and thereafter a large chunk of time is spent converting that date string column into a calendar number that can be computed with. Regards, Anthony L.
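The changing month abbreviation can be handled with a fixed lookup table instead of trial-and-error scanning, and the conversion to LabVIEW time is then plain arithmetic: LabVIEW timestamps count seconds from 1904-01-01 00:00:00 UTC, which is 2,082,844,800 seconds before the Unix epoch. The sketch below is in Python for illustration only. The string format "DD-Mon-YYYY HH:MM:SS" and treating the times as UTC are assumptions, since the actual column format lives in the attached VI, and the helper names are hypothetical. Caching the date part is an optional optimization for logs in which many rows share the same date.

```python
import calendar
from functools import lru_cache

# Locale-independent month table (calendar.month_abbr depends on the locale).
_MONTHS = {name: num for num, name in enumerate(
    ("Jan", "Feb", "Mar", "Apr", "May", "Jun",
     "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"), start=1)}

# Seconds from the LabVIEW epoch (1904-01-01 UTC) to the Unix epoch (1970-01-01 UTC).
LABVIEW_EPOCH_OFFSET = 2082844800


@lru_cache(maxsize=None)
def _date_to_unix(date_part: str) -> int:
    """Convert an assumed 'DD-Mon-YYYY' string to Unix seconds at midnight UTC."""
    day, mon, year = date_part.split("-")
    return calendar.timegm((int(year), _MONTHS[mon.title()], int(day), 0, 0, 0))


def to_labview_seconds(stamp: str) -> int:
    """Convert an assumed 'DD-Mon-YYYY HH:MM:SS' string to LabVIEW epoch seconds."""
    date_part, time_part = stamp.split()
    hh, mm, ss = (int(v) for v in time_part.split(":"))
    return _date_to_unix(date_part) + hh * 3600 + mm * 60 + ss + LABVIEW_EPOCH_OFFSET


def column_to_labview(stamps):
    """Convert a whole column of date/time strings in one pass."""
    return [to_labview_seconds(s) for s in stamps]
```

The same lookup-table idea should carry over to a LabVIEW implementation: the month name indexes a constant table rather than driving repeated scan attempts.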

Scan String Date Function into Date & Time Array

alukindo replied to alukindo's topic in LabVIEW General

Oops! I forgot to save the array data as default, so it is empty. I am including the same VI with the data intact. Data Array.vi Anthony

Hello LAVA: Could anyone provide a fast method to scan the date & time strings in column 3 of the attached VI into a 1-D array of the LabVIEW Date & Time data type? I have several methods to do this, but I think there must be a much faster one out there. Thanks in advance, Anthony L. Data Array.vi

-

Unable to download installer for MSSQL 2005

alukindo replied to ssingh's topic in Database and File IO

Shourya: I am the author of that material; Tomi Maila maintains the site. It could be that the FTP service is down or the file link is no longer valid. If you have access to some other FTP service, I can upload the files for you. Just let me know where to upload them (i.e. FTP server, user login, etc.). The files cannot be sent via e-mail because of their large size. Anthony L.

Make PNG Image Transparent as Original

alukindo replied to alukindo's topic in Machine Vision and Imaging

Hi Justin: I am using version 8.6 as well. I have attached the code that I am using. The VI shows that my images are read in as 8-bit images. I know the newer 8.6 VI for reading images has a transparency threshold, but it does not seem to make a difference in my case. Are your images 8-bit or something else? Anthony L.

Hi Justin: I thought that I should also include samples of my images. Here are two PNG files with transparency. Thanks, Anthony L.

Hi: The original images are transparent. However, when I place them on Treeview nodes they take on a white background. Can I do anything to keep the images transparent? Notice the selected row. A.L.

-

Hi Jed: I use LabVIEW with ADO along with MS SQL Server and MS Access databases. I have always used the 'GetRows' method to cast the variant data into a LabVIEW 2D array of strings, with consistent performance and correct output.

Based on your reported anomaly, it appears that you may be getting the bad result from a new SQL query that has a syntax error which is not tolerated when executed via ADO, while the MySQL query utility either corrects the syntax error automatically or simply tolerates it. I am assuming here that the MySQL utility does not use ADO drivers to access MySQL.

E.g., in SQL Server T-SQL syntax, concatenating a string with a value column requires something like: 'Result = ' + STR(Value,6,1) where 'Value' is a column that holds a numeric parameter. This numeric column cannot be concatenated with a string unless it is first converted to a string itself; the STR() function does that. If instead you were to write 'Result = + STR(Value,6,1)', the placement of the apostrophes turns the whole expression into a single string literal, so the result appears with the plus sign just as you see it in your bug report. Otherwise, can you paste the MySQL query for us to check? Regards, Anthony L.
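The misplaced-apostrophe effect is easy to reproduce in any SQL engine. The sketch below uses Python's built-in sqlite3 module as a stand-in for SQL Server: SQLite concatenates with || and converts with CAST rather than T-SQL's + and STR(), so the syntax differs, but the effect of the quote placement is the same. The table name is hypothetical.

```python
import sqlite3


def demo_concatenation():
    """Return (correct, broken) results of the two quoting variants."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE readings (Value REAL)")
        conn.execute("INSERT INTO readings (Value) VALUES (3.5)")
        # Correct: the literal ends before ||, then the number is converted to text.
        good = conn.execute(
            "SELECT 'Result = ' || CAST(Value AS TEXT) FROM readings").fetchone()[0]
        # Broken: the apostrophes enclose everything, so the whole expression is
        # one string literal and the operator appears verbatim in the output.
        bad = conn.execute(
            "SELECT 'Result = || CAST(Value AS TEXT)' FROM readings").fetchone()[0]
        return good, bad
    finally:
        conn.close()
```

The first query yields "Result = 3.5"; the second returns its own text, operator included, which matches the symptom described in the bug report.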

-

Fastest Interface between LabVIEW and SQL Server

alukindo replied to John Lokanis's topic in Database and File IO

Hi John:
(1) When you say that you use .NET for connectivity, what are you actually referring to? Is it ADO.NET or some other driver library?
(2) Performance measures are relative to some kind of benchmark. So what are your performance metrics now? How many records are you able to save per second? How many can you download per second? You may already have reached the practical performance limit for database access if your upload rate is ~300 rows per second and your download rate is ~20,000 rows per second. However, reaching these ideal figures depends on other factors as well:
(a) In any method of database access, the drivers you use expose different programming methods to access data. Some of those methods use server-side cursors, some lock database rows, some are 'fire hose' forward-only methods, etc. Each of these methods carries different overhead that affects your application's performance. Can you shed some light on your high-level programming steps?
(b) How is your database designed? Is it relational? Does it have indexed primary keys? Are you using table joins? Are you using stored procedures with nested queries? These factors affect how fast you can save or recall data. E.g., table column indexing can speed up data access by a factor of 10 to 20, i.e. a query that used to run for 30 seconds can be improved to take less than 3 seconds.
(c) If you are using ADO.NET and are trying to compare it with ADO, then for LabVIEW you are better off using ADO, simply because the 'GetRows' method was removed from ADO.NET. This is the one critical method that made it extremely fast to move retrieved data from the driver into a LabVIEW 2D array of strings. Using ADO.NET you will have to iterate row by row to move data into LabVIEW, which is much slower.

Programmers using Visual Studio's ADO.NET with WinForms have a way to quickly bind data to data grids using ADO.NET DataSets and the grid's DataSource property, which performs as fast as the 'GetRows' method. As far as I am aware, LabVIEW cannot handle these data types natively, so ADO.NET suffers a huge performance penalty under LabVIEW (unless, of course, you use .NET data grids in LabVIEW that can bind to DataSets). In any case, there are too many unknowns about your implemented solution to know for sure how you could improve your LabVIEW app's performance. Anthony
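Whether a query actually uses an index can be checked from the query plan rather than guessed. The sketch below uses Python's sqlite3 module as a stand-in for SQL Server (where the equivalent tool is the execution plan). The table, column, and index names are hypothetical, and because the exact plan wording varies between SQLite versions, the check only looks for the word INDEX in the plan detail.

```python
import sqlite3


def plan_details(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]


def demo_index_effect():
    """Show the plan for the same query before and after adding an index."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE samples (id INTEGER PRIMARY KEY, run_id INTEGER, value REAL)")
        conn.executemany("INSERT INTO samples (run_id, value) VALUES (?, ?)",
                         [(k % 100, k * 0.5) for k in range(10000)])
        query = "SELECT value FROM samples WHERE run_id = ?"
        before = plan_details(conn, query, (42,))  # expected: full table scan
        conn.execute("CREATE INDEX idx_samples_run ON samples (run_id)")
        after = plan_details(conn, query, (42,))   # expected: index search
        return before, after
    finally:
        conn.close()
```

Only the plan change is shown here; the actual speed-up depends on table size, selectivity, and the engine.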

Hi Draqo85: Regarding saving to a database, perhaps you should also consider storing the path to the data file in the database. That way, when the data is required, the database passes the data file's saved path rather than downloading the data from a row in the database. Otherwise, from an SQL Server perspective (I know you use MySQL, but MySQL likely has an equivalent approach), there are two ways to upload large data files:
-- You can save the data in a single cell as a BLOB; here is a link about BLOB data types.
-- The other way is to save your data in a results table using a Bulk Insert or Bulk Copy procedure. You can then use a reference (foreign) key from a single row of your target table to link to the multiple rows in the results table. From LabVIEW, the Bulk Insert procedure can upload 300,000 data rows per second.
Anthony L.
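The results-table layout described above can be sketched as one parent row per data file (which can also hold the file path) plus many child rows that carry the parent's key. The example uses Python's sqlite3 module as a stand-in for SQL Server or MySQL, with executemany inside a single transaction as a rough analogue of a bulk insert. It is not SQL Server's BULK INSERT, and the table and column names are hypothetical.

```python
import sqlite3
from typing import Iterable, Tuple


def create_schema(conn: sqlite3.Connection) -> None:
    """Parent table of data files and a child results table keyed to it."""
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
    conn.execute("""CREATE TABLE IF NOT EXISTS tests (
                        test_id INTEGER PRIMARY KEY,
                        file_path TEXT NOT NULL)""")
    conn.execute("""CREATE TABLE IF NOT EXISTS results (
                        test_id INTEGER NOT NULL REFERENCES tests(test_id),
                        t REAL NOT NULL,
                        value REAL NOT NULL)""")


def save_test(conn: sqlite3.Connection, file_path: str,
              samples: Iterable[Tuple[float, float]]) -> int:
    """Insert one parent row and all of its samples in a single transaction."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute("INSERT INTO tests (file_path) VALUES (?)", (file_path,))
        test_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO results (test_id, t, value) VALUES (?, ?, ?)",
            ((test_id, t, v) for t, v in samples))
    return test_id
```

Committing once per file rather than once per row is the main design choice here; the foreign key also rejects result rows that point at a nonexistent parent.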

-

What is your LabVIEW application development process?

alukindo replied to Tomi Maila's topic in LAVA Lounge

Hi Tomi: I have owned a consulting firm for the last 4 years. What I have found regarding software development processes is that one model does not work for all customers, nor will it work for all projects. Your own working style may also be a huge factor in how you end up fashioning your business process. Now, I know that these comments are not all that helpful, but it all goes to show that the software development business has no silver bullet that will work 90% of the time.

To shed some more light: I have found that some customers do not know exactly what they want, and when you help define what it could be, they may not necessarily be willing to pay to get it done. You end up spending most of your time managing scope and expectations. Larger companies that outsource software development on a regular basis will be more understanding of a process model and of software development costs. Smaller companies that have been through the software development experience, either in-house or via another consultant, 'may' be easier to work with.

With time, I think you will develop a preference for the type of applications you wish to focus on and the type of customer you would like to spend your time with. This combination will determine the key factors that define your development process. As you mature as a business, you will also find that developing and licensing your own software products is more sustainable than chasing one-off projects.

A company called 37Signals, which does web-based software development, including a project collaboration site called Basecamp, has a lot of what I have found to be very practical advice on software development challenges. They also have a book that you can read online for free. Although their advice is mostly for web-based application development, some of their tips do cross over. I hope that this helps.

Hello LAVA: The following article has some interesting insights into why a functional spec may not necessarily be critical in designing good applications. 37Signals are the makers of Basecamp, simple web-based collaboration software built on the principle that project management is about team-member communication rather than Gantt-charting project execution. Another article, on meetings, cautions why they may sometimes be ineffective. It would be quite interesting to see how others feel about these articles. Anthony L.

-

Connecting to an SQL Server Compact 3.5 database

alukindo replied to Mike C's topic in Database and File IO

Mike: The SQL Server Compact Edition database requires that you reference System.Data.SqlServerCE.dll so that it can run in-process with your executable. In this case your executable environment is LabVIEW; once you have compiled your executable, it will be your custom LabVIEW application's run-time environment. I have attached a commented LabVIEW example that shows a successful connection, a data insert, and a data read from an SQL Server CE database file. The database was created using the free SQL Server Management Studio Express (SSMSE) edition downloaded from the Microsoft website. The database used in this example has an empty password. I tried uploading the CETest.sdf database file, but the upload failed (rejected by the LAVA FTP server?). Be sure to download the latest version of SQL Server CE, which is 3.5 SP1. I hope this helps. Anthony L.

Hi: Regarding *.ldb (lock database file): this file is specific to MS Access databases. It stores info on logged-on users, as well as other info that protects your database from concurrent editing. Google the keywords "ldb Access" and you will find plenty of info. So by itself the *.ldb file does not necessarily prevent multiple users from using the database or from reconnecting multiple times. But to prevent problems, make sure that the following is implemented:
1. Close your connections to the MS Access database and set the reference to nothing (destroy the reference) after the LabVIEW session is done using the database. If you forget to do this and LabVIEW reopens the connection multiple times, the MS Access database will at some point lock out new connection attempts.
2. Make sure that the shared folder hosting the MS Access database has read/write and, if needed, delete privileges as well.
I hope that this helps. Anthony L.