Posts posted by Jordan Kuehn

-

You can build an installer after you create your executable build spec. In the installer build spec you can specify additional installers for drivers and such. I would recommend setting up a fresh VM in VirtualBox or something similar to test with. Once the drivers are packaged so that your application runs on a fresh VM straight from the installer, you can take it around to your other computers and deal with IT just once. It's often hard to catch every single driver, or the machine you are checking on already has some installed, so you don't discover you missed one until you go to a brand-new machine.

-

We've done a couple of one-off IO-Link implementations, but they were by no means an all-encompassing tool that would work with the general standard. We would pay for a development license, but the deployment license costs from the one LabVIEW IO-Link toolkit vendor were untenable, as we have hundreds of systems. We'd be very interested in something like what you described. Please post updates! Do you intend to open-source it or to commercialize it?

-

1 hour ago, hooovahh said:

I went to dig up my fast ping utility for Windows, but I see you were already in that thread.

As for this thread: I rarely use the parallel for loop, but when I do, it is for simpler code. It has the caveats Shaun mentioned, and I typically use it in very small cases where the number of iterations is small. In the past I used it to access N serial ports in parallel, talking to N different devices, sending each the same series of commands, and waiting for all of them to respond. I have also used it when I want to spin up N of the same actor; in that case there are other communication protocols to handle talking between processes. Again, these are usually limited by some hardware resource, like two independent DMMs. If it needs to be very scalable, with large numbers of instances, a parallel for loop probably isn't what you want.

Thanks for the reminder! Since that comment I've had an increased need to run this on Windows in a similar use case, but the Linux command doesn't port over well. I'll give your tool another look!
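For readers coming from text languages, the pattern hooovahh describes (the same command series sent to N independent devices, then waiting for every response) maps onto a thread pool. A minimal Python sketch, assuming a hypothetical `FakeDevice` class in place of a real serial port; each worker owns its own device object and log, roughly the role preallocated clones play in a pLoop:

```python
from concurrent.futures import ThreadPoolExecutor


class FakeDevice:
    """Hypothetical stand-in for one serial instrument; echoes commands."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.log: list[str] = []  # per-device state, never shared between workers

    def query(self, command: str) -> str:
        self.log.append(command)
        return f"{self.name}:{command}:OK"


def run_sequence(device: FakeDevice, commands: list[str]) -> list[str]:
    """Send the same command series to one device, keeping responses in order."""
    return [device.query(cmd) for cmd in commands]


def run_all(devices: list[FakeDevice], commands: list[str]) -> list[list[str]]:
    """Run one worker per device and block until every device has answered."""
    # max(1, ...) keeps the executor valid when the device list is empty.
    with ThreadPoolExecutor(max_workers=max(1, len(devices))) as pool:
        # map() returns results in device order, whatever order they finish in.
        return list(pool.map(lambda d: run_sequence(d, commands), devices))
```

Because `pool.map` returns results in input order, each response list stays matched to its device without any extra bookkeeping.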

-

4 hours ago, ShaunR said:

There are surprisingly few situations where a parallel for loop (pLoop) is the solution. There are so many caveats and foot-shooting opportunities, even if you ignore the caveats imposed by the IDE dialogue.

For example, for the pLoop to operate as you would imagine, VIs that are called must be reentrant (and preferably preallocated clones). If a called VI is not reentrant, the loop will wait until it finishes before calling it in another parallel loop instance (that's just how dataflow works). If a called VI is set to reentrant shared clones, you get the same problems as with any shared clone that has memory, but multiplied by the number of loop iterations.

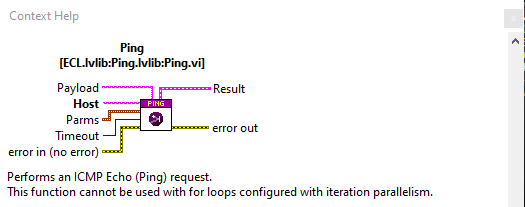

Another issue you often come across with shared, connectionless resources (say, raw sockets) is that you cannot guarantee the order in which the underlying resource is accessed. If it is, say, a byte stream, you would have to add extra information in order to reconstruct the stream, which may or may not be possible. I have actual experience of this, and it is why the ECL Ping functionality cannot be called in a pLoop.
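To illustrate the "extra information" needed to rebuild a byte stream when parallel writers can finish in any order, here is a minimal Python sketch that tags each chunk with a sequence number and reorders on receipt. The 4-byte big-endian header is an assumption for illustration, not how ECL or any particular raw-socket protocol frames its data:

```python
import struct

HEADER = struct.Struct(">I")  # assumed 4-byte big-endian sequence number


def packetize(data: bytes, chunk_size: int) -> list[bytes]:
    """Split a byte stream into chunks, each prefixed with its sequence number."""
    return [
        HEADER.pack(seq) + data[offset:offset + chunk_size]
        for seq, offset in enumerate(range(0, len(data), chunk_size))
    ]


def reassemble(packets: list[bytes]) -> bytes:
    """Rebuild the stream regardless of the order the packets arrived in."""
    by_seq = {}
    for pkt in packets:
        (seq,) = HEADER.unpack_from(pkt)
        by_seq[seq] = pkt[HEADER.size:]  # a duplicate simply overwrites itself
    expected = list(range(len(by_seq)))
    # Detects gaps; a lost *final* chunk would also need a total count to detect.
    if sorted(by_seq) != expected:
        raise ValueError("gap in sequence numbers")
    return b"".join(by_seq[seq] for seq in expected)
```

As the post says, this only works if every chunk can carry its own number; with a protocol you don't control, that may not be possible.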

Not to derail the above conversation, but can this ping function reliably time out in under 1 s on Windows?

-

I've found that the most recently modified files in this directory provide some insight when your app crashes or has issues starting. It's not always intuitive what is in there, but I have been able to identify problem components from the legible portions.

/var/local/natinst/log
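A minimal Python sketch for the "newest files first" check, assuming Python is available on the target (or that you have copied the log directory to a PC). It lists only regular files at the top level of the directory, sorted by modification time:

```python
from pathlib import Path


def newest_files(directory: str, count: int = 10) -> list[Path]:
    """Return up to `count` regular files in `directory`, most recently modified first."""
    files = [p for p in Path(directory).iterdir() if p.is_file()]
    files.sort(key=lambda p: p.stat().st_mtime, reverse=True)
    return files[:count]
```

For example, `newest_files("/var/local/natinst/log", 5)` returns the five most recently touched log files right after a failed startup.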

I don't share Mads' experience of reduced reliability or increased bugs, save for the compilation issue I commented on in another thread, which was actually a software bug I introduced and discovered via that log directory. We use hundreds of cRIOs across the country, running for weeks or months at a time in mission-critical applications. In fact, the reliability of Linux RT is a pretty big factor in staying with the platform instead of moving to expansion chassis and a central computer. Obviously, built-in FPGA is another huge asset when needed.

-

I have many systems that use NSVs (network shared variables) with programmatic access. They are reliable and work well in these applications. That said, we've moved away from them in newer applications and use MQTT for communication. There are several flavors of TCP communication wrappers/messaging libraries around as well.

-

Are you able to see the shared variables listed under the target using the Distributed System Manager? Depending on the type, you may or may not be able to see the current value, but its presence alone will tell you whether it was properly deployed. Further, are you capturing errors when writing to or reading from them? While the error codes aren't always super helpful, they can lend some insight. Lastly, are you dropping the variables from the project onto the block diagram (BD) to read/write them, or are you addressing them programmatically and using the shared variable VIs to communicate with them? The latter option, while not as convenient, seems to be more robust and scalable, and gives you the most insight into the activity or errors there.

-

Well, this is timely. We upgraded to LabVIEW 2023 Q3 64-bit a couple of months back. No major issues at the time, even transitioning to 64-bit and programming RT. However, for the last several days I have had RT builds that complete successfully but simply will not execute on the target. These were already built and deployed just fine after the transition, but now, after minor changes, it refuses to build something that will run. It's certainly possible I have a problem in the code, but your post popped up on day 3 of me fighting this, so I figured I'd chime in.

-


Google can be easier at times. If you add site:lavag.org at the end of a search, it will restrict the results to LAVA. That said, I could not find that thread with Google either, even searching the title itself. So my suggestion isn't super helpful in this case, but I use that Google feature often with LAVA or NI.
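The same `site:` trick can be scripted. A small Python sketch that builds the search URL; `site_search_url` is a hypothetical helper, and only Google's standard `q` query parameter is used:

```python
from urllib.parse import urlencode


def site_search_url(terms: str, site: str = "lavag.org") -> str:
    """Build a Google search URL restricted to one site via the site: operator."""
    query = f"{terms} site:{site}"
    # urlencode turns spaces into '+' and escapes ':' as %3A.
    return "https://www.google.com/search?" + urlencode({"q": query})
```

Passing `site="forums.ni.com"` gives the same filter for the NI forums.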

-

Implementing the State Pattern in Actor Framework.pdf

This PDF is in the project template. I'm literally in the middle of a new State Pattern implementation and am using this template for the first time myself. If anything, it could use a refresh with the new Launch Nested Actor VIs, but aside from that, the information is available in this example project.
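For anyone new to the idea, the core of the State Pattern is that each state handles a message and returns the state to continue as. A minimal Python sketch using composition (a hypothetical `Machine` holding a swappable state object); the AF template instead replaces the actor itself through the Substitute Actor.vi override, so treat this as an analogy rather than the AF API:

```python
from __future__ import annotations


class State:
    """Base state: every message handler returns the state to continue as."""

    def on_message(self, machine: Machine, msg: str) -> State:
        return self  # unhandled messages leave the state unchanged


class Idle(State):
    def on_message(self, machine: Machine, msg: str) -> State:
        if msg == "start":
            machine.log.append("Idle -> Running")
            return Running()
        return self


class Running(State):
    def on_message(self, machine: Machine, msg: str) -> State:
        if msg == "stop":
            machine.log.append("Running -> Idle")
            return Idle()
        return self


class Machine:
    """Holds shared data and swaps its state object after each message."""

    def __init__(self) -> None:
        self.state: State = Idle()
        self.log: list[str] = []

    def send(self, msg: str) -> None:
        self.state = self.state.on_message(self, msg)
```

Data that must survive a transition lives on `Machine` here; in the AF version it has to be carried across when the replacement actor is created.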

-

On 11/9/2023 at 4:43 PM, smarlow said:

All I know is that if they don't do something to make it a more powerful language, it will be difficult to keep it going in the long run. In the past it was always a powerful choice for cross-platform compatibility. With macOS deprecating (and eventually completely removing) support for OpenGL/OpenCL, we see the demise of the original LabVIEW platform.

I for one would like to see a much heavier support for Linux and Linux RT. Maybe provide an option to order PXI hardware with an Ubuntu OS, and make the installers easier to use (NI Package Manager for Linux, etc.). They could make the Linux version of the Package Manager available from the Ubuntu app store. I know they say the market for Linux isn't that big, but I believe it would be much bigger if they made it easier to use. I know my IT department and test system hardware managers would love to get rid of Windows entirely. Our mission control software all runs in Linux, but LabVIEW still has good value in rapid application development and instrument bus controls, etc. So we end up running hybrid systems that run Linux in a VM to operate the test executive software, and LabVIEW in Windows to control all our instruments and data buses.

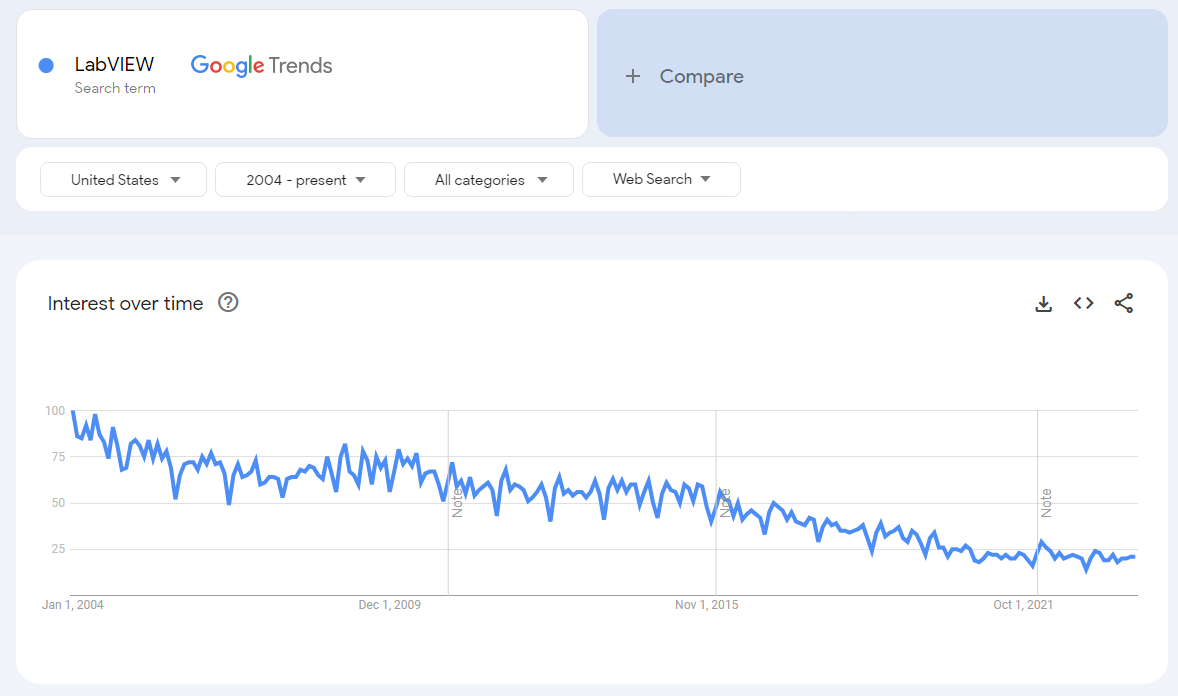

Allowing users the option to port the RT Linux OS to lower-cost hardware, the way they did for the Phar Lap OS, would also certainly help out. BTW, is it too much to ask to make all the low-cost FPGA hardware from Digilent LabVIEW-compatible? I can see IoT boards like the Arduino Portenta, with its 16-bit analog I/O, seriously eating their lunch in the near future. ChatGPT is pretty good at churning out Arduino and Raspberry Pi code that's not too bad. All of our younger staff use Digilent boards for embedded stuff, programming them in C and VHDL using Vivado. The LabVIEW old-timers are losing work because the FPGA hardware is too expensive. We used to get by in the old days buying myRIOs for simpler apps on the bench. But that device has not been updated for a decade, and it's twice the price of the ZYBO. Who has $10K to spend on an FPGA card anymore, not to mention the $20K PXI computer to run it? Don't get me wrong, the PXI and CompactRIO (can we get a faster DIO module for the cRIO, please?) are still great choices for high-performance and rugged environments. But not every job needs all that. Sometimes you need something inexpensive to fill the gaps. It seems as if NI has been willing to let all that go and keep LabVIEW in the role of selling their very expensive high-end hardware. But as low-cost hardware gets more and more powerful (see the Digilent ECLYPSE Z7), and high-end LV-compatible hardware gets more and more expensive, LabVIEW fades more and more.

I used to teach LabVIEW in a classroom setting many years ago. NI always had a few "propaganda" slides at the beginning of Basics I extolling the virtues of LabVIEW to the beginners. One of these slides touted "LabVIEW Everywhere" as the roadmap for the language, complete with pictures of everything from IoT hardware to appliances. The reality of that effort became the very expensive "LabVIEW Embedded" product that was vastly over-priced, bug-filled (never really worked), and only compatible with certain (Blackfin?) eval boards that were just plain terrible. It came and went in a flash, and the whole idea of "LabVIEW Everywhere" went with it. We had the sbRIOs, but their pricing and marketing (vastly over-priced, and targeted at the high-volume market) ensured they would not be widely adopted for one-off bench applications. Lower-cost FPGA evaluation hardware and the free Vivado WebPack have nearly killed LabVIEW FPGA. LabVIEW should be dominating. Instead you get this:

Excellent points, all of them. I have echoed your frustrations with the inability to put Linux RT on arbitrary hardware, the lack of low/middle-cost I/O boards, the lack of low-cost FPGA, etc.

-

Included in the project is documentation that directly addresses your question about overriding Substitute Actor.vi, including some caveats and considerations to keep in mind while doing it. The project itself is also a simple functional example, as originally requested.

-


Stagg54's suggestion was a good one. Take a look at the State Pattern Actor example project that heavily utilizes this override.

-

Thank you for sharing! I would have never seen it since I'm rarely on those forums.

The link is broken, but here is the darkside link: https://forums.ni.com/t5/LabVIEW/Darren-s-Occasional-Nugget-08-07-2023/td-p/4321488

-

If you have a current service agreement, I would suggest taking this to NI directly if you haven't already.

https://sine.ni.com/srm/app/myServiceRequests

-

SystemLink is an (expensive) solution to this as well, though it doesn't give you project or shell access.

Another, cheaper alternative is to use a modem/router-based VPN so that you can see the devices. Cradlepoint and Peplink are two that we have used.

-


On 5/30/2023 at 1:33 AM, acb said:

Would you share a device you'd like Nigel to create a driver for automatically? That would be a wonderful test for us.

Here's one for you. The AXS Port is a Modbus device that monitors power inverters. It would be great to feed Nigel the attached document and have it generate a driver/API for communicating with the device.

https://www.outbackpower.com/products/system-management/axs-port

Another one for you, in a similar vein but a little less straightforward. This is a Banner IO-Link Master that can communicate via EtherNet/IP or Modbus. An EtherNet/IP driver that could configure the device, as well as the ifm DTI434 IO-Link RFID read head linked below, would be great (documents attached).

https://www.ifm.com/us/en/product/DTI434

ifm-DTI410-20190125-IODD11-en (2).pdf dxmr904k.pdf axs_app_note.pdf 229732.pdf
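Since the AXS Port speaks Modbus, much of a generated driver reduces to building and parsing frames. A minimal Python sketch of a Modbus TCP Read Holding Registers (function 0x03) request and response parser; any register addresses used with it are placeholders, not the AXS Port's actual register map:

```python
import struct


def read_holding_registers_request(transaction_id: int, unit_id: int,
                                   start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP request for function 0x03 (Read Holding Registers)."""
    if not 1 <= quantity <= 125:
        raise ValueError("quantity must be 1..125 registers")
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction id, protocol id (0 = Modbus),
    # length of what follows (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_holding_registers_response(frame: bytes) -> list[int]:
    """Extract register values from a function 0x03 response frame."""
    # 7-byte MBAP header, then the function code.
    _tid, _pid, _length, _unit, function = struct.unpack_from(">HHHBB", frame)
    if function & 0x80:
        # Exception responses carry a single exception code after the function code.
        raise RuntimeError(f"Modbus exception code {frame[8]}")
    if function != 0x03:
        raise ValueError(f"unexpected function code {function:#04x}")
    byte_count = frame[8]
    return list(struct.unpack_from(f">{byte_count // 2}H", frame, 9))
```

The request bytes would be sent over a TCP connection, conventionally to port 502, and the reply passed to the parser.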

-

The demo made me wonder whether it could, in theory, create drivers from spec sheets automatically. It seems like a well-bounded problem: create LabVIEW wrappers for the commands listed in the manual.
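The "well-bounded" part is that a manual's register or command table can drive the whole API. A Python sketch of that idea, with a hypothetical register table and a stand-in `read_register` function instead of a real transport:

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RegisterSpec:
    """One row transcribed from a device manual (all values hypothetical)."""
    name: str
    address: int
    scale: float  # engineering units per raw count
    unit: str


# Hypothetical register table; a real one would come from the manual.
SPEC = [
    RegisterSpec("battery_voltage", 100, 0.1, "V"),
    RegisterSpec("inverter_temp", 101, 1.0, "degC"),
]


def build_api(spec: list[RegisterSpec],
              read_register: Callable[[int], int]) -> dict[str, Callable[[], float]]:
    """Generate one scaled getter per spec row, all sharing one transport function."""
    def make_getter(row: RegisterSpec) -> Callable[[], float]:
        # Binding `row` through a factory avoids the late-binding trap of
        # lambdas created directly inside a comprehension.
        return lambda: read_register(row.address) * row.scale
    return {row.name: make_getter(row) for row in spec}
```

With a dict of fake register values, `build_api(SPEC, fake.__getitem__)["battery_voltage"]()` returns the scaled reading; swapping in a real Modbus read function leaves the generated API unchanged.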

-

Is anyone headed to Austin for NI Connect in a couple of weeks? Since I haven't seen any LAVA BBQ news, I assume that's not happening, but perhaps some fellow LAVA-ers(?) are interested in gathering somewhere?

-

What sample rates and what frequencies are you using?

-

This may be of help:

-

I couldn't find the code repository entry for this library, but I am getting an error:

PQ Connection.lvclass:Connect.vitsdb CONNECTION_BAD: authentication method 10 not supported

with PostgreSQL versions 14.7 on Linux and 15.2 on a PC, running the toolkit in LV2021 on Windows.

Is there an issue with authentication and SHA-256 on the newer versions of PostgreSQL?
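For what it's worth, the number in that error lines up with the PostgreSQL frontend/backend protocol, where authentication request code 10 is AuthenticationSASL, the request a server sends when SCRAM-SHA-256 authentication is in use (`password_encryption` defaults to `scram-sha-256` since PostgreSQL 14). That suggests, though it is only an inference, that the client library used by the toolkit predates SCRAM support, which libpq gained in PostgreSQL 10. A Python sketch that decodes such an authentication request message:

```python
import struct

# Authentication request codes from the PostgreSQL frontend/backend protocol.
AUTH_CODES = {
    0: "AuthenticationOk",
    2: "AuthenticationKerberosV5",
    3: "AuthenticationCleartextPassword",
    5: "AuthenticationMD5Password",
    7: "AuthenticationGSS",
    8: "AuthenticationGSSContinue",
    9: "AuthenticationSSPI",
    10: "AuthenticationSASL",
    11: "AuthenticationSASLContinue",
    12: "AuthenticationSASLFinal",
}


def describe_auth_request(message: bytes) -> str:
    """Decode a backend 'R' message: type byte, int32 length, int32 auth code."""
    if message[:1] != b"R":
        raise ValueError("not an authentication request")
    _length, code = struct.unpack_from(">ii", message, 1)
    name = AUTH_CODES.get(code, "unknown")
    if code == 10:
        # SASL body: null-terminated mechanism names, ended by an extra zero byte.
        names = [m.decode() for m in message[9:].split(b"\0") if m]
        return f"{name} ({', '.join(names)})"
    return name
```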

-

5 hours ago, ShaunR said:

As you are the only one that has commented on it at all (indirectly), I think that's a resounding "don't bother".

*ahem*

Not that I'm suggesting that you do bother haha.

-

On 3/11/2023 at 8:32 AM, ShaunR said:

Anyone interested in CoAP?

I have had a passing interest. I think you were extolling its virtues some time ago, but when I saw I'd need to build the LV implementation of the protocol from scratch, I lost interest. I also didn't see much adoption at the time in the areas where I was working, but that is probably worth a fresh look.
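For a sense of what building CoAP from scratch involves, the message format in RFC 7252 is compact. A minimal Python sketch that encodes a confirmable GET with Uri-Path options; it skips retransmission, block-wise transfer, responses, and DTLS, and only handles option values up to 268 bytes:

```python
import struct

COAP_VERSION = 1
TYPE_CON = 0             # confirmable message
CODE_GET = 0x01          # code 0.01
OPTION_URI_PATH = 11


def encode_option(delta: int, value: bytes) -> bytes:
    """Encode one option header plus value (delta < 13, length <= 268 only)."""
    if delta >= 13:
        raise ValueError("extended option deltas not handled in this sketch")
    length = len(value)
    if length < 13:
        return bytes([(delta << 4) | length]) + value
    if length <= 268:
        # Length nibble 13 means one extra byte holding (length - 13).
        return bytes([(delta << 4) | 13, length - 13]) + value
    raise ValueError("option value too long for this sketch")


def encode_get(path: str, message_id: int, token: bytes = b"") -> bytes:
    """Build a confirmable CoAP GET request for a path like 'sensors/temp'."""
    if len(token) > 8:
        raise ValueError("token is at most 8 bytes")
    # First byte: version (2 bits), type (2 bits), token length (4 bits).
    first = (COAP_VERSION << 6) | (TYPE_CON << 4) | len(token)
    message = struct.pack(">BBH", first, CODE_GET, message_id) + token
    previous = 0
    for segment in filter(None, path.split("/")):
        # Option numbers are delta-encoded against the previous option.
        message += encode_option(OPTION_URI_PATH - previous, segment.encode())
        previous = OPTION_URI_PATH
    return message
```

The resulting bytes would go out as a single UDP datagram, by default to port 5683.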

-

Where'd the conditional terminal go?

in Development Environment (IDE)

Agreed, 100%.