-

Posts

693 -

Joined

-

Last visited

-

Days Won

21

Posts posted by Jordan Kuehn

-

-

8 minutes ago, hooovahh said:

So I've been using, and loving, OpenG zip functions on my x86 based embedded cDAQ running RT Linux. But I recently ran into an issue. The newest versions of XNet only support being installed as OPKG packages. These can only be installed by using the System Image install option, and not the custom option that is needed for OpenG Zip. As a test I SSH'ed into my controller and copied the liblvzlib.so where it belonged, and my previously written code seems to find and use it. Is there any plan to update the inno installer to somehow add feeds or something so that the OpenG Zip tools can be installed through MAX, on controllers that use the System Image option?

You can call opkg install from the CLI and manually install a package you have copied over. Some discussion here where I built such a package. We use SystemLink which makes it easy to add the custom feeds and such, but hopefully this could help some?

-

Cross posted to the dark side.

Hello,

I'm attempting to work with the launch remote actor function utilizing two cRIOs. I have a working AF application on one cRIO and I would like to spawn a few Actors to run on another one beneath my original top level actor, hoping that the top level actor will be able to interact with them as if they exist locally.

I have watched this presentation from JustACS and have considered using the nested endpoints, but this approach seems more "AF-ish" and doesn't require extra communication actors mid-tree, or mid -> top of tree.

My question at this point is that I don't think I have the dependency injection correct for a built exe. The example doesn't seem to address applications outside the IDE either. I believe I have gotten the VI Server settings working and can launch the actor from the Server side without errors now. I encountered some errors while working on the dependency injection. Note: these are built and deployed startup exes.

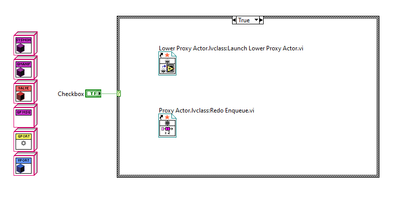

I first started with something like in the webcast:

And here is mine:

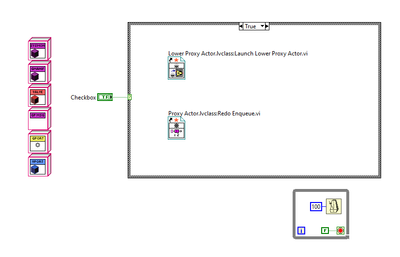

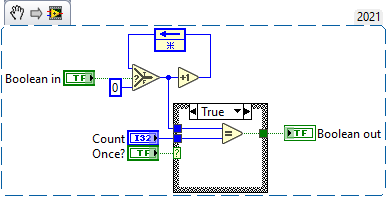

However, I encountered errors on the Server side in the upper proxy vi until I added this loop:

Now the Server side launches, but I don't see any activity from my remote actors. I expect at the very least to have some of the initial logging that happens at the beginning of their Actor Cores happen, even if there are other errors, but I see nothing on the Client side.

Am I missing something simple here on the dependency injection? I attempted to include just a few actors at first, and then added all the actors that could possibly be linked in some way, added subvis to the always include in the build spec, etc.

Any help or pointers here would be greatly appreciated.

Thank you!

-

The (advanced) software track seems light, but I'm going into this with some reasonable expectations coming out of the pandemic and also the rebranding as a smaller event. At least that's what I'm trying to do.

https://www.ni.com/en-us/events/niconnect/austin.html#pinned-nav-section4

-

I'm hesitant to download and open/run this. Do you have some screenshots/snippets? Anything you'd like to discuss about it?

-

I use this on cRIO with the system exec vi:

timeout 0.1 ping -c1 127.0.0.1

Replace the 0.1 with the timeout you want (in seconds) and the 127.0.0.1 with whatever IP address or hostname you want. I use this to determine if I want to attempt to open a shared variable connection to an expansion chassis since the timeouts on that API do not work.
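For anyone doing the same check from a script instead of the System Exec VI, here is a minimal Python sketch of the idea. It is only an illustration: `build_ping_command` and `is_reachable` are made-up names, and it swaps the `timeout` utility for the `timeout` argument of `subprocess.run`, which kills the ping if it takes too long.

```python
import subprocess
from typing import List


def build_ping_command(host: str) -> List[str]:
    """Return the argument list for a single ping to `host` (hypothetical helper)."""
    return ["ping", "-c1", host]


def is_reachable(host: str, timeout_s: float = 0.1) -> bool:
    """Return True only if one ping to `host` succeeds within `timeout_s` seconds."""
    try:
        result = subprocess.run(
            build_ping_command(host),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,  # stands in for the `timeout 0.1` prefix
        )
    except (subprocess.TimeoutExpired, OSError):
        # Timed out, or ping could not be started at all.
        return False
    return result.returncode == 0


if __name__ == "__main__":
    # Only attempt the slow connection if the target answers quickly.
    print(is_reachable("127.0.0.1", timeout_s=0.5))
```

The point is the same as with the one-liner: decide quickly whether the target is there before calling an API whose own timeouts can't be trusted.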

-

I have approval for the trip and will be bringing a few other engineers. LAVA BBQ happening?

-

18 minutes ago, Mads said:

Start with something simple, then work from there... Here is an example of how it looks with a simple test:

The deflated string is binary so the string indicator/control is set to hex for the deflated input/output...

If the content can be compressed too much and the expected length is not included it can fail yes....We had an issue with that where we could not change a protocol to include the length, we "fixed" it (increased the probability of success that is) by editing the inflate VI so that it would run a few extra buffer allocation rounds - you can do that too...

My simple test is quite like yours. It failed without the expected length wired. It worked with it wired. Thank you for the example. I will look into adjusting the inflate VI to auto-run a few more rounds, thank you for the suggestion.
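To make the "few more rounds" idea concrete, here is a rough sketch in Python, since a block diagram can't be pasted as text. This is not how the OpenG inflate VI is actually implemented; `inflate_with_retries`, `initial_size`, and `max_rounds` are names invented for illustration. Each round caps the output size, and if the stream has not ended within that cap, the cap is doubled and the inflate is tried again.

```python
import zlib


def inflate_with_retries(data: bytes, initial_size: int, max_rounds: int = 8) -> bytes:
    """Inflate `data`, doubling the allowed output size on each failed round."""
    size = initial_size
    for _ in range(max_rounds):
        decomp = zlib.decompressobj()
        # Produce at most `size` bytes of output in this round.
        out = decomp.decompress(data, size)
        if decomp.eof:
            # The whole stream fit within the current limit.
            return out
        size *= 2  # not enough room, so allow twice as much next round
    raise ValueError("output still does not fit after %d rounds" % max_rounds)


if __name__ == "__main__":
    original = b"a" * 100000  # extremely compressible, like repetitive JSON
    deflated = zlib.compress(original)
    print(len(deflated), len(inflate_with_retries(deflated, 1024)))
```

More rounds raise the odds of success for very compressible data, but without the expected length in the message there is always some input that needs one more round than you allowed.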

I just provided the big picture use case in case the additional context might shed some light on what I'm after. Definitely working up incrementally to using it in that implementation.

-

On 2/12/2022 at 5:39 PM, Rolf Kalbermatter said:

Hmmm, clipboard copy! That has a very good chance of trying to be smart and to do text reformatting. I would definitely drag the entire control with all the data from one VI to the other, which should avoid Windows trying to be helpful. As a control, LabVIEW puts it in an application private format in the clipboard together with an image of the control. LabVIEW itself can pull the private format out of the clipboard, other applications will not understand that format and pull the image from the clipboard.

If you only select the text, LabVIEW will store it as normal ASCII text in the clipboard and Windows may try to do all kinds of things including trying to translate it to proper Windows text, which could replace all \r "characters" with \r\n and there is even the chance that the text goes through ASCII to UTF-16 and back to ASCII on the way through the clipboard and that is not always a fully 100% back and forth translation, even though they may look optically the same. Text encoding translations is a total pitta to fully understand.

So I just tried that without success. I had several screenshots to post of what I did, etc. and then I tried it with providing the expected length and it worked just fine. Is this input required? I read the description where you say it will work for up to 94% compression unwired. I'm compressing a JSON string of basically an array of clusters (quite compressible). Would it be disadvantageous to wire a sufficiently large constant to this input rather than bundling the actual expected output with the data? It did also work when I tested with a large input.

My use case here is to reduce bandwidth requirements when transferring JSON encoded status information via MQTT to a 3rd party system (non-LV). I hope to give them the requirement of inflating via zlib after delivery and then proceeding to use the JSON data as they like.
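For the receiving side, here is a minimal sketch of what the third party might do, assuming they use Python and that the deflated payload is a standard zlib stream (header and checksum included). The status fields and the `inflate_status` name are invented for illustration, and the MQTT transport is left out. Python's `zlib.decompress` grows its output buffer as needed, so a consumer like this does not need the expected length sent along.

```python
import json
import zlib

# Stand-in for the LabVIEW side: an array of clusters encoded as JSON.
status = [{"channel": i, "value": 20.0 + i, "ok": True} for i in range(200)]
payload = json.dumps(status).encode("utf-8")
compressed = zlib.compress(payload)  # this is what would go out over MQTT


def inflate_status(blob: bytes):
    """Inflate a zlib-compressed JSON payload and parse it (hypothetical helper)."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


restored = inflate_status(compressed)

if __name__ == "__main__":
    print(len(payload), "bytes raw,", len(compressed), "bytes compressed")
    print(restored == status)
```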

-

I will give it a more thorough test! I copied from one VI indicator to a constant in another application instance. I think. Knowing that it *should* work is already very helpful. Thank you both.

-

I can split this off onto another thread, but I copied a deflated string produced by Linux RT and tried to inflate it on Windows and it did not work. I took the original data and deflated/inflated all within Windows just fine. Is there a compatibility issue between the two OS implementations?

-

I agree with ShaunR. Despite it being posted as replies to several posts all in one day, I don't think it's overly spammy and they were mostly relevant to the discussions at hand. It was enough to get me to poke around at the product page and file it away in my mind should the need arise.

-

1 hour ago, Rolf Kalbermatter said:

LabVIEW realtime support is only existing in the never officially released OpenG ZIP Library 4.1. That package is only available as download from earlier in this discussion thread here on LavaG and over at the NI forum I believe.

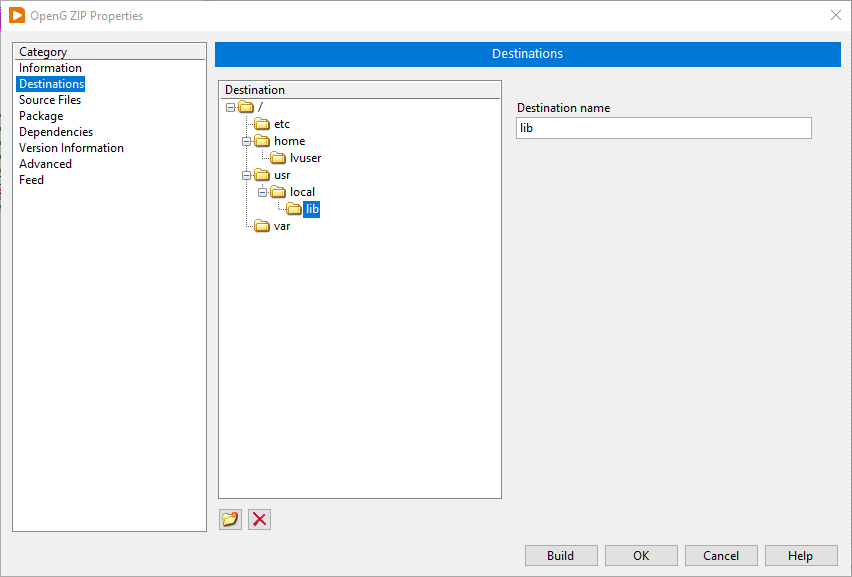

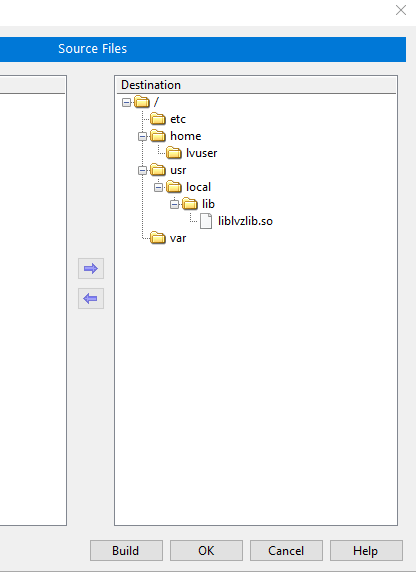

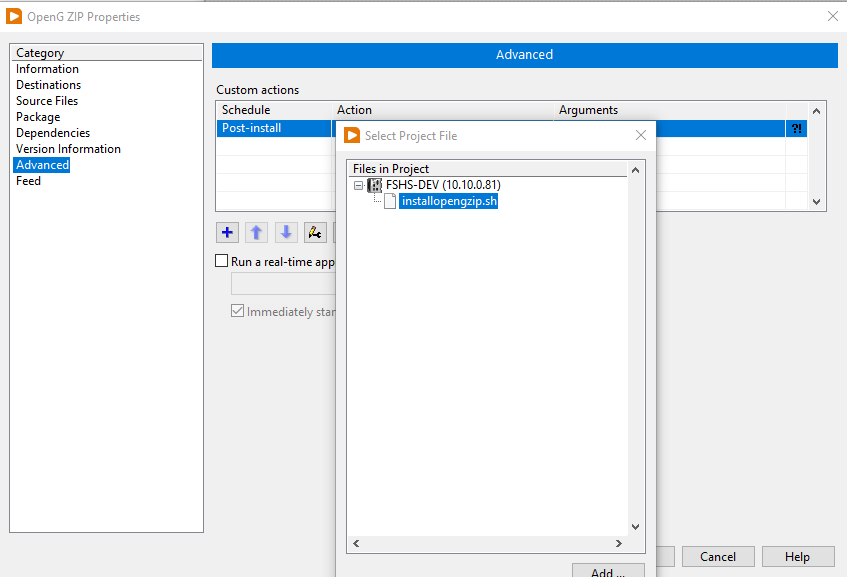

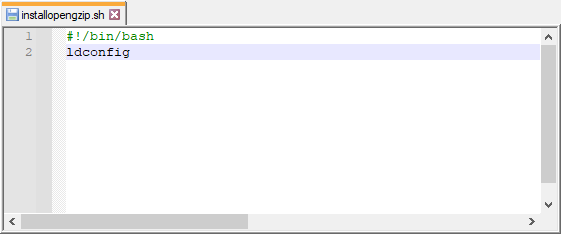

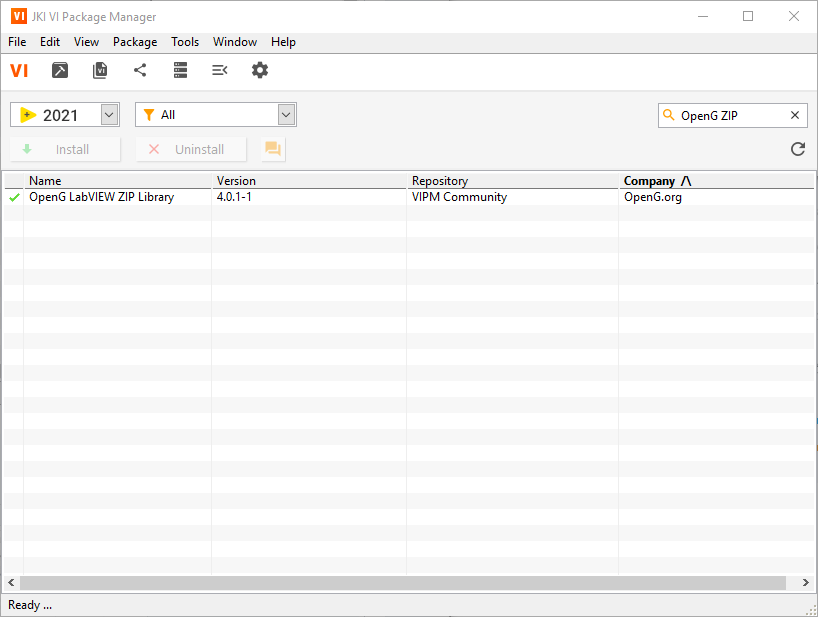

Got it! I got the files installed, put them in a package build spec in the project explorer. Configured the source file to go where you said and wrote a simple script to run the ldconfig as a post install action. See screenshots below. I installed the package and ran the same test code that errored on deployment and it worked great this time. No manual moving of libraries on my end. Package attached. Thanks for all the help!

-

4 hours ago, Rolf Kalbermatter said:

Well the OpenG ZIP tools don't really represent a lot of elements on the realtime. Basically you have the shared library itself called liblvzlib.so which should go into /usr/local/lib/liblvzlib.so and then you need to somehow make sure to run ldconfig so that it adds this new shared library to the ldcache file.

When you install the Beta version of the ZIP Tools package you should get a prompt at some point for administrative login credentials (or an elevation dialog if you are already logged in as administrator) which is caused by the ogsetup.exe program being launched as PostInstall hook of the OpenG package.

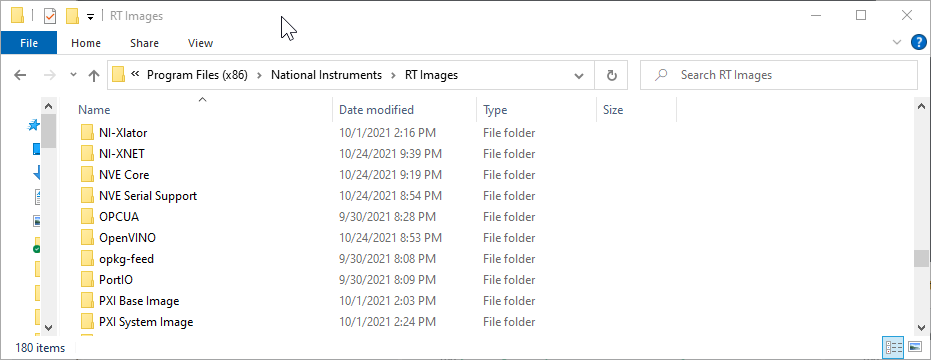

This ogsetup.exe program does nothing more than extract the different shared libraries into C:\Program Files (x86)\National Instruments\RT Images\OpenG ZIP Tools\4.2.0.

Depending on your target you need to copy the according liblvzlib.so from either the LinuxRT_arm or LinuxRT_x64 subdirectory to /usr/local/lib/liblvzlib.so on your target. That should be all that is needed although sometimes it can be necessary to also run ldconfig on a command line to have the new shared library get added to the ldcache for the elf loader. With the old installation method in NI-MAX this was taken care of by the installer based on the according *.cdf file in the OpenG ZIP Tools\4.2.0 directory.

I tried to checkout the NI Package Builder but can't see how one would make a package for RT targets. I also only see the 20.5 and 20.6 versions of the NI Package Builder as last version, maybe that is why?

I would be happy to see if I can build a package to share or at the very least move the files over to get it working in my application. However, I do not see that directory on my machine. I did reinstall while running VIPM in admin mode but still nothing.

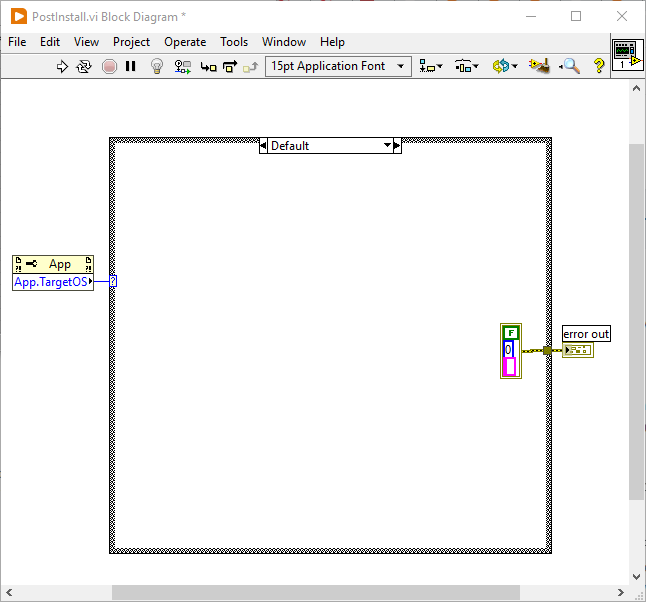

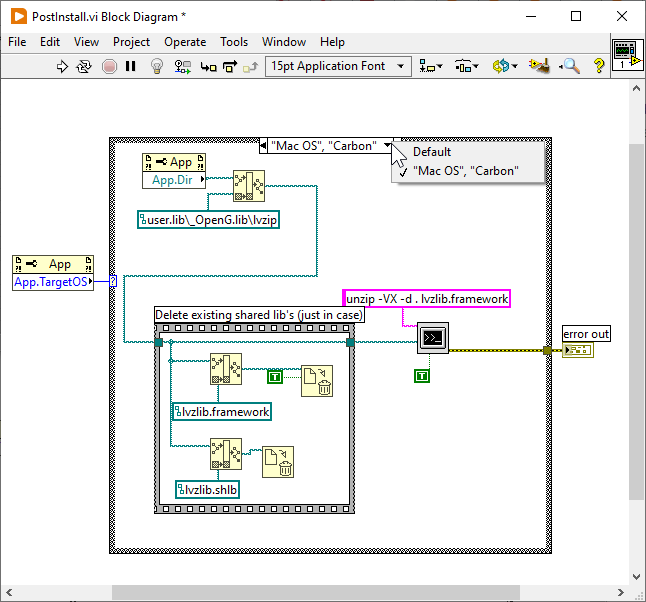

I opened the vipm package via winrar and looked around inside and this is what my post install vi looks like (I'm in Windows):

-

3 hours ago, Rolf Kalbermatter said:

So is there a problem with installing the OpenG ZIP tools for LabVIEW 2020 and/or 2021 on a cRIO at all or was that inquiry from Jordan something else? I can not place packages on the NI opkg streams so yes this library will always have to be sideloaded through NI-MAX in some way, unless you want to copy it over yourself by hand into the right directory and create the necessary symlinks on a command line and then run ldconfig. 😀

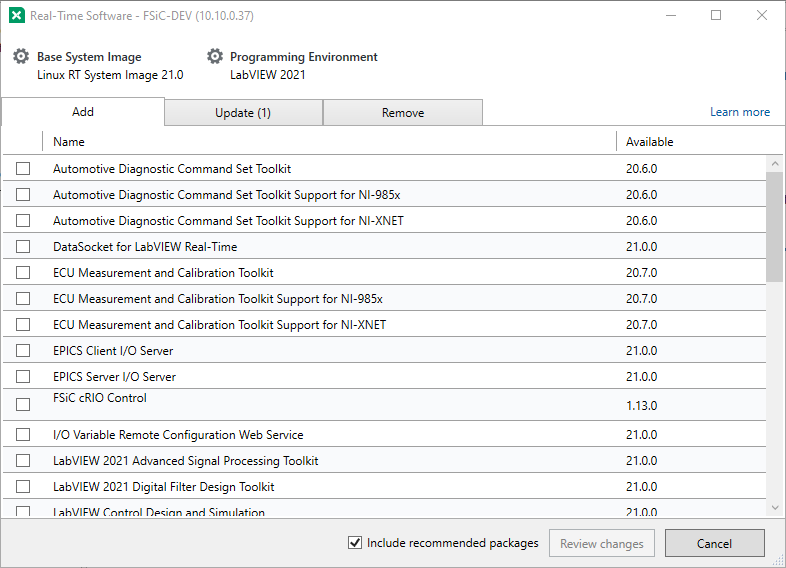

I do not see a way to do this in 2021. Perhaps it is because I have the Linux RT image installed and not a custom image to begin with, but right now, even in MAX, I only see the options to configure feeds and to install packages from those feeds. If a package were available or I could build my own (I’m not sure all of the details for this library installation) I can put it in my project and install it as a dependency without it being in the official NI feed.

Am I missing something here? I remember the old way of installing software, but I do not see where that is available anymore. I’ll grab some screenshots when I get to my desk if that would help.

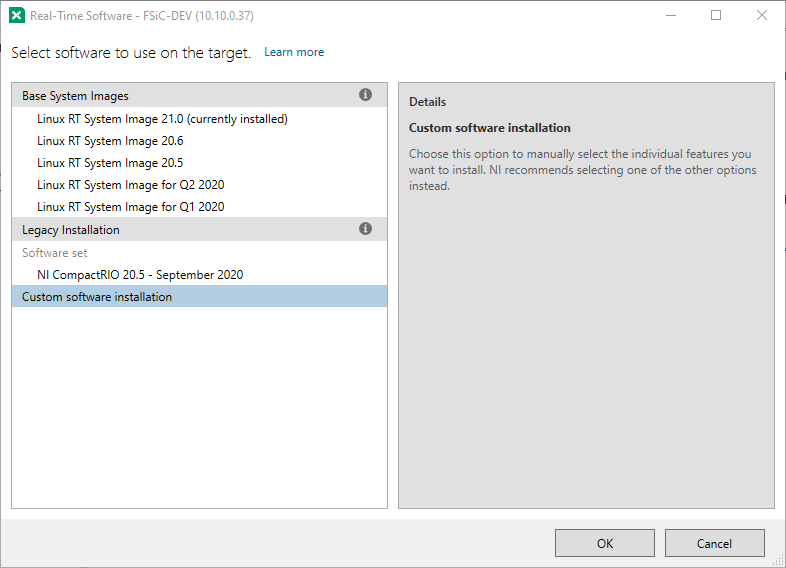

Edit// I think it was like this in 2020 as well now that I’m looking back. I believe it’s the Linux RT image that switches the software installation dialog. I can play with that some too a little later today to see if I can get it working like Mads described. But that would not work long term for me since all of my development is utilizing packages (and SystemLink) now.

Edit 2// Here is what I see when selecting the base system image to use. I believe if I go with the "Custom Software Installation" it will give the old method back, but that is described as "Legacy".

And as you can see here, I only have the ability to add packages via the configured feeds. Some NI feeds, some my own.

-

On 10/12/2017 at 4:59 AM, Rolf Kalbermatter said:

Yes you need to install the shared library too. If you run VIPM to install the package you should have gotten a prompt somewhere along the install that asked you to allow installation of an additional setup program. This will install the NI Realtime extensions for the LVZIP library.

After that you need to go into NI MAX and go to your target and select to install additional software. Deselect the option to only show recommended modules and then there should be a module for the OpenG ZIP library. Install it and the shared library should be on your controller.

Is this still true in LV2021 with Linux RT? I am getting the same error, but the software installer has changed in the current MAX and uses packages. In opkg I see a few Perl zlib packages and a Python one available, but not lvzlib.

-

1 hour ago, flarn2006 said:

- Sometimes, often when I drag something on the block diagram, wires will suddenly move to illogical locations. One place where I've noticed this often is with tunnels on the bottom edge of a structure, where the wire will suddenly arrange itself so it connects from the left instead (while the tunnel remains on the bottom).

Perhaps this is the same issue, which is a bug per AQ's reply:

https://forums.ni.com/t5/LabVIEW-Idea-Exchange/LV2021-Deactivate-Wire-Auto-Routing/idi-p/4183557

-

2 hours ago, brian said:

I was looking at https://www.ni.com/en-us/events/niconnect.html

where it says:

Elsewhere, it hints that there could be external presentations, but my guess is they'll be industry-focused (and apparently with invited speakers only).

Anybody heard more about it? Any thoughts on this?

Thanks for the link and bringing it to our attention! I focused on this part (my emphasis added):

-

4 minutes ago, flarn2006 said:

When is this necessary? FPGA I assume?

FPGA certainly. I have use for the code I posted when, say, thresholding a value and wanting to ensure that it has exceeded that threshold for a period. That value could be anything: a plain signal or its derivatives, a float switch with a digital input, etc. At high rate in an FPGA you’d normally use it for, say, a mechanical switch that makes intermittent contact rapidly as the contacts first come into contact with each other. I’m sure there are more examples!

-

That certainly fulfills the debounce nature in a more pure manner, debouncing both low and high. Mine is more of a conditional latch with optional single pulse output or latched high output. I think you got my point though about using a counter. As far as it being pretty or not, I don't know that I'd ever look at the BD again after finishing testing. The counts can be adjusted based on where you use it. Certainly sample rate will be a factor, but also expected noise/bounce vs desired responsiveness.
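Since the VI itself doesn't survive as text, here is a rough Python sketch of the counter idea, not a transcription of the actual block diagram. `ConditionalLatch`, `required_count`, and `latch` are names invented for illustration, and the exact reset behavior is an assumption: the count restarts whenever the condition drops, the pulse mode fires for exactly one sample when the count is reached, and the latched mode stays high until `reset()` is called.

```python
class ConditionalLatch:
    """Fire once a condition has held for `required_count` consecutive samples."""

    def __init__(self, required_count: int, latch: bool = False):
        self.required_count = required_count
        self.latch = latch      # True: hold output high; False: single pulse
        self._count = 0
        self._latched = False

    def reset(self) -> None:
        """Clear the counter and any latched output."""
        self._count = 0
        self._latched = False

    def update(self, condition: bool) -> bool:
        """Feed one sample; return the output for this sample."""
        if self.latch and self._latched:
            return True  # stays high until reset() in latched mode
        # Count consecutive True samples; any False starts over.
        self._count = self._count + 1 if condition else 0
        if self._count == self.required_count:
            self._latched = True
            return True  # the single-sample pulse (or the start of the latch)
        return False


if __name__ == "__main__":
    samples = [True, True, False, True, True, True, True, False]
    pulse = ConditionalLatch(required_count=3)
    print([pulse.update(s) for s in samples])
```

Choosing `required_count` is the same trade-off as on the real target: sample rate and expected noise or bounce on one side, desired responsiveness on the other.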

-

I have something like this that I use.

-

So that's what AQ means. Apprentice Questions?

-

6 hours ago, Mads said:

We normally just make the executable reboot the cRIO/sbRIO it runs on instead, through the system configuration function nisyscfg.lvlib:Restart.vi, but here are two discussions on killing and restarting just the rtexe on LinuxRT:

Oh that’s perfect. Just like OP on that post it’s the reboot time that’s the issue for me. This line is what I was missing:

/etc/init.d/nilvrt stop && /etc/init.d/nilvrt start

I’ll give this a try. Thank you.

-

21 hours ago, Rolf Kalbermatter said:

No! An rtexe is not a real executable. It is more like a ZIP archive that can not be started in itself but that needs to be started by invoking the runtime engine and passing it the rtexe as parameter. And the exact mechanism is fairly obscure and not well researched and totally not documented. Unless you are a Linux kernel hacker who knows how to investigate the run level initialization and how the LabVIEW rtexe mechanism is added in there.

Rolf, I've been looking for this information myself. Not quite in this use case as requested, but simply to restart the application. Do you have any reference for simply restarting the runtime engine and relaunching the configured rtexe? SystemLink is capable of doing this, but I haven't managed to figure out how yet.

-

Awesome, I will give it a try. The cluster writing would be great as well!

NI's New Software Subscription Model

in Announcements

Posted

Isn't this the case with many acquisitions by NI? Buy it up and let it languish instead of developing or integrating it?