
Posts posted by Jordan Kuehn

-


I cannot reproduce this behavior on my machines and just have logs and the description from the operator to go off of.

-

Just a small suggestion, but you could place your code in the TRUE case of case structure connected to the "NewVal" output of the event case. That would drop any double clicks by the user (as the second would be false). Can't help with the dialogs; though the primitive 2-button can be OKed with the Enter key, your second one has no key bindings and thus should require a very deliberate User action to dismiss.

How do you know the event happened, BTW? Are you sure the action contained isn't being triggered by some other method than the event case?

-- James

Well, the button is set to latch when released, so there should only be one firing. I know that both dialogs were confirmed because the desired action did occur, and on the confirmation (2nd) dialog the response is logged as either pass or fail based upon the user's input. It was logged as pass. Also, that action only exists in that specific event.

What event are you using? Could it be the event is supposed to fire twice?

See my second edit. The only event handled by that case is the value change event from that boolean.

-

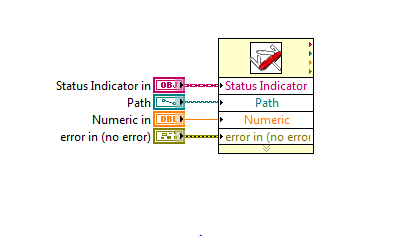

A simplified mock-up. I can add some more functionality if needed. Basically something triggered the event twice and dismissed both prompts with an 'OK' click.

Edit// Sorry, I left out the "Do Something" VI that should be after the two-button dialog and before the display result subVI.

Edit again// There appears to be a bug in the VI snippet thing. It dropped the definition of the event. It's the "Do Action" boolean value change event.

-

In that case, I can understand a second event getting queued up. What about the two dialogs though?

-

Well here's a problem that I'm having trouble with and I was wondering if you guys had any insight. Essentially a front panel event is being triggered twice. OK, so you say it's probably just queued up a second one. However, there is immediately a primitive two-button dialog that must be confirmed, and then a subVI with a confirm/deny Boolean pair set to 'latch when released'. These buttons don't have any key bindings that I'm aware of, and key focus is elsewhere. Both of these prompts must be confirmed for the action contained in the event to execute and be recorded as successful. (The first one is a confirmation to execute; the second one displays data and prompts for confirmation that it occurred.)

Now, we've had one of these screens get confirmed unexpectedly before, and we thought it had been traced down to an MS update. We updated the machines and didn't see the problem again. Today, it appears as if it executed a second event and confirmed both screens without any action from the user. Also, the cursor is set to busy in the event case, which should prevent another click. I will point out that this case is not set to lock the front panel. I don't think that should be an issue, and I cannot imagine how that would trigger both confirm buttons on the dialogs, but there are certainly much smarter people than myself who are hopefully reading this.

I'll probably be on the phone with NI first thing Monday morning, but any insight you guys have would be tremendously helpful. I doubt that I can post the actual code due to NDA, but if needed I can mock something up. I tried to describe things fairly thoroughly though.

Darkside crosspost: http://forums.ni.com/t5/LabVIEW/Interesting-intermittent-problem/td-p/1797572

-

Cool. Thanks for the insight guys. It's nice to hear from people who actually use the thing rather than the local NI guy.

-

Please come back and report either way. This could be useful info down the road.

-

^ if so, is it worth the expense to a customer? How is the overhead on the target machine? How accessible and functional is the citadel DB? The features sound great, but nothing that I can't/haven't coded myself.

-

One suggestion (if it's purely UI related) would be to move to LV2011 and display the visibility checkboxes.

-

Without trying to state the obvious, why don't you simply get an external GPS receiver with a serial interface or equivalent?

-

Hey guys,

Thanks ! ... I got the XY 2D scan using stepper motors done! ...

Now the major step: how can I read in the values that I get from the photosensor connected to the DAQ board and put them into a 2D array?

This 2D array can later be used to display images.

I need a start on this. Please help!

The advice you are getting is non-specific for two reasons: your questions are very broad, and it feels as if you are asking us to do the work for you. If you can present targeted questions as you work through the problem, you will get much more relevant information than "read the manuals."
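As a starting point for the structure only: a raster scan is a nested loop that moves the stage, takes one reading, and stores it at that (row, column). A minimal Python sketch, assuming hypothetical `move_to` and `read_sample` callables that stand in for your stepper-motor and DAQ code (they are not LabVIEW or DAQmx functions).

```python
from typing import Callable, List


def raster_scan(nx: int, ny: int,
                move_to: Callable[[int, int], None],
                read_sample: Callable[[], float]) -> List[List[float]]:
    """Step through an nx-by-ny grid and store one sample per position.

    image[row][col] holds the reading taken at (x=col, y=row).
    move_to and read_sample are hypothetical stand-ins for real hardware code.
    """
    image = [[0.0] * nx for _ in range(ny)]
    for row in range(ny):
        for col in range(nx):
            move_to(col, row)                # position the stage first
            image[row][col] = read_sample()  # then read the photosensor
    return image


if __name__ == "__main__":
    position = [0, 0]

    def fake_move(x: int, y: int) -> None:
        position[0], position[1] = x, y

    def fake_read() -> float:
        # Fake sensor: the value encodes where the stage is.
        return float(position[0] + 10 * position[1])

    print(raster_scan(3, 2, fake_move, fake_read))
    # [[0.0, 1.0, 2.0], [10.0, 11.0, 12.0]]
```

Moving and then reading at each point keeps every sample tied to a known position; the finished 2D array maps directly onto an intensity graph.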

-

I don't know if you counted me. If not, make that 24.5

-

What is the processor usage while it is running? Could it be maxing out one of the cores and thus killing one of those timed loops that's tied to that core?

-

Both Android and iOS have free texting? No extra data plan needed? Because I don't have a separate monthly bill for my netbook to have internet. Skype is not very system intensive and has desktop notifications. I'm not sure if it is free but google talk has free texting and that can just run in a web browser. I think they have a stand alone application with desktop notifications too but never used it.

Over wi-fi, yes there are apps that have free texting and the new iMessage is free. You do actually have a separate bill for your netbook to have internet, your cable/dsl/whatever bill. The data plan is for use on the 3G network, which isn't usually an issue from the living room couch. Skype charges for text messages, but there are skype apps for phones that would be free to message skype-skype.

-

iOS will never have as much functionality as a computer. That being said, when it comes to tablets, more weight is given to ease of use. The purpose of a tablet is consuming information/media or entertainment. The iPad is successful because it does that out of the box and you aren't burdened with any of the minute details. If you want to be bothered by those details and spend more time customizing your experience, you can go in that direction. I think at one end you start with an iPad that holds your hand and makes sure you have fun, and at the other end you find yourself building a Linux kernel for your netbook and customizing your display drivers weekly because the manufacturer doesn't support Linux.

I have an iPhone and an iPad1. I will never have a mac computer. The difference is that these things serve separate purposes. I use the iPad to surf the internet while watching tv or keep up with football scores. I use my computer to do real things.

-


-

Can't kudo it because it was declined. I see it was pretty popular there and is also popular here on Lava.

When I started using LabVIEW I used icons. Once I gained a lot of experience I switched to the more popular small terminals. Later still I switched back to icons influenced greatly by your arguments in the link you provided.

There just are not enough controls on any of my diagrams to worry much about the space savings. As for straight wires I just gave up long ago. You can't wire the small terminals to the inputs of a multiply prim without bends for example so it makes no difference in that regard. It is impossible to code in LabVIEW without wire bends so I just quit worrying about it. I do try to limit the number of bends to two at most.

Since it is just not possible to avoid wire bends with or without icons, that argument doesn't go very far. The only things I know of that you can wire a vertically compressed stack of small control terminals to without wire bends are another stack of indicators or a Bundle/Unbundle By Name. You can't wire them straight to adjacent subVI terminals except in one ironic situation: if you deselect "View As Icon" on the subVI and drag down the nodes so it looks like a Bundle By Name, or more accurately like an Express VI. How many people knew you have the option NOT to view a subVI as an icon? Does anyone do that?

As for quickly identifying if it is a control or a VI, well they just look like controls and I can immediately tell by where they are located. You just get used to a particular style after a while I guess and anything else just looks weird and wrong.

There are two situations where I regularly show small terminals. If there is a reason I need to put the terminal in a loop or something or if it is not on the connector pane then I do not show it as an icon. I don't know where I picked that up and just realized that's what I do.

Small terminals are almost required when using an FPGA read/write control node with several controls. It could be useful to have RT code default to non-icons.

-

Although it is true that the loop that handles messages does wait for the next message, each Actor may have one or more additional control loops in its Actor Core.vi. I suggest that the reason you don't see anything like what you suggest in the Actor Framework is the existence of these separate control loops. If I needed any "do this until you hear otherwise" behavior in one of my actors, I would encode it in the control loop. An example of that is in this Actor Framework demo in the Temperature Sensor class, where the sensor polls for the current sensor value every five seconds in the absence of any other message.

Ah. Thanks AQ.
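For anyone thinking in text languages, the split AQ describes (a message loop that only waits for messages, plus a separate control loop that keeps working on its own) might look roughly like this in Python. This is an analogy under stated assumptions, not the Actor Framework: the class name, `read_sensor`, and the `"read_now"`/`"stop"` messages are hypothetical, and threads stand in for parallel LabVIEW loops.

```python
import queue
import threading
import time


class PollingActor:
    """Hypothetical actor: a message loop plus an independent control loop.

    The message loop only waits for messages; the control loop polls the
    sensor every poll_interval_s seconds whether or not messages arrive.
    """

    def __init__(self, read_sensor, poll_interval_s=5.0):
        self.inbox = queue.Queue()
        self.read_sensor = read_sensor
        self.poll_interval_s = poll_interval_s
        self.readings = []
        self._stop = threading.Event()
        self._lock = threading.Lock()

    def _record(self):
        value = self.read_sensor()
        with self._lock:
            self.readings.append(value)

    def _control_loop(self):
        # Event.wait returns False on timeout and True once stop is set,
        # so this polls on every timeout until the actor is told to stop.
        while not self._stop.wait(self.poll_interval_s):
            self._record()

    def _message_loop(self):
        while True:
            msg = self.inbox.get()  # blocks until a message arrives
            if msg == "stop":
                self._stop.set()    # also ends the control loop
                return
            if msg == "read_now":
                self._record()

    def run(self):
        control = threading.Thread(target=self._control_loop, daemon=True)
        control.start()
        self._message_loop()
        control.join()


if __name__ == "__main__":
    actor = PollingActor(read_sensor=lambda: 21.5, poll_interval_s=0.1)
    worker = threading.Thread(target=actor.run)
    worker.start()
    time.sleep(0.35)  # no messages sent; the control loop keeps polling anyway
    actor.inbox.put("stop")
    worker.join()
    print(len(actor.readings), "readings")
```

The "keep doing this until told otherwise" behavior lives entirely in the control loop, so the message loop can stay a plain blocking dequeue.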

-

You could also create an INI file. LabVIEW's API for INI files will parse the text for you.

I would recommend this approach, as it lends itself to manual editing of the file more than the XML file does; i.e., the resultant file is far more readable. It can take a few more steps in the actual code, but you only have five channels, and it appears as if the file is going to change more frequently than the software.
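To show why the INI route stays hand-editable, here is a rough text-language equivalent using Python's standard `configparser` (not the LabVIEW Configuration File VIs). The channel names and keys (`enabled`, `scale`, `units`) are made up for illustration.

```python
import configparser
import os
import tempfile


def write_channels(path, channels):
    """Write one [section] per channel so the file stays easy to hand-edit."""
    config = configparser.ConfigParser()
    for name, settings in channels.items():
        # ConfigParser stores strings, so convert every value on the way out.
        config[name] = {key: str(value) for key, value in settings.items()}
    with open(path, "w") as f:
        config.write(f)


def read_channels(path):
    """Read the file back, converting each key to its expected type."""
    config = configparser.ConfigParser()
    config.read(path)
    return {
        name: {
            "enabled": config.getboolean(name, "enabled"),
            "scale": config.getfloat(name, "scale"),
            "units": config.get(name, "units"),
        }
        for name in config.sections()
    }


if __name__ == "__main__":
    channels = {
        f"Channel {i}": {"enabled": True, "scale": 1.0, "units": "V"}
        for i in range(1, 6)
    }
    path = os.path.join(tempfile.mkdtemp(), "channels.ini")
    write_channels(path, channels)
    with open(path) as f:
        print(f.read())  # plain-text sections, one per channel
    assert read_channels(path) == channels
```

The typed getters on the read side are the "few more steps" in code; in exchange, someone can open the file in Notepad and change one channel's scale without touching XML.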

-

Second is the "message handler," shown below. Most people look at it and think it is a QSM. If you look closely you'll notice I don't extend the queue into the case structure as many QSMs do. I don't allow the message handler to send messages to itself because that's a primary cause of broken QSMs.

More importantly, it's a reminder to me that each message must be atomic and momentary. Atomic in that each message is independent and doesn't require any other messages to be processed immediately before or after this message, and momentary in that once the message is processed it is discarded. It's not needed anymore. (Tim and mje do the same thing I think.)

This was the disconnect between my understanding and your implementation that I was expecting. With the assumption that all messages are atomic and momentary, of course there is no need to do anything but wait for a new message to execute. Also, I may have unintentionally limited the original question to 'state' machines. I've found that this approach can be useful with unreliable network communications, where the information being transmitted is simple control signals that can tolerate loss. Things time out every once in a while; just ignore it and continue with the previous commands. RT targets and such. This may open the door to a myriad of ways to handle such situations.

I suppose the point of this thread is that this approach seems almost obvious to me, but I don't see it in other places. So is that because it's obvious to everyone else, or there is something wrong with it?
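A loose text analogy of the message handler described in the quote above (not Daklu's actual code, which is a LabVIEW diagram): the loop only dequeues, dispatches, and discards, and never enqueues to itself. The message names and the `handlers` dictionary are hypothetical.

```python
import queue


def message_handler(inbox, handlers):
    """Handle messages one at a time until a "stop" message arrives.

    The loop itself never enqueues anything, so it cannot queue up follow-on
    states for itself. Each message is handled on its own (atomic) and is
    gone once handled (momentary). Returns how many messages were handled.
    """
    handled = 0
    while True:
        name, payload = inbox.get()  # wait for the next message
        if name == "stop":
            return handled
        handlers[name](payload)     # act on it; nothing is kept afterward
        handled += 1


if __name__ == "__main__":
    log = []
    q = queue.Queue()
    for msg in [("set_rate", 100), ("start", None), ("stop", None)]:
        q.put(msg)
    count = message_handler(q, {
        "set_rate": lambda rate: log.append(("rate", rate)),
        "start": lambda _: log.append(("started", None)),
    })
    print(count, log)  # 2 [('rate', 100), ('started', None)]
```

Because `inbox.get()` blocks, this loop does nothing between messages, which is exactly the behavior the thread is asking about.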

-

I've talked to my local DSM, and to Nancy at NIWeek last year. Getting more people to take the advanced courses is the issue. Either that or Nancy just doesn't want to come to hot/humid or cold/rainy DC.

I guess I should ping the DSM again.

Oh, and the 2nd edition of "LabVIEW: Advanced Programming Techniques" is from 2006. Do you think they're working on a third edition??

Not sure if this is the same thing under a different name (I don't have the material you mention), but "Advanced Architectures in LabVIEW" is from 2009. Even if it's not the same, I'm sure a lot overlaps.

-

I don't think there's anything inherently wrong with it. It's a clever improvement over QSM implementations I've seen where the default case has an internal case structure to handle the many different "continue doing the same thing until told otherwise" situations.

IMO, what you have is definitely an improvement but--as the bolded text indicates--you're still using a QSM. Some people swear by them. Personally I find them more trouble than they're worth.

[Even though I don't use and (still) don't like QSMs, that seems to be enough of an improvement to deserve a star.]

I mentioned your slave loop architecture because, from what I saw when getting into it, the execution loop waits to dequeue a 'message' and then acts upon it. Is this not quite similar to a QSM? It's entirely possible that I've missed something important about the architecture, as I've only had time to give the thread and the code a cursory look. I also brought up the Actor Framework because, while even more abstracted from a traditional LV architecture, it seems to me that the actors are simply waiting for messages as well. Objects/text/enums/variants/whatever, it seems to me that you don't necessarily want the other code to simply wait for a new message all the time. Now I'm probably beating a dead horse, but I did want to explore how this affects the other architectures that are more complex than just a QSM.

Edit//

Oh! Thanks for the stars

-

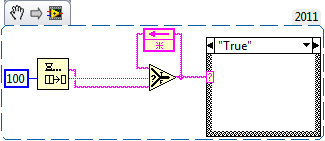

See snippet below.

I have used this approach a few times to dequeue elements while they exist and to simply maintain the previous state when they don't. However, I cannot remember seeing this in any high level asynchronous communication approaches. Most everything I see only reacts to additional messages being sent to the 'slave' process (Daklu's slave loops, actor framework, even QMH). Correct me if I'm mistaken of course. Perhaps I'm over thinking this, but is there anything fundamentally wrong with an approach like this? Like I said, I've used this basic idea a few times before and it has served me well.

The use case is when you want the other loop to continue it's previous command when nothing is left in the queue rather than pausing. A simple example could be a DAQ loop that is restartable/reconfigurable. You can send it all the configuration commands which will be transferred without loss, but then when you start the acquisition it simply continues acquiring without any help from the other loop.
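A minimal Python sketch of that "drain the queue, otherwise keep doing the last thing" loop, assuming a `queue.Queue` of `(command, argument)` tuples and a hypothetical `acquire` callback standing in for the DAQ read. `get_nowait()` plays roughly the role of a Dequeue Element with a 0 ms timeout; the fixed iteration count replaces a real stop condition only to keep the example short.

```python
import queue


def acquisition_loop(commands, acquire, iterations):
    """Run a fixed number of loop iterations.

    Each iteration first drains every pending command (so no configuration
    message is lost), then keeps doing whatever the last commands said.
    """
    state = {"running": False, "rate": None}
    samples = []
    for _ in range(iterations):
        while True:
            try:
                cmd, arg = commands.get_nowait()
            except queue.Empty:
                break  # nothing left: keep the previous state
            if cmd == "configure":
                state["rate"] = arg
            elif cmd == "start":
                state["running"] = True
            elif cmd == "stop":
                state["running"] = False
        if state["running"]:
            samples.append(acquire(state["rate"]))  # continue without new messages
    return samples


if __name__ == "__main__":
    q = queue.Queue()
    q.put(("configure", 1000))
    q.put(("start", None))
    print(acquisition_loop(q, acquire=lambda rate: rate, iterations=3))
    # [1000, 1000, 1000]
```

Draining all pending commands per iteration (instead of one per iteration) is a design choice: it keeps a burst of configuration messages from lagging behind the acquisition.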

-

LV 2011 added the ability to place checkboxes on the legend to enable/disable the display of different plots. IMO this should have been done a long time ago, but it's the simplest way to do what you want.

-

That makes sense, but what happens when a noob wires something out of a case structure? He gets a broken arrow, which pretty soon makes it obvious that the output tunnel either has to be connected from every case or be set to use default for unwired cases.

But I guess there could be an easy solution for it. While a shift register could be automatically added when a ref or error is wired, maybe it could also be automatically wired through the loop to the other end of the shift register.

Another option could be to add this feature, but turn it off by default so noobs don't run into it.

I would also again emphasize the point I was making: when they wire error wires out of a loop and the tunnel auto-indexes, a broken wire appears when they run it into another VI. Of course, you could argue that n00bs don't use error wires...

Interesting, intermittent problem

in User Interface


As I mentioned, in the past we have had hints of this behavior when one of the dialogs would get immediately dismissed or the event would fire right away after the completion of the first event. I was able to witness that while remotely logged into the machine. Never did it make it past more than one window, and I don't think it ever skipped the two-button dialog, but I could be wrong.

To counter that, we updated Windows (there was a .NET fix) and disabled tap-to-click on the touchpad. Also, while this machine was exhibiting this behavior we plugged in an external mouse and it stopped. Yup, that's a big can of worms, and if it's related to the touchpad I'm not overly optimistic about being able to find something on the internet.

As far as this incident goes, it only happened once, but that was nearly catastrophic for us (there's a reason we have two confirmation screens). We have two people heading to the location of this laptop (Canada), and we can try to reproduce the issue soon.

If by "human interface issue" you mean an operator covering his... then we have considered it and have not ruled it out completely. However, the timestamps between the logged events differ by 25 s and the action takes approximately 22 s, which helps validate the operator's story. It could be possible that we have a combination of the past behavior and an operator reluctant to admit he hit a button twice. Either way the problem still exists. I'll have some more information in a few hours once we get our hands on the machine.