
Posts posted by ensegre

-

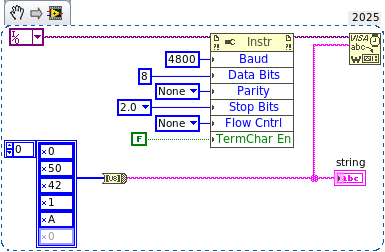

Just to make sure: if you read 5 bytes, you get the first 5 bytes. If you increase the count to 'byte count', you would get a timeout warning if the other program really outputs only 5 bytes, but you would see any trailing bytes if there were some.

Also, right-clicking the string indicator and checking 'Hex Display' may come in handy.
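To see why the hex view helps, here is a minimal Python sketch (not LabVIEW; `read_bytes` and `hex_display` are hypothetical names) of a fixed-count read that leaves trailing bytes queued, and of a hex rendering that makes non-printable bytes such as CR, LF or NUL visible:

```python
def hex_display(data: bytes) -> str:
    """Show bytes as two hex digits each, like a string indicator in 'Hex Display' mode."""
    return " ".join(f"{b:02X}" for b in data)


def read_bytes(buffer: bytearray, count: int) -> bytes:
    """Hypothetical stand-in for a port read: take up to `count` bytes, leave the rest queued."""
    chunk = bytes(buffer[:count])
    del buffer[:count]
    return chunk


received = bytearray(b"FREQ\r\n\x00")   # made-up reply with a trailing LF and NUL
first = read_bytes(received, 5)
print(hex_display(first))               # 46 52 45 51 0D
print(hex_display(bytes(received)))     # 0A 00 (still waiting in the buffer)
```

With a count of 5, the trailing `0A 00` stays in the buffer for the next read, which is exactly what the hex view would reveal and a normal display would hide.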

-

Can't say without seeing the rig's documentation (if it is any good at all) and some amount of trial and error. These old devices may be quirky when it comes to response times (the internal µP has to interpret and execute the command) and protocol requirements. Are you sure the message does not require an EOT or a checksum of some sort? Does the rig respond with some kind of ACK or error, which you could read back? Are you sure of your BCD encoding? Wikipedia lists so many flavours of it: https://en.wikipedia.org/wiki/Binary-coded_decimal

If there are read commands returning a known value, like the current frequency or the radio model, I would start with them, to make sure that the handshake works as expected.
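As an illustration of how much the BCD flavour matters, a minimal Python sketch of packed BCD (two decimal digits per byte) in the two byte orders commonly encountered. Which one a given rig expects, and whether it wants a checksum at all, has to be checked against its documentation; `sum8` is just one hypothetical example of "a checksum of some sort":

```python
def to_packed_bcd(value: int, n_bytes: int, little_endian: bool = False) -> bytes:
    """Pack a non-negative integer as BCD, two decimal digits per byte.

    little_endian=False: most significant digit pair first.
    little_endian=True:  least significant digit pair first.
    """
    if value < 0:
        raise ValueError("this sketch handles non-negative values only")
    digits = str(value).zfill(2 * n_bytes)
    if len(digits) > 2 * n_bytes:
        raise ValueError("value does not fit in the requested number of bytes")
    packed = bytes(
        (int(digits[i]) << 4) | int(digits[i + 1]) for i in range(0, len(digits), 2)
    )
    return packed[::-1] if little_endian else packed


def from_packed_bcd(data: bytes, little_endian: bool = False) -> int:
    """Inverse of to_packed_bcd; rejects nibbles above 9."""
    if little_endian:
        data = data[::-1]
    value = 0
    for byte in data:
        high, low = byte >> 4, byte & 0x0F
        if high > 9 or low > 9:
            raise ValueError(f"not a BCD byte: {byte:#04x}")
        value = value * 100 + high * 10 + low
    return value


def sum8(message: bytes) -> int:
    """Hypothetical checksum: 8-bit sum of all bytes (one of many possible schemes)."""
    return sum(message) & 0xFF


freq = 14250000
print(to_packed_bcd(freq, 4).hex(" "))                      # 14 25 00 00
print(to_packed_bcd(freq, 4, little_endian=True).hex(" "))  # 00 00 25 14
```

The same frequency gives two entirely different byte sequences on the wire, so a wrong guess about the flavour looks to the rig like a nonsensical command.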

-

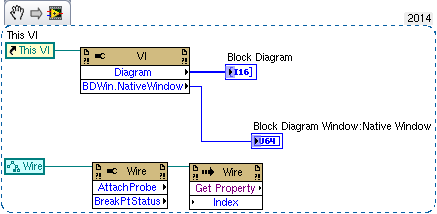

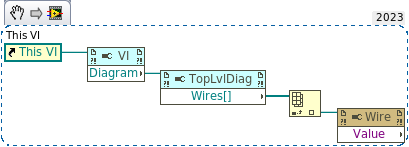

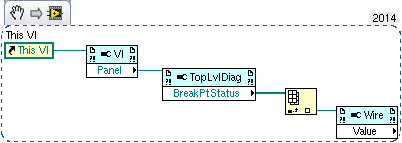

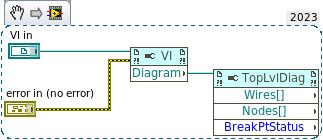

Rolf is correct, I forgot to enable scripting in the LV19 I used to check.

OTOH, in LV14 "Block diagram" is indeed present, but it is an array of I6 rather than a ref. I can't guess what its intended use was back then; maybe it was just a half-baked feature.

-

On 4/9/2025 at 11:10 AM, ManuBzh said:

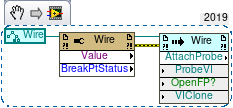

Read values flowing through wires (yes, I’m aware this involves using private properties — if anyone knows from which version of LabVIEW these became accessible, I’d be glad to know!).

Since you asked, here are my findings:

- LV23: I can create the attached snippet (don't know if it works) and save it for LV14 (also attached). All the pull-down menus show the relevant properties.
- LV21 and LV19: opening the version saved for LV14 shows a correct image, but the first property node lacks the "Block Diagram" entry in its pull-down menu, and further properties have no menu. ETA: and they show the relevant pull-down menus when "Show VI scripting" is checked.
- LV14: the first property node menu *has* a "Block Diagram" entry, but the further properties don't match LV23.

-

I thought it had to be read as "the *top-level node* of the BD of that particular instance", not as "the BD of the top (prototype) VI", but I haven't really tried... By the way, for my own education: how do you get the values flowing on a wire?

-

There was this thing about getting the VI ref of the actual clone instance; I remember asking about that in the past (it must be somewhere here on LAVA), and besides, there is this nice VI commander tool (forgot its name, Windows only) which obviously uses it. Once I have a moment I'll look for it and report back.

ETA:

, of course!

-

It's a bit sad to have to say this now that most of the traffic on this forum is about leaked videos, money rituals and human sacrifices, but isn't this the part where someone reposts links to the basic LabVIEW training resources on the NI site?

-

My 2c: I guess the problem with an expiration date on an offline computer is that the executable has no means to verify that the users didn't set the clock back to extend their usage indefinitely.

If you don't expect them to be pro hackers, what about protection by simple obfuscation? E.g. the cumulative time the program has run, saved periodically in obfuscated form in an essential key file disguised as "configuration", with some mechanism that makes it harder to get around the limit just by copying an older file over it?
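A minimal Python sketch of the obfuscated runtime counter: the cumulative run time is stored together with a truncated HMAC keyed by a secret embedded in the executable (`SECRET` is a hypothetical placeholder), so that hand-editing the number is detected. This alone does not stop someone from copying an older, still valid file over the current one; that needs the extra mechanism mentioned above.

```python
import base64
import hashlib
import hmac
import struct

SECRET = b"hypothetical-secret-baked-into-the-exe"  # placeholder, not a real key


def encode_runtime(seconds: int, secret: bytes = SECRET) -> str:
    """Serialize the cumulative run time plus a truncated HMAC, as base64 text."""
    payload = struct.pack(">Q", seconds)                            # 8-byte big-endian counter
    tag = hmac.new(secret, payload, hashlib.sha256).digest()[:8]  # 8-byte integrity tag
    return base64.b64encode(payload + tag).decode("ascii")


def decode_runtime(blob: str, secret: bytes = SECRET) -> int:
    """Recover the run time; raise ValueError if the file was edited by hand."""
    raw = base64.b64decode(blob)  # binascii.Error is a subclass of ValueError
    if len(raw) != 16:
        raise ValueError("corrupt key file")
    payload, tag = raw[:8], raw[8:]
    expected = hmac.new(secret, payload, hashlib.sha256).digest()[:8]
    if not hmac.compare_digest(tag, expected):
        raise ValueError("key file has been tampered with")
    return struct.unpack(">Q", payload)[0]
```

The program would periodically write `encode_runtime(previous + elapsed)` into the "configuration" file and refuse to run once the decoded value exceeds the allowed total.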

-

No problem for me either, neither today nor on past occasions when I consulted it. But all in all, I'm on it quite seldom.

-

Coming back to report.

Scavenging the net, I've found essentially three sets of connectors between Redis and LV:

1. What can be downloaded from https://forums.ni.com/t5/Example-Code/REDIS-database-LabVIEW-toolkit/tac-p/3508611 taking into account the corrections listed in the thread. This seems to be the most widespread, considering also that it was shown as an option at the CERN LV user group this year (see https://indico.cern.ch/event/1388470/contributions/5911487/attachments/2843544/4971934/lugm_LabVIEW_at_CERN.pdf, slide 22). It originally dates from 2014.

2. Nick Folse's https://github.com/tauterra/Redis-Client-for-LabVIEW from about three years ago, which according to its author is no longer developed. I found a couple of flaws, easily corrected.

3. https://github.com/Bas-vE/LV-Redis , which claims to be an evolution of 1., promoted to LVOOP. The most recent of the three.

The philosophy of the three toolboxes differs somewhat from one to the other: the first is more of the "one VI for each Redis command" kind, while the others perhaps put more emphasis on the transaction protocol than on the completeness of the implemented commands. Redis's huge command set has also expanded over the years in question. However, I found in all three something which looks to me like an obvious oversight: TCP client connections are opened and then closed for each elementary operation. While that might have only a minor performance impact, I found that the approach prevents Redis' MULTI pipelining, since MULTI state lives on a single connection.

I forked 1. into https://github.com/EastEriq/redis-in-labview and 2. into https://github.com/EastEriq/Redis-Client-for-LabVIEW to delve into them. In the end, I decided to adopt my fork and augmentation of 1. in my project, but only after modifying it so that TCP connections can be kept open throughout the client session.
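For reference, a minimal Python sketch (not LabVIEW) of why a persistent connection matters: Redis keeps the MULTI state per connection, so MULTI, the queued commands and EXEC must all travel over the same socket. The function names are hypothetical; the encoding is the standard RESP array-of-bulk-strings form. Reading and parsing the replies is left out.

```python
import socket


def encode_command(*args) -> bytes:
    """Encode one Redis command as a RESP array of bulk strings."""
    parts = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode("utf-8")
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def encode_transaction(commands) -> bytes:
    """Wrap several commands between MULTI and EXEC."""
    payload = [encode_command("MULTI")]
    payload += [encode_command(*cmd) for cmd in commands]
    payload.append(encode_command("EXEC"))
    return b"".join(payload)


def send_transaction(sock: socket.socket, commands) -> None:
    """Write the whole transaction on an already-open, long-lived connection.

    The replies (+OK, one +QUEUED per command, then the EXEC array) must be
    read and parsed from the same socket; that part is omitted here.
    """
    sock.sendall(encode_transaction(commands))
```

Opening the socket once, e.g. with `socket.create_connection(("127.0.0.1", 6379))`, and passing it to every call is what keeps the connection alive for the whole session; opening and closing a socket per command would split MULTI and EXEC across different connections.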

-

IIUC, the OP put an AI node (which has a variable execution time) in sequence with a fixed delay inside a while loop, and then complains that the while loop does not repeat at a rate of 1/delay. OTOH, s/he says that s/he doesn't really need the AI. I'd answer that, complexity permitting, since this is a deterministic target, the delay should run concurrently with the variable-time code, and it should be longer than the execution time of that variable part. Then each iteration of the while loop would be guaranteed to take exactly as long as the delay.

Another option, if memory serves, could be an SCTL (single-cycle Timed Loop) tied to a secondary time reference running at a submultiple of the master clock. If the code is too complex to execute within the prescribed timing, the compiler will then complain.

Can't say about the actual case, but often complex code can be simplified by factoring out, or by pipelining.
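The "delay concurrent with the variable-time code" idea can be illustrated with a desktop Python sketch, using a thread as a stand-in for a parallel branch of the loop diagram. Desktop timing is of course not deterministic like an FPGA target; the point is only that the iteration lasts max(work, delay) rather than work + delay. All names are hypothetical.

```python
import threading
import time


def run_iteration(work, delay_s: float) -> float:
    """One loop iteration with the delay running in parallel with `work`.

    The iteration ends when both branches are done, so it lasts
    max(work time, delay_s): a constant period as long as work < delay.
    """
    start = time.perf_counter()
    branch = threading.Thread(target=work)
    branch.start()
    time.sleep(delay_s)  # the fixed delay, concurrent with the work
    branch.join()        # wait for the variable-time branch as well
    return time.perf_counter() - start


def run_iteration_sequential(work, delay_s: float) -> float:
    """The OP's arrangement: work, then delay; the period becomes work + delay."""
    start = time.perf_counter()
    work()
    time.sleep(delay_s)
    return time.perf_counter() - start


def variable_work():
    time.sleep(0.05)  # stand-in for the AI read with variable duration
```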

-

AFAIR, from my very limited experience with a single FPGA model, AI and AO conversions may take a long, variable number of clock cycles (perhaps depending on routing, of the order of several tens of cycles), and therefore cannot sit in an SCTL. Don't take me literally though: I might be wrong, and that may not be true for all FPGA boards.

-

Ah, and when you mention two computers: connected how? Is there some network gear along the way which filters packets and blocks connections on unauthorized ports?

-

It sounds like port 45321 on your computer is already in use by some process (not necessarily LabVIEW). Besides, 45321 is still in the IANA registered (user) range, 1024-49151, whereas 58411 is already in the dynamic/private range, 49152-65535.

https://www.baeldung.com/cs/default-port-network-service

On top of that, you may have lingering connections from a previous run.
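A quick way to check both points, as a Python sketch: `port_is_free` (a hypothetical helper) tries to bind a TCP socket to the port, which fails if another process holds it or, typically on Linux, if a socket from a previous run is still lingering in TIME_WAIT; `iana_range` classifies the port according to the IANA ranges defined in RFC 6335.

```python
import socket


def iana_range(port: int) -> str:
    """Classify a port according to the IANA ranges of RFC 6335."""
    if not 0 <= port <= 65535:
        raise ValueError("port out of range")
    if port <= 1023:
        return "system (well-known)"
    if port <= 49151:
        return "user (registered)"
    return "dynamic/private"


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Try to bind a TCP socket to the port; failure means something still holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            return False
        return True


for p in (45321, 58411):
    print(p, iana_range(p), "free" if port_is_free(p) else "in use")
```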

-

Tools/Profile/Show buffer allocations...

-

Well, NSVs are out of the question here, first because it's a distributed Linux system, second because of their own proven merits 😆...

The background is this, BTW. We have 17 PCs up and running as of now, expected to grow to 40-ish. The main business logic, involving the production of control process variables, is done by tens of Matlab processes, for a variety of reasons. The whole system is a huge data producer (we're talking TBs per night), but the data is well handled by other pipelines. What I'm concerned with here is monitoring/supervision/alerting/remediation. Real-time requirements are not strict; latencies of the order of seconds could even be tolerated. Logging is a feature of any SCADA, but it's not the main or only goal here; this is why I'd be happy with a side Tango (or whatever) module dumping to a historical database, but I would not primarily consider a model of "first dump everything to local SQL databases, then reread, merge and ponder the data". I think local, in-memory PV stores, local first-level remediation clients, and centralized system health monitoring are the way to go.
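To make the "local, in-memory PV store with first-level remediation" idea concrete, a minimal Python sketch; `LocalPVStore` and `Rule` are hypothetical names, not part of Redis, EPICS or Tango. Each host keeps the latest value and timestamp of its PVs, fires local remediation actions when a rule matches, and exposes a snapshot that a central health monitor could poll.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    """First-level remediation: when `condition` holds for `pv`, run `action` locally."""
    pv: str
    condition: Callable[[float], bool]
    action: Callable[[str, float], None]


@dataclass
class LocalPVStore:
    """In-memory store of the latest value and (monotonic) timestamp of each PV."""
    values: Dict[str, Tuple[float, float]] = field(default_factory=dict)
    rules: List[Rule] = field(default_factory=list)

    def put(self, name: str, value: float) -> None:
        self.values[name] = (value, time.monotonic())
        for rule in self.rules:
            if rule.pv == name and rule.condition(value):
                rule.action(name, value)

    def snapshot(self) -> Dict[str, Tuple[float, float]]:
        """What a central health monitor could poll: value and age in seconds per PV."""
        now = time.monotonic()
        return {name: (value, now - stamp) for name, (value, stamp) in self.values.items()}
```

In a real deployment the snapshot would be published through whichever PV ecosystem gets chosen; here it is just a dict.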

As for the Jenga tower, the mix of data producers is a fact of life, but it's not as if EPICS or Tango came without a proven reliability pedigree! And of course I'd choose only one ecosystem; I'm at the stage of choosing which.

ETA: as for redis I ran into this. Any experience?

-

Reviving this thread. I'm looking for a distributed PV solution for a setup of some tens of Linux PCs, each one writing about ten tags at a rate of a few per second, where the writing will mostly be done by Matlab bindings, and the supervisory/logging/alerting whatnot by clients written in a variety of languages, LV not excluded. OSS is not strictly mandatory but is essentially part of the culture.
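For a sense of scale, a back-of-the-envelope estimate of the aggregate write rate, assuming 40 PCs, 10 tags each, and 3 updates per second standing in for "a few per second":

```latex
R \approx N_{\mathrm{PC}} \times N_{\mathrm{tags}} \times f
  \approx 40 \times 10 \times 3\,\mathrm{s}^{-1}
  = 1200~\text{updates/s}
```

That is on the order of a thousand small updates per second in total, a modest load for any of the candidate systems; the choice is more likely to hinge on data types, bindings and tooling than on raw throughput.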

I would be looking at Redis, EPICS and Tango-controls (with its annexes Sardana and Taurus) in the first place, but I haven't yet delved into them to compare their merits. In fact, some years ago I had a project where I interfaced with Tango, and I contributed to cleaning up the official set of LV bindings back then. As for EPICS, Linux excludes the usual Network Shared Variables route (or the EPICS I/O module), but I found for example CALab, which looks spot on. Matlab bindings seem to be available for all three. The ability to handle structured data vs. just double or logical PVs may be a discriminant, if one solution is particularly limited in that respect.

Does anyone have recommendations? Is anyone aware of toolkits I could leverage?

-

Under Linux, it is in the Configure tab of the compile worker, see https://lavag.org/topic/22267-installing-ni-lvfpga-un-ubuntu-20/?do=findComment&comment=144693 . Under Windows? At first sight I haven't found anything relevant in C:\Program Files (x86)\National Instruments\FPGA\CompileWorker .

-

I worked in the past on ADAM5000TCP controllers + modules using this nice toolbox, so it is certainly possible. Unfortunately that was years ago; I don't have access to the hardware anymore and I don't remember the details, so I can't be specific. What you describe, though, sounds simply like not querying the right IP, or not having set the netmask correctly on your NIC and in the ADAM (was there an option to do that somewhere? In ADAMview?). Assuming, obviously, that it is not due to a faulty Ethernet cable (that happens, too).

I also think that at some point the Modbus tester had a small bug such that once you got a connection refused (e.g. because of querying a nonexistent register), you had to stop and restart the tester to establish the connection again, but my memory is vague on that. Maybe Porter has corrected it since then.
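For the netmask hypothesis, a small check with Python's standard `ipaddress` module tells whether the device address falls inside the subnet defined by the NIC address and netmask. The addresses below are made up for illustration.

```python
import ipaddress


def same_subnet(nic_ip: str, netmask: str, device_ip: str) -> bool:
    """True if device_ip lies in the subnet defined by the NIC address and netmask."""
    network = ipaddress.IPv4Network(f"{nic_ip}/{netmask}", strict=False)
    return ipaddress.IPv4Address(device_ip) in network


print(same_subnet("192.168.1.10", "255.255.255.0", "192.168.1.50"))  # True
print(same_subnet("192.168.1.10", "255.255.255.0", "10.0.0.50"))     # False
```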

Serial Communication Question, Please

in Remote Control, Monitoring and the Internet


i.e. crossover cable vs. null modem cable. But the OP says that the exe succeeds with the command, so that doesn't seem to be the issue here.