ChrisClark

Members
Posts: 22

Profile Information
Gender: Not Telling

LabVIEW Information
Version: LabVIEW 2010
Since: 1990

ChrisClark's Achievements
Newbie (1/14)
Reputation: 0

-

OK, I'm lame: my file write subVI was writing two files in parallel, and I forgot to set it to reentrant. FYI, I was able to get at least 80 MBps with one loop, 10 DAQ reads, and 1 or 2 file writes in the same loop, binary or TDMS. We replaced the NI drive with a WD Scorpio Black, 7200 rpm. I forgot about the benchmark VIs, thanks. cc
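The reentrancy fix above matters because all callers of a non-reentrant subVI share a single instance, so two "parallel" calls actually run one after the other. Here is a rough analogy in Python threads (not LabVIEW): a non-reentrant subVI behaves like a function guarded by one shared lock, and a reentrant one behaves like each caller getting its own copy. The sketch counts how many simulated writes overlap. The names and the 50 ms simulated write are hypothetical.

```python
import threading
import time


class WriteTracker:
    """Tracks how many simulated file writes are in progress at once."""

    def __init__(self):
        self._count_lock = threading.Lock()
        self.active = 0
        self.max_active = 0

    def enter(self):
        with self._count_lock:
            self.active += 1
            self.max_active = max(self.max_active, self.active)

    def leave(self):
        with self._count_lock:
            self.active -= 1


def run_two_writers(reentrant: bool, write_seconds: float = 0.05) -> int:
    """Run two 'file write' calls in parallel and return the peak overlap.

    Non-reentrant: one lock shared by every caller, so calls are serialized.
    Reentrant: no shared lock, so each caller runs independently and they overlap.
    """
    tracker = WriteTracker()
    shared_instance = threading.Lock()  # the single non-reentrant instance

    def do_write():
        tracker.enter()
        time.sleep(write_seconds)  # stand-in for the disk write
        tracker.leave()

    def write_file():
        if reentrant:
            do_write()
        else:
            with shared_instance:
                do_write()

    threads = [threading.Thread(target=write_file) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return tracker.max_active


if __name__ == "__main__":
    print("non-reentrant peak overlap:", run_two_writers(reentrant=False))
    print("reentrant peak overlap:", run_two_writers(reentrant=True))
```

In the non-reentrant case the peak overlap stays at 1. That is why two parallel file writes through one subVI take about as long as running them back to back.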

-

Hi, I need to stream 40 MBps from 10 DIO cards to disk as .tdms on an NI PXIe-8133 (quad-core i7, 1.73 GHz). I understand that 40 MBps is roughly the maximum the SATA drive will do, and I'm getting at least 30 MBps. Is that 40 MBps ceiling a fair assumption? I think I should have a dedicated drive for the data instead of streaming to the controller's drive. RAID is surely overkill. Has anyone connected an external drive to an NI controller through the ExpressCard slot? Any other ideas? I think my producer/consumer code is OK. cc
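For scale: 40 MBps across 10 cards is about 4 MBps per card, and an hour at 40 MBps is about 144 GB. The producer/consumer structure mentioned above keeps the DAQ reads in one loop and hands data blocks through a queue to a single disk-writing loop. The Python sketch below shows only that structure. It writes raw binary rather than TDMS, and the block size, queue depth, and simulated "DAQ read" are hypothetical.

```python
import os
import queue
import tempfile
import threading

NUM_CARDS = 10            # from the post: 10 DIO cards
BLOCK_BYTES = 64 * 1024   # hypothetical size of one read per card
SENTINEL = None           # tells the consumer to stop


def producer(q: queue.Queue, num_reads: int) -> None:
    """Stand-in for the DAQ loop: one read per card per iteration."""
    for _ in range(num_reads):
        for card in range(NUM_CARDS):
            # Hypothetical data; a real loop would call the DAQ read here.
            q.put(bytes([card]) * BLOCK_BYTES)
    q.put(SENTINEL)


def consumer(q: queue.Queue, path: str) -> int:
    """Drain the queue and append every block to one binary file."""
    written = 0
    with open(path, "wb") as f:
        while True:
            block = q.get()
            if block is SENTINEL:
                break
            f.write(block)
            written += len(block)
    return written


def stream(path: str, num_reads: int) -> int:
    """Run producer and consumer concurrently; return bytes written."""
    # A bounded queue makes the producer wait if the disk falls behind,
    # instead of letting memory grow without limit.
    q: queue.Queue = queue.Queue(maxsize=4 * NUM_CARDS)
    result = {}
    writer = threading.Thread(target=lambda: result.update(n=consumer(q, path)))
    writer.start()
    producer(q, num_reads)
    writer.join()
    return result["n"]


if __name__ == "__main__":
    out = os.path.join(tempfile.gettempdir(), "stream_demo.bin")
    n = stream(out, num_reads=5)
    print(n, os.path.getsize(out))
```

With real hardware, a producer that has to wait eventually means a DAQ buffer overflow. So if the queue stays near full, that is a useful sign that the disk, not the acquisition, is the bottleneck.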

-

Hi, you could go through the examples in the examples folder, and there is a manual called Using LabVIEW with TestStand. Definitely call tech support, multiple times a day if you need to. The best way is to take the TestStand I and II classes; you might be able to just purchase the course materials (workbooks and exercises) from NI. TestStand is really a unique (and awesome) product, and it has a higher barrier to getting started than LabVIEW. I think I spent 40 hours just getting a GPIB instrument working when I first started on my own. IMHO it's best to get help, either through customer education (cust ed) or the AEs. cc

-

Used part of my PayPal balance, woo. I figured out how to bill someone with an Amex card through PayPal, but I haven't gotten around to getting the $ back out yet. Another 30 or 40 LAVA BBQs should do it. cc

-

Hi, I can't seem to hold down the Ctrl key and drag, either to expand/scoot the diagram or to make a copy by dragging. Does anyone know the VMware Fusion settings that allow this? Bonus: in Boot Camp, Shift-Ctrl-A does not align. (BTW, I'm going to try out the LV Mac eval.) Thanks cc

-

I want to restrict access to a web page that displays live data from a LV web service. Should I use authenticated HTTP requests as described in tutorial 7749, LabVIEW Web Services Security? If so, do I need to use scripting in the web browser client to create the HTTP requests? The tutorial only describes a client app, not a browser. Or is there some other way to do this, like logging in to the service with an ID and a password on the first request? Or would it be easier to achieve this goal with Remote Panels? Thanks. cc
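The "log in once with an ID and password" option usually works like this: the server checks the credentials on the first request and returns a session token, and the browser then presents that token on every later request (for example, as a cookie). The Python sketch below shows only that generic pattern. It is not the LabVIEW Web Services mechanism from tutorial 7749, and the user table, token lifetime, and function names are hypothetical. A real deployment would also need HTTPS so that the password and token are not sent in the clear.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets
import time

TOKEN_LIFETIME_S = 15 * 60  # hypothetical session length


def _hash(password: str, salt: bytes) -> bytes:
    """Salted, slow password hash so stored hashes are hard to brute-force."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


# Hypothetical user table: user -> (salt, password hash).
_salt = secrets.token_bytes(16)
USERS = {"operator": (_salt, _hash("example-password", _salt))}

SESSIONS: dict[str, tuple[str, float]] = {}  # token -> (user, expiry time)


def login(user: str, password: str) -> str | None:
    """First request: check ID and password, then issue a session token."""
    record = USERS.get(user)
    if record is None:
        return None
    salt, expected = record
    if not hmac.compare_digest(_hash(password, salt), expected):
        return None
    token = secrets.token_urlsafe(32)
    SESSIONS[token] = (user, time.monotonic() + TOKEN_LIFETIME_S)
    return token


def authorize(token: str) -> str | None:
    """Later requests: return the user if the token is known and unexpired."""
    entry = SESSIONS.get(token)
    if entry is None:
        return None
    user, expires = entry
    if time.monotonic() > expires:
        del SESSIONS[token]
        return None
    return user
```

Because a browser attaches cookies automatically, no client-side scripting is needed to sign each request. The trade-off is that the server has to keep and expire session state.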

-

Single-threaded math .dll killing CPU and main VI

ChrisClark posted a topic in Calling External Code

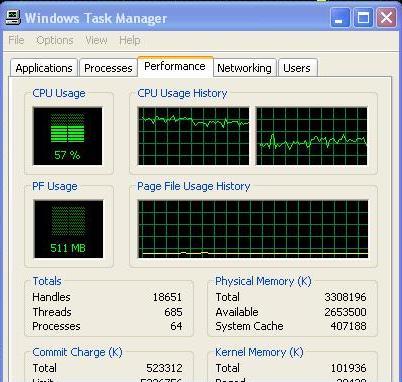

Hi, I've inherited a .dll that analyzes a 2D array containing 4 waveforms, 32 KB to 80 KB of data. The main LabVIEW VI continuously streams the 4 DAQ channels, analyzes them, and displays the results, with everything executing in parallel. At higher sample rates the main VI becomes starved, and processes running in parallel to the .dll slow way down, falling up to 30 seconds behind.

I've attached a graphic of Task Manager that shows an asymmetry between the cores of a Core 2 Duo while the VI is overloaded by the .dll. I always see this asymmetry in Task Manager when the main VI has big latencies. I've seen this exact behaviour before in a different VI on a different project, when LabVIEW math subVIs were coded serially instead of in parallel. Once the VIs were rewired to run in parallel, everything ran smoothly with balanced cores.

My challenge now is to convince someone else to refactor their .dll; they think the best approach is to optimize the single-threaded .dll code to make it run faster. Have I listed all my options below? What is my best argument to convince all the stakeholders to go with a solution that balances the analysis CPU load across cores? (And is this really the best direction to take?) Thanks, cc

Options:
1. Port the .dll to LabVIEW.
2. Refactor the .dll to be multithreaded and run on multiple cores in a balanced way.
3. Mess around with the priority of the subVI containing the offending .dll.
4. Refactor the .dll to work faster, but still run in only one thread on one core.

-
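Option 2 in the .dll post above works when each waveform's analysis is independent of the others. In that case the 2D array can be split by channel and each row analyzed on its own core. Below is a minimal Python sketch of that split-and-merge structure; `analyze_channel` (here just RMS and peak) is a hypothetical stand-in for whatever the .dll computes. In CPython, thread-pool workers only run truly in parallel when the per-channel work releases the GIL, as native .dll code generally does, so this sketch shows the decomposition rather than a speedup. In LabVIEW, it is also worth checking whether the Call Library Function Node is set to "Run in UI thread" instead of "Run in any thread". The latter is only safe if the .dll is thread-safe.

```python
from __future__ import annotations

import math
from concurrent.futures import ThreadPoolExecutor


def analyze_channel(samples: list[float]) -> dict:
    """Hypothetical per-waveform analysis: RMS and peak absolute value."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    peak = max(abs(x) for x in samples)
    return {"rms": rms, "peak": peak}


def analyze_serial(waveforms: list[list[float]]) -> list[dict]:
    """What a single-threaded .dll does: one channel after another."""
    return [analyze_channel(w) for w in waveforms]


def analyze_parallel(waveforms: list[list[float]], workers: int = 4) -> list[dict]:
    """Split the 2D array by row (channel) and analyze rows concurrently.

    executor.map preserves input order, so results line up with channels.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(analyze_channel, waveforms))


if __name__ == "__main__":
    n = 8192  # hypothetical samples per channel
    waveforms = [[(ch + 1) * math.sin(2 * math.pi * (ch + 1) * i / n)
                  for i in range(n)] for ch in range(4)]
    assert analyze_parallel(waveforms) == analyze_serial(waveforms)
    for ch, r in enumerate(analyze_parallel(waveforms)):
        print(ch, round(r["rms"], 3), round(r["peak"], 3))
```

Because the parallel version returns exactly the same results as the serial one, this structure can be argued for without touching the math itself. Only the scheduling changes.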

A while ago I heard that the GPIB primitives are now just wrappers around VISA functions anyway. You could check out ni.com/idnet for instrument driver development info and style guidelines. cc

-

I've done something like this in the past. It's a pattern where execution sweeps over nested setup variables and runs the same test over and over: REPEAT -> set up all vars, then run test. If you are doing this all in LabVIEW, I prefer to separate the execution from generating the correct commands in the correct sequence, and it sounds like you've already figured that out.

You can generate the correct commands and put them in a cluster array instead of dropping them all onto your state machine queue. You then have a big stack, and your state machine can just pop the next step and execute it. The state machine is flat rather than nested, so you can pause, resume, jump to the end, etc. Each element on the stack could be a cluster of VI name, VISA resource, and a variant of parameters. The state machine would then either use VI Server to load and run each VI, or have a case for each VI.

You could use an LV2-style functional global or an LVOOP class to encapsulate the stack, with methods such as load stack, pop element, reset to start, peek, and check for end. You would run an initialization with the nested loops, or equivalent, to generate the stack and load it into your object.

You mentioned this will take up a lot of memory. With several nested variables you could generate a deep stack, but a cluster array of 10,000 elements and 10 MB of memory doesn't seem too big to me. Clearly, though, you may cross a threshold where it is too much. In that case you could load only the input information into the object (the lists behind "for every voltage, for every temperature, for every channel, for every data rate, take a power measurement") and use a linked list, or some other data structure, with a pointer to where you are in the sequence. Then, instead of popping the next element from a big stack, you would generate the next element one at a time and never have the whole stack in memory. This takes more thought than initializing with nested loops and using lots of memory.

Either way, you just have one class or LV2 global that initializes and pops the next test, all encapsulated. cc
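Both approaches can be sketched outside LabVIEW as a small class with the methods listed above (load, pop, peek, reset, check for end). This is a Python sketch under stated assumptions. The first class precomputes the whole stack, like the nested-loop initialization. The second stores only the sweep lists plus a position and generates each step on demand; it decodes the position as a mixed-radix number instead of using a linked list. The step fields mirror the VI name / VISA resource / parameters cluster from the post, while the sweep values, VI name, and resource name are hypothetical.

```python
from __future__ import annotations

import itertools
from dataclasses import dataclass, field


@dataclass
class Step:
    """One test step, like the cluster of VI name, VISA resource, parameters."""
    vi_name: str
    resource: str
    params: dict = field(default_factory=dict)


SWEEP = {  # hypothetical sweep, outermost loop first
    "voltage": [3.0, 3.3, 3.6],
    "temperature": [-40, 25, 85],
    "channel": [1, 2],
    "data_rate": [250, 1000],
}


def make_step(combo: dict) -> Step:
    return Step("Measure Power.vi", "GPIB0::5::INSTR", combo)  # hypothetical names


class PrecomputedSequence:
    """Nested-loop initialization: 'load stack' builds every step up front."""

    def __init__(self, sweep: dict):
        names = list(sweep)
        self._steps = [make_step(dict(zip(names, values)))
                       for values in itertools.product(*sweep.values())]
        self._pos = 0

    def at_end(self) -> bool:
        return self._pos >= len(self._steps)

    def peek(self) -> Step:
        if self.at_end():
            raise IndexError("sequence finished")
        return self._steps[self._pos]

    def pop(self) -> Step:
        step = self.peek()
        self._pos += 1
        return step

    def reset(self) -> None:
        self._pos = 0


class LazySequence:
    """Keep only the sweep lists and a position; build each step on demand."""

    def __init__(self, sweep: dict):
        self._names = list(sweep)
        self._values = list(sweep.values())
        self._total = 1
        for values in self._values:
            self._total *= len(values)
        self._pos = 0

    def at_end(self) -> bool:
        return self._pos >= self._total

    def peek(self) -> Step:
        if self.at_end():
            raise IndexError("sequence finished")
        # Decode the position as a mixed-radix number; the innermost loop
        # (last sweep variable) varies fastest, matching itertools.product.
        index, combo = self._pos, {}
        for name, values in zip(reversed(self._names), reversed(self._values)):
            index, digit = divmod(index, len(values))
            combo[name] = values[digit]
        return make_step({name: combo[name] for name in self._names})

    def pop(self) -> Step:
        step = self.peek()
        self._pos += 1
        return step

    def reset(self) -> None:
        self._pos = 0
```

Both classes expose the same interface, so the flat state machine does not care which one it is popping from. The lazy version trades a little arithmetic per step for memory that stays constant no matter how deep the sweep is.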

-

Help for using SNMP in LabVIEW

ChrisClark replied to zyh7148's topic in Remote Control, Monitoring and the Internet

If you need SNMPv3 and the agent you are talking to has authentication and/or encryption turned on, writing that code yourself will take some weeks of work. Using Net-SNMP with System Exec.vi seems like your best free option, though I have not tried this personally. SNMP Toolkit for LabVIEW at snmptoolkit.com costs $995.00, but it is a native LabVIEW toolkit with one .dll for MIB compilation and a second .dll for SNMPv3 encryption, and it has been used successfully on many v3 projects.

-
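For the free Net-SNMP route in the SNMP reply above, System Exec.vi would run Net-SNMP's command-line tools. Here is a Python sketch of the same idea: it builds an `snmpget` command line for SNMPv3 with authentication and encryption (authPriv) and runs it as a subprocess. The flags used (`-v 3`, `-u`, `-l`, `-a`, `-A`, `-x`, `-X`) are standard Net-SNMP options. The host, user, passphrases, and protocol choices are hypothetical, as is the assumption that `snmpget` is on the PATH; SHA/AES support depends on how Net-SNMP was built.

```python
from __future__ import annotations

import shutil
import subprocess

SYS_DESCR_OID = "1.3.6.1.2.1.1.1.0"  # standard MIB-II sysDescr.0


def build_snmpget_v3(host: str, user: str, auth_pass: str, priv_pass: str,
                     oid: str = SYS_DESCR_OID,
                     auth_proto: str = "SHA", priv_proto: str = "AES") -> list[str]:
    """Return the argv for an authPriv SNMPv3 GET using Net-SNMP's snmpget."""
    return [
        "snmpget",
        "-v", "3",
        "-u", user,
        "-l", "authPriv",                    # authentication and encryption
        "-a", auth_proto, "-A", auth_pass,   # auth protocol and passphrase
        "-x", priv_proto, "-X", priv_pass,   # privacy protocol and passphrase
        host, oid,
    ]


def snmpget_v3(*args, timeout_s: float = 10.0, **kwargs) -> str:
    """Run snmpget (the System Exec.vi equivalent) and return its stdout."""
    if shutil.which("snmpget") is None:
        raise FileNotFoundError("Net-SNMP's snmpget is not on the PATH")
    argv = build_snmpget_v3(*args, **kwargs)
    result = subprocess.run(argv, capture_output=True, text=True,
                            timeout=timeout_s, check=True)
    return result.stdout.strip()


if __name__ == "__main__":
    # 192.0.2.10 is a documentation-only address; substitute the real agent.
    print(build_snmpget_v3("192.0.2.10", "monitor", "authpass123", "privpass123"))
```

Net-SNMP does all the v3 key localization and encryption, which is the part that would otherwise take weeks. Note that passphrases passed on the command line may be visible to other local users.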

What are my OOP options for LVRT?

ChrisClark replied to ChrisClark's topic in Object-Oriented Programming

JG, thanks for the link. I !@#$%^ searched but did not find anything.

> See here as this was asked before in the OOP forum

My app has an asynchronous part and a deterministic part, so I don't need deterministic classes. I've used the original Endevo LabVIEW 6.1 toolkit before, so I might just go with that. Thanks again. cc

-

I'd like to use a few classes in an embedded app. Is anyone using Endevo GOOP or anything else on LVRT? cc

-

Some "market share" numbers: http://www.macrumors.com/2008/07/02/mac-br...tinues-to-rise/

-

Jeez, seeing how this thread is going, I guess I'd look pretty square if I said I forgot to mention that we've also hooked up USB Zebra and BradySoft printers to the MacBook with LabVIEW for Windoze, and they worked as well. I did send the Steve pic to my wife, who always hassles me for wearing that exact same outfit, but with Mizunos and Asics. Thanks! cc

-

Here's what USB things we hooked up to a MacBook (not Pro) running XP under Boot Camp:

- Atmel AVRISP mkII in-system programmer
- Ember USBLink programmer
- some custom ZigBee radio on a USB stick, maybe SiLabs (?)
- an Ember Insight adapter via Ethernet

Everything worked the first time with LabVIEW, using System Exec and VISA, and is deployed overseas. This was the test I had been waiting for: what happens with resources. I figure I can fall back on Boot Camp in the cases where there is some weirdness with Fusion; I have a friend who says Ctrl-drag copy does not map in Fusion. For development I don't usually connect my laptop to that many resources; VISA and TCP go a long way. I am now waiting for the next rev of MacBook Pros to come out, and after that I will never buy a PC for myself again. I hope the next rev of the 15" will have a higher resolution; the 17" seems like a lot to lug. Whoa, I assumed I could run XP (Fusion or Boot Camp) with two monitors; better check that. I'm watching the MacRumors.com buyer's guide for next-rev news. And it seems like XP should be good for the next couple of years.