Posts posted by Christian_L

Excellent - I look forward to seeing what you come up with

Here it is: http://decibel.ni.com/content/docs/DOC-13146

-

The XML Parser in LabVIEW is based on DOM, so unfortunately it doesn't work in LV RT.

As Paul mentioned, the Read From XML File and Unflatten From XML functions, which use the LV schema, do work in LV RT.

In addition, there are a couple of other toolkits available that work in LV RT and use their own schemas. In the case of GXML, the source code is open, so you can adjust the schema to your needs. However, it is designed to store and load LabVIEW data (clusters), so typically the XML structure will be similar to the data structure of a LabVIEW cluster.

EasyXML: http://jkisoft.com/easyxml/

GXML: http://zone.ni.com/devzone/cda/epd/p/id/6330

I'm not aware of a true generic XML parser that works in LV RT, though you could use the GXML parser code as a start and write one.
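To illustrate what it means for the XML structure to mirror a cluster, here is a minimal Python sketch that maps a nested dictionary (standing in for a nested cluster) to XML elements named after each element's label, and back again. This is not GXML's or EasyXML's actual schema; the element names and the strings-only round trip are simplifying assumptions.

```python
import xml.etree.ElementTree as ET


def cluster_to_xml(label, value):
    """Map a (hypothetical) cluster to XML: one child element per cluster element.

    Nested dicts play the role of nested clusters; everything else is a leaf.
    """
    elem = ET.Element(label)
    if isinstance(value, dict):
        for child_label, child_value in value.items():
            elem.append(cluster_to_xml(child_label, child_value))
    else:
        elem.text = str(value)
    return elem


def xml_to_cluster(elem):
    """Inverse mapping. Leaves come back as strings; a real loader would use
    the cluster's type information to convert them back to numbers, Booleans, etc."""
    if len(elem) == 0:
        return elem.text
    return {child.tag: xml_to_cluster(child) for child in elem}


config = {"Channel": {"Name": "ai0", "Rate": 1000}, "Enabled": True}
root = cluster_to_xml("Config", config)
print(ET.tostring(root, encoding="unicode"))
```

Because the element names come straight from the labels, renaming or reordering cluster elements changes the XML, which is the trade-off of a cluster-shaped schema.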

-

I picked up this discussion on a thread over at NI Community and wanted to provide some input.

Reusing the LabVIEW options (preferences) dialog framework to create your own custom dialog for your application, along the lines of crelf's use case, is fairly simple. In a day or two I will post a couple of VIs and an example that provide a wrapper around PreferencesDialog.vi, along with a slightly updated template for building your own options pages.

Note: I prefer the term options to preferences, so in general I will use that name.

Adding your own pages to the LabVIEW options dialog alongside the existing options pages is a bit more involved, as stated in this thread. If you add your own pages and want to load/save your settings (which obviously you do), you need to explicitly add code to your options page VIs to do this; the framework will not handle it for you. The framework uses the TagSet/PropertyBag refnum for this purpose, but you will not be able to piggyback on it, at least not now.

We are discussing ways to allow LV add-on developers to add their own entries in the LabVIEW options dialog in the future, so stay tuned.

-

I am pretty sure it's along the following lines:

Once the Scan Engine software is deployed to the RT brick, the Scan Engine will constantly update the memory map even if you aren't explicitly calling the variables.

That is why they say not to install the SE software unless you use it, as you will get a performance hit for no reason.

If network publishing is enabled, these variables will be like any other network-accessed shared variable; you can get a hook into them via the SV Engine and use them in your Windows PC application.

Here are some resources you may like to check out:

http://zone.ni.com/d...a/tut/p/id/7338

Correct. Figure 5 in the first link above shows it best. The Scan Engine, once installed on cRIO, always runs regardless of anything else going on on the cRIO. Once you have deployed I/O variables to the cRIO target, they will be hosted by the Scan Engine, even after rebooting the cRIO system. You have to explicitly undeploy or remove them from the Scan Engine using the LV project or Distributed System Manager to get rid of them.

Your host application, using the variable nodes, connects to the Scan Engine on the cRIO using the PSP protocol and reads/writes the I/O variable values. This happens the same way whether the VI is running in the LV IDE or in a compiled EXE.

-

The problem is that I do not have any master time servers on my network other than a GPS-based IEEE 1588 master clock node, which is not supported by Windows network time clients.

You could build a simple SNTP server on your cRIO system and then have Windows sync to it using the built-in NTP client. I haven't tried it, but I think it should work.

There's example code for an SNTP client on ni.com; you'd have to build the opposite end in this case.
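The server end is small because an SNTP reply (RFC 4330) is a single 48-byte packet. Here is a minimal Python sketch of the packet handling, for illustration only; on the cRIO you would build the same logic with LabVIEW's UDP functions. The stratum, precision, reference identifier and the choice of reference timestamp are assumptions, and the sketch presumes the cRIO's own clock is already disciplined by the GPS/1588 master.

```python
import socket
import struct
import time

NTP_EPOCH_OFFSET = 2208988800      # seconds from 1900-01-01 to 1970-01-01
PACKET_FORMAT = "!BBbbII4sQQQQ"    # 48-byte NTP/SNTP header, network byte order


def to_ntp(unix_time):
    """Convert Unix time (float seconds) to a 64-bit NTP timestamp (32.32 fixed point)."""
    seconds = int(unix_time) + NTP_EPOCH_OFFSET
    fraction = int((unix_time % 1) * 2**32)
    return (seconds << 32) | fraction


def build_reply(request, receive_time, transmit_time):
    """Build a server-mode (mode 4) reply to a client request."""
    if len(request) < 48:
        raise ValueError("SNTP request must be at least 48 bytes")
    fields = struct.unpack(PACKET_FORMAT, request[:48])
    client_version = (fields[0] >> 3) & 0x7
    client_poll = fields[2]
    client_transmit = fields[10]    # becomes our originate timestamp
    li_vn_mode = (0 << 6) | (client_version << 3) | 4   # LI = 0, mode 4 = server
    return struct.pack(
        PACKET_FORMAT,
        li_vn_mode,
        1,                      # stratum 1 (assumed: clock synced to the GPS master)
        client_poll,            # echo the client's poll interval
        -20,                    # precision in log2 seconds (assumed value)
        0,                      # root delay
        0,                      # root dispersion
        b"GPS\x00",             # reference identifier (assumed)
        to_ntp(receive_time),   # reference timestamp (assumed: clock just corrected)
        client_transmit,        # originate timestamp
        to_ntp(receive_time),   # receive timestamp
        to_ntp(transmit_time),  # transmit timestamp
    )


def serve(host="0.0.0.0", port=123):
    """Answer SNTP requests forever (binding port 123 usually needs admin rights)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            request, addr = sock.recvfrom(1024)
            receive_time = time.time()
            try:
                reply = build_reply(request, receive_time, time.time())
            except ValueError:
                continue        # ignore malformed packets
            sock.sendto(reply, addr)
```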

-

In the attached VI, I have a table and I'd like to separate or group them. Can someone please help me to do this?

Thanks

Assuming there is a predefined finite list of categories/groups, process each row of the table in a For loop. In the loop create a shift register containing an array of clusters of 2D arrays. When you process each row from the table, determine which group it belongs in, pull out the corresponding cluster from the cluster array (A = index 0, B = index 1, etc.). Add the current row to the 2D array in the selected cluster and place the updated cluster back in the cluster array and pass it back into the shift register. After the loop is done you'll have a cluster array where each element contains one of your group arrays.
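The approach above can be sketched in Python, with a list of row lists standing in for the array of clusters of 2D arrays. It assumes, hypothetically, that the group name is in the first column and that the groups are A, B and C. In LabVIEW you would pull the cluster out with Index Array and put it back with Replace Array Subset; Python can append to the inner list in place.

```python
# Hypothetical fixed list of groups: "A" -> index 0, "B" -> index 1, ...
GROUPS = ["A", "B", "C"]


def group_rows(table, key_column=0):
    """Split a 2D table (list of rows) into one 2D array per group."""
    index_of = {name: i for i, name in enumerate(GROUPS)}
    grouped = [[] for _ in GROUPS]           # plays the role of the shift register
    for row in table:                        # the For loop over the table rows
        group = index_of[row[key_column]]    # raises KeyError for an unknown category
        grouped[group].append(row)           # add the row to that group's 2D array
    return grouped


table = [
    ["A", "1.0"],
    ["B", "2.0"],
    ["A", "3.0"],
    ["C", "4.0"],
]
for name, rows in zip(GROUPS, group_rows(table)):
    print(name, rows)
```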

-

I think of Twitter like RSS. I subscribe to the feeds (people) that I'm interested in hearing from/about. When someone posts a tweet they are not directing it at you, but at their feed, and it's up to you whether you subscribe to that feed or not. I tend to follow more organizations, magazines, etc. than private individuals, so Twitter does become a customized news feed for me.

I do frequently Follow and Unfollow people based on what they are posting and whether I'm interested. If someone tweets too much about their food and being at happy hour, I unfollow. I look for a good signal-to-noise ratio from the people I follow. In some cases I may decide to follow someone for a given time period (like NIWeek) and not follow them the rest of the year.

-

Better. Still not completely there yet, tho ...

A cluster is analogous to a structure in C. It is a compound datatype.

In M-W a cluster is defined as "a number of similar things that occur together".
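To make the C-structure analogy concrete, here is a minimal Python sketch using the standard `ctypes` module, whose `Structure` class declares a C-compatible struct. The three fields are a hypothetical cluster chosen for illustration: like a cluster, the struct bundles elements of different types into one value, and each element is read by name, much as Unbundle By Name does in LabVIEW.

```python
import ctypes


class Measurement(ctypes.Structure):
    """Hypothetical cluster of three elements: Channel (I32), Rate (DBL), Enabled (Boolean)."""
    _fields_ = [
        ("channel", ctypes.c_int32),
        ("rate", ctypes.c_double),
        ("enabled", ctypes.c_bool),
    ]


m = Measurement(channel=3, rate=1000.0, enabled=True)
print(m.channel, m.rate, m.enabled)  # 3 1000.0 True
```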

-

I am thinking big screen TV and who play Versus.

Versus is part of the standard digital package from Time Warner in Austin (channels 470 and 471), so many places should have it.

Didn't the Tour end last week?

-

Programming LabVIEW RT on cRIO is very similar to PXI when it comes to good design, running multiple processes, memory management, communication, etc. The main difference is that the processor is not as fast.

You are correct that if you need to program the FPGA for your application it will add a little complexity to your development process, specifically learning the differences between programming LV FPGA and LV RT/Windows.

If you are using NI analog and digital modules on cRIO you most likely wouldn't need to program the FPGA and can use the ScanEngine instead. The ScanEngine is a process which runs on the cRIO processor and communicates through the FPGA to I/O modules and provides single point access to I/O channels using I/O variables in LV RT. It is limited to lower acquisition rates (<1 kHz) but that should be adequate for the application you describe.

Is cRIO able to deal with a lot of parallel tasks,

You can easily implement parallel tasks, but you need to use good design and interprocess communication, like you already do in LV RT on PXI.
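As a rough illustration of parallel tasks connected by interprocess communication, here is a Python sketch of a producer/consumer pair: one thread stands in for an acquisition loop and another for a logging loop, connected by a bounded queue (loosely comparable to a queue or RT FIFO between parallel loops in LV RT). The loop names, data and queue size are hypothetical; on the cRIO this would be built from LabVIEW loops, not Python threads.

```python
import queue
import threading


def acquisition_loop(out_q, n_samples):
    """Producer: stands in for an I/O loop with a hypothetical data source."""
    for i in range(n_samples):
        out_q.put(i * 0.5)        # e.g. a scaled reading; blocks if the queue is full
    out_q.put(None)               # sentinel: tells the consumer to stop


def logging_loop(in_q, results):
    """Consumer: handles data at its own pace without blocking the producer."""
    while True:
        item = in_q.get()
        if item is None:
            break
        results.append(item)


data_q = queue.Queue(maxsize=100)  # bounded, so a slow consumer cannot exhaust memory
results = []
producer = threading.Thread(target=acquisition_loop, args=(data_q, 10))
consumer = threading.Thread(target=logging_loop, args=(data_q, results))
producer.start()
consumer.start()
producer.join()
consumer.join()
print(results)
```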

communicate over Profibus,

NI does not have a Profibus module for cRIO, but there are a couple of alliance partners that offer this product. It does mean that these modules will not be supported in the ScanEngine, and you will need to do some programming in LV FPGA to access Profibus.

Another option would be to look for a Profibus gateway to serial or Ethernet. I'm not familiar with any specific solutions here and you would need to determine what driver support is available in LV RT on cRIO.

handle RFID readers?

RFID readers would likely interface to cRIO through serial or Ethernet, so again you need to figure out what drivers are available. If you have the documentation for the RFID reader's communication protocol, you can likely create the serial or Ethernet interface yourself directly in LabVIEW.

-

yes the values has to get generated as specified. give me solution.......

give me solution....... ???

Not even a simple please?

What have you tried so far? Can you show us your work to date on this problem?

What is the application that you are working on where this is important?

-

Yeah, I have been looking at Courier. However, it looks like the font from WarGames.

Courier is the old typewriter font as typewriters are always monospace.

http://en.wikipedia.org/wiki/Typeface#Monospaced_typefaces

Monaco is a more modern monospace font that is commonly found on Macs.

Lucida Console is another monospace font that is often available on Windows.

-

My real-time application running on a cRIO-9014 (LV 8.2.1) creates multiple binary files based on the data logged via the RS232 port.

I want to monitor the memory status of the cRIO-9014 (2GB) from within the application running on it.

Is it possible to erase older data programmatically as the memory becomes full?

Use the standard File I/O functions in your LV RT VI to monitor the amount of free disk space on your cRIO controller and to delete any data files you no longer need. The specific functions you need are in the Advanced File Functions and are called Get Volume Info and Delete.
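Here is a minimal Python sketch of the same policy: check free space, and delete the oldest data files until enough space is free. `shutil.disk_usage` and `os.remove` stand in for Get Volume Info and Delete; the oldest-first rule and the free-space threshold are assumptions, and `get_free` is a hypothetical injectable parameter so the logic can be tested without filling a disk.

```python
import os
import shutil


def free_bytes(path):
    """Free space on the volume holding `path` (the role of Get Volume Info)."""
    return shutil.disk_usage(path).free


def purge_oldest(data_dir, min_free, get_free=free_bytes):
    """Delete the oldest files in data_dir until at least min_free bytes are free.

    Returns the names of the deleted files, oldest first.
    """
    files = [
        os.path.join(data_dir, name)
        for name in os.listdir(data_dir)
        if os.path.isfile(os.path.join(data_dir, name))
    ]
    files.sort(key=os.path.getmtime)      # oldest modification time first
    deleted = []
    for path in files:
        if get_free(data_dir) >= min_free:
            break                          # enough space; keep the newer files
        os.remove(path)                    # the role of Delete
        deleted.append(os.path.basename(path))
    return deleted
```

Checking free space before each deletion means the loop stops as soon as the threshold is met, so only as much old data as necessary is removed.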

-

What are the circle things supposed to be on the cover of this book?

The LabVIEW Big Bang - It's when Jeff K woke up one morning and had LabVIEW in his mind.

-

All 15 of these programs have their own executable, and those executables go into one installation package (that resides in the Big Project). I've started attempting to move the ancillary programs off to their own projects and just pointing to their executables in the installation package. I'm not really sure if I should move all the tool programs off too, mostly because keeping track of 15 projects will be a headache.

Cat

In addition to crelf's comments, you can also store LV projects in an LV project. So you can create your other projects and add them to the Big Project, then double-click a project in the Big Project tree to open it.

I personally would keep everything in the main project and create virtual folders to keep things organized and separated, including your 15 exe build specs and any necessary installer build specs.

-

My problem is that now I am required to talk to 7 separate boards in the VME chassis, each with its own IP address! Adding code to the consumer loop to do this is not a problem, but I now have to add another loop to the block diagram to receive TCP/IP messages from each board, and another set of loops for the UDP data being received. Although the code changes are straightforward, this is going to take up a lot of block diagram space!

You could also create a single loop/subVI each for TCP and UDP that creates and manages multiple connections. Store the array of TCP/UDP references in a shift register, then go through it and periodically check for data received on each of the connections. The "A Multi-client Server Design Pattern Using Simple TCP/IP Messaging" article on DevZone shows an example of implementing this. It uses a Connection Manager VI to store/handle all of the connections for you.
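The single-loop idea can be sketched in Python with the standard `selectors` module, where the selector's registry plays the role of the shift register holding the connection references. This is an analogous design, not the DevZone article's Connection Manager VI; the `ConnectionManager` class and board names are hypothetical, and the close handling assumes TCP connections.

```python
import selectors
import socket


class ConnectionManager:
    """Hypothetical helper: one loop services many TCP connections."""

    def __init__(self):
        self._sel = selectors.DefaultSelector()

    def add(self, sock, name):
        sock.setblocking(False)
        self._sel.register(sock, selectors.EVENT_READ, data=name)

    def poll(self, timeout=0.1):
        """Return (name, bytes) for every connection that has data ready."""
        received = []
        for key, _ in self._sel.select(timeout):
            chunk = key.fileobj.recv(4096)
            if chunk:
                received.append((key.data, chunk))
            else:                  # zero bytes on a TCP socket: the peer closed it
                self._sel.unregister(key.fileobj)
                key.fileobj.close()
        return received


if __name__ == "__main__":
    mgr = ConnectionManager()
    a_local, a_remote = socket.socketpair()   # stand-ins for two board connections
    b_local, b_remote = socket.socketpair()
    mgr.add(a_local, "board1")
    mgr.add(b_local, "board2")
    b_remote.sendall(b"status")
    print(mgr.poll(timeout=1.0))
```

Adding the seventh board is then one more `add()` call rather than another loop on the diagram.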

-

Hi,

We've had the same problems with the cRIO platform when NI switched the CPU from Intel to PowerPC and the RT OS from ETS to VxWorks.

Apparently, when you want to access the TCP packet received by the cRIO two different times, it takes about 200 msec to access it the second time, depending on the CPU type and load.

...

I'm sorry I can't send you any code, but that is under company copyright.

If you have any questions, let me know, I'll try to help.

Tom

Tom,

Your message caught my eye, as the Simple TCP Messaging (STM) protocol that we have published on ni.com basically does the same thing. We have tested and benchmarked it on different LV versions and controllers and can achieve at least 2 ms update rates per packet.

The STM sender prepends the size of the packet in a 4 byte integer, and the STM reader first performs one read operation to read the 4 byte header and then performs a second read to get the payload of the packet. STM is implemented using polymorphic VIs supporting both UDP and TCP under the hood.
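The size-prefix framing described above can be sketched in Python for the TCP case. Big-endian byte order (LabVIEW's default for flattened data) is an assumption here, as are the function names; STM's actual packet contents are not reproduced, only the header-then-payload reads. For UDP each datagram arrives whole, so the read pattern differs.

```python
import socket
import struct

HEADER = struct.Struct(">i")   # 4-byte size prefix; big-endian is an assumption


def send_packet(sock, payload):
    """Prepend the payload size as a 4-byte integer, then send both."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exactly(sock, n):
    """TCP is a byte stream, so keep reading until exactly n bytes have arrived."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-packet")
        buf.extend(chunk)
    return bytes(buf)


def recv_packet(sock):
    """First read: the 4-byte header. Second read: the payload it announces."""
    (size,) = HEADER.unpack(recv_exactly(sock, HEADER.size))
    return recv_exactly(sock, size)
```

The explicit length lets the reader ask for exactly one packet's worth of bytes, so packets never run together even when several arrive in one TCP segment.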

I just retested this using LV 8.6 and a cRIO-9012 VxWorks controller and was able to achieve loop times better than 2 ms for sending/receiving two packets per loop (4 individual read operations on the cRIO) using both UDP and TCP.

I realize you already have a working solution, but if you're interested I would be available to help determine what caused the behavior and low performance that you saw, which is not typical of TCP/UDP on the VxWorks RT platform.

-

Welcome, Marius.

Full Disclosure: Marius and I attended grad school together.

-

Doesn't it come down to the fact that by-reference objects are really references to by-value objects and all references in LabVIEW basically behave the same?

In my mind, I prefer to think of DVRs as references to something, and sometimes those things happen to be (by-value) objects; I don't think of by-ref objects as a unique data type in LabVIEW.

-

Congratulations, Jim. I'm sure she'll be wiring in no time. You know peek-a-boo is just a lesson in object persistence.

-

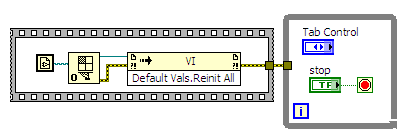

It looks like the problem is not with the default value of the Tab Control, but with the drawing code; i.e., the drawing of the Tab Control ignores the default value and sometimes the current value of the Tab Control.

If you switch to the diagram and then double click the Tab Control terminal, it will jump back to the front panel and will set the Tab Control back to the first page regardless of which page it was on earlier.

However, the default value for the Tab Control is stored in the VI, so resetting all controls and indicators to their default values will work in the VI code.

This has been previously reported as CAR 182844 to LabVIEW R&D.

-

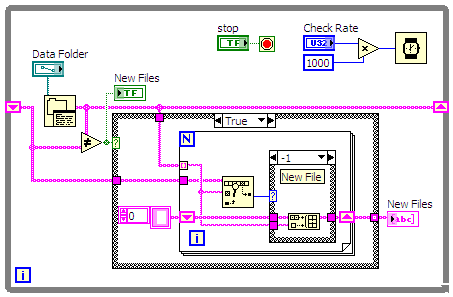

I searched around the forums/online and I can't seem to find anyone who's used LabVIEW to monitor folders for changes in files/folders. I'm collecting data on a network drive in my building and then hoping to have my data post processor monitor that folder for generated data, when data is generated I then want the post processor to automatically process the data but not touch any old data.

Sounds like a very good application for LabVIEW.

Here's a simple example of implementing this.
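One simple approach is polling: remember which files already exist, and process only names that appear later. Here is a minimal Python sketch of that idea; the function names, the polling interval and the rule that files present at start-up count as old data are assumptions. A real version on a network drive would probably also wait until a new file's size stops changing, so it never processes a file that is still being written.

```python
import os
import time


def new_files(folder, seen):
    """Return files in `folder` that are not in `seen`, and record them as seen."""
    current = {
        name for name in os.listdir(folder)
        if os.path.isfile(os.path.join(folder, name))
    }
    fresh = sorted(current - seen)
    seen.update(fresh)
    return fresh


def watch(folder, process, interval=5.0):
    """Poll `folder` forever and call process(path) once for each new file.

    Files already present at start-up are treated as old data and skipped.
    """
    seen = set()
    new_files(folder, seen)            # snapshot of the existing (old) data
    while True:
        time.sleep(interval)
        for name in new_files(folder, seen):
            process(os.path.join(folder, name))
```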

-

Could probably play 2 games of Solitaire at once with that thing

Or build a really large LabVIEW diagram. No need for subVIs any more.

-

I published the article and examples here:

Corrected link: http://zone.ni.com/devzone/cda/epd/p/id/6287

-

I'm glad you brought up this concern so that we will consider it. I think the plan will be to make a copy of all the necessary VIs (fewer than there are now), place them in a separate lvlib, and install all the code in its own location, separate from any VIs used by the LV IDE.