Posts posted by Rolf Kalbermatter

-

23 hours ago, hooovahh said:

I am also rocking version 4.2.0b1-1 for the same reasons.

I assume that support for the old *.cdf NI-Max format for installation onto pre LabVIEW 2020 RT targets is not a topic anymore and you guys rather expect *.ipk files?

Maybe add a download feed to the OpenG Github project for this? 🙂

-

On 9/26/2025 at 9:48 PM, Mads said:

That would be great. Linux RT support is all we need these days. I have not seen any VxWorks targets in ages.

I come across them regularly here at the university. There are quite a few setups that are already fairly old and just need some modifications or updates, and once set up properly they work and work and work like clockwork. Developing for them gets harder, as you have to use LabVIEW <= 2019 for that.

-

Actually I do vaguely remember that there was an issue with append mode when opening a ZIP file, but that is so long ago that I'm not really sure whether it was around 4.2 times or a lot earlier.

I'll have to get back to the 5.0.x version and finally get the two RT Linux versions compiled. Or are you looking at old legacy RT targets here?

-

You may also want to tell people where they can actually download or at least buy this. Although if you want to sell it, do not expect too many reactions. It is already hard to get people to use such toolkits when you offer them as a free download.

-

Reformatting the post so that it is easier on the eyes, uses a normal font, and doesn't require a 200" monitor to read properly would already help a lot.

Pretty much all of that Dynamic Signal stuff with Express VI complexity would be possible to do in a simple VI that uses a lot less code and screen space.

And you are not using an Excel file but a tab separated text file, which is of course more than capable to do what you need, and can be read by any application that can read text files.

-

On 7/14/2025 at 9:57 PM, Dan Bookwalter N8DCJ said:

Without getting into it too much , big picture , how limited would we be with PXIe and Base ?

That question seems very unspecific. LabVIEW base package? What has that to do with PXIe? Why high end (and price) hardware on one side and lowest cost LabVIEW license on the other?

LabVIEW Base is simply the IDE. No application builder, no source code control help tools, no analysis libraries, no toolkits and no nothing. You still can get pretty far with it, especially if you use VIPM and OpenG libraries, but yes it is limited.

-

On 8/18/2025 at 11:17 AM, lv_dev said:

LVCompare CRASHES

In my attempt to compare a VI that is quite a bit bigger than the average VI (about 550 KBytes), LabVIEW always crashes after some loading time. Comparing other (smaller) VIs than this one works perfectly.

Output I get:

LabVIEW caught fatal signal

25.1f0 - Received SIGSEGV

Reason: address not mapped to object

Attempt to reference address: 0x41

Segmentation fault (core dumped)

(Yes I am using LabVIEW on Linux, which is quite special on its own)

As you may see I am using LabVIEW 25Q1 Pro and this issue with the crashing LVCompare still remains. I already wrote a ticket to NI and gave them some information, and now they are investigating this issue.

If your system is not resource constrained, I kind of doubt that the 550 kB VI size is the issue. It seems more likely that the VI is somehow corrupted. While it may still load for simple execution, LVCompare tries to access a lot more information in the VI resources to make a proper comparison, and if some of that is corrupted in one of the two VIs it may simply crash over such inconsistent data.

-

On 7/3/2025 at 11:38 PM, Ronin7 said:

Hello,

I am working in LabVIEW 2022Q3 32 bit.

I have been trying to make a System Style Enum that can be modified since I need to change the RingText.BGColor property to highlight the control if the UI determines there is a settings conflict. I have modified an NXG Style Enum to look correct but when you click on the image that is the down arrow the control does not respond. Any ideas on how to include that image in the area that the control responds to a mouse click? For reasons in the code I'm not able to use a combo box. I have attached the control. I really like the NXG/Fuse style controls but the enums and rings are not great looking.

Tap Passive Load Relay Channel Enum.ctl 4.3 kB · 4 downloads

System style controls adhere to the actual system control settings and adapt to whatever your platform's currently defined visual style is. This also includes color and just about any other visual aspect aside from the size of the control.

If you customize existing controls by adding elements, you have to be very careful about the Z order of the individual parts. If you put a glyph on top of a sub-part with a user interaction, you basically shield that sub-part from receiving the user interaction, since the added glyph gets the event and, not knowing what to do with it, will simply discard it.

-

It's funny that this one found the actual error almost fully, and then as you try to improve on it, it goes pretty much off into the woods.

I have to say that I still haven't really used any of the AI out there. I did try in the beginning as I was curious and I was almost blown away by how eloquent the actual answers sounded. This was text with complete sentences, correct sentence structure and word usage that was way above average text you read nowadays even in many books. But reading the answers again and again I could not help a feeling of fluffiness, cozy and comfortable on top of that eloquent structure, saying very little of substance with a lot of expensive looking words.

My son tried out a few things such as letting it create a haiku, a specific Japanese style of poem, and it consistently messed it up by not adhering to the required syllable pattern, despite him pointing out the error and it apologizing, stating the right pattern, and then going on to make the same error again.

One thing I recently found useful is when I try to look for a specific word, and don't exactly know what it was, I blame this on my age. When looking on Google with a short description they now often produce an AI generated text at the beginning which surprisingly often names the exact word I was looking for.

So if you know what you are looking for but can't exactly remember the exact word it can be quite useful. But to research things I have no knowledge about is a very bad idea. Equally letting it find errors in programming can be useful, vibe coding your software is going to be a guaranteed mess however.

-

On 7/1/2025 at 1:43 AM, bessire said:

One thing I thought about is getting LabVIEW to compile a temporary VI at edit time, storing the compiled code in a buffer on the block diagram, and finding some method to load VI's from memory if one exists. That way it can be used in an executable. Unfortunately, I don't know of any such methods.

I'm pretty sure that exists, at least the loading of a VI from a memory buffer, if my memory doesn't completely fail me. How to build a VI (or control) into a memory buffer might be more tricky.

Most likely the VI server methods for that would be hidden behind one of the SuperSecretPrivateSpecialStuff ini tokens.

Edit: It appears it's just the opposite of what I thought. There is a Private VI method Save:To Buffer that seems to write the binary data into a string buffer. But I wasn't able to find any method that could turn that back into a VI reference.

-

6 hours ago, ShaunR said:

I find it interesting that AI suffers from the same problem that Systems Engineers suffer - converting a customers thoughts and concept to a specification that can be coded. While a Systems Engineer can grasp concepts to guide refinements and always converges on a solution, AI seems to brute-force using snippets it found in the internet and may not converge.

If you consider how these systems work it's not so surprising. They don't really know, they just have a large stock of sample code constructs, with a tuning that tells them that it is something other than plain prose. But that doesn't mean that it "knows" the difference between a sockaddr_in6 and a sockaddr_in. The C compiler however does of course, and it makes a huge difference there.

The C compiler works with a very strict rule set and does exactly what you told it, without second guessing what you told it to do. ChatGPT works not with strict rules but with probabilities of patterns it has been trained with. That probability determines what it concludes to be the most likely outcome. If you are lucky, you can refine your prompt to change the probability in that calculation, but often there is not enough information in the training data to significantly change the outcome, even though you tell it NOT to use a certain word. So it ends up telling you that you are right and that it misunderstood, and then offering you exactly the same wrong answer again.

In a way it's amazing how LLMs can not only parse human language into a set of tokens that are specific enough to reference data sets in the huge training set, but also give you an answer that actually looks and sounds relevant to the question. If you tried that with a traditional database approach, the needed memory would be really extreme and the corresponding search would cost a lot more time and effort every single time. LLMs move a big part of that effort to the generation of the training set and allow for a very quick index into the data and construction of very good sounding answers.

It's in some ways a real advancement, if you are fine with the increased fuzziness of the resulting answer. LLMs do not reason, despite other claims, they are really just very fast statistical evaluation models. Humans can reason, if they really want to, by combining various evaluations to a new more complex result. You can of course train the LLM model to "understand" this specific problem more accurately and then make it more likely, but never certain, to return the right answer.

In my case I overlooked that error many, many times, and the way I eventually found out about it was a combination of debugging and looking at the memory contents and then going to sleep. The next morning I woke up and as I stepped under the shower the revelation hit me. Something had obviously been pretty hard at work while I was sleeping. 😁

Of course the real problem is C's very lenient allowance of typecasts. It's very powerful to write condensed code but it is a huge cesspit that every programmer, who uses it, will sooner or later fall into. It requires extreme discipline of a programmer and even then it can go wrong as we are all humans too.

-

On 6/29/2025 at 3:10 PM, ShaunR said:

The bug is in the AF_INET case.

inet_ntop(addr.ss_family, (void*)&((sockaddr_in6 *)&addr)->sin6_addr, Address, sizeof(strAddress));

It should not be an IPv6 address conversion, it should be an IPv4 conversion. That code results in a null for the address to inet_ntop which is converted to 0.0.0.0.

I found it after a good night's sleep and a fresh start.

Copy paste error 😁.

I'm almost 100% sure I did this exact same error too in my never released LabVIEW Advanced Network Toolkit.

Stop stealing my bugs! ☠️

And yes it was years ago, when the whole AI hype was simply not even a possibility! Not that I would have used it even if I could have. I trust this AI thing only as far as I can throw a computer.
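The fix ShaunR describes can be sketched roughly as follows. This is only an illustration, not the code from either toolkit: `format_address` is a hypothetical helper name, and it assumes a POSIX sockets environment. Each address family gets its own cast, so the AF_INET case reads `sin_addr` from a `sockaddr_in` instead of `sin6_addr` from a `sockaddr_in6`. On common platforms the two fields sit at different offsets, so the wrong cast most likely reads the zero padding of the IPv4 structure, which would explain the 0.0.0.0 result.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <sys/socket.h>

/* Hypothetical helper: convert the address held in a sockaddr_storage
 * to text. Returns buf on success, NULL on failure or unknown family. */
static const char *format_address(const struct sockaddr_storage *addr,
                                  char *buf, socklen_t len)
{
    switch (addr->ss_family) {
    case AF_INET:
        /* IPv4: the address lives in sockaddr_in.sin_addr */
        return inet_ntop(AF_INET,
                         &((const struct sockaddr_in *)addr)->sin_addr,
                         buf, len);
    case AF_INET6:
        /* IPv6: the address lives in sockaddr_in6.sin6_addr */
        return inet_ntop(AF_INET6,
                         &((const struct sockaddr_in6 *)addr)->sin6_addr,
                         buf, len);
    default:
        return NULL;
    }
}
```

A caller would pass a buffer of at least `INET6_ADDRSTRLEN` bytes so that both families fit. Note that the compiler accepts the wrong cast just as happily as the right one, which is exactly the typecast trap discussed in this thread.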

-

7 hours ago, sts123 said:

So, MATLAB or Python are more efficient to convert TDMS to .txt?

Of course not! But TDMS is binary, text is ... well text. And that means it needs a lot more memory. When you convert from TDMS to text, you temporarily need whatever memory the TDMS data needs plus the memory for the text, which requires even more.

Your MATLAB or Python program is not going to do calculations on the text, so it needs to read the large text file, convert it back to real numbers, and then do the computation on those numbers. If you instead import the TDMS data directly into your other program, it can do the conversion from TDMS to its own internal format directly and there is no need for any text file to share the data.
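To put a rough number on the size difference, here is a small sketch that compares the raw binary size of an array of doubles with the size of the same values written as separated text. It says nothing about the actual TDMS file layout (which also carries metadata); the helper names are made up for illustration, and the 17 significant digits are an assumption chosen so that a double survives the round trip through text.

```c
#include <stddef.h>
#include <stdio.h>

/* Bytes needed to hold n doubles as raw binary values. */
static size_t binary_bytes(size_t n)
{
    return n * sizeof(double);
}

/* Bytes needed to hold the same values as text, one separator
 * (tab or newline) per value, printed with 17 significant digits.
 * Returns 0 if formatting fails. */
static size_t text_bytes(const double *values, size_t n)
{
    size_t total = 0;
    for (size_t i = 0; i < n; i++) {
        /* snprintf with a NULL buffer only reports the needed length */
        int len = snprintf(NULL, 0, "%.17g", values[i]);
        if (len < 0)
            return 0;
        total += (size_t)len + 1; /* +1 for the separator */
    }
    return total;
}
```

For typical measurement data the text form comes out at two to three times the binary size, and that is before counting the parsing work needed to turn it back into numbers.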

-

On 6/10/2025 at 5:50 PM, JackC6Systems said:

I've seen memory consumption [leaks] when Queues and Notifiers are used where multiple instances of 'Create Queue' are called which instigates a new queue memory pool. You need to pass the Queue reference wire to each queue read/write.

I've seen this from novices when using Queues and Notifiers....We had this problem of the code crashing after some time.

Just a thought.

Some people might be tempted to use Obtain Queue and Obtain Notifier with a name and assume that, since the queue is named, each Obtain function returns the same refnum. That is not true, however. Each Obtain returns a unique refnum that references a memory structure of a few tens of bytes, which in turn references the actual Queue or Notifier. So the underlying Queue or Notifier does indeed exist only once per name, BUT each refnum still consumes some memory. And to make matters more tricky, only a limited number of refnum IDs of any sort can be created. This number lies somewhere between 2^20 and 2^24. Basically, for EVERY Obtain you also have to call a Release. Otherwise you leak memory and unique refnum IDs.
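The mechanism can be illustrated with a toy model in C. This is not how LabVIEW implements refnums internally; all names and the tiny limits are invented for the sketch. The point is only that every obtain hands out a fresh handle to the one shared named object, and the handle table fills up unless every obtain is matched by a release.

```c
#include <stddef.h>
#include <string.h>

#define MAX_QUEUES  8
#define MAX_HANDLES 16 /* deliberately tiny so exhaustion is easy to see */

typedef struct { char name[32]; int handle_count; } ToyQueue;
typedef struct { int in_use; ToyQueue *queue; } ToyHandle;

static ToyQueue  queues[MAX_QUEUES];
static ToyHandle handles[MAX_HANDLES];

/* Returns a new, unique handle index for the named queue, creating the
 * queue on first use. Returns -1 when no handle or queue slot is free. */
static int toy_obtain(const char *name)
{
    ToyQueue *q = NULL;
    for (int i = 0; i < MAX_QUEUES && !q; i++)
        if (queues[i].handle_count > 0 && strcmp(queues[i].name, name) == 0)
            q = &queues[i];            /* queue with this name already exists */
    for (int i = 0; i < MAX_QUEUES && !q; i++)
        if (queues[i].handle_count == 0) {
            q = &queues[i];            /* claim an unused queue slot */
            strncpy(q->name, name, sizeof q->name - 1);
            q->name[sizeof q->name - 1] = '\0';
        }
    if (!q)
        return -1;
    for (int h = 0; h < MAX_HANDLES; h++)
        if (!handles[h].in_use) {
            handles[h].in_use = 1;
            handles[h].queue = q;
            q->handle_count++;
            return h;
        }
    return -1;                         /* handle table exhausted: the "leak" */
}

/* Frees one handle; the queue slot becomes reusable after its last release. */
static void toy_release(int h)
{
    if (h < 0 || h >= MAX_HANDLES || !handles[h].in_use)
        return;
    handles[h].in_use = 0;
    handles[h].queue->handle_count--;
}

/* Number of distinct queues that currently have at least one handle. */
static int toy_queue_count(void)
{
    int n = 0;
    for (int i = 0; i < MAX_QUEUES; i++)
        if (queues[i].handle_count > 0)
            n++;
    return n;
}
```

Calling `toy_obtain("data")` in a loop without a matching `toy_release` never creates a second queue, yet after `MAX_HANDLES` calls it starts failing. That is the same pattern as an Obtain Queue inside a loop with no Release Queue, just with a far smaller limit.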

-

And are you sure your Rust FFI configuration is correct? What is the LabVIEW API you call? Datatypes, multiple functions?

Basically your Rust FFI is the equivalent to the Call Library Node configuration in LabVIEW. If it is anything like ctypes in Python, it can do a little more than the LabVIEW Call Library Node, but that only makes it more complex and easier to mess things up. And most people struggle with the LabVIEW Call Library Node already mightily.

-

6 minutes ago, Neil Pate said:

@Rolf Kalbermatter the admins removed that setting for you as everything you say should be written down and never deleted 🙂

Drat, and now my typos and errors are set in stone for eternity (well, at least until LavaG is eventually shut down when the last person on earth turns off the light) 😁

-

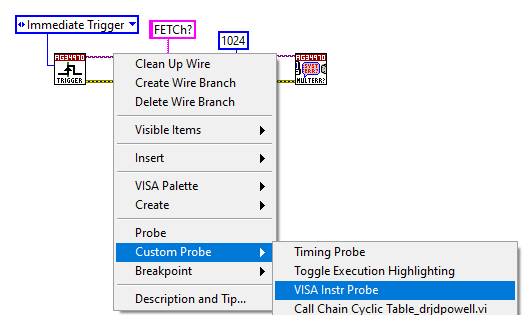

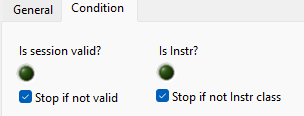

Unless I'm completely hallucinating, there used to be an Edit command in this pulldown menu.

And it is there now for this post, but not for the previous one.

-

I believe that in the past I was able to edit posts even if they were older. I just came across one of my posts with a typo, and when I tried to edit it, the Edit command was not present anymore. Is that a recent change, or am I just hallucinating due to old age?

-

As far as I can see on the NI website, the WT5000 instrument driver is not using any DLL or similar external code component. As such it seems rather unlikely that this driver is the actual culprit for your problem. Exception 0xC0000005 is basically an Access Violation fault error. This means the executing code tried to cause the CPU to access an address that the current process has no right to access. While not entirely impossible for LabVIEW to generate such an error itself, it would be highly unlikely.

The usual suspects are:

- a corrupted installation of components such as the NI-VISA driver, or even of LabVIEW itself

But if your application uses any form of DLL or other external code library that is not part of the standard LabVIEW installation, that is almost certainly (typically with 99% chance) the main suspect.

Does your application use any Call Library Node anywhere? Where did you get the corresponding VIs from? Who developed the DLL that is called?

-

On 5/10/2025 at 1:44 PM, ShaunR said:

What happened to AQ's behemoth of a serializer? Did he ever get that working?

I believe I read somewhere that he eventually conceded that it was basically an unsolvable problem for a generic, easy to use solution.

But that may be my mental interpretation of something he said in the past and he may not agree with that.

-

The popular serializer/deserializer problem. The serializer is never really the hard part (it can be laborious if you have to handle many data types but it's doable) but the deserializer gets almost always tricky. Every serious programmer gets into this problem at some point, and many spend countless hours to write the perfect serializer/deserializer library, only to abandon their own creation after a few attempts to shoehorn it into other applications. 🙂

-

3 hours ago, bbean said:

You are correct...I worded my thought incorrectly. My thought was that since the Keysight code was using Synchronous VISA calls and both the Keysight / Pendulum were most likely running in the UI thread bc their callers were in the UI thread and the execution system was set to same as caller initially, the VISA Write / Read calls to the powered off instrument were probably blocking the other instrument with a valid connection (since by my understanding there is typically only one UI thread).

Well, the fact that you have VI Server property nodes in your top level VI should not force the entire VI hierarchy into the UI thread. Typically the VI will start in one of the other execution systems and context switch to the UI thread whenever it encounters such a property node. But the VISA nodes should still be executed within the execution system that the top level VI is assigned to. Of course, if that VI has not been set to a specific execution system, things can get a bit more complex. It may depend on how you start them up in that case.

-

Synchronous is not about running in the UI thread. It is about something different. LabVIEW has supported so-called cooperative multitasking since long before it also supported preemptive multithreading using the underlying platform threading support.

Basically that means that some nodes can be executed asynchronously. For instance, any built-in function with a timeout is usually asynchronous. Your Wait Until Next ms Multiple timing node, for instance, does not hang up the loop frame while waiting for that time to expire. Instead it goes into an asynchronous operation, setting up a callback (in reality it is based on occurrences internally) that gets triggered as soon as the timeout expires. The diagram is then completely free to use the CPU for anything else that may need attention, without even having to poll the timer more or less frequently to check whether it has expired.

For interfacing to external drivers this can be done too, if that driver has an asynchronous interface (the Windows API calls this overlapped operation). This asynchronous interface can be based on callbacks, or on events or occurrences (the LabVIEW low level minimalistic form of events). NI-VISA provides an asynchronous interface too, and what happens when you set a VISA node to be asynchronous is basically that it switches to calling the asynchronous VISA API. In theory this allows for more efficient use of CPU resources since the node is not just locking up the LabVIEW thread. In practice, the asynchronous VISA API seems to use internal polling to drive the asynchronous operation, and that can actually cause more CPU usage rather than less.

It should not affect the lifetime of a VISA session and definitely not the lifetime of a different VISA session.

But disconnecting an instrument doesn't automatically make a session invalid. It simply causes any operation that is supposed to use the connection for actual transfer of bytes to fail.

-

On 4/24/2025 at 9:54 PM, bbean said:

A VISA Session is simply a LabVIEW refnum too, just a different flavor (specifically a TagRefnum) which has an attached user readable string. The same goes for DAQmx sessions and any other purple wire. As such the "Is Not A Number/Path/Refnum" works on it too.

One difference is that unlike any other LabVIEW refnum, you can actually configure if the lifetime of the VISA refnums should be tied to the top level VI or just left lingering forever until explicitly closed. This is a global setting in the LabVIEW options.

Design their UI dashboard without any extra code for control values refresh (in User Interface)

LabVIEW DSC does this with an internal tag name in the Control, and the corresponding configuration dialog lets you configure that tag name.