Posts posted by Rolf Kalbermatter

-

On 2/27/2026 at 4:06 AM, Vandy_Gan said:

Francois,

Is it possible to generate an application that runs in a runtime environment only?

First, attaching your question to random (multiple) threads is not a very efficient way of seeking help.

I'm not sure I understand your question correctly. But there is the Application Builder, which is included in the Professional Development License of LabVIEW, that lets you create an executable. It still requires the LabVIEW Runtime Engine to be installed on computers to be able to run it, but you can also create an Installer with the Application Builder that includes all the necessary installation components in addition to your own executable file.

-

On 2/24/2026 at 2:31 PM, Neon_Light said:

Hello Rolf,

thank you for the help. I did download the source files from HDF5 and HDF5Labview. You're right, it can take some time to get it working. For that reason I'll start with making a datastream from the cRIO to the Windows GUI PC and use that for now. At least I'll be able to reduce some risk.

Meanwhile I'll try to get the cross compiler working. I should at least be able to get a "hello world" running on the cRIO and take some steps if I can find some time. I did find the following site:

https://nilrt-docs.ni.com/cross_compile/cross_compile_index.html

Do you think this site is still up to date or is it a good point to start? I am only targeting a Ni-9056 for now.

I haven't tried to use that information recently, but will have to soon for a few projects. From a quick cursory glance it still seems relevant. There is of course the issue of computer technology in general, and Linux especially, being a continuously moving target, so I would guess there might be slight variations nowadays compared to what was the latest knowledge when that document was written, but in general it still seems accurate.

-

4 hours ago, ManuBzh said:

I would find it useful (maybe it already exists) to choose the growing direction of an array when editing. It used to be from left to right and/or top to bottom, but other directions could be useful.

Yes, I can do it programmatically, but it's cumbersome and it's useless calculation since it could be done directly by the graphical engine.

Possible (and I forgot about it) or already asked on the wishlist? (I'm quite sure it's one of the two!)

Emmanuel

I can't really remember ever having seen such a request. And to be honest never felt the need for it.

Thinking about it, it makes some sense to support it and it would probably not be that much work for the LabVIEW programmers, but development priorities are always a bitch. I can think of several dozen other things that I would rather like to have and that have been pushed down the priority list by NI for many years.

The best chance to have something like this ever considered is to add it to the LabVIEW Idea Exchange https://forums.ni.com/t5/LabVIEW-Idea-Exchange/idb-p/labviewideas. That is unless you know one of the LabVIEW developers personally and have some leverage to pressure them into doing it. 😁

-

3 hours ago, ManuBzh said:

For sure, this must have been debated here over and over... but: what are the reasons why X-Controls are banned?

Is it because:

- they are buggy,

- people do not understand their purpose, or philosophy, and how to code them correctly, leading to problems?

Absolutely echo what Shaun says. Nobody banned them. But most who tried to use them ran from them after a more or less short time, with many hairs ripped out of their heads, a few nervous tics from too much caffeine consumption, and swearing never to try them again.

The idea is not really bad and if you are willing to suffer through it you can make pretty impressive things with them, but the execution of that idea is anything but ideal and feels in many places like a half thought out idea that was eventually abandoned when it was kind of working but before it was a really easily usable feature.

-

It's a little more complicated than that. You do not need just one *.o file but in fact an *.o file for every C/C++ source file in that library, which you then link into a *.so file (the Linux equivalent of a Windows DLL). Also, there are two different cRIO families: the 906x, which runs Linux compiled for an ARM CPU, and the 903x, 904x, 905x and 908x, which all run Linux compiled for a 64-bit Intel x86 (x86-64) CPU. Your *.so needs to be compiled for one of these two, depending on the cRIO chassis you want to run it on. Then you need to install that *.so file onto the cRIO.

In addition you would have to review all the VIs to make sure that they still match the functions as exported by this *.so file. I haven't checked the h5F library, but there is always a chance that the library differs between platforms because of the features that each platform provides.

The thread you mentioned already showed that alignment was obviously a problem. But if you haven't done any C programming it is not very likely that you get this working in a reasonable time.

-

Many functions in LabVIEW that are related to editing VIs are restricted to run only in the editor environment. That generally includes almost all VI Server functions that modify LabVIEW objects, except UI things (so editing your UI boolean text is safe), but saving such a control is not supported.

And all the brown nodes are private anyway. This means they may work, or not, or stop working, or get removed in a future version of LabVIEW at NI's sole discretion. Using them is fun in your trials and exercises but a no-go in any end user application, unless you want to risk breaking your app for several possible reasons, such as building an executable of it, upgrading the LabVIEW version, or simply bad luck.

-

On 2/4/2026 at 5:26 PM, JBC said:

@Bryan Thanks for the info, I tried multiple methods to get CE going again but none worked. This is CE on a Mac (my personal computer) which as far as I can tell is no longer supported. It sucks they force you to update the CE version and will not let you simply use the CE version you have. I have a work Windows PC that I have a LV2021 perpetual license for, though I have kept CE off that computer since that one is my commercial use dev machine and it works. I don't want NI to update packages (during CE install) on it that break my 2021 dev environment.

Actually LabVIEW for Mac OS is again an official thing since 2025 Q3. Community Edition only, you can't really buy it as a Professional Version, but it's officially downloadable and supported. 2024 Q3 and 2025 Q1 were only a semi-official thing that you had to download from the makerhub website.

-

On 1/27/2026 at 12:56 AM, Mefistotelis said:

This is clearly the assert just after `DSNewPClr()` call.

Anyone knows what packer tool was used to decrease size of LabVIEW-3.x Windows binaries? It is clear the file is packed, but I'm not motivated enough to identify what was used. UPX had a header at start and it's not there, so probably something else.

Anyway, without unpacked binary, I can't say more from that old version.

Though the version 6.0, although compiled in C++, seems to have very similar code, and that I can analyze:

```c
if (gTotalUnitBases != 9)
    DBAssert("units.cpp", 416, 0, rcsid_234);
cvt_size = AlignDatum(gTDTable[10], 4 * gTotalUnitBases + 18);
gCvtBuf = DSNewPClr(cvt_size * (gTotalUnits - gTotalUnitBases + 1));
if (gCvtBuf == NULL)
    DBAssert("units.cpp", 421, 0, rcsid_234);
```

The 2nd assert seems to correspond to yours, even though it's now in line 421. It's clearly a "failed to allocate memory" error.

It could also be the assert a few lines above or somewhere around there. Hard to say for sure.

gTotalUnitBases being not 9 is a possibility but probably not very likely. I suppose that is somewhere initialized based on some external resource file, so file IO errors might be a possibility to cause this value to not be 9.

The other option might be if cvt_size ends up evaluating to 0. DSNewPClr() is basically a wrapper with some extra LabVIEW specific magic around malloc/calloc.

The C standard specifies for malloc/calloc: "If size is zero, the behavior of malloc is implementation-defined", meaning it can return a NULL pointer or another unique value that is not NULL but still cannot be dereferenced without causing an access error or similar. Which of the two you get depends on the C runtime: some return NULL, others (glibc, for example) return a non-NULL pointer. The code definitely assumes one particular behavior even though the C standard does not guarantee it.
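To make the zero-size point concrete, here is a minimal sketch of a wrapper that keeps a zero-sized request apart from a real out-of-memory condition. The name `checked_calloc` and the status enum are hypothetical and only for illustration; this is not how `DSNewPClr()` is implemented.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical status codes, invented for this illustration. */
typedef enum { ALLOC_OK, ALLOC_ZERO_SIZE, ALLOC_OUT_OF_MEMORY } AllocStatus;

/* Allocate zero-initialized memory and report a zero-sized request
 * separately from a genuine allocation failure. */
static void *checked_calloc(size_t count, size_t size, AllocStatus *status)
{
    if (count == 0 || size == 0) {
        /* Never pass a zero size on to calloc(): whether it returns NULL
         * or a non-dereferenceable pointer is implementation-defined. */
        *status = ALLOC_ZERO_SIZE;
        return NULL;
    }
    void *p = calloc(count, size);
    *status = (p != NULL) ? ALLOC_OK : ALLOC_OUT_OF_MEMORY;
    return p;
}
```

With a check like this, an assert on a NULL result could tell "the computed size was 0" apart from "the heap is exhausted", which is exactly the ambiguity discussed above.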

AlignDatum() calculates the next valid offset, adjusting for possible padding based on the platform and the type descriptor in its first parameter. It is difficult to see how that could return 0 here, as AlignDatum() should always return a value at least as high as the second parameter and possibly higher, depending on platform specific alignment requirements.
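As a rough illustration of why an alignment helper can never shrink its input, here is the usual round-up-to-a-multiple idiom. `align_up` is a hypothetical stand-in and not the real AlignDatum(), whose rules depend on the type descriptor and platform.

```c
#include <stddef.h>

/* Round offset up to the next multiple of alignment.
 * alignment must be a power of two (1, 2, 4, 8, ...). */
static size_t align_up(size_t offset, size_t alignment)
{
    return (offset + alignment - 1) & ~(alignment - 1);
}
```

With gTotalUnitBases equal to 9, the second argument in the quoted code is 4 * 9 + 18 = 54. Rounding that to a 2-byte boundary leaves 54, while a 4-byte or 8-byte boundary gives 56. In no case does the result drop below the input, let alone to 0.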

This crash for sure shows that some of the API functions of Mini vMac are not quite 100% compatible to the original API function. But where oh where could that be? This happening in InitApp() does however not spell good things for the future. This is basically one of the first functions called when LabVIEW starts up, and if things go belly up already here, there are most likely a few zillion other things that will rather sooner than later go bad too even if you manage to fix this particular issue by patching the Mini vMac emulator.

Maybe it is some endianness error. LabVIEW 3 for Mac was running on 68k CPUs, which are big-endian. I'm not sure what the 3DS is running as CPU (it seems to be a dual or quad core ARM11 MPCore), but the emulator may get some endianness emulation wrong somewhere. It's very tricky to get that 100% right and LabVIEW internally does a lot of endianness specific things.
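A small sketch of what a byte order mix-up does to a value may help here. The helper names are made up for this example, and whether anything like this actually happens inside the emulator is pure speculation.

```c
#include <stdint.h>

/* Interpret four bytes as a big-endian 32-bit value (68k byte order). */
static uint32_t read_be32(const unsigned char *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Interpret the same four bytes as little-endian (typical ARM setup). */
static uint32_t read_le32(const unsigned char *p)
{
    return ((uint32_t)p[3] << 24) | ((uint32_t)p[2] << 16) |
           ((uint32_t)p[1] << 8)  |  (uint32_t)p[0];
}
```

The bytes `00 00 00 09` read as big-endian give 9, but read as little-endian they give 0x09000000. A single missed swap on a value loaded from a resource file would be enough to fail a check like `gTotalUnitBases != 9`.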

-

2 hours ago, hooovahh said:

What did have a measurable improvement is calling the OpenG Deflate function in parallel. Is that compress call thread safe? Can the VI just be set to reentrant? If so I do highly suggest making that change to the VI. I saw you are supporting back to LabVIEW 8.6 and I'm unsure what options it had. I suspect it does not have Separate Compile code back then.

Reentrant execution may be a safe option. I have to check the function. The zlib library is generally written in a way that should be multithreading safe. Of course that does NOT apply to accessing, for instance, the same ZIP or UNZIP stream with two different function calls at the same time. The underlying streams (mapping to the according refnums in the VI library) are not protected with mutexes or anything. That's an extra overhead that costs time even when it would not be necessary. But for the Inflate and Deflate functions it would almost certainly be safe to do.

I'm not a fan of making libraries all over reentrant since in older versions they were not debuggable at all and there are still limitations even now. Also reentrant execution is NOT a panacea that solves everything. It can speed up certain operations if used properly but it comes with significant overhead for memory and extra management work so in many cases it improves nothing but can have even negative effects. Because of that I never enable reentrant execution in VIs by default, only after I'm positively convinced that it improves things.

For the other ZLIB functions operating on refnums I will for sure not enable it. It should work fine if you make sure that a refnum is never accessed from two different places at the same time, but that requires active discipline from the user. Simply leaving the functions non-reentrant is the only safe option without having to write a 50 page document explaining what you should never do, and which 99% of the users will never read anyway. 😁

And yes, LabVIEW 8.6 has no Separate Compiled Code. Neither does 2009.

-

2 hours ago, hooovahh said:

Edit: Oh if I reduce the timestamp constant down to a floating double, the size goes down to 2.5MB. I may need to look into the difference in precision and what is lost with that reduction.

A Timestamp is a 128 bit fixed point number. It consists of a 64-bit signed integer representing the seconds since January 1, 1904 GMT and a 64-bit unsigned integer representing the fractional seconds.

As such it has a range of something like +- 3*10^11 years relative to 1904. That's about +-300 billion years, about 20 times the lifetime of our universe and long after our universe will have either died or collapsed. And the resolution is about 1/(2*10^19) seconds, that's a fraction of an attosecond. However, LabVIEW only uses the most significant 32 bits of the fractional part, so it is "only" able to have a theoretical resolution of 2^-32 seconds, which is some 1/(4*10^9) seconds or about 230 picoseconds. Practically, the Windows clock has a theoretical resolution of 100 ns. That doesn't mean that you can get values that increase in 100 ns steps, however. It's how the timebase is calculated, but there can be bigger increments than 100 ns between two subsequent readings (or no increment at all).
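As a sketch of the layout described above, the following struct and conversion show how the two 64-bit halves combine. The type and field names are assumptions for illustration only and are not taken from any LabVIEW header.

```c
#include <stdint.h>

/* Assumed layout for illustration: whole seconds since 1904-01-01 plus a
 * 64-bit binary fraction of a second. Names are hypothetical. */
typedef struct {
    int64_t  seconds;   /* signed seconds relative to the 1904 epoch */
    uint64_t fraction;  /* fractional second in units of 2^-64 s */
} Timestamp128;

/* Convert to seconds as a double, keeping only the upper 32 fraction
 * bits, which is the part LabVIEW is said to use. */
static double timestamp_to_double(Timestamp128 t)
{
    uint32_t upper = (uint32_t)(t.fraction >> 32);
    return (double)t.seconds + (double)upper * 0x1p-32; /* 0x1p-32 is 2^-32 */
}
```

One step in the upper 32 bits corresponds to 2^-32 s, roughly 233 picoseconds, and anything stored only in the lower 32 bits is dropped by this conversion.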

A double-precision floating point number has an 11-bit exponent and 52 fractional bits. This means it can represent about 2^53 seconds, or some 285 million years, before its resolution gets coarser than one second. Scale down accordingly to 285,000 years for 1 ms resolution and still 285 years for 1 µs resolution.
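The arithmetic behind those figures can be sketched in a few lines. The function names are made up for this example, and a year is taken as 365.25 days.

```c
#define SECONDS_PER_YEAR (365.25 * 86400.0)

/* Years that can elapse before a double holding seconds can no longer
 * resolve steps of the given size: 2^53 steps fit without gaps. */
static double years_until_resolution_lost(double step_seconds)
{
    const double exact_steps = 9007199254740992.0; /* 2^53 */
    return exact_steps * step_seconds / SECONDS_PER_YEAR;
}

/* Returns 1 if adding step to t still changes the stored double. */
static int resolves_step(double t, double step)
{
    volatile double next = t + step; /* volatile forces rounding to double */
    return next != t;
}
```

`years_until_resolution_lost(1.0)` comes out at about 285 million, and with `1e-3` and `1e-6` at about 285,000 and 285 respectively. `resolves_step(9007199254740992.0, 1.0)` returns 0 because at 2^53 the spacing between adjacent doubles is already 2.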

-

Well, I referred to the VI names really. ZLIB Deflate calls the compress function, which then internally calls the deflateInit, deflate and deflateEnd functions, and ZLIB Inflate calls the uncompress function, which accordingly calls inflateInit, inflate and inflateEnd. The Init, Add, End functions are only useful if you want to process a single stream in chunks. It's still only one stream, but instead of entering the whole compressed or uncompressed stream at once, you initialize a compression or decompression reference, then add the input stream in smaller chunks and get the according output stream every time. This is useful to process large streams in smaller chunks to save memory at the cost of some processing speed. A stream is simply a bunch of bytes. There is no inherent structure in it; you would have to add that yourself by partitioning the chunks accordingly.

-

56 minutes ago, hooovahh said:

Thanks but for the OpenG lvzlib I only see lvzlib_compress used for the Deflate function. Rolf I might be interested in these functions being exposed if that isn't too much to ask.

Actually there is ZLIB Inflate and ZLIB Deflate and Extended variants of both that take in a string buffer and output another one. Extended allows to specify which header format to use in front of the actual compressed stream. But yes I did not expose the lower level functions with Init, Add, and End. Not that it would be very difficult other than having to consider a reasonable control type to represent the "session". Refnum would work best I guess.

-

6 minutes ago, ShaunR said:

Nope. It needs someone better than I.

I can understand that sentiment. I'm also just doing some shit that I barely can understand.🤫

-

On 1/12/2026 at 2:57 PM, ShaunR said:

While we are waiting for Hooovah to give us a huffman decoder

...

...

most of the rest seem to be here: Cosine Transform (DCT), sample quantization, and Huffman coding and here: LabVIEW Colour Lab

You seem to have done all the pre-research already. Are you really not wanting to volunteer? 😁

-

1 hour ago, hooovahh said:

I do wonder why NI doesn't have a native Deflate/Inflate built into LabVIEW. I get that if they use the zlib binaries they need a different one for each target, but I feel that that is a somewhat manageable list. They already have to support creating Zip files on the various targets. If they support Deflate/Inflate, they can do the rest in native G to support zip compression.

They absolutely do! The current ZIP file support they have is basically the zlib library AND the additional ZIP/UNZIP example provided with it in the contrib folder of the zlib distribution. It used to be quite an old version and I'm not sure if and when they ever really upgraded it to later zlib versions.

I stumbled over that fact when I tried to create shared libraries for realtime targets. When creating one for the VxWorks OS I never managed to load it at all on a target. Debugging that directly would have required an installation of the Diab compiler toolchain for VxWorks, which was part of the VxWorks development SDK and WAAAAYYY too expensive to even spend a single thought about trying to use it. After some back and forth with an NI engineer, he suggested I look at the export table of the NI-RT VxWorks runtime binary, since VxWorks had the rather huge limitation of having only one single global symbol export table where all the dynamic modules got their symbols registered, so you could not have two modules exporting even one single function with the same name without causing the second module to fail to load. And lo and behold, there were pretty much all of the zlib zip/unzip functions in that list and also the zlib functions themselves. After I added an extra prefix to the export symbol names of all the functions I wanted to be able to call from my OpenG ZIP library, I could suddenly load my module and call the functions.

Why not use the function in the LabVIEW kernel directly then?

1) Many of those symbols are not publicly exported. Under VxWorks you do not seem to have a difference between local functions and exported functions, they all are loaded into the symbol table. Under Linux ELF, symbols are per module in a function table but marked if they are visible outside the module or not. Under Windows, only explicitly exported functions are in the export function table.

So under Windows you simply can't call those other functions at all, since they are not in the LabVIEW kernel export table unless NI adds them explicitly to the export table, which they did only for a few that are used by the ZIP library functions.

2) I have no idea which version NI is using and no control when they change anything and if they modify any of those APIs or not. Relying on such an unstable interface is simply suicide.

Last but not least: LabVIEW uses the deflate and inflate functions to compress and decompress various binary streams in its binary file formats. So those functions are there, but not exported to be accessed from a LabVIEW program.

I know that they did explicit benchmarks about this, and the results back then showed clearly that reducing the size of the binary data that had to be read from and written to disk by compressing it resulted in a performance gain, despite the extra CPU processing for the compression/decompression. I'm not sure if this would still hold with modern SSD drives connected through NVMe, but why change it now. And it gave them an extra marketing bullet point in the LabVIEW release notes about reduced file sizes. 😁

-

On 1/11/2026 at 11:43 AM, ShaunR said:

There is an example shipped with LabVIEW called "Image Compression with DCT". If one added the colour-space conversion, quantization and changed the order of encoding (entropy encoding) and Huffman RLE you'd have a JPG [En/De]coder.

That'd work on all platforms

Not volunteering; just saying

You make it sound trivial when you list it like that. 😁

-

On 1/8/2026 at 10:37 PM, hooovahh said:

So a couple of years ago I was reading the ZLIB documentation on compression and how it works. It was an interesting blog post going into how it works, and what compression algorithms like zip really do. This is using LZ77 and Huffman tables. It was very educational and I thought it might be fun to try to write some of it in G. The deflate function in ZLIB is very well understood from an external code call and so the only real, ever so slight, place where it made sense in my head was to use it on LabVIEW RT. The wonderful OpenG Zip package has support for Linux RT in version 4.2.0b1 as posted here. For now this is the version I will be sticking with because of the RT support.

Still I went on my little journey trying to make my own in pure LabVIEW to see what I could do. My first attempt failed immensely and I did not have the knowledge, to understand what was wrong, or how to debug it. As a test of AI progression I decided to dig up this old code and start asking AI about what I could do to improve my code, and to finally have it working properly. Well over the holiday break Google Gemini delivered. It was very helpful for the first 90% or so. It was great having a dialog with back and forth asking about edge cases, and how things are handled. It gave examples and knew what the next steps were. Admittedly it is a somewhat academic problem, and so maybe that's why the AI did so well. And I did still reference some of the other content online. The last 10% were a bit of a pain. The AI hallucinated several times giving wrong information, or analyzed my byte streams incorrectly. But this did help me understand it even more since I had to debug it.

So attached is my first go at it in 2022 Q3. It requires some packages from VIPM.IO. Image Manipulation, for making some debug tree drawings which is actually disabled at the moment. And the new version of my Array package 3.1.3.23.

So how is performance? Well I only have the deflate function, and it only is on the dynamic table, which only gets called if there is some amount of data around 1K and larger. I tested it with random stuff with lots of repetition and my 700k string took about 100ms to process while the OpenG method took about 2ms. Compression was similar but OpenG was about 5% smaller too. It was a lot of fun, I learned a lot, and will probably apply things I learned, but realistically I will stick with the OpenG for real work. If there are improvements to make, the largest time sink is in detecting the patterns. It is a 32k sliding window and I'm unsure of what techniques can be used to make it faster.

Great effort. I always wondered about that, but looking at the zlib library it was clear that the full functionality was very complex and would take a lot of time to get working. And the biggest problem I saw was the testing. Bit level stuff in LabVIEW is very possible but it is also extremely easy to make errors (that's independent of LabVIEW btw), so getting that right is extremely difficult and just as difficult to prove consistently.

Performance is of course another issue. LabVIEW allows a lot of optimizations, but when you work on bit level, the individual overhead of each LabVIEW function starts to add up, even if it is in itself just tiny fractions of microseconds. LabVIEW functions do more consistency checking to make sure nothing will ever crash because of out of bounds access and more. That's a nice thing and makes debugging LabVIEW code a lot easier, but it also eats performance, especially if these operations are done in inner loops millions of times.

Cryptography is another area that has similar challenges, except that security requirements are even higher. Assumed security is worse than no security.

In the past I have written a collection of libraries to read and write the TIFF, GIF and BMP image formats, and even implemented the somewhat easier LZW algorithm used in some TIFF and GIF files. At its base it consists of a collection of stream libraries to access files and binary data buffers as a stream of bytes or bits. It was never intended to be optimized for performance but for interoperability and complete platform independence. One partial regret I have is that I did not implement the compression and decompression layer as a stream based interface. This kind of breaks the easy interchangeability of various formats by just changing the according stream interface or layering an additional stream interface in the stack. But development of a consistent stream architecture is one of the more tricky things in object oriented programming. And implementing a decompressor or compressor as a stream interface is basically turning the whole processing inside out. Not impossible to do, but even more complex than a "simple" block oriented (de)compressor. And also a lot harder to debug.

Last but not least it is very incomplete. TIFF support is only for a limited amount of sub-formats, the decoding interface is somewhat more complete while the encoding part only supports basic formats. GIF is similar and BMP is just a very rudimentary skeleton. Another inconsistency is that some interfaces support the input and output to and from IMAQ while others support the 2D LabVIEW Pixmap, and the TIFF output supports both for some of the formats. So it's very sketchy.

I did use that library recently in a project where we were reading black/white images from IMAQ, which only supports 8-bit greyscale images, but the output needed to be 1-bit TIFF data to transfer to an inkjet print head. The previous approach was to save a TIFF file in IMAQ, which was stored as 8-bit greyscale with only really two different values, and then invoke an external command to convert the file to 1-bit bi-level TIFF and transfer that to the printer. But that took quite a bit of time and did not allow processing the required 6 to 10 images per second. With this library I could do the full IMAQ to 1-bit TIFF conversion consistently in less than 50 ms per image, including writing the file to disk. And I always wondered what would be needed to extend the compressor/decompressor with a ZLIB inflate/deflate version, which is another compression format used in TIFF (and PNG, but I haven't considered that yet). The main issue is that adding native JPEG support would be a real hassle, and many TIFF files internally use a form of JPEG compression for real life images.

-

Ok, you should have specified that you were comparing it with tools written in C 🙂 The typical test engineer definitely has no idea about all the possible ways C code can be made to trip over its feet, and back then it was even less understood and frameworks that could help alleviate the issue were few and far between.

What I could not wrap my head around was your claim that LabVIEW never would crash. That's very much contrary to my own experience. 😁

Especially if you make it sound like it is worse nowadays. It's definitely not, but your typical use cases are for sure different nowadays than they were back then. And that is almost certainly the real reason you may feel LabVIEW crashes more today than it did back then.

-

1 hour ago, ShaunR said:

The thing I loved about the original LabVIEW was that it was not namespaced or partitioned. You could run an executable and share variables without having to use things like memory maps. I used to to have a toolbox of executables (DVM, Power Supplies, oscilloscopes, logging etc. ) and each test system was just launching the appropriate executable[s] at the appropriate times. It was like OOP composition for an entire test system but with executable modules.

One BBF (Big Beautiful F*cking) Global Namespace may sound like a great feature but is a major source of all kinds of problems. From a certain system size on, it gets very difficult for any normal human to maintain and extend it, even for the original developer after a short period.

Quote:

Additionally, crashes were unheard of. In the 1990s I think I had 1 insane object in 18 months and didn't know what a GPF fault was until I started looking at other languages. We could run out of memory if we weren't careful though (remember the Bulldozer?).

When I read this I was wondering what might cause the clear misalignment of experience here with my memory.

1) It was ironically meant and you forgot the smiley

2) A case of rosy retrospection

3) Or are we living in different universes with different physical laws for computers

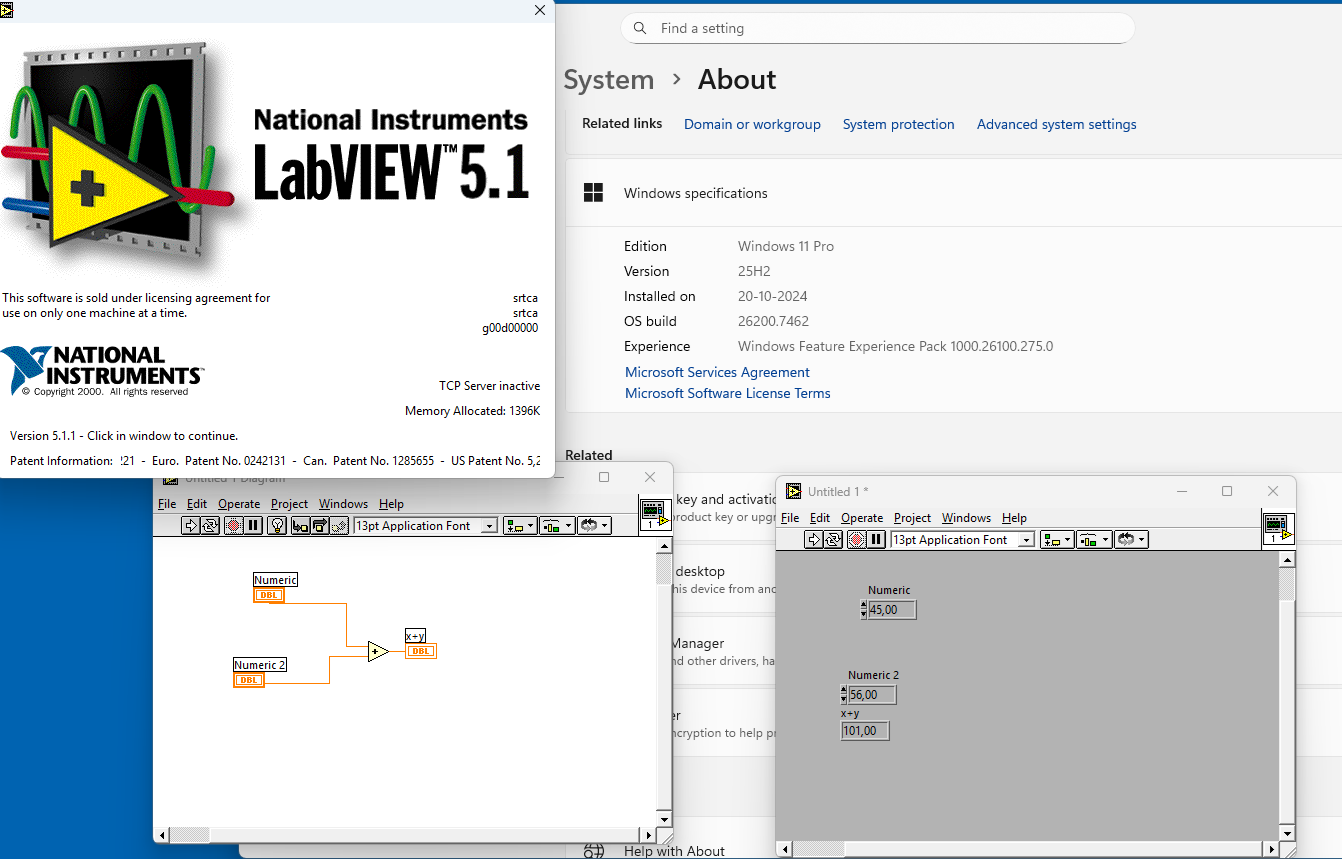

LabVIEW 2.5 and 3 were a continuous stream of GPFs, at times so bad that you could barely do some work in them. LabVIEW 4 got somewhat better but was still far from easy sailing. 5 and especially 5.1.1 was my first long term development platform. Not perfect for sure but pretty usable. But things like some specific video drivers could for sure send LabVIEW belly up frequently, as could more complicated applications with external hardware (from NI). 6i was a gimmick, mainly to appease the internet hype, not really bad but far from stable. 7.1.1 ended up being my next long term development platform. Never touched 8.0 and only briefly 8.2.1, which was required for some specific real-time hardware. 8.6.1 was the next version that did get some use from me.

But saying that LabVIEW never crashed on me in the 90s, even leaving my own external code experiments aside, would be a gross misstatement. And working in the technical support of NI from 1992 to 1996 for sure made me see many, many more crashes in that time.

-

26 minutes ago, dadreamer said:

I also believe, those versions didn't really need some pirate tools. Just owner's personal data and serial number were needed. If not available, it was possible to use 'an infinite trial' mode: start, click OK and do everything you want.

True there is no active license checking in LabVIEW until 7.1. And as you say, using LabVIEW 5 or 6 as a productive tool is not wise, neither is blabbing about Russian hack sites here. What someone installs on his own computer is his own business but expecting such hacks to be done out of pure love for humanity is very naive. If someone is able to circumvent the serial check somehow (not a difficult task) they are also easily able to add some extra payload into the executable that does things you rather would not want done on your computer.

-

4 hours ago, Tom Eilers said:

I know it runs (mostly), installation is a slightly different story. But that's still no justification to promote pirated software no matter how old.

-

LabVIEW 5 is almost 30 years old! It won't run very well on any modern computer, if at all. Besides, offering software like this, even if it is that old, is not just maybe illegal but definitely illegal. So keep browsing your Russian crack sites but keep your offerings away from this site, please!

-

8 minutes ago, ShaunR said:

I think, maybe, we are talking about different things.

Exposing the myriad of OpenSSL library interfaces using CLFN's is not the same thing that you are describing. While multiple individual calls can be wrapped into a single wrapper function to be called by a CLFN (create a CTX, set it to a client, add the certificate store, add the bios then expose that as "InitClient" in a wrapper ... say). That is different to what you are describing and I would make a different argument.

I would, maybe, agree that a wrapper dynamic library would be useful for Linux but on Windows it's not really warranted. The issue I found with Linux was that the LabVIEW CLFN could not reliably load local libraries in the application directory in preference over global ones and global/local CTX instances were often sticky and confused. A C wrapper should be able to overcome that but I'm not relisting all the OpenSSL function calls in a wrapper

.

.

However. The biggest issue with number of VI's overall isn't to wrap or not, it's build times and package creation times. It takes VIPM 2 hours to create an ECL package and I had to hack the underlying VIPM oglib library to do it that quickly. Once the package is built, however, it's not a problem. Installation with mass compile only takes a couple of minutes and impact on the users' build times is minimal.

Wow, over 2 hours build time sounds excessive. My own packages are of course not nearly as complex but with my simplistic clone of the OpenG Package Builder it takes me seconds to build the package and a little longer when I run the OpenG Builder relinking step for pre/postfixing VI names and building everything into a target distribution hierarchy beforehand. Was planning for a long time to integrate that Builder relink step directly into the Package Builder but it's a non-trivial task and would need some serious love to do it right.

I agree that we were not exactly talking about the same reason for lots of VI wrappers although it is very much related to it. Making direct calls into a library like OpenSSL through Call Library Nodes, which really is a collection of several rather different paradigms that have grown over the course of over 30 years of development, is not just a pain in the a* but a royal suffering. And it still stands for me, solving that once in C code to provide a much more simple and uniform API across platforms to call from LabVIEW is not easy, but it eases a lot of that pain.

It's in the end a tradeoff of course. Suffering in the LabVIEW layer to create lots of complex wrappers that end up often to be different per platform (calling convention, subtle differences in parameter types, etc) or writing fairly complex multiplatform C code and having to compile it into a shared library for every platform/bitness you want to support. It's both hard and it's about which hard you choose. And depending on personal preferences one hard may feel harder than the other.

Unicode Display (TabControl and Tree Menu)

in User Interface

Posted · Edited by Rolf Kalbermatter

A bit confusing. You talk about French but show an English front panel. But what you see is a typical Unicode string displayed as ASCII. On Windows, Unicode strings are encoded as UTF-16LE. For all 7-bit ASCII characters this means that each character results in two bytes, with the first byte being the ASCII code and the second byte being 0x00. LabVIEW displays a space for non-printable characters, and 0x00 is simply non-printable, unlike in C where it is the end of string indicator.

So you will have to make sure the control is Unicode enabled. Now, Unicode support in LabVIEW is still experimental (present but not a released feature) and there are many areas where it doesn't work as desired. You do need to add a special keyword to the INI file to enable it and in many cases also enable a special (normally non-visible) property on the control. It may be that the Tab labels need to have this property enabled separately, or that Unicode support for this part of the UI is one of the areas that is not fully implemented.
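The byte pattern is easy to reproduce. The following sketch encodes a plain ASCII string as UTF-16LE; the function name is made up for this example and it deliberately handles only 7-bit ASCII input.

```c
#include <stddef.h>

/* Encode a 7-bit ASCII string as UTF-16LE into out.
 * Returns the number of bytes written (no terminator is added). */
static size_t ascii_to_utf16le(const char *ascii, unsigned char *out,
                               size_t out_size)
{
    size_t n = 0;
    for (; *ascii != '\0'; ++ascii) {
        if (n + 2 > out_size)
            break;                         /* stop when the buffer is full */
        out[n++] = (unsigned char)*ascii;  /* low byte: the ASCII code */
        out[n++] = 0x00;                   /* high byte: always zero */
    }
    return n;
}
```

Encoding "Tab" yields the six bytes `54 00 61 00 62 00`. A control that interprets them as 8-bit text shows every 0x00 as a blank, so the label appears as "T a b " with a gap after each letter.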

Use of Unicode UI in LabVIEW is still an unreleased feature. It may or may not work, depending on your specific circumstances and while NI is currently actively working on making this a full feature, they have not made any promises when it will be ready for the masses.