Posts posted by Mark Yedinak

-

I think more information about how your application is used needs to be provided before any meaningful suggestions can be given. Does the user start your application every time they want to test? How is your application started? Depending on the answers, there are a variety of approaches you can take for obtaining your test limits: you can read them from a file as suggested, retrieve them from the command line, or prompt the user for the values. All are valid approaches, but which one you choose depends on how your application will be used.
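To make the three options concrete, here is a rough sketch in Python rather than LabVIEW (G is graphical, so it cannot be shown as text). It assumes two limits named `lower` and `upper`, a hypothetical INI file with a `[limits]` section, and a precedence order of command line, then file, then prompt. None of these names come from the original question.

```python
import argparse
import configparser
from typing import Callable, List, Optional, Tuple


def load_limits(argv: Optional[List[str]] = None,
                ini_path: Optional[str] = None,
                prompt: Callable[[str], str] = input) -> Tuple[float, float]:
    """Return (lower, upper): command line first, then an INI file, then the user."""
    # 1. Command line, e.g. --lower 1.5 --upper 3.0
    parser = argparse.ArgumentParser(description="Load test limits (sketch)")
    parser.add_argument("--lower", type=float)
    parser.add_argument("--upper", type=float)
    args = parser.parse_args(argv)
    if args.lower is not None and args.upper is not None:
        return args.lower, args.upper

    # 2. File: a hypothetical INI file with a [limits] section
    if ini_path is not None:
        config = configparser.ConfigParser()
        if config.read(ini_path):  # read() returns the list of files it parsed
            section = config["limits"]
            return section.getfloat("lower"), section.getfloat("upper")

    # 3. Ask the user (prompt is injectable so it can be tested)
    lower = float(prompt("Lower limit: "))
    upper = float(prompt("Upper limit: "))
    return lower, upper
```

Which branch matters most depends on the answers to the questions above; if the operator launches the application once per test, the command line is convenient, while a long-running station is better served by a file or a prompt.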

-

I have been talking with the vendor of the test equipment I will be controlling, and it looks like we have another, much more straightforward approach. After looking at the network traces of the communication, it didn't look like it would be a very easy protocol to implement.

Thanks for all the suggestions.

-

QUOTE (Aristos Queue @ Jan 30 2009, 03:34 PM)

Except that it hasn't been a problem for most of our history. Our bread and butter comes from the zero-experience-with-CS engineers and scientists. Those of you who are skilled programmers and use LabVIEW as a "fully armed and operational battle station" are a much smaller group, though you're growing. But because the bulk of users are in that first group, we do tend to spin LabVIEW for only that first usage. Heretofore it has paid the bills well and we've spread in more advanced contexts by word of mouth and the lone LV user who smuggles a copy of LV into his/her circle of C++-using co-workers. It certainly will be a problem in the future if the "large, full applications" group starts eclipsing the "one while loop around three Express VIs" group, but my understanding is we're still a ways away from that inflection point.

I have often thought that if NI were to market LabVIEW more as a pure development language and environment, that "much smaller group" would grow much faster. I have a CS degree and I use LabVIEW to develop large-scale applications. Most casual users who simply learn LabVIEW on their own would not be able to put together the types of designs I do. There is a big difference between writing a simple program and designing a large-scale application. Many aspects of G make designing systems very easy for a programmer: multitasking, parallelism, synchronization via queues, and other features greatly simplify the implementation of complex designs. Similar architectures are harder to implement in traditional programming languages because the programmer is required to do more of the work himself; G encapsulates many of these things for the programmer. I firmly believe that if NI did a better job promoting LabVIEW as a pure development environment, then its adoption would be much faster. Keeping it a "secret" does nothing to promote its growth.

-

I would consider designing a simple messaging interface and using it in all of the VIs you want to call and run. This messaging interface would have actions such as Start, Stop, Pause, and Resume, and would be passed to the subVIs via a queue or a notifier. The main application could create the queue, and all the subVIs would connect to it when they ran. This assumes that only one VI is running at any one time. If you need to control multiple subVIs at the same time, I would consider using network queues. There is a nice example in a discussion from a couple of months ago (sorry, I didn't put the link in for you) that would allow each subVI to have its own queue. The main application would have to keep track of which queue went with each subVI, but it could be done. If you define your queue data to be a cluster of a message ID (integer) and a variant, you allow yourself to do just about anything. This is a very flexible design and it scales nicely.
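A minimal Python sketch of the single-subVI case, using `queue.Queue` in place of a LabVIEW queue and a dataclass holding an integer message ID plus an untyped payload in place of the cluster of ID and variant. The message IDs and the `worker`/`run_demo` names are hypothetical, and network queues are not covered.

```python
import queue
import threading
from dataclasses import dataclass
from typing import Any, List

# Hypothetical message IDs for the actions named above.
START, STOP, PAUSE, RESUME, DATA = range(5)


@dataclass
class Message:
    msg_id: int          # which action to perform
    payload: Any = None  # plays the role of the variant: any data the action needs


def worker(inbox: queue.Queue, log: List[str]) -> None:
    """Stand-in for a subVI: block on the queue and act on each message."""
    running = False
    while True:
        msg = inbox.get()
        if msg.msg_id == START:
            running = True
            log.append("started")
        elif msg.msg_id == PAUSE:
            running = False
            log.append("paused")
        elif msg.msg_id == RESUME:
            running = True
            log.append("resumed")
        elif msg.msg_id == DATA:
            if running:  # data is ignored while paused
                log.append(f"data:{msg.payload}")
        elif msg.msg_id == STOP:
            log.append("stopped")
            return


def run_demo() -> List[str]:
    """The 'main application' creates the queue and drives one worker."""
    inbox: queue.Queue = queue.Queue()
    log: List[str] = []
    thread = threading.Thread(target=worker, args=(inbox, log))
    thread.start()
    for msg in (Message(START), Message(DATA, 42), Message(PAUSE),
                Message(DATA, 7), Message(RESUME), Message(STOP)):
        inbox.put(msg)
    thread.join()
    return log
```

Because the payload is `Any`, adding a new action only means defining a new ID and a branch in the worker; the queue's element type never changes.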

-

QUOTE (gleichman @ Jan 23 2009, 03:54 PM)

"I raise and breed tarantulas" - Why??

Why not? They are fascinating creatures. Once you start to study them, you realize how interesting they are. At the moment my collection is fairly small: I have around 40 tarantulas right now, down from several hundred in the past.

QUOTE (gleichman @ Jan 23 2009, 03:54 PM)

I also received my CLD today. Now it's time to start preparing for the CLA.

Yeah, me too. Good luck on your prep and exam.

-

I realize that I am not that active here, more of a lurker, but I simply wanted to announce that I passed my CLD exam. The funny thing is that I failed the first time, even though I walked out of that exam thinking I had nailed it. This time I walked out sure that I had blown it, and I passed quite easily. It goes to show you never know.

As for the exam itself, I have to agree with much of what has been said in the various topics regarding the certification testing and process. Passing or failing the exam doesn't necessarily reflect an individual's true ability, but having gone through the process I can definitely say that I have learned quite a bit and that the process itself has improved my skills.

-

QUOTE (mesmith @ Jan 21 2009, 10:32 AM)

OK - so this is the ONC RPC as specified in IETF RFC 1831 (that's a pretty ancient standard in computing years)? I guess I'm still a little confused because to the best of my knowledge there is no "basic" RPC. RPC (Remote Procedure Call) has been implemented a thousand different ways - SOAP, CORBA, Windows Remoting, etc, and in the grand tradition of computing none of them are compatible with any of the others! So, I don't really have anything else to offer except encouragement!Good Luck!

Mark

Yes, it is a pretty old RFC. It looks like I may need to look at a network trace. I am sure it will be fairly straightforward, but I was hoping it might have been done already. My guess is that it will be a Windows-based method, since their GUI is a Windows-based application.

Thanks again for the suggestions.

-

If you are working with the entire array of data, have you considered using queues? I have done some analysis of the efficiency of array manipulation for large circular buffers and found that queues are more efficient. In addition, in LabVIEW 8.6 you can configure them to automatically drop the oldest data when you set a maximum size.
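For comparison outside LabVIEW, Python's `collections.deque` with a `maxlen` gives the same drop-oldest behavior. This is only an analogy for the fixed-size lossy queue described above, not a benchmark of either approach.

```python
from collections import deque

# A bounded deque acts as a circular buffer: once it holds maxlen items,
# each append silently discards the oldest item at the other end.
buffer = deque(maxlen=5)
for sample in range(8):
    buffer.append(sample)

print(list(buffer))  # [3, 4, 5, 6, 7]
```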

-

QUOTE (mesmith @ Jan 21 2009, 09:50 AM)

Mark,

I'm making the assumption that you're talking about the XML-RPC server project in the CR? If so, that does include an example of creating a LabVIEW call into an XML-RPC server. You are correct that this project mostly supports the server side, but it includes all of the stuff you need to pack XML-RPC into a request for any XML-RPC server and parse the response (see the Call Generate Sine Wave.vi for an example). But, you first just call it RPC, so I'm not clear exactly which RPC implementation and protocol you mean - there are lots of them and the XML-RPC project only supports one!

Mark

The chassis I want to control only uses your basic RPC; it does not support XML-RPC. Their commands are documented, but I don't know the mechanics of the RPC used to send them. I looked at the RFC specs for RPC and could work from those, but if someone has already done this it would make my life easier.

I can also capture a network trace of their GUI's communication with the chassis and reverse engineer the protocol, but I will go down this road only as a last resort.
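For anyone taking the RFC route, the fixed part of an ONC RPC version 2 call message (RFC 1831, since obsoleted by RFC 5531) is small. The Python sketch below encodes it with XDR big-endian integers, uses AUTH_NONE for both the credentials and the verifier, and adds the record-marking header used over TCP. The chassis's program, version, and procedure numbers are unknown here; the values used in the check (program 100000, version 2, procedure 0, the portmapper NULL call) are only placeholders, and any procedure parameters would still need to be XDR-encoded according to the vendor's interface definition.

```python
import struct

CALL = 0          # msg_type value for a call message
RPC_VERSION = 2   # rpcvers is always 2 for ONC RPC
AUTH_NONE = 0     # "no authentication" flavor


def xdr_uint(value: int) -> bytes:
    """XDR unsigned int: 4 bytes, big-endian."""
    return struct.pack(">I", value)


def xdr_opaque(data: bytes) -> bytes:
    """XDR variable-length opaque: length, data, zero padding to 4 bytes."""
    pad = (4 - len(data) % 4) % 4
    return xdr_uint(len(data)) + data + b"\x00" * pad


def rpc_call(xid: int, prog: int, vers: int, proc: int, params: bytes = b"") -> bytes:
    """Build a call message; params must already be XDR-encoded."""
    return (
        xdr_uint(xid)
        + xdr_uint(CALL)
        + xdr_uint(RPC_VERSION)
        + xdr_uint(prog)
        + xdr_uint(vers)
        + xdr_uint(proc)
        + xdr_uint(AUTH_NONE) + xdr_opaque(b"")  # credentials
        + xdr_uint(AUTH_NONE) + xdr_opaque(b"")  # verifier
        + params
    )


def record_mark(message: bytes) -> bytes:
    """TCP record marking: a single fragment, high bit flags it as the last."""
    return struct.pack(">I", 0x80000000 | len(message)) + message
```

Over TCP you would send `record_mark(rpc_call(...))`, then read the reply's own 4-byte record mark first to learn how many bytes follow.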

-

Has anyone implemented the RPC protocol in G to control external applications via RPC? I am looking at automating some tests using an external chassis that can be controlled via RPC calls. I would like to use LabVIEW as the automation platform to leverage our current code base for communicating with and controlling the product under test. I have seen the LabVIEW XML-RPC server, but that is for controlling LabVIEW via RPC, not the other way around. Any pointers or suggestions would be appreciated.

Thanks

-

I am not sure about the entire front panel, but it is possible when using splitter bars and separate window panes. The catch is that you can only get to the method from the front panel: you highlight the splitter bar, select the pane you want, and create an invoke node from there. I ran across this myself recently, and this approach was given to me by NI. I am not sure why you can't create the invoke node directly, but this does work.

-

QUOTE (jdunham @ Dec 17 2008, 12:22 PM)

I see lots of benefits for making reusable UI components, but I think the economics don't justify making a lot of XControls. I would love to hear any success stories, though. Anyone?

I have a fairly complex X-control that has been very useful for us. My team and I write applications to test printers that support different communication interfaces. We built a communication class that handles all of the various interface types (built on top of VISA), which allows our applications to work with all the interfaces seamlessly. As part of our user interfaces we need a clean selection process for the user to configure the specific communication interface, and the X-control that I wrote supports this. In the past we needed multiple controls for all the various parameters, plus code to hide or show whichever were relevant. The X-control made this very easy: in our new application we simply dropped in the one control and we were ready to go.
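A communication class like the one described above is essentially an abstract interface with one implementation per transport. A rough Python sketch of that shape follows; the class and method names are hypothetical, the TCP class uses the standard `socket` module rather than VISA, and the loopback class exists only so the application code can be exercised without hardware.

```python
import socket
from abc import ABC, abstractmethod


class Communication(ABC):
    """What application code talks to, whatever the physical interface."""

    @abstractmethod
    def write(self, data: bytes) -> int: ...

    @abstractmethod
    def read(self, count: int) -> bytes: ...


class TcpCommunication(Communication):
    """One concrete transport, built on plain sockets for this sketch."""

    def __init__(self, host: str, port: int, timeout: float = 5.0):
        self._sock = socket.create_connection((host, port), timeout=timeout)

    def write(self, data: bytes) -> int:
        self._sock.sendall(data)
        return len(data)

    def read(self, count: int) -> bytes:
        return self._sock.recv(count)


class LoopbackCommunication(Communication):
    """In-memory stand-in: whatever is written can be read back."""

    def __init__(self) -> None:
        self._buffer = bytearray()

    def write(self, data: bytes) -> int:
        self._buffer.extend(data)
        return len(data)

    def read(self, count: int) -> bytes:
        chunk = bytes(self._buffer[:count])
        del self._buffer[:count]
        return chunk


def query(comm: Communication, command: bytes, reply_size: int) -> bytes:
    """Application-level call that works with any Communication subclass."""
    comm.write(command)
    return comm.read(reply_size)
```

Application code such as `query` depends only on the base class, which is what lets a single selection control pick any transport at run time.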

-

I would say that both are valid technologies to learn and are not replacements for each other. X-controls are a method for developing complex custom controls. LVOOP, or more generally OOP, is a design methodology for developing systems. Objects do not have to be controls on the user interface; they are used to encapsulate your system designs into reusable objects. An object is a representation of some item in your system that encapsulates all the data for the object as well as the methods, or operations, on that object. One could argue that an X-control is a specialized user interface object, but it should not be viewed as a replacement for LVOOP by itself.

-

QUOTE (Yair @ Dec 16 2008, 11:29 AM)

Here are some screenshots to illustrate what I am trying to accomplish:

Display window with hidden progress bar:

Display window with visible progress bar:

Display window with invisible progress bar:

As you can see, the hidden progress bar looks cleaner than the one where it is simply not visible.

Sample code: post-4959-1229459430.zip

-

Thanks for the suggestions. The splitter bars seem to do the trick quite nicely. However, I do have one more question: is it possible to quickly and easily turn a pane's visibility on or off? In my application the progress bar will not always be visible; it is only displayed if the transaction will take a significant amount of time. I would like to hide the progress bar when it is not active. Being able to hide whole panes would seem to be a useful feature, but this does not appear to be possible via the property nodes. In my application I actually have two separate panes that I would like to be able to hide: one at the top of the window (some general status information) and the other (the progress bar) at the bottom. I will take a look at playing with the origin settings and setting the panel size accordingly, but I was hoping there would be a simpler solution.

Thanks again

-

Does anyone have a good example or an easy method to resize a cluster programmatically? Specifically, I have a cluster that is a progress bar containing several elements (the slider, a string, and the remaining time), and I would like this cluster to resize when the panel is resized. The panel is a display window that echoes data being transmitted to a device. In some cases the transfer takes quite some time, and the progress bar is used in those cases. The user can resize the window, and I would like the progress bar's width to expand to the width of the panel, which also means that some of the internal elements will need to be repositioned. I was planning on using the panel resize event and then the property nodes (bounds and positions) of the elements, but they are coming up as read only. Any suggestions?

-

Can you post an example of your code? I have used the VISA USB VIs for several years to communicate with various printers, and I suspect it is something in the way you are using them that is causing the problem. Also, will your application need to read data continually, or is it more of a command-and-response system? If you are continually reading a data stream, is the data formatted in such a way that you can read it in fixed-size chunks, or will you simply need to read it and pass it off for processing?
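If the data does arrive in fixed-size records, the read loop mostly needs to tolerate short reads. A Python sketch, assuming a generic file-like `stream` with a `read(n)` method standing in for the device session (this is not the VISA API) and a record size chosen by the caller:

```python
import io


def read_exactly(stream, size: int) -> bytes:
    """Read exactly `size` bytes, looping because one read may return fewer."""
    chunks = []
    remaining = size
    while remaining > 0:
        chunk = stream.read(remaining)
        if not chunk:
            raise EOFError(f"stream ended with {remaining} of {size} bytes missing")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def read_records(stream, record_size: int):
    """Yield fixed-size records; a trailing partial record is dropped in this sketch."""
    while True:
        try:
            yield read_exactly(stream, record_size)
        except EOFError:
            return


# Example with an in-memory stream in place of the device.
records = list(read_records(io.BytesIO(b"AAAABBBBCC"), 4))
```

For the unformatted case, the alternative is simply to read whatever is available and hand it to a separate parsing loop, for example through a queue.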

-

We are in the process of deploying a custom user interface for TestStand and are running into some issues with the build process. First, our UI and the TestStand sequences need to share some data, and we are using shared variables for some of it. Second, we are using LabVIEW 8.5.1 and TestStand 4.0. We have been testing in the development environment, and everything appears to work fine there. When we did get a system to build, however, we noticed that our shared variables did not appear to be updated correctly: data was not getting added or updated, even though everything worked as expected in the development environment. To isolate the issues we are seeing, we decided to test with a very basic system and built the Shared Variable Client-Server.lvproj example from the NI examples. When we try to build it, we get the following error message:

The VI is broken. Open the VI in LabVIEW and fix the errors. C:\Program Files\National Instruments\LabVIEW 8.5\examples\Shared Variable\Variable Server.vi<Call Chain>Error 1003 occurred at AB_Application.lvclass:Open_Top_Level_VIs.vi -> AB_Build.lvclass:Build.vi -> AB_EXE.lvclass:Build.vi -> AB_Build.lvclass:Build_from_Wizard.vi -> AB_UI_Page_Preview.vi Possible reason(s): LabVIEW: The VI is not executable. Most likely the VI is broken or one of its subVIs cannot be located. Open the VI in LabVIEW using File>>Open and verify that it is runnable.

Some research seemed to indicate that the variables had to be configured as absolute rather than target relative. Changing the shared variables to absolute does appear to eliminate this error, but I believe we will need to use target-relative variables for our implementation. Does anyone know why this would happen? Besides upgrading to 8.6 (there was something in the release notes regarding a fix for this type of issue), is there a workaround we can try?

-

QUOTE (Aristos Queue @ Sep 12 2008, 10:31 AM)

Regarding the CAR that the wire routed under the string constant... I got an update from one of the developers who works on Diag. Clean Up:

This is the expected behavior, with the default settings for auto Horizontal-Compactness. If the user moves the setting for Horizontal compactness to lowest, then the Diagram Clean Up will ensure that the wires will not go under the blocks.

Quoting from the Tools>>Options dialog documentation:

"Horizontal compactness—Determines how compact to make the block diagram. Higher compactness causes LabVIEW to take longer to clean up the block diagram and can cause LabVIEW to reroute wires under objects. This control is grayed out unless you select Manual tuning. LabVIEW considers labels as part of a block diagram object. "

Even though this is a known issue, I am keeping this bug open because I could see that wire-routing in general is pretty bad in the given VI. The excessive bends in wires appear to be related to another known issue caused by shift registers, which we are currently working on.

I guess what is needed is the concept of anchoring labels. If we could anchor a label to a string constant, or a free-floating label to a section of code, then the cleanup tool could do a better job of keeping things together in the first place.

-

When I create user events I use a variant as the data type. It provides the most flexibility since I can use it for whatever I want and make the data specific to the type of user event. This is the most general method and allows for extension of the user events without requiring any code rework.
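The same variant-payload idea can be sketched in Python as a small publish/subscribe registry. The `EventBus` class and the event names are hypothetical; the point is that the payload type is `Any`, so new kinds of events can be added by subscribing new handlers without changing the bus itself.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventBus:
    """Every event carries an untyped payload, playing the role of the variant."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, name: str, handler: Handler) -> None:
        self._handlers[name].append(handler)

    def fire(self, name: str, payload: Any = None) -> int:
        """Deliver the payload to every handler of `name`; return how many ran."""
        handlers = self._handlers.get(name, [])  # .get() avoids creating empty entries
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

Each handler is responsible for interpreting its own payload, which is the same trade-off as converting a variant back to data in the event case: flexible, but without compile-time type checking.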

-

Thanks one and all. Those examples did the trick.

-

Does anyone know of a good way to obtain the system directory information on a Windows platform? Specifically, I need to get to the driver directory. Normally this directory is c:\Windows\inf; however, since the base directory for the OS is not guaranteed, I would like a more general approach. I would be satisfied if I could get the base OS directory as well. I am open to using .NET (I am not a .NET expert, so it may be there and I just haven't found it) or some other means if necessary.

Thanks
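One common approach is to read the `SystemRoot` (or `WINDIR`) environment variable, which Windows sets to the OS base directory; the Win32 `GetWindowsDirectory` function is another option. Below is a Python sketch of the environment-variable approach, assuming the driver directory is the `inf` folder under that base as described above. It uses `ntpath` so the Windows path rules apply even when the code is tested on another OS.

```python
import ntpath
import os
from typing import Mapping, Optional


def windows_directory(env: Optional[Mapping[str, str]] = None) -> str:
    """Base OS directory (e.g. C:\\Windows), taken from the environment."""
    env = os.environ if env is None else env
    for name in ("SystemRoot", "WINDIR"):
        value = env.get(name)
        if value:
            return value
    raise RuntimeError("neither SystemRoot nor WINDIR is set")


def inf_directory(env: Optional[Mapping[str, str]] = None) -> str:
    """Driver (.inf) directory under the Windows base directory."""
    return ntpath.join(windows_directory(env), "inf")
```

From LabVIEW, the same value should be reachable through any mechanism that can read an environment variable, including the .NET `System.Environment` class.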

-

QUOTE (jdunham @ Oct 9 2008, 01:13 AM)

Well NI can tell me until they are blue in the face that VISA is awesome, but I will keep my opinions to myself.

Yes, it seems like asynchronous should help.

But, yes, you can get what you want.

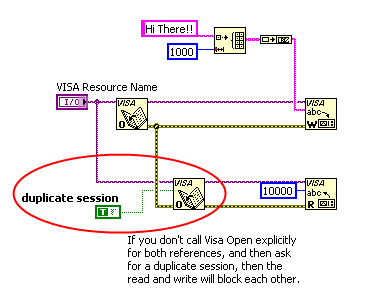

http://lavag.org/old_files/monthly_10_2008/post-1764-1223532516.png' target="_blank">

I did suggest to Dan that this useful nugget be put on the NI Developers' Zone, but a quick search turns up nothing.

Let me know if it works for you.

This approach will not work if you are using TCP connections, though. If you use it on a TCP connection, you will establish two independent connections to the device, and a well-behaved device returns the response on the same connection it received the request on. It would be nice if VISA allowed true asynchronous communication. In addition, it would be nice if VISA allowed independent control of the various settings on the resource; in particular, it should allow different timeout values for transmit and receive.
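What the last paragraph asks for, one connection with independent transmit and receive timeouts, can be sketched with Python's `socket` and `select` modules. This illustrates the concept only and is not something VISA offers; the `DuplexChannel` name and the timeout values are hypothetical.

```python
import select
import socket


class DuplexChannel:
    """One connection, with separate deadlines for sending and receiving."""

    def __init__(self, sock: socket.socket, write_timeout: float, read_timeout: float):
        sock.setblocking(False)  # select() enforces the deadlines instead
        self.sock = sock
        self.write_timeout = write_timeout
        self.read_timeout = read_timeout

    def send(self, data: bytes) -> int:
        _, writable, _ = select.select([], [self.sock], [], self.write_timeout)
        if not writable:
            raise TimeoutError("write timed out")
        return self.sock.send(data)  # may send fewer bytes than requested

    def recv(self, max_bytes: int) -> bytes:
        readable, _, _ = select.select([self.sock], [], [], self.read_timeout)
        if not readable:
            raise TimeoutError("read timed out")
        return self.sock.recv(max_bytes)
```

Because both directions share one socket, a response arrives on the same connection the request went out on, while a slow reply can be given a longer deadline than a send.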

-

Topic about LabVIEW just started on slashdot.org, in LAVA Lounge

QUOTE (bsvingen @ Feb 2 2009, 06:39 PM)

As jdunham stated, we can agree that LabVIEW is very useful when working with data flows, but to suggest that it is not as useful for anything else is a very simplistic view of application design. Any system can be designed with data flow as the primary design concern. Yourdon and DeMarco had several books on structured programming in the late 80's, and their work had nothing to do with LabVIEW. All you need to do is look at the work on structured programming and you will see that its underlying principle was data flow. An extension of this (structured analysis) was to include events, which can be viewed as asynchronous data flow. LabVIEW just happens to be one of the languages that supports data flow directly. In general, data flow is not taught in most computer science or programming classes; they still teach students to view things as procedures and to use sequential programming techniques. However, once you learn to design systems with data flow, you naturally gravitate towards LabVIEW because it is a natural fit. I have been working with LabVIEW since the mid 90's, and I can count on one hand how many times my applications were dealing with data capture. I use LabVIEW as a general-purpose programming language because I see the value in designing systems using state machines and data flow (nothing to do with data logging). These designs tend to be more flexible and robust than viewing the system as a simple flow chart (sequential programming).
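As a small illustration of designing around a state machine rather than a flow chart, here is a table-driven sketch in Python. The states and events are hypothetical (a generic test sequence), and any event not listed for the current state routes to an error state instead of falling through.

```python
from enum import Enum, auto
from typing import Iterable, List


class State(Enum):
    IDLE = auto()
    CONFIGURING = auto()
    TESTING = auto()
    REPORTING = auto()
    ERROR = auto()


# (current state, event) -> next state; the behavior lives in data, not in nesting.
TRANSITIONS = {
    (State.IDLE, "start"): State.CONFIGURING,
    (State.CONFIGURING, "configured"): State.TESTING,
    (State.TESTING, "done"): State.REPORTING,
    (State.REPORTING, "reported"): State.IDLE,
    (State.ERROR, "reset"): State.IDLE,
}


def step(state: State, event: str) -> State:
    """Unknown (state, event) pairs go to ERROR."""
    return TRANSITIONS.get((state, event), State.ERROR)


def run(events: Iterable[str], state: State = State.IDLE) -> List[State]:
    """Feed events through the machine and record every state visited."""
    history = [state]
    for event in events:
        state = step(state, event)
        history.append(state)
    return history
```

Adding a new state or transition is a one-line change to the table, which is a large part of why such designs tend to be easier to extend than a hard-wired sequence.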