Everything posted by Mark Yedinak

-

How are your applications deployed? Are they built as stand-alone applications or are they actual VIs? If they are stand-alone applications you shouldn't have issues with new applications using updated versions of VIs. If you are distributing raw VIs and hoping to keep everything up to date, the only option you have is to recompile the VIs. You could potentially write an application that would go through your code and load, recompile and save the affected applications, but this isn't an ideal solution. This application would need to have a list of the applications it needed to update and somehow report any failures it encountered. This would make the maintenance process fairly complex and subject to problems. The best solution is to build your applications as stand-alone executables. Also, are you using projects for your applications? If not, you should really consider using them. They help to manage this process.

-

You can run a mass compile on the directories that contain the affected code. The mass compiler is available at "Tools->Advanced->Mass Compile". You will need to select the directories containing the top level VIs that you want to fix. If everything is under a single directory you will only need to select that one directory.

-

You could also use queues to pass messages from one VI to another. You would need the top level VI to spawn the other VIs unless you used a networked queue solution. Based on the messages you pass, your sub-tasks would show their display and perform whatever task is required. One other thing you could do is to use subpanels in your top level VI. When your event occurs, load the appropriate VI's front panel in the subpanel.

-

My team all has dual monitors as well. However when we first started getting dual monitors they were rolled out in phases and it was interesting at first as we would open code and it would be "invisible". This was back in the 8.2 days so I am not sure if they fixed the issue or not. I just mentioned it as something to keep in mind.

-

In addition to what Francois has suggested you should note that you will most likely perform an extra step when the Stop button is pressed. The button will most likely be read before the action in your case statement is processed. Remember, just because something is on the right of the diagram doesn't mean it will execute after the stuff to the left of it. You probably want to include some artificial data dependency to force the read of the Stop button after you process your action. Remember to initialize your controls when the program starts. If you actually press the buttons before starting the program it will immediately start the economy wash cycle. Speaking of button controls I don't recall if the requirements in the sample exam included it but it is generally a good idea to disable the controls while you are in a wash cycle. In addition, using this architecture you are able (albeit you have to be real fast) to press both buttons at the same time. The selection most likely would need to be mutually exclusive. Remember to document everything, including constants. Overall your code is clean and easy to read. I would avoid saving your code with the block diagram maximized. You want to try and make it fit in a single screen. When I deselected the "Maximize window" only a portion of the code would be visible at any one time and I would have to scroll around to see the code. Speaking of monitors and displays, I know that in earlier versions of LabVIEW if code was saved on the second monitor and then opened on a system with a single monitor you couldn't see the code until the window was maximized. I have not tested to see if this is still a problem or not but as a result I always save the final code opened on the primary monitor. If you have the time and would like to really impress the folks grading your code try to align the code in each case so that when you cycle through the code you don't see it jumping around. 
This sample exam generally ends up with multiple cases all having essentially the same code. The final code looks especially clean when you can cycle through the cases without seeing the code jump around. (I know, this is extremely anal but it is impressive when it is done. I doubt you would ever lose points for not doing this.) Nice job. I would suspect that this code would pass the exam. Good luck with the exam.

-

tcp ip comunication between labview and c+++ application

Mark Yedinak replied to aizen's topic in LabVIEW General

You are basically correct in what you are saying but not entirely accurate. Every established TCP connection actually has two port numbers: the source port and the destination port. This pair of port numbers, along with the pair of IP addresses, uniquely identifies every TCP connection. As you stated, the server will establish a listener on a known port (see below regarding port number assignments) and wait for connections. The client will use the server's known port as the connection's destination port and will generally choose a random port number for the source port when it establishes a connection to the server. Because the combination of source and destination ports and IP addresses is unique for each connection, a server can have multiple connections open to one or many clients at the same time. To establish a connection on a single machine, the client will attempt to open a connection using the server's known port number and either the machine's actual IP address or the special address 127.0.0.1, known as "localhost". In other words, this machine. With the LabVIEW TCP VIs, a random source port will be chosen unless the client application explicitly specifies one. This means the only thing the client needs to know to establish a connection is the IP address and port number of the server. Generally you should always allow the client to randomly select its source port when establishing a connection. Only in rare situations do you need to specify the client's port number. You can run into trouble if you use a fixed port number for the client because you may choose a port number that is already in use or, more commonly, you will be unable to open a new connection using the same server/client port pair. The TCP specification states that the port pair cannot be reused until the TIME-WAIT period, twice the maximum segment lifetime, has elapsed. Depending on the implementation this ranges from about 30 seconds to several minutes; Windows generally sets it at four minutes.
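The port pairing can be seen with a short loopback sketch. This is plain Python socket code rather than the LabVIEW TCP VIs, and the server asks the OS for any free port (port 0) purely as a stand-in for a port agreed on in advance. The clients never specify a source port, so the OS picks one for each connection.

```python
import socket

# Server side: listen on a "known" port. Port 0 asks the OS for any free
# port; it stands in here for a port number agreed on in advance.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen()
server_port = server.getsockname()[1]


def connect_client():
    """Open a client connection without specifying a source port."""
    client = socket.create_connection(("127.0.0.1", server_port))
    accepted, _ = server.accept()
    return client, accepted


client_a, accepted_a = connect_client()
client_b, accepted_b = connect_client()

# Each connection is identified by
# (source IP, source port, destination IP, destination port).
tuple_a = client_a.getsockname() + client_a.getpeername()
tuple_b = client_b.getsockname() + client_b.getpeername()
print("connection A:", tuple_a)
print("connection B:", tuple_b)

for s in (client_a, accepted_a, client_b, accepted_b, server):
    s.close()
```

Both connections share the same destination port, and only the OS-chosen source port differs, which is what lets one listener serve several clients at once.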
If your application will be opening and closing connections to the server fairly quickly, you will be limited by the wait time for tearing down a connection.

TCP port numbers:

PORT NUMBERS (last updated 2010-01-15)

The port numbers are divided into three ranges: the Well Known Ports, the Registered Ports, and the Dynamic and/or Private Ports.

The Well Known Ports are those from 0 through 1023. DCCP Well Known ports SHOULD NOT be used without IANA registration. The registration procedure is defined in [RFC4340], Section 19.9.

The Registered Ports are those from 1024 through 49151. DCCP Registered ports SHOULD NOT be used without IANA registration. The registration procedure is defined in [RFC4340], Section 19.9.

The Dynamic and/or Private Ports are those from 49152 through 65535.

-

Under the Help menu there is an option to "Find Examples". This will bring up a dialog window which will allow you to search through the examples that ship with LabVIEW. Additionally you can search the forum here for examples of the producer/consumer architecture.

-

To be honest I don't understand what you want to do exactly so it is difficult to give you help on the functional requirements. However, there are several things you can do to help clean up your code. First, I think you may want to separate the user management functionality from the control of the solenoid. Creating new users should have no effect on the operation of the solenoid, at least not in most applications. Operation of the solenoid would require the user to be validated and only allow a valid user to control it. This type of flexibility can be achieved using a traditional producer/consumer architecture. One loop will contain your event structure and process the UI events. You may also use user defined events if required. The second parallel loop would contain a queued state machine which would receive commands/actions from the producer (UI loop) to indicate that a new user has been created, a user is logging in, or a user is attempting to control the solenoid. Using shift registers you can maintain the appropriate state information in your consumer loop for whether a user is logged in or not as well as the current state of the solenoid. You can disable the control to turn the solenoid on or off depending on whether the user is logged in or not. If I get some time later I can try to put together a quick example of this architecture. In the meantime you can look at the examples for the producer/consumer architecture in the NI examples. Unless I completely misunderstand your requirements, I think you are mixing functionality (adding users, validating users, and controlling the solenoid) which will overly complicate the application.
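For readers who think better in text than in block diagrams, here is a rough Python sketch of that producer/consumer split. It is not LabVIEW code: the command names, the in-memory user store and the `log` list are all made up for illustration, and the local variables in the consumer play the role of shift registers.

```python
import queue
import threading


def consumer(commands, log):
    """Queued state machine; local variables act like shift registers."""
    users = {}           # hypothetical user store: name -> password
    logged_in = None     # name of the validated user, if any
    solenoid_on = False
    while True:
        action, data = commands.get()    # blocks until the producer sends work
        if action == "exit":
            break
        if action == "add_user":
            name, password = data
            users[name] = password       # has no effect on the solenoid
        elif action == "login":
            name, password = data
            logged_in = name if users.get(name) == password else None
        elif action == "set_solenoid":
            if logged_in is not None:    # only a validated user may control it
                solenoid_on = data
        log.append((action, logged_in, solenoid_on))


commands = queue.Queue()
log = []
worker = threading.Thread(target=consumer, args=(commands, log))
worker.start()

# The producer (the UI loop in LabVIEW) only enqueues commands.
commands.put(("set_solenoid", True))          # ignored: nobody is logged in
commands.put(("add_user", ("alice", "pw")))
commands.put(("login", ("alice", "pw")))
commands.put(("set_solenoid", True))          # accepted
commands.put(("exit", None))
worker.join()

for entry in log:
    print(entry)
```

User management and solenoid control are separate cases in the consumer, so adding a user never changes the solenoid state.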

-

It may not have fixed it in this instance but it can definitely lead to issues.

-

I would look into using a state machine to replace your stacked sequence structure. There are several advantages to state machines versus sequence structures. They are easier to modify and extend with new functionality. It is easier to pass data between states using shift registers. They allow you to abort or modify the order of operations, whereas a sequence structure must run all frames in order and cannot terminate prematurely. These are just a few benefits of using state machines. Also, it has been mentioned that you should make sure that all your cluster references are tied to the same typedef. I noticed in your original post that there were coercion dots (the small red dots where a wire connects to a terminal) on the Bundle By Name. This means that there is a possible mismatch in the data types. This can lead to the types of problems you are seeing.
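As a text-language illustration of those benefits, here is a minimal state machine sketch in Python. The state names, the `readings` input and the `limit` check are invented for the example; the `data` dictionary stands in for the shift registers that carry values from one state to the next.

```python
def run_state_machine(readings, limit):
    """Run a small state machine over a list of readings."""
    state = "init"
    # Carried from state to state, like shift registers on a while loop.
    data = {"index": 0, "total": 0, "visited": [], "result": None}
    while state != "done":
        data["visited"].append(state)
        if state == "init":
            state = "acquire"
        elif state == "acquire":
            if data["index"] >= len(readings):
                state = "report"
            else:
                data["value"] = readings[data["index"]]
                data["index"] += 1
                state = "check"
        elif state == "check":
            if data["value"] > limit:
                state = "abort"          # leave early; a sequence could not
            else:
                data["total"] += data["value"]
                state = "acquire"
        elif state == "abort":
            data["result"] = "aborted"
            state = "done"
        elif state == "report":
            data["result"] = "ok"
            state = "done"
    return data


normal = run_state_machine([1, 2, 3], limit=10)
early = run_state_machine([1, 50, 3], limit=10)
print(normal["result"], normal["total"])
print(early["result"], early["total"])
```

The second run stops as soon as a reading exceeds the limit and never reaches the report state, which a fixed sequence of frames could not do.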

-

The length of the TCP TIME-WAIT is dependent on the maximum segment lifetime. The TIME-WAIT is defined as twice the maximum segment lifetime. I have been using the TCP VIs of LabVIEW for years and have not encountered the extreme delay that you are seeing. This leads me to believe that the issue is with the network settings on your machine, not with the LabVIEW code. I would look to see where your maximum segment lifetime is getting set and change that. This would have to be done outside of LabVIEW or NI stuff.

-

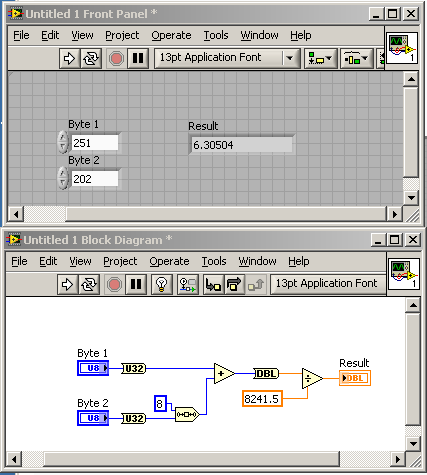

My first thought was to use Join Numbers, but for some reason I had a mental block when I actually whipped up the example and used the shift and add functions instead. However, the main reason I posted the alternative solution was that the OP may not have been aware it could be done without all the string conversions. Unless required for something specific, his procedure required lots of extra work to accomplish a very simple task.
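The original example only exists as an image, so the following Python sketch is a guess at the kind of task involved: it assumes the goal was to combine two 8-bit values into one 16-bit value. The function names are hypothetical. It contrasts the shift-and-add approach with a round trip through hex strings.

```python
def join_bytes_shift_add(high, low):
    """Combine two bytes numerically: shift the high byte up, add the low."""
    return (high << 8) + low


def join_bytes_via_strings(high, low):
    """Same result the long way: format as hex text, concatenate, parse."""
    return int(f"{high:02X}{low:02X}", 16)


numeric = join_bytes_shift_add(0x12, 0x34)
via_text = join_bytes_via_strings(0x12, 0x34)
print(hex(numeric), hex(via_text))
```

Both give the same value for inputs in the range 0 to 255, but the numeric version skips the conversions entirely.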

-

There is a much more straightforward method if he is only interested in the numeric aspect of the conversions. The whole thing can be done numerically without the need to convert the numbers to strings.

-

I can think of several good uses of a singleton class. For starters the application can have a log file. We will want to add entries to the log file anywhere in the application, including in parallel tasks. Now, let's say we give the user the ability to change the name of the log, modify attributes which control what to log, or even have the log generate new files based on time or size. I have just named several parameters that control the log and that must be shared by all instances of the log. If this needs to be shared, why not have a singleton of the class? After all, there is only one log file. With the dataflow-only classes that exist currently, as soon as the wire splits the attributes are duplicated, and a change on one branch of the wire will not affect an instance on another branch. In addition to a log, any time we have a single instance of something in the system, such as a piece of hardware, a network connection or other physical interface, we have a good candidate for a singleton. There are ways to kind of achieve this in LabVIEW, such as your example, but it would be easier on the programmer if this could be handled automatically when the class is declared a singleton.
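To make the log example concrete, here is a small Python sketch of a singleton log. It is only an illustration of the idea, not a LabVIEW mechanism: the `AppLog` class and its methods are made up, and entries are kept in a list instead of being written to a file.

```python
import threading


class AppLog:
    """Singleton log: every AppLog() call returns the same shared object."""

    _instance = None
    _lock = threading.Lock()

    def __new__(cls):
        with cls._lock:
            if cls._instance is None:
                inst = super().__new__(cls)
                inst.name = "app.log"    # shared setting
                inst.entries = []        # kept in memory for this sketch
                cls._instance = inst
        return cls._instance

    def rename(self, name):
        with self._lock:
            self.name = name

    def add(self, text):
        with self._lock:
            self.entries.append((self.name, text))


log_a = AppLog()
log_b = AppLog()          # a second "branch of the wire"
log_a.rename("run2.log")  # change made through one reference...
log_b.add("started")      # ...is seen through the other
print(log_a is log_b, log_a.entries)
```

Unlike a by-value object that is copied when the wire splits, both names here refer to one object, so a setting changed in one place applies everywhere.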

-

I know that you probably don't want to hear this but as Ben suggested I would seriously consider removing the globals from your application. There are much better and safer ways to share information between parallel tasks. These include action engines, queues and notifiers.

-

There aren't many LabVIEW specific OO books. However, if you want to learn and use OO (GOOP) with LabVIEW I highly recommend getting some basic books on OO in general. The classic gang of 4 book on OO design patterns is very useful but it is better if you understand the basics of OO before diving into that book.

-

We are in the process of rolling out a very large TestStand application for our internal engineering tests. Currently our test is approximately 2000 sequences and over 200000 test points. What we have found that works well is to use a database to manage the data parameters and expected results. We also dynamically link the sequences that we will run based on the selected UUT. Given the size of our test suite we also implemented a tree view of the sequences. This has been very useful for navigating through the tests and allowing the user to individually select what will run. In our database we also store test dependencies so that we can skip over tests that don't apply to the UUT even if the test was selected to run. The other thing that we have done is to keep the sequences themselves fairly basic. We created lots of custom step types that implement the complex tasks. All of our custom step types are implemented in LabVIEW. I definitely think you should seriously consider using TestStand.

-

Well, after speaking with NI regarding this issue it appears that at least with TCP connections, and possibly other VISA connections, it is impossible to perform a synchronous write to a device. The VISA write will return immediately after handing the data to the stack. Therefore if your communication is unidirectional it is impossible to synchronize your application with the actual transmission of the data. I see this as a major flaw since you cannot have your application wait until all the data has actually been transmitted. You can only wait until it has been buffered, which is effectively immediately. I may have to experiment to see if transmitting the data in small chunks will work.
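The same buffering behaviour is easy to reproduce with ordinary sockets. The sketch below uses Python's socket module on the loopback interface, not VISA, and the "device" is just a thread that deliberately refuses to read until told to. It shows the send call returning while the receiver has read nothing yet.

```python
import socket
import threading

listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()

release = threading.Event()   # held back until the sender has returned
received = []                 # chunks the "device" has actually read


def slow_device():
    """Accept one connection but do not read until released."""
    conn, _ = listener.accept()
    release.wait()
    while True:
        chunk = conn.recv(4096)
        if not chunk:             # sender closed the connection
            break
        received.append(chunk)
    conn.close()


device = threading.Thread(target=slow_device)
device.start()

sender = socket.create_connection(listener.getsockname())
sender.sendall(b"x" * 1024)   # returns once the OS has buffered the data
read_when_send_returned = sum(len(c) for c in received)

release.set()                 # now let the device read
sender.close()
device.join()
listener.close()
total_read = sum(len(c) for c in received)
print(read_when_send_returned, total_read)
```

The write completes from the application's point of view before the other end has consumed a single byte, so the return of the write call says nothing about delivery.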

-

The only way to get the performance you are looking for is to use parallel tasks. You could have a task specifically for broadcasting the data to the other channels, one for reading the data and another for any internal processing that you might need to do. In addition you can have other tasks that work on other activities if needed. I certainly wouldn't try to do everything in a single state machine or event structure.

-

I am not aware of a way to do this in LabVIEW. What I would recommend that you do is to create a parallel task that will perform your TCP reads. This task will ignore timeouts since it is only interested in reading the raw data. When data is read it would pass that to another parallel task for processing. It could use a queue to pass the data and this would make your processing task event driven. This should give you the overall functionality that you are looking for.
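Sketched in Python rather than LabVIEW, the arrangement looks roughly like this. The socket pair stands in for the real TCP connection, the 50 ms timeout is arbitrary, and the processing step (upper-casing the bytes) is a placeholder.

```python
import queue
import socket
import threading


def reader(sock, out):
    """Read raw data; a timeout just means nothing has arrived yet."""
    sock.settimeout(0.05)
    while True:
        try:
            data = sock.recv(4096)
        except socket.timeout:
            continue                 # ignore the timeout and keep reading
        if not data:                 # peer closed the connection
            out.put(None)            # tell the processing task to stop
            return
        out.put(data)


def processor(inp, results):
    """Event driven: wakes up only when the reader queues something."""
    while True:
        data = inp.get()
        if data is None:
            return
        results.append(data.upper())   # placeholder for real processing


device, local = socket.socketpair()    # stand-in for the TCP connection
data_queue = queue.Queue()
results = []
tasks = [
    threading.Thread(target=reader, args=(local, data_queue)),
    threading.Thread(target=processor, args=(data_queue, results)),
]
for task in tasks:
    task.start()

device.sendall(b"hello")
device.close()
for task in tasks:
    task.join()
local.close()
print(b"".join(results))
```

The reader never has to decide what a timeout means for the application; it only forwards whatever arrives, and the processing task sleeps on the queue until there is work.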

-

How can I get an application to wait until a VISA TCP write has actually sent all of its data? I have tried using both the synchronous and asynchronous modes of the VISA write but the application is not waiting for all of the data to be transmitted. Using a LAN analyzer I can see that the program continues on even though the lower level drivers handling the TCP stack are still writing data out to the device. Unfortunately for the test that I am doing I cannot rely on a response from the device to indicate that it has received all of the data. A look through the VISA events didn't find anything remotely related to this. My application is repeatedly opening a connection to the device, sending some data and then closing the connection. This application is simulating a print queue, and traces of actual print queues show that they wait to establish a new connection until the previous job has been completed. My LabVIEW application has this logic; however, from watching the program execution as well as the network trace it is clear that the application is not waiting until the data has been completely transmitted. Once the VISA write passes the data to the stack (some lower level code, Winsock possibly) it returns immediately. Any thoughts? Cross posted on NI Forums.

-

I heard recently that Tom is going to be publishing a book regarding this very same topic. I liked his presentation when I saw it at NI Week.

-

I know what you mean. I look at some of the old code that I wrote that I thought was pretty good when I wrote it and I cringe now. There is always room to improve. I know that going through the certification process helped to improve the quality of my code.

-

How will you determine the number of modules in the program?

Mark Yedinak replied to GSR's topic in LabVIEW General

Is there a specific reason you need to know the number of modules? The design and architecture of a system can be done without any knowledge of the language that will be used to implement the design. Naturally you need to have an idea if you are going to use OO or not; however, you can design a system using UML and still not use an OO language to implement it. It is not easy but it is possible. The abstraction of the system shouldn't depend on the language used to implement it.

-

Yes, but simply using LabVIEW on a daily basis doesn't necessarily prepare you for the certification exam either. I am sure that there are lots of self-taught LabVIEW programmers out there who think they are writing great code. However, if you really looked at it you would see that it is not very good code.