Posts posted by JimPanse

-

-

It can't be that the specification says that the frame grabber is supported and then it doesn't work.

I can't afford such a procedure with my products.

I could also imagine a different solution approach, e.g. with an additional extension.

Make Windows real-time capable. I am still at the beginning here, though. Providers who promise this include, among others:

www.intervalzero.com/

https://kithara.com/

www.sybera.de/

There are certainly many other providers.

Advantages:

- The frame grabber works under Windows.

- LabVIEW Real-Time would no longer be necessary, nor would the associated expensive licenses and development extensions.

What do you think of this solution?

-

Hello, bbean,

thank you for your answer.

The frame grabbers PCIe 1477 and PCIe 1473r work under my Windows system. I had the frame grabber PCIe 1473r on loan from NI and can't test it under LabVIEW Real-Time anymore. The frame grabber PCIe 1477 does not work with LabVIEW Real-Time on my system.

According to the specification:

http://download.ni.com/support/softlib//vision/Vision Acquisition Software/18.5/readme_VAS.html

NI-IMAQ I/O is driver software for controlling reconfigurable input/output (RIO) on image acquisition devices and real-time targets. The following hardware is supported by NI-IMAQ I/O:

.......

- NI PCIe-1473R

- NI PCIe-1473R-LX110

- NI PCIe-1477

the frame grabbers should work under Labview Realtime. Do you see it that way?

The statement from NI (Munich) is now (after the purchase) that the frame grabber PCIe 1477 is not expected to work under LabVIEW Real-Time. I also had the impression that NI was not really interested in solving the problem. For them, LabVIEW Real-Time is an obsolete product. The question to NI (Munich) whether the frame grabber PCIe 1473r works under LabVIEW Real-Time has not been answered to this day.

Too bad that nobody else has experience with the frame grabbers under LabVIEW Real-Time.

A nice week start

Jim

-

Hello, experts,

it's a shame that nobody has had any practical experience with the frame grabber under LabVIEW Real-Time (Phar Lap).

The documentation contains the following, among other things:

http://download.ni.com/support/softlib//vision/Vision Acquisition Software/18.5/readme_VAS.html

NI-IMAQ I/O is driver software for controlling reconfigurable input/output (RIO) on image acquisition devices and real-time targets. The following hardware is supported by NI-IMAQ I/O:

.......

- NI PCIe-1473R

- NI PCIe-1473R-LX110

- NI PCIe-1477

Does this mean that the frame grabbers NI PCIe-1473R-LX110 and NI PCIe-1477 work under LabVIEW Real-Time (Phar Lap)?

Thank you very much for your help.

Jim

-

Does anyone have an idea what you need for the framegrabber to work under LabVIEW Realtime? Has anyone ever made one of the two framegrabbers work under LabVIEW Realtime?

Greetings, Jim

-

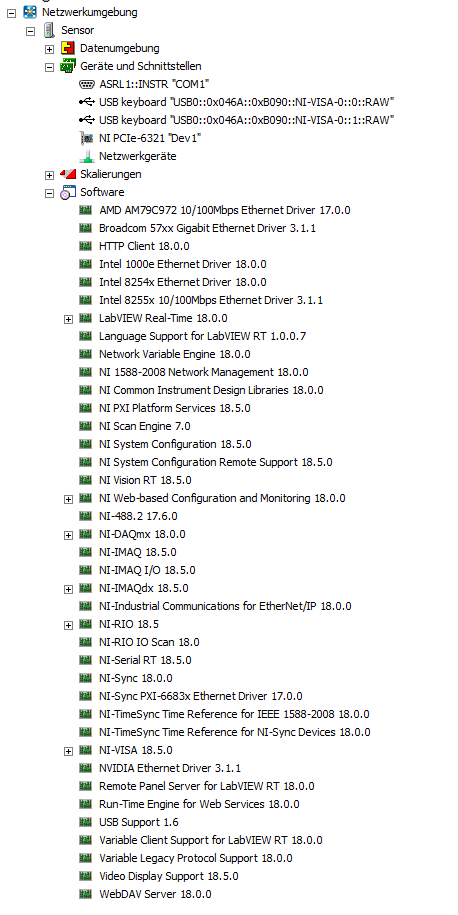

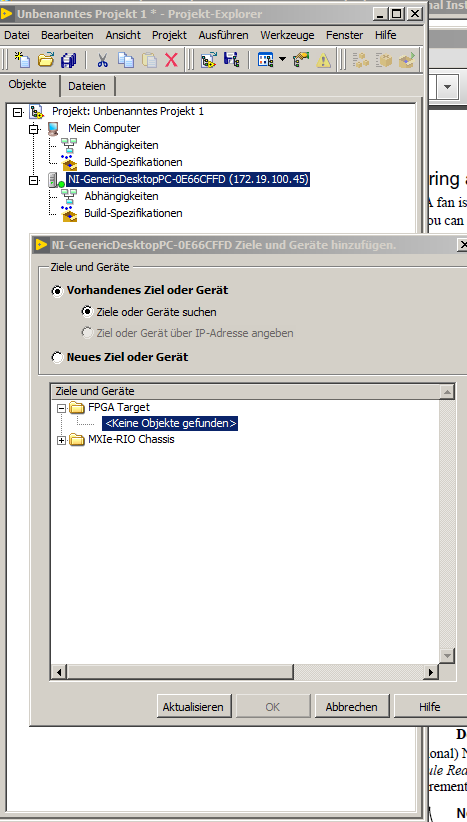

The FPGA tool is also available. The frame grabber runs on the same PC under Windows 7 (other hard disk).

Does anyone have an idea why the frame grabber is not recognized under Real-Time?

Have a nice weekend

Jim

-

The frame grabber requires IMAQ I/O 18.5. According to NI, Vision Acquisition Software, and all the hardware included in it, is supported by LabVIEW Real-Time (Phar Lap).

It would be interesting to find out whether anyone has been able to integrate the frame grabber (PCIe 1473r or PCIe 1477) under Phar Lap. Then there would be hope :)

Have a pleasant time,

Jim

-

Hello, experts,

I am trying to put the frame grabber PCIe 1477 into operation under Labview Realtime (Desktop PC). Unfortunately the card is neither displayed in the MAX nor in the project. As an alternative the frame grabber PCIe 1473r LX110 would be conceivable. Has anyone ever worked with such a frame grabber under Labview Realtime? Or does anyone know what to consider to make the frame grabber work with Labview Realtime ???

Many thanks for the numerous answers

Regards, Jim

-

Hello experts,

I've figured out how it works by now.

FPGA-side code, as was needed on the PCIe 1473, is no longer necessary.

The required host-side VIs for the serial communication can be found at: \LabVIEW 2018\examples\Vision-RIO\PCIe-1477\Common\CL Serial NIFlexRIO

Merry Christmas

Jim

-

Hello experts,

I am interested in the frame grabber PCIe-1477.

http://www.ni.com/de-de/support/model.pcie-1477.html

Unfortunately, the examples are not complete in my opinion. At first glance, I cannot find the serial communication needed to parameterize the camera.

(Requires: NI Vision Acquisition Software 18.5).

Does anyone have experience with the frame grabber and how was the parameterization done?

Have a nice Weekend

Jim

-

Thanks for the friendly answer!

A single FFT calculation does not have to finish within 4 μs. I should have explained that better. It is a 250 kHz continuous detector, and processing at that rate must be guaranteed. It would be quite conceivable to buffer a certain amount of data and then process it in parallel. For example, if one FFT calculation takes 100 μs, you could cache 25 measurements and calculate them in parallel, if that is feasible. The question is which low-cost FPGA is able to buffer the data and process it in parallel.
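The buffering arithmetic above can be checked with a few lines of Python. This is only a sketch of the calculation: the 100 μs FFT time is the example value from the post, not a measured figure, and the function name is made up for illustration.

```python
import math

LINE_RATE_HZ = 250_000   # detector line rate from the post
FFT_TIME_US = 100.0      # assumed duration of one FFT (example value, not measured)


def parallel_units_needed(line_rate_hz: float, fft_time_us: float) -> int:
    """Number of FFT engines that must run concurrently to sustain the line rate."""
    line_period_us = 1e6 / line_rate_hz
    return math.ceil(fft_time_us / line_period_us)


line_period_us = 1e6 / LINE_RATE_HZ
units = parallel_units_needed(LINE_RATE_HZ, FFT_TIME_US)
print(f"line period: {line_period_us} us, parallel FFT units needed: {units}")
```

With these numbers each result arrives 100 μs after its line was captured, but the system keeps up with the detector as long as 25 calculations can be in flight at once.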

Simulation can determine the FPGA type containing the necessary resources....not the timing

Have fun sweating, Jim

-

Hello experts .... Thank you for your answers.

The line rate really is 250 kHz at 2048 pixels.

GPU

So a current graphics card e.g. 6GB Asus GeForce GTX 1060 Dual OC Active PCIe 3.0 x16 (Retail) should have a transfer rate of 15 Gbytes / s. This year PCIe 4.0 will come out with twice the data rate. At an expected data rate of about 1 Gbyte / s that should not be the bottleneck, right? So theoretically it should work with CUDA.

What should be the limiting element in data transmission?

In the USB 3.0 variant, the USB 3.0 interface is the data bottleneck. It should transfer at most 640 Mbytes/s. That would probably work only at the expense of reduced dynamic range: 2048 pixels * 250 kHz * 8 bits = 512 Mbytes/s (about 488 MiB/s).
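The data rates discussed here can be reproduced with a short Python sketch. The interface limits are the figures quoted in this post, taken as decimal bytes per second, and storing 12-bit pixels in 16-bit words is an assumption.

```python
PIXELS_PER_LINE = 2048
LINE_RATE_HZ = 250_000

USB3_LIMIT_BPS = 640e6      # USB 3.0 figure quoted in the post (assumed decimal MB/s)
PCIE3_X16_LIMIT_BPS = 15e9  # PCIe 3.0 x16 figure quoted in the post


def data_rate_bytes_per_s(pixels: int, line_rate_hz: int, bytes_per_pixel: int) -> int:
    """Raw camera data rate in bytes per second."""
    return pixels * line_rate_hz * bytes_per_pixel


rate_8bit = data_rate_bytes_per_s(PIXELS_PER_LINE, LINE_RATE_HZ, 1)
rate_16bit = data_rate_bytes_per_s(PIXELS_PER_LINE, LINE_RATE_HZ, 2)  # 12 bit stored as 16 bit (assumption)

for name, rate in (("8 bit", rate_8bit), ("16 bit", rate_16bit)):
    print(f"{name}: {rate / 1e6:.0f} MB/s ({rate / 2**20:.2f} MiB/s), "
          f"fits USB 3.0: {rate <= USB3_LIMIT_BPS}, "
          f"fits PCIe 3.0 x16: {rate <= PCIE3_X16_LIMIT_BPS}")
```

The 488 figure corresponds to MiB/s; in decimal units the 8-bit stream is 512 MB/s, and the 16-bit stream is about 1 GB/s, which matches the data rate expected for the GPU variant.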

FPGA

Ok, that's Xilinx, but you say: "According to NI, the FFT runs in 4 μs (2048 pixels, 12bit) with the PXIe 7965 (Virtex-5 SX95T)." - I can not find it, could you provide reference?

That's a statement from an NI systems engineer who tested it on the mentioned hardware. He tested it under LabVIEW FPGA 32 bit and said that it should run even better under LabVIEW 64 bit.

If you look at the latency of the Virtex-5 SX95T in the document

https://www.xilinx.com/support/documentation/ip_documentation/xfft_ds260.pdf

then it is always greater than 4 μs at a 2k point size. The data type with LV FPGA can only be U8 or U16, right?

That raises the question: How did he do that? Is it possible to gain speed through parallelization and why should it be even faster with LV FPGA 64 bit?

The document is from 2011. Maybe something has changed in recent years?

The result of the FFT (A value) is then stored in the main memory or on the hard disk.

A nice stay, Jim

-

Hello and thank you for your answers.

Then an FFT of a line with 2048 pixels in 4 μs would be possible with corresponding parallelization. As far as I know, the mentioned NI FPGAs run at 400 MHz. The question that arises is which type of FPGA is suitable, because it has a decisive influence on the costs.
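A rough throughput estimate shows why parallelization matters at 400 MHz. The sketch below assumes a fully pipelined streaming FFT that accepts a fixed number of samples per clock cycle and looks only at sustained throughput, not at pipeline latency; whether a given FPGA or IP core can actually do this is not something the calculation can tell you.

```python
import math

CLOCK_HZ = 400_000_000   # FPGA clock mentioned in the post
LINE_RATE_HZ = 250_000   # one 2048-point line every 4 us
N_POINTS = 2048


def stream_time_us(n_points: int, clock_hz: int, samples_per_clock: int) -> float:
    """Time to feed one line into a streaming FFT (assumed model, throughput only)."""
    return n_points / (clock_hz * samples_per_clock) * 1e6


# Clock cycles available per line, and samples that must be consumed per cycle.
cycles_per_line = CLOCK_HZ // LINE_RATE_HZ
lanes_needed = math.ceil(N_POINTS / cycles_per_line)

print(f"cycles per line: {cycles_per_line}")
print(f"1 sample/clock : {stream_time_us(N_POINTS, CLOCK_HZ, 1):.2f} us per line")
print(f"{lanes_needed} samples/clock: {stream_time_us(N_POINTS, CLOCK_HZ, lanes_needed):.2f} us per line")
```

Under these assumptions a single sample per clock needs 5.12 μs per line, which is too slow, while two samples per clock bring it down to 2.56 μs.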

According to NI, the FFT runs in 4 μs (2048 pixels, 12 bit) with the PXIe 7965 (Virtex-5 SX95T). The card unfortunately costs 10,000 € and would probably blow the project budget. Therefore, I would like to know whether it also works with these variants:

Kintex-7 160T / IC-3120 (USB3)

Virtex-5 LX50 / PCIe 1473 (camera link)

If you look at the document: http://www.bertendsp.com/pdf/whitepaper/BWP001_GPU_vs_FPGA_Performance_Comparison_v1.0.pdf

then it should also work with CUDA and a GPU, and at significantly lower cost. How do you see it? ... @ PiDi: In principle, you are already doing with

Have a nice time, Jim

-

Hello experts,

I have a camera application (USB3 or camera link) and would like to do an FFT analysis of a line with 1024 or 2048 pixels. Data type U8, U16, I16. The processing must be very fast due to the process. The processing time should not be longer than 4 μs. It would be conceivable to process with an FPGA, e.g.

Kintex-7 160T / IC-3120 (USB3)

Virtex-5 LX50 / PCIe 1473 (camera link)

or on the GPU using CUDA.

I also heard that Labview 64 bit FPGA should be much faster.

Does anyone know if the processing time of 4 μs is possible with the described methods ???

.... or what would you prefer and why? .... Or is there another solution with Labview?

Does CUDA run under LabVIEW Real-Time?

A good start to the week

Jim

-

Hello Rolf,

thanks for the broader view. If I configure a desktop PC as a Phar Lap LabVIEW RT system, I need the appropriate license. NI also has to pay for this eventually. These costs should not be incurred with Linux. Licensing costs for DAQ, for example, should not apply either. These costs should be weighed against the hardware procurement costs. Therefore, some applications could be implemented with a desktop NI Linux RT system. Or did I miss something?

A pleasant start to the week, Jim

-

Hi Experts,

is there a guide, experience report, or image with which you can configure a desktop PC as an NI Linux RT system?

A pleasant week, Jim

-

Hi Experts,

has anyone got the NI Linux RT kernel working on a desktop PC, similar to the Phar Lap operating system? Or is there a how-to for building the NI Linux RT operating system yourself? Otherwise, I would be very interested in a working group that develops the NI Linux RT operating system for desktop PCs.

A pleasant start to the week

Jim

-

Hello Experts,

I would like to realize an encoder triggered analog acquisition with a PCI-6220 (A = PFI8, B = PFI10, Decoder = X4).

An analog acquisition should be performed at each position change of the encoder. In principle, the encoder acts as the trigger for the analog capture.

In the Labview examples I can not find any solution. Google could not help me.

I would be very grateful for a link to a solution or a description of how to configure the analog trigger.
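To make the X4 setting concrete: with X4 decoding every edge on A and B counts, so one full quadrature cycle yields four position changes and therefore four acquisition triggers. The following pure-Python sketch only simulates that decoding on sampled A/B levels; it does not configure the PCI-6220, and the function name and the sign convention (A leading B counts up) are assumptions for illustration.

```python
# Quadrature states in forward order when A leads B, encoded as (A << 1) | B.
_FORWARD = [0b00, 0b10, 0b11, 0b01]


def x4_decode(a_samples, b_samples):
    """Return the final position and the position at every trigger (one per edge)."""
    position = 0
    trigger_positions = []
    prev = (a_samples[0] << 1) | b_samples[0]
    for a, b in zip(a_samples[1:], b_samples[1:]):
        cur = (a << 1) | b
        if cur == prev:
            continue  # no edge on A or B, so no trigger
        step = (_FORWARD.index(cur) - _FORWARD.index(prev)) % 4
        if step == 1:
            position += 1
        elif step == 3:
            position -= 1
        else:
            # A and B changed at the same sample: not a valid quadrature transition.
            raise ValueError("invalid quadrature transition")
        trigger_positions.append(position)  # an analog sample would be taken here
        prev = cur
    return position, trigger_positions


# One full forward quadrature cycle: four edges, hence four triggers.
final_pos, triggers = x4_decode([0, 1, 1, 0, 0], [0, 0, 1, 1, 0])
print(final_pos, triggers)
```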

Thanks in advance, Jim

-

Hello Experts,

is it possible to synchronize the distributed clock of a PXI RT (Phar Lap ETS) EtherCAT master with a third-party slave (Beckhoff EL6692 or EL6695 http://www.beckhoff.de/default.asp?ethercat/el6695.htm)?

Thanks in advance, Jim

-

I like the solution with the multi-master EtherCAT system. It also enables synchronisation of the distributed clocks.

A LabVIEW RT EtherCAT master and a Beckhoff EtherCAT master, with the EL6692 bridge terminal between them.

log it to work.

Are there things that speak against it?

-

Throughput is about 128 bytes per message. If possible, it should be an industry-standard solution.

Another approach would be a multi-master system. With the EL6692

http://www.beckhoff.de/default.asp?ethercat/el6692.htm

http://www.ethercat.org/de/products/E2DC6EE8DB5D4740BE8A0E4FCA61A309.htm

The EtherCAT bridge terminal can presumably exchange data deterministically between two EtherCAT masters. Is that right? Then I could operate the LV RT target as an EtherCAT master.

-

http://www.ethercat.org/en/products/92F6D9A027D54BABBBEAFA8F34EA1174.htm

In principle it would be the right way. I could handle the PCI interface with LabVIEW RT for desktop. But I don't think there is a driver for the card that works under Phar Lap ETS?

The latency is in the range of 10 ms and should be constant; the jitter should be no greater than 100 µs. Can I achieve this with Modbus TCP? Or is there another, faster RT interface from LabVIEW RT to a Beckhoff control?

-

Hello and thanks for the help,

in the RT system several cameras incl. image processing are required. This is not possible with the 9144.

One conceivable solution could be an Anybus X-gateway with its Modbus RTU slave / EtherCAT slave. Modbus is supposed to work under LabVIEW RT. What do you think? Is there any other indirect solution?

Thanks in advance

-

80 views but no help .....

-

Hello Experts,

I would like to operate a Labview RealTime system (PXI) as EtherCAT Slave. The master system should be a Beckhoff control. Is it possible to operate the Labview RealTime system as EtherCAT slave?

Thanks in advance

PCIe 1477 / PCIe 1473r under Labview Realtime

in Machine Vision and Imaging

Posted

There is currently no 100% definition of the requirements. This is all in a state of flux.

Full or Extended Full is required. Power over CL is not mandatory. I need real-time for communication with the 5 most common fieldbus systems.

I didn't understand your suggestion to transfer the FPGA code to the frame grabber under Windows and then use it under RT.

How should the communication under Labview Realtime with the frame grabber work if the frame grabber is not recognized under RT?