Posts posted by ASalcedo

-

Hi to all,

First of all, thanks for reading this post and for any help.

I would like to ask two questions:

1- I have a run-time license assigned to a PC, but now I would like to replace this PC and install the same application on another PC (keeping the same run-time license, of course). Is this possible, and how can I do it? I ask because when I put a run-time license on a PC, it is associated with that PC.

2- I would like to tie the application to one PC, so that it cannot run if it is copied to another PC.

I have seen some posts that tie the application to the serial number of the PC and the serial number of the hard disk. Is this the best way?

Thanks a lot.
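As a rough illustration of the serial-number idea in question 2, here is a minimal Python sketch (not LabVIEW code). It assumes the application can already read the PC and disk serial numbers by some other means; the serial values and the function names `fingerprint` and `is_authorized` are hypothetical. The identifiers are hashed into one fingerprint at install time and compared again at startup.

```python
import hashlib
import hmac


def fingerprint(identifiers):
    """Hash a list of hardware identifiers into one hex string."""
    # Normalize so that case and surrounding whitespace do not matter.
    joined = "|".join(s.strip().upper() for s in identifiers)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def is_authorized(identifiers, expected_fingerprint):
    """Return True when the identifiers match the stored fingerprint."""
    return hmac.compare_digest(fingerprint(identifiers), expected_fingerprint)


# Hypothetical serial numbers, for illustration only.
licensed = fingerprint(["PC-SERIAL-0001", "DISK-SERIAL-ABCD"])
same_pc = is_authorized(["pc-serial-0001", "disk-serial-abcd"], licensed)
other_pc = is_authorized(["PC-SERIAL-9999", "DISK-SERIAL-ABCD"], licensed)
print(same_pc, other_pc)
```

A check like this only discourages casual copying; whether it is "the best way" depends on how much protection the application really needs.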

-

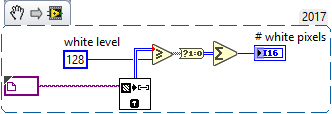

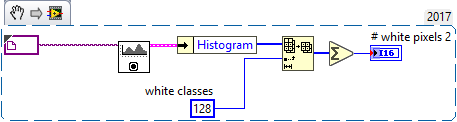

@ensegre How can I do the same but count only the white pixels in a specific ROI? With a mask or something similar?

Thanks a lot
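The mask idea can be sketched in plain Python, under the assumption that the image is binary (0 = black, 255 = white) and the ROI is a rectangle. Nested lists stand in for the image, and `count_white_in_mask` is a hypothetical helper, not an IMAQ function.

```python
# Hypothetical 8-bit binary image as rows of pixels: 0 = black, 255 = white.
WIDTH, HEIGHT = 8, 6
image = [[0] * WIDTH for _ in range(HEIGHT)]
for y in range(1, 5):
    for x in range(2, 7):
        image[y][x] = 255  # white rectangle, 4 rows x 5 columns


def count_white_in_mask(image, mask, white=255):
    """Count pixels equal to `white` at positions where the mask is True."""
    return sum(
        1
        for img_row, mask_row in zip(image, mask)
        for pixel, inside in zip(img_row, mask_row)
        if inside and pixel == white
    )


# Rectangular ROI (top, left, bottom, right), end-exclusive, turned into a mask.
top, left, bottom, right = 0, 0, 3, 4
mask = [
    [top <= y < bottom and left <= x < right for x in range(WIDTH)]
    for y in range(HEIGHT)
]

white_in_roi = count_white_in_mask(image, mask)
print(white_in_roi)
```

Because the mask is just a boolean image, the same function would also work for non-rectangular regions.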

-

I would like to know if a line scan camera has a parameter that lets me control the trigger time through NI MAX or LabVIEW with IMAQdx.

I mean, I would like to trigger the camera for 100 ms with a specific shutter and a specific line rate (lines/sec).

And if I change the shutter or the line rate, the trigger time should stay the same (100 ms).

I don't know if this is possible with some parameter of the line scan camera.

Thanks a lot.

-

19 minutes ago, ensegre said:

PS: you may have to cast number arrays to U32 to avoid wraparound of the sum, if you have more than 32767 pixels, sorry I didn't pay attention to that.

Sorry, how can I do that? I do not understand it very well.

Thanks a lot again!
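To illustrate the wraparound mentioned in the quote, here is a small Python sketch. It assumes each white pixel contributes 1 to the sum and that the running total is held in a signed 16-bit integer (I16); `ctypes` is used only to imitate fixed-width integers, and the two function names are hypothetical.

```python
import ctypes


def sum_as_i16(values):
    """Accumulate in a signed 16-bit integer, wrapping on overflow."""
    total = ctypes.c_int16(0)
    for v in values:
        total = ctypes.c_int16(total.value + v)  # silently wraps past 32767
    return total.value


def sum_as_u32(values):
    """Accumulate in an unsigned 32-bit integer."""
    total = ctypes.c_uint32(0)
    for v in values:
        total = ctypes.c_uint32(total.value + v)
    return total.value


white_flags = [1] * 40000  # 40000 white pixels, each counted as 1

i16_total = sum_as_i16(white_flags)
u32_total = sum_as_u32(white_flags)
print(i16_total, u32_total)
```

The 16-bit total comes out negative once the count passes 32767, while the 32-bit total stays correct, which is why converting the array to U32 before summing avoids the problem.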

-

Hi to all.

I would like to get the number of white pixels in an image (that is, the white area).

I have seen histogram.vi, but I do not know how to use it for this.

Any help?

Thanks a lot!
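The histogram approach can be sketched in plain Python, assuming an 8-bit image where white means the value 255. The `histogram` function here is a hypothetical stand-in for what a histogram VI returns: one count per gray level, so the white area is simply the last bin.

```python
def histogram(image, levels=256):
    """Return a list where counts[v] is the number of pixels with value v."""
    counts = [0] * levels
    for row in image:
        for pixel in row:
            counts[pixel] += 1
    return counts


# Hypothetical 3 x 4 binary image: 0 = black, 255 = white.
image = [
    [0, 255, 255, 0],
    [0, 255, 0, 0],
    [255, 255, 255, 0],
]

counts = histogram(image)
white_pixels = counts[255]
black_pixels = counts[0]
print(white_pixels, black_pixels)
```

If the image is not strictly binary, a threshold would be needed first so that "white" is well defined.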

-

Hi to all,

First of all thanks a lot for reading this post and being able to help.

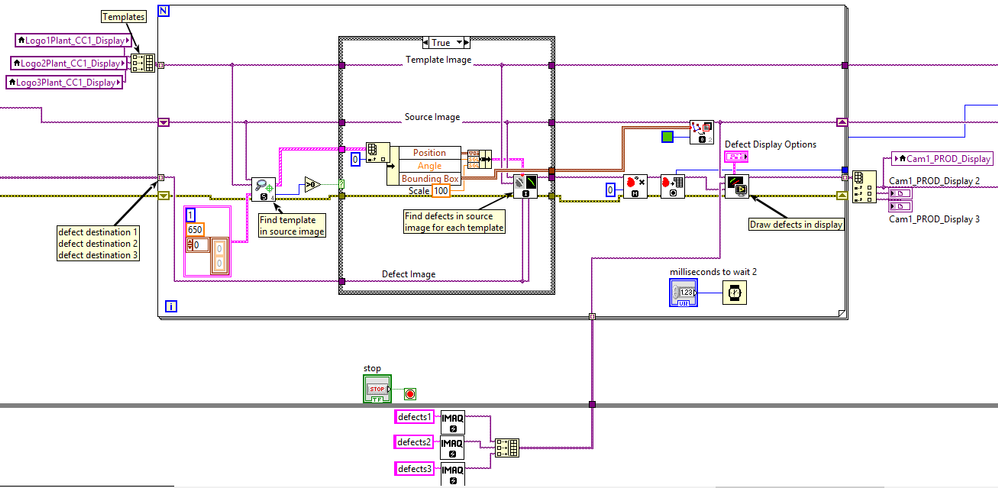

I am trying to use the IMAQ Compare Golden Template VI in a for loop.

This is what I am trying to do:

1- I have 3 templates and 1 inspection image.

2- The templates appear in the inspection image. Imagine that the inspection image shows the big numbers 1 2 3, and the templates are 1, then 2, and then 3. A for loop runs 3 iterations.

3- In each iteration I pass one template. The IMAQ pattern matching VI finds the template in the image (returning its bounding box), and then Compare Golden Template returns the defects (the differences between the template and the image).

I am basing the program on this example: "LabVIEW\examples\Vision\Golden Template Comparison\Golden Template Inspection.vi"

My program is as follows:

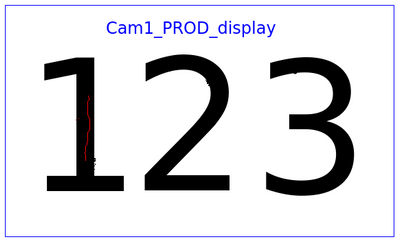

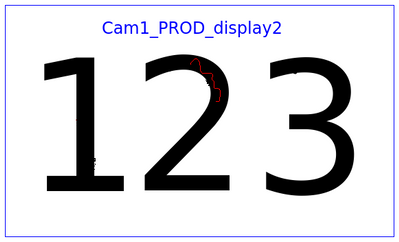

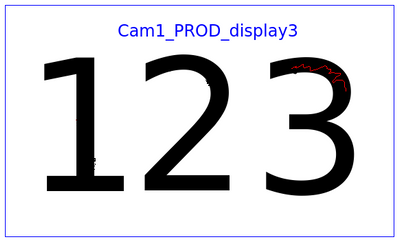

In the program I have three images (Cam1_PROD_display, Cam1_PROD_display2, Cam1_PROD_display3), each showing the defects drawn for one template: defects in template 1 are drawn in Cam1_PROD_display, defects in template 2 in Cam1_PROD_display2, and defects in template 3 in Cam1_PROD_display3. Something like this:

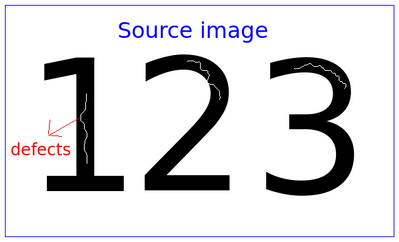

- There are three templates (1, 2, 3):

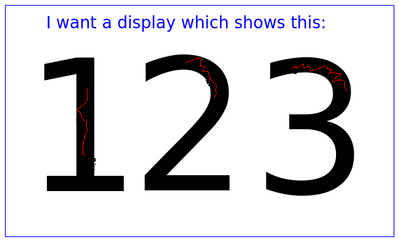

- The displays Cam1_PROD_display, Cam1_PROD_display2, and Cam1_PROD_display3 each show the following (with defects in red):

- But I want a single display that shows all the defects in one image, like this:

How can I do that with the code that I have?

I think I have to merge the three displays into one.

Any ideas?

Thanks a lot.
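One possible way to think about the merge, sketched in plain Python rather than LabVIEW: treat the defects of each template as a boolean mask the size of the inspection image, OR the three masks together, and draw the result once. The mask representation and the names `merge_masks` and `draw_defects` are assumptions for illustration, not IMAQ functions.

```python
def merge_masks(masks):
    """Pixel-wise OR of equally sized boolean masks."""
    height, width = len(masks[0]), len(masks[0][0])
    return [
        [any(m[y][x] for m in masks) for x in range(width)]
        for y in range(height)
    ]


def draw_defects(gray_image, mask, color=(255, 0, 0)):
    """Return an RGB copy of a grayscale image with masked pixels in `color`."""
    return [
        [color if inside else (p, p, p) for p, inside in zip(img_row, mask_row)]
        for img_row, mask_row in zip(gray_image, mask)
    ]


# Hypothetical 2 x 3 inspection image and one defect mask per template.
inspection = [[10, 20, 30], [40, 50, 60]]
defects_1 = [[True, False, False], [False, False, False]]
defects_2 = [[False, False, False], [False, True, False]]
defects_3 = [[False, False, True], [False, False, False]]

all_defects = merge_masks([defects_1, defects_2, defects_3])
display = draw_defects(inspection, all_defects)
print(all_defects)
```

An equivalent alternative is to keep a single display image and draw each iteration's defects onto it, instead of starting from a fresh copy every time.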

-

Hello to all.

I am looking for good user interface elements. I would like to find free toolkits with buttons, controls, indicators, combo boxes, and so on, like this one: ni theme.

Where can I find more?

I just want ideas for creating a professional interface.

Thanks a lot.

-

On 8/3/2017 at 9:17 PM, rolfk said:

The quick answer is: It depends!

And any more elaborate answer boils down to the same conclusion!

Basically the single most advantage of a 64 bit executable is if your program uses lots of memory. With modern computers having more than 4GB of memory, it is unlikely that your application is trashing the swap file substantially even if you get towards the 2GB memory limit for 32 bit applications. So I would not expect any noticeable performance improvement either. But it may allow you to process larger images that are impossible to work with in 32 bit.

Other than that there are very few substantial differences. Definitely in terms of performance you should not expect a significant change at all. Some CPU instructions are quicker in 64 bit mode since it can process 64 bits in one single go, while in 32 bit mode this would require 2 CPU cycles. But that advantage is usually made insignificant by the fact that all addresses are also 64 bit big and therefore a single address load instruction moves double the amount of data and therefore the caches are also filled double as fast.

This of course might not apply to specially optimized 64 bit code sections for a particular algorithm, but your typical LabVIEW application does not consist of specially crafted algorithms to make optimal use of 64 bit mode, but instead is a huge collection of pretty standard routines that simply do their thing and will basically operate exactly the same in both 32 bit and 64 bit mode.

If your application is sluggish, this is likely because of either hardware that is simply not able to perform the required operations well within the time you would wish, or maybe more likely some programming errors, like un-throttled loops, extensive and unnecessary disk IO, frequent rebuilding of indices or selection list, building of large arrays by appending a new element every time, or synchronization issues. So far just about every application I have been looking at because of performance troubles, did one or more of the aforementioned things, with maybe one single exception where it simply was meant to process really huge amounts of data like images.

Trying to solve such problems by throwing better hardware at it is a non-optimal solution, but changing to 64 bit to solve them is a completely wasteful exercise.

Thanks a lot for replying.

Indeed, changing from 32 bits to 64 bits did not change anything. In the end I decided to check the different issues in my program, and that was the solution.

Especially the synchronization issues.

Thanks a lot.

-

On 24/2/2017 at 2:27 PM, hooovahh said:

Is this just detecting a rising and falling edge? The OpenG boolean palette has Boolean Trigger, and there is an NI function Boolean Crossing. Beyond that you can do the same thing with a feedback node and a compound arithmetic function configured correctly.

I will try it! Thanks a lot!
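The feedback-node idea from the quote can be written out as a small Python sketch: remember the previous value, then a rising edge is "current AND NOT previous" and a falling edge is "previous AND NOT current". The class name `EdgeDetector` is hypothetical.

```python
class EdgeDetector:
    """Remember the previous sample, as a feedback node would."""

    def __init__(self, initial=False):
        self._previous = initial

    def update(self, current):
        """Return (rising, falling) for this sample."""
        rising = current and not self._previous
        falling = self._previous and not current
        self._previous = current
        return rising, falling


detector = EdgeDetector()
samples = [False, True, True, False, False, True]
edges = [detector.update(s) for s in samples]
print(edges)
```

Each output is True for exactly one call after a change, which is the "one shot" behavior.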

-

Hello to all.

I wonder if there is a free One Shot Rising/Falling VI in some library out there.

Something that works just like "one shot rising" and "one shot falling" from the Real-Time Module.

Thanks a lot.

-

4 hours ago, smithd said:

to just do this for display you'd use the imaq cast (convert to u8 or i8, be sure to bit shift by 4 or 8 depending on the source bit depth) and then bin using imaq resample with zero order sampling and x1=x0/2, y1=y0/2.

Thanks a lot.

Could you post a small example in LV using IMAQ Cast and Resample as you described?

One more thing: do I have to do this every time the camera snaps an image, right after each snap?

Thanks again.
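While waiting for a LabVIEW example, the two steps from the quote can be sketched in plain Python: the cast keeps the 8 most significant bits (a shift by 8 for 16-bit data, by 4 for 12-bit data), and the zero-order resample to half size keeps every second pixel in x and y. The function names and the sample values are hypothetical.

```python
def cast_to_u8(image, source_bits=16):
    """Keep the 8 most significant bits of each pixel."""
    shift = source_bits - 8  # 8 for 16-bit data, 4 for 12-bit data
    return [[pixel >> shift for pixel in row] for row in image]


def bin_by_two(image):
    """Zero-order decimation: keep every second pixel in x and y."""
    return [row[::2] for row in image[::2]]


# Hypothetical 4 x 4 image with 16-bit pixel values.
raw = [
    [0, 256, 512, 768],
    [1024, 2048, 4096, 8192],
    [16384, 32768, 65535, 300],
    [1, 2, 3, 4],
]

small = bin_by_two(cast_to_u8(raw))
print(small)
```

The result has a quarter of the pixels and half the bytes per pixel, so the display has roughly one eighth of the data to handle. This would apply only to the copy sent to the display; processing can still use the full image.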

-

14 hours ago, smithd said:

I had a similar issue with a large number of quickly-updating images, never really came up with a solid solution. Binning (cuts resolution in half for display) and casting (cuts data from 16 bits/pixel to 8) the image helps but as mentioned that increases processing time.

But if binning and casting take less time than handling a large, high-resolution image, that helps me.

By the way, how can I do binning and casting? In NI MAX?

14 hours ago, smithd said: Is this a real-time target?

My application runs on an industrial PC. The camera has to take 4 images every 130 ms, and the application has to process them in 200 ms. It runs on Windows 7.

-

First of all thanks a lot for replying!

2 hours ago, ensegre said:The application has to render a larger number of scaled pixels onscreen. The performance drop may be particularly noticeable on computers with weaker graphic cards (e.g. motherboards with integrated graphic chipsets) which defer part of the rendering computations to cpu.

Okay, so the problem is normal. Perfect!

2 hours ago, ensegre said:If you process smaller images, your processing time may also be proportionally smaller. Additionally, image transfer and memcopy times will be smaller. But images at lower resolution contain less information. You are the one who has to decide how small the resolution can be, in order to still derive meaningful results from the analysis.

If the bottleneck is rendering, you could constrain the GUI so that the display never gets too wide, or the zoom factor never too high. Another popular approach is to process every image from the camera, but to display only one image every so many.

The user needs a big display, so the best solution here is to process every image from the camera but display only one image every so many.
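The "display only one image every so many" approach can be sketched in Python as follows. The `process` and `display` callables and the `display_every` parameter are hypothetical stand-ins for the edge detection and the image indicator.

```python
def run_inspection(frames, process, display, display_every=5):
    """Process every frame, but send only one in `display_every` to the display."""
    results = []
    for index, frame in enumerate(frames):
        results.append(process(frame))  # every frame is processed
        if index % display_every == 0:
            display(frame)  # only some frames are rendered
    return results


shown = []
results = run_inspection(
    frames=range(12),                 # stand-ins for camera images
    process=lambda frame: frame * 2,  # stand-in for edge detection
    display=shown.append,
    display_every=5,
)
print(shown)
```

The processing results do not depend on what is displayed, so skipping display updates changes only the rendering load.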

My question is this: I have a ROI property node on my display. Can I process the image with that ROI even if I don't wire the final processed image to the display?

2 hours ago, ensegre said:Depends on the camera. Look for image size, ROI (AOI), binning.

My camera is a basler acA1300-30gm.

I have used this VI: imaq_setimagesize. The problem is that I can't tell whether it really changes the resolution. Do I have to run this VI each time the camera snaps an image, or just once (right after I run ImaqCreate)?

Thanks again.

-

Hello to all.

First of all thanks a lot for reading this post and being able to help.

I have noticed the following problem:

My application uses a camera that takes images (snap mode), and the application processes them (it detects some edges in the image). It works fine.

But when I make the display bigger, my application takes longer to process the images (and for me that is crucial). I think this happens because in this case my application has to process a bigger image (bigger display = bigger image?).

So maybe the problem would be solved if my camera took images at a lower resolution.

How can I change the resolution of the images captured by my GigE camera? In NI MAX or in LabVIEW?

Thanks a lot!

-

Thanks to all.

In the end I used two parallel loops. That way it works fine.

-

44 minutes ago, drjdpowell said:

You need some kind of parallel structure so your dialog can go on in parallel to your hardware handling. I actually use a special version of dialogs that are asynchronous and send their results back as a message, but the more common way is to have a separate loop of some sort.

Could you tell me how I can use the kind of asynchronous dialogs that you use?

Thanks a lot.

-

Hello to all.

First of all thanks a lot for reading this post.

I have a while loop with an event structure.

The event structure has the following two events:

· Timeout: every 25 ms, this event reads variables from the PLC.

· Button event: when the user presses this button, a dialog box appears to ask the user "yes or no".

There may be a problem here: if the user takes more than 25 ms to answer the dialog box, the timeout event does not run during that time, and it is critical for me.

Is there any way to keep the program running, without stopping, while it waits for the user to answer the dialog box?

Or is the best way to put another event structure, with the button event, in another while loop?

Thanks a lot.
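The "another while loop" option can be sketched in Python with a thread standing in for the second loop. This is only an analogy to parallel LabVIEW loops: `poll_plc` and `ask_user` are hypothetical, the PLC read is replaced by recording a timestamp, and the slow user is simulated with a 0.3 s sleep.

```python
import threading
import time


def poll_plc(stop_event, samples, period_s=0.025):
    """Polling loop on its own thread, so a dialog cannot block it."""
    while not stop_event.is_set():
        samples.append(time.monotonic())  # stand-in for reading PLC variables
        stop_event.wait(period_s)         # waits 25 ms, wakes early on stop


def ask_user():
    """Stand-in for a blocking yes/no dialog answered slowly by the user."""
    time.sleep(0.3)
    return "yes"


stop_event = threading.Event()
samples = []
poller = threading.Thread(target=poll_plc, args=(stop_event, samples))
poller.start()

answer = ask_user()  # blocks only this loop; the polling keeps running
stop_event.set()
poller.join()
print(answer, len(samples))
```

The polling loop keeps collecting samples for the whole time the dialog is open, because the dialog blocks only the loop that opened it.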

-

Hello to all.

I am using NI MAX to get images from a camera (snap mode and hardware trigger).

The camera is a Basler 1300-30gm.

The problem is as follows:

My camera snaps 4 images (1 image every 40 ms).

In pylon Viewer (the camera's official software) I can see the four images correctly.

The problem is that when I try to view the 4 images in NI MAX, sometimes the camera cannot take the 4th image, as if there were not enough time to take the 4th image (or to process it for display in NI MAX).

So maybe there is a configuration parameter in NI MAX to solve this.

Any ideas? How can I solve it?

I set the packet size to 8000. Could the packet size be the problem?

Thanks a lot.

-

On 2/2/2017 at 5:57 PM, Porter said:

For #3, check the "MB_Master Simple Serial.vi" example located in "<LabVIEW>\examples\Plasmionique\MB Master\" or search for it in the Example Finder.

Yes! That simple!! Thanks!!

I have another question.

In my application I have to constantly read a few variables from the PLC (coils, registers...). For example, if a coil is 1, then an event occurs in my application (in an event structure).

Sometimes, as I said in #1, some variables are not read.

So what is the best way to poll? Just one while loop reading all the variables (coils and registers) every 10 ms, for example, and then passing the information to the other while loops through local variables?

Thanks a lot!
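One way to picture the single polling loop, sketched in Python: each poll reads all variables into a snapshot, the snapshot is compared with the previous one, and only the changes are forwarded to the other loops (for instance through a queue instead of local variables). The variable names and the helper `changed_variables` are hypothetical.

```python
def changed_variables(previous, current):
    """Return {name: (old, new)} for every variable whose value changed."""
    return {
        name: (previous.get(name), value)
        for name, value in current.items()
        if previous.get(name) != value
    }


# Hypothetical snapshots from two consecutive polls of the PLC.
poll_1 = {"coil_start": 0, "coil_alarm": 0, "reg_speed": 120}
poll_2 = {"coil_start": 1, "coil_alarm": 0, "reg_speed": 125}

events = changed_variables(poll_1, poll_2)
print(events)
```

Forwarding changes rather than raw values means a consumer loop reacts once per transition, and a coil that goes to 1 is not missed just because the consumer happened to look at the wrong moment.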

-

On 10/2/2017 at 4:59 AM, smithd said:

If your code is using a large amount of memory ~2 GB or greater then yes you might benefit from 64 bit. I'm guessing at the processor level there are also some differences in performance -- for example I believe x86_64 has more processor registers, making some calculations quicker, but on the other hand instructions are larger which uses more memory just for the code itself. Long story short the only way to really answer that is "try it" and the likely answer is not more than a few percent faster or slower.

The better starting point would be to use the profile http://digital.ni.com/public.nsf/allkb/9515BF080191A32086256D670069AB68 and DETT (if you already own it, http://sine.ni.com/nips/cds/view/p/lang/en/nid/209044) tools to evaluate your performance. The profile tool is kind of weird to use and understand but its handy. DETT gives you a trace which can help you detect things like reference leaks (you forget to destroy your imaq image ref, for example) or large memory allocations (building a huge array inside of a loop). And of course there is no substitute for breaking down your code into pieces and testing the parts separately.

Thanks a lot for replying.

Are there any examples of how to use the DETT toolkit?

Thanks.

-

On 9/2/2017 at 2:56 PM, hooovahh said:

This document talks about the 32 to 64 bit comparison.

http://www.ni.com/white-paper/10383/en/

The main take away is that not all toolkits are 64 bit compatible and you may not be able to make your program in 64 bit. Vision appears to be one that is supported so you might be okay. I've never made a 64 bit LabVIEW program but I've heard the benefits are minimal. You can give it a try but if your program is running slow now, don't expect it to run fast in 64 bit. A much larger contributor to performance is going to be how the program was written, and the state of the machine running it. Is the computer slow in general? Then this program likely isn't going to run any better. If you do make the switch be sure and report what your experience is.

I am going to use the performance toolkit to improve the program's speed. But I am also going to check whether a 64-bit program is better.

Thanks!

-

On 9/2/2017 at 2:37 PM, hooovahh said:

I don't have IMAQ installed, but I used a similar technique for detecting the edges of LCD segments. First get consistent lighting and positioning. Good, consistent, and uniform lighting is an art form. Once you have that turn the image into a binary one. You'll want some kind of thresholding applied and maybe a routine that allows you to adjust the levels. Alternatively you could try to auto detect the binary levels needed using the histogram data. From there I'd use pure math and abandon any premade NI functions. Partially because I'm not familiar with all of them, but also because if they fail to work properly I can't debug them and figure out why. In this case the math probably shouldn't be too hard. Your image is now just black or white pixels and you can apply a small filter to maybe eliminate any noise (like remove any black pixels not surrounded by black pixels). Then I'd split the image in half and process each half looking for the black pixels that are the farthest right, and farthest left. Then row by row look to see which points are the closest, and calculate the number of pixels between them.

Thanks a lot for answering.

I have just done it with the IMAQ palette. It is easy to do.

Thanks a lot.

-

Hello to all.

I have a 32-bit application built with Application Builder in 32-bit LabVIEW 2015 SP1.

It runs on a 64-bit OS, but sometimes it is slow.

So I think that one solution could be to create a 64-bit application.

Will performance improve going from 32 bits to 64 bits?

I am using the Vision Acquisition Software toolkit and its Vision run-time.

Thanks a lot.

Run time to another PC, associate application to a specific PC

in LabVIEW General

Hi, first of all thanks a lot for replying.

I have the application with a run-time license on a specific PC. That PC is broken now, so I would like to use that run-time license on a new PC. Is that possible, or do I have to buy another run-time license?

Thanks again.