Mellroth

Posts: 602

Days Won: 16


Posts by Mellroth

-


Added support for SGL and EXT types. Uploaded package to Code Repository: http://lavag.org/files/file/259-floating-point-almost-equal/
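The package's own algorithm isn't described in this thread. As a rough sketch of the general idea only, one common way to do an "almost equal" test is to use a relative tolerance scaled by the machine epsilon of the data type. The `EPS` table and `almost_equal` helper are hypothetical names. EXT is assumed to be the 80-bit x87 extended format. Python floats are always double precision, so the SGL and EXT entries change only the tolerance, not the arithmetic.

```python
import sys

# Machine epsilon per floating-point type (gap between 1.0 and the next representable value).
EPS = {
    "SGL": 2.0 ** -23,              # IEEE 754 single precision
    "DBL": sys.float_info.epsilon,  # IEEE 754 double precision, 2**-52
    "EXT": 2.0 ** -63,              # assumed: x87 80-bit extended (64-bit significand)
}

def almost_equal(a: float, b: float, precision: str = "DBL", ulps: float = 1.0) -> bool:
    """Hypothetical helper: True if a and b differ by at most `ulps` epsilons,
    scaled by the larger magnitude of the two values."""
    tolerance = ulps * EPS[precision] * max(abs(a), abs(b))
    return abs(a - b) <= tolerance

print(0.1 + 0.2 == 0.3)                       # False
print(almost_equal(0.1 + 0.2, 0.3))           # True
print(almost_equal(1.0, 1.0 + 1e-10))         # False
print(almost_equal(1.0, 1.0 + 1e-10, "SGL"))  # True
```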

Nice idea, but please don't use pale yellow for add-ons.

The pale-yellow background color makes it easy to mistake the VI for a LabVIEW primitive, and I think NI also recommends against it.

/J

-

I need a wiring solution for this:

exp(sqrt(-1)*angles)

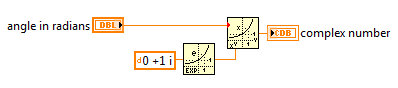

Rewrite exp(i*v) as exp(i)^v, or in LabVIEW notation

LabVIEW 2010:
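The rewrite relies on the identity exp(i·v) = exp(i)^v. This identity holds for real v when the power is computed on the principal branch, because the argument of exp(i) is 1 rad, which lies inside (-π, π]. Outside LabVIEW, you can check this numerically in Python. The values in `angles` below are arbitrary test angles, not data from the thread.

```python
import cmath
import math

angles = [0.0, 0.5, math.pi / 3, 2.0, -1.25, 10.0]  # arbitrary real test angles

base = cmath.exp(1j)  # exp(i), computed once
for v in angles:
    direct = cmath.exp(1j * v)  # exp(i*v)
    via_power = base ** v       # exp(i)^v, principal-branch complex power
    print(f"{v:7.3f}  |difference| = {abs(direct - via_power):.2e}")
```

For real angles, the differences are at floating-point rounding level. For complex v the identity no longer holds in general.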

/J

-

I've had issues with VIPM 2014 not resolving item paths correctly. To be more precise, I rebuilt an old package with VIPM 2014, and none of the additional installation directories would install properly. In this case the problem was that previous versions of VIPM used '\' as a separator, but the 2014 version had switched to '/'.

http://forums.jki.net/topic/2406-frustrated-by-vipm-2014/page__view__findpost__p__5830

You could check the OpenG spec file and have a look at the different paths.
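If the spec file is plain text, a quick script can spot and normalize the old-style separators. This sketch assumes that backslashes in the file only ever appear as path separators, so verify that before rewriting anything. The `normalize_separators` name and the two sample lines are made up and are not real spec-file syntax. Nothing here reflects how VIPM itself parses the file.

```python
from typing import List, Tuple

def normalize_separators(text: str) -> Tuple[str, List[int]]:
    """Replace backslashes with '/' and report which (1-based) lines were changed."""
    changed: List[int] = []
    out_lines: List[str] = []
    for number, line in enumerate(text.splitlines(keepends=True), start=1):
        if "\\" in line:
            changed.append(number)
            line = line.replace("\\", "/")
        out_lines.append(line)
    return "".join(out_lines), changed

# Made-up example lines, not actual VIPM/OpenG spec-file content.
old = 'Dir A="<application>\\plugins\\tools"\nDir B="<application>/docs"\n'
new, lines = normalize_separators(old)
print(lines)  # [1]
print(new)
```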

/J

-

What about this:

1. First find the chirp by edge trigger or peak detect etc.

2. Define window, either manually or through an algorithm that can detect when echo is finished, and get only that data.

3. Remove the DC component of the windowed data.

4. Measure the time between zero crossings
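The four steps above can be sketched in Python as follows. This minimal sketch makes three assumptions:

* Step 1 uses a simple rising-edge trigger.
* Step 2 uses a fixed-length window chosen by hand.
* Step 4 uses linear interpolation between samples to place each zero crossing.

The function names and the synthetic test signal are hypothetical.

```python
import math
from typing import List, Optional, Sequence

def find_trigger(x: Sequence[float], level: float) -> Optional[int]:
    """Step 1: index of the first rising edge through `level` (simple edge trigger)."""
    for i in range(1, len(x)):
        if x[i - 1] < level <= x[i]:
            return i
    return None

def remove_dc(x: Sequence[float]) -> List[float]:
    """Step 3: subtract the mean of the windowed data."""
    mean = sum(x) / len(x)
    return [v - mean for v in x]

def zero_crossing_times(x: Sequence[float], fs: float) -> List[float]:
    """Step 4 helper: times (s) of sign changes, refined by linear interpolation."""
    times = []
    for i in range(1, len(x)):
        a, b = x[i - 1], x[i]
        if (a < 0 <= b) or (a >= 0 > b):
            frac = a / (a - b)  # fraction of a sample step where the line crosses zero
            times.append((i - 1 + frac) / fs)
    return times

def echo_crossing_intervals(x: Sequence[float], fs: float,
                            level: float, window_len: int) -> List[float]:
    """Steps 1-4: trigger, window, remove DC, then time between zero crossings."""
    start = find_trigger(x, level)
    if start is None:
        return []
    window = list(x[start:start + window_len])  # Step 2: fixed-length window
    centered = remove_dc(window)
    t = zero_crossing_times(centered, fs)
    return [t2 - t1 for t1, t2 in zip(t, t[1:])]

# Synthetic test: 100 quiet samples, then a 50 Hz tone on a DC offset of 2.0.
fs = 1000.0
sig = [0.0] * 100 + [2.0 + math.sin(2 * math.pi * 50 * n / fs + 0.3) for n in range(200)]
intervals = echo_crossing_intervals(sig, fs, level=1.0, window_len=200)
print(len(intervals), round(intervals[0], 6))  # 18 0.01
```

Half-period intervals of 0.01 s correspond to the 50 Hz test tone. With a real chirp, the intervals would change over the duration of the echo.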

/Jonas

-

For future reference, and perhaps a different way to fix this at the root cause...

Justin:

I booted into Windows from the Bootcamp partition, so the Windows setting really was the root cause for me.

/J

-

Solved. Sincerely -- thank you! I will report this back via support channels to NI on this issue.

You're welcome, Jack.

It is actually quite funny that I first noticed the issue right after I read through this thread... ;-)

/Jonas

-


I cannot figure this out.

I made some changes to the private data of a class that caused the various unbundle nodes to get mislinked when they tried to auto-select the right new elements, leaving several broken methods. No problem, I thought, I will revert to the previous clean copy in source-code control (using TortoiseHg). However, whenever I reopen the class, the unbundle nodes are still mislinked and VIs are broken! I reverted the entire codebase to a week ago to be sure I had a good copy, and I rebooted the computer, but it is still broken! All my methods, even the unbroken ones, say they need to resave because “Type Definition modified”.

My question is: what is modifying my “Type Definition”? It can’t be any of my source code, so what can it be? Where is the change located on disk?

Do you have the compiled code separated from source?

/J

-


I noticed this just this morning, but in Windows 7 (x64) running in Bootcamp (LabVIEW 2014).

My problem seems related to the input locale, or more specifically, to which keyboard type was used.

I’m using an external keyboard, but Windows defaults to the “Swedish (Apple)” keyboard. For some reason, that keyboard doesn’t forward multiple modifiers to LabVIEW (all keyboards work in MS Word). In this little test VI, pressing shift-a, ctrl-a and shift-ctrl-a should all give me scan code 30 on my keyboard.

But the Apple keyboard doesn't react when both modifiers are used. By switching the keyboard layout while the VI is running, the ctrl-shift-a functionality comes and goes.

So my solution was to go to the Control Panel and change the default input to use the external keyboard layout instead of the Apple type. I also explicitly changed the keyboard type while LabVIEW was open.

Hope this helps someone.

/J

-


Is it possible to copy the VI from a previous version, rename it to "Open File in Default Program" and then use it in 2014?

On a specific platform, maybe, but to get platform independence NI has to maintain different versions for different platforms.

Different OS versions could have different commands to open files/folders, and I really prefer to have NI do the work ;-)
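For comparison, here is roughly what a platform-independent "open in default program" has to do under the hood, sketched in Python rather than LabVIEW. The function only builds the OS-specific command. The `open_command` name is hypothetical, and this is not how NI's VI is implemented.

```python
import sys
from typing import List

def open_command(path: str, platform: str = sys.platform) -> List[str]:
    """Build the command that asks the OS to open `path` with its associated program."""
    if platform.startswith("win"):
        # 'start' is a cmd.exe built-in; the empty "" argument is the window title.
        return ["cmd", "/c", "start", "", path]
    if platform == "darwin":
        return ["open", path]
    # Most Linux desktops provide xdg-open (from freedesktop.org xdg-utils).
    return ["xdg-open", path]

print(open_command("report.pdf", "darwin"))  # ['open', 'report.pdf']
```

To actually launch the file, pass the list to `subprocess.run(...)`. On Windows, Python's `os.startfile(path)` does the same job without going through cmd.exe. Either way, each platform needs its own branch, which is the maintenance burden mentioned above.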

-

Thanks for the clarification Darren.

I agree that the name now matches the action, but the VI has acted like this since at least LabVIEW 2009, so I suspect that a number of users will end up with the same result as me. This is especially likely since I also know that this "trick" has been suggested by people at NI, referring to two VIs that are in the same llb as this one, namely:

- vi.lib\Platform\browser.llb\Open Acrobat Document.vi

- vi.lib\Platform\browser.llb\Open a Document on Disk.vi

Any plans for a new platform independent VI to do the action this VI used to do?

/J

-

Thanks,

I guess I might have to do something like this, but I really liked the old way since that was platform independent (NI handled the different platforms).

/J

-

In previous versions of LabVIEW we have used the "Open URL in default browser" VI (with a path input) to open folders, files, etc., using the program associated with the file extension.

LabVIEW 2014 seems to have changed this behavior, so that documents are now actually opened inside the default browser. Folders are treated differently depending on the default browser. With IE as the default browser, a folder path opens a Windows Explorer window; with Chrome, the folder content is listed in a new tab.

The llb containing the Open URL... VI (<LabVIEW>\vi.lib\Platform\browser.llb) also contains "Open Acrobat Document.vi", but since that VI uses "Open URL...", it also opens in the default browser.

Is there any specific switch that can be used to tell LabVIEW to still use the default program instead of the browser?

/J

-

I saw something in the GenerateCode ability VI for one of NI's XNodes that says to open the VI in the NI.LV.XNode context. Would this let me do that?

You can open a VI in any context you want by using the code Jack showed earlier to get the context references.

Then use any of these references as an input to the Open VI method, to open your VI.

/J

-

Just thinking further, I wonder if LabVIEW RT could be set to run on one core (or several) of a Windows machine? I wouldn't be surprised if NI had tried this at some stage, and now it's easy to get a dozen cores or more.

The NI Real-Time Hypervisor supports this, but only on dedicated targets http://sine.ni.com/nips/cds/view/p/lang/sv/nid/207302.

/J

-

I guess the answer is cost. Not only the hardware itself, but we are very tight when it comes to development time, as we are a small team and have a lot on our plate.

We are using the standard NI-CAN Frame API.

Our problem is not so much on the incoming side. The biggest issue is that if our application doesn't send a specific message at least every 50ms let's say, the customer's hardware enters a fault protection mode... And the data of this message keeps changing, so we can't just set a periodic message and let the low level CAN driver handle it.

That really helps, thanks!

Hooovahh already mentioned the XNET CAN API, and I think you would see a big performance increase by using XNET-supported HW instead of legacy CAN.

We were using CAN on a Pharlap system and needed to reduce jitter so that we could run simulations at 1 kHz, acting as up to 10 CAN bus masters.

It took a lot of tweaking to get CAN running the way we wanted. We used the Frame API and a frame-to-channel converter of our own (which supported multi-frame messages for J1939), and we changed some things in the NI-CAN driver, e.g.:

* changed the reentrancy setting for some VIs (e.g. ncReadNet)

* moved indicators out of case structures

* pre-allocated buffers for nxReadMult

We were also actively participating in the beta testing for XNET 1.0, and once we got the XNET HW our CAN performance issues were gone.
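The frame-to-channel converter itself isn't shown in the thread. At its simplest, converting a frame to a channel means pulling a bit field out of the 8-byte payload and applying a scale and offset. The sketch below assumes Intel (little-endian) byte order and unsigned signals. The `extract_signal` name and the signal layout in the example are made up. Multi-frame J1939 transport is not handled here.

```python
def extract_signal(data: bytes, start_bit: int, length: int,
                   scale: float = 1.0, offset: float = 0.0) -> float:
    """Decode an unsigned little-endian (Intel) signal from a CAN payload.

    Bit n of the payload is bit (n % 8) of byte (n // 8).
    """
    if start_bit + length > len(data) * 8:
        raise ValueError("signal does not fit in the payload")
    as_int = int.from_bytes(data, byteorder="little")
    raw = (as_int >> start_bit) & ((1 << length) - 1)  # isolate the bit field
    return raw * scale + offset                        # convert to engineering units

# Hypothetical signal: 16 bits starting at byte 3, 0.125 units per bit.
payload = bytes([0x00, 0x00, 0x00, 0x40, 0x1F, 0x00, 0x00, 0x00])
print(extract_signal(payload, start_bit=24, length=16, scale=0.125))  # 1000.0
```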

/J

-

Thanks for the response.

Yes, I'm using timed loops. Most of the time only one timed loop is running; it is responsible for broadcasting some communication values up to the PC. It's set to low priority and its period is 200 ms. I recently went back and replaced my low-priority timed loops with while loops with waits, and it doesn't seem to help at all (although I've only run it once or twice). Related follow-up question: if a timed loop only has a little work to do in a longer period (say 2 ms of work in a 10 ms period), is it affecting my CPU more than I would think?

I've done some CPU profiling with the RT Trace Toolkit, and there don't seem to be any spikes. I usually have a pretty healthy amount of time in the "VxWorks Null thread".

I'm currently investigating the possibility of a bad 9512 module (admittedly it's a long shot). I have 5 axes that I need to control. 4 of them are on an Ethernet RIO and all work fine; the one axis that is plugged into the cRIO is the one giving me problems.

What cRIO are you using?

I've seen very bad performance with the older range of cRIOs (VxWorks), and was told that this was because they had a very limited number of threads.

The point was that anything with a sleep function, like TimedLoop, Dequeue, Enqueue etc., caused the performance to go down and caused a lot of jitter.

Our solution was to move to a more poll-based design rather than an interrupt-driven one.
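Here is a rough Python analogue of the poll-based idea, not LabVIEW RT code. Instead of a consumer that blocks (sleeps) on a queue, each cycle drains whatever is pending without waiting. The loop keeps its own timing. The sketch only illustrates the design choice; it says nothing about VxWorks thread limits. The `drain` name is hypothetical.

```python
import queue
from typing import Any, List

def drain(q: "queue.Queue[Any]") -> List[Any]:
    """Poll: take everything currently in the queue without ever blocking."""
    items: List[Any] = []
    while True:
        try:
            items.append(q.get_nowait())
        except queue.Empty:
            return items

q: "queue.Queue[int]" = queue.Queue()
for value in (1, 2, 3):
    q.put(value)

print(drain(q))  # [1, 2, 3]
print(drain(q))  # [] (returns immediately instead of waiting)
```

In a periodic loop, `drain` would be called once per iteration. The loop's timing is then set by the loop itself rather than by when data happens to arrive.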

/J

-

You will have to escape special characters like the backslash (`\`) for Match Pattern to work.

In the TRUE case the backslash is present, and it unintentionally escapes the next character in the search string.
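Regular expressions in other languages have the same pitfall. In Python, for example, `re.escape` makes every special character in a search string literal, including the backslash. This example uses Python's `re` module, not LabVIEW's Match Pattern, whose set of special characters differs.

```python
import re

text = r"log saved to C:\temp\run1.txt"
needle = r"C:\temp"  # contains a backslash followed by 't'

# Unescaped, the regex engine reads "\t" as a tab character, so this finds nothing.
print(re.search(needle, text))  # None

# Escaped, the backslash is matched literally.
match = re.search(re.escape(needle), text)
print(match.group(0))  # C:\temp
```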

/J

-

Thanks for the reminder.

I'll still stick to the DataLog Refnum because of the type checking.

/J

-

LabVIEW is too clever to let you do that, but there's a trick:

It is neat, but I would cast and store a Data Log refnum instead.

That way, I believe you'll get a cast that works regardless of whether the DVR is in a 32- or 64-bit environment.

You'll also be able to better detect bad wiring because of the strict type of the Data Log Refnum.

/J

-

So, the best workaround we could come up with on our end was to force a recompile of the Excel_Print.vi on every installed system by installing a version saved in an older LabVIEW. We figured requiring a save of the VI was less of a burden on users than requiring an edit of the diagram (deselecting and reselecting the method).

But, wouldn't this mean that the workaround only works if LabVIEW is installed after the ActiveX components?

If I make an update to the ActiveX component after LabVIEW has recompiled the VI, I would still have to fix this manually?

What if you add another ActiveX property/method to the BD that is actually not called, would this force the VI to detect the change in the external component correctly?

/J

-

Hello

I’m new to LabVIEW and was hoping someone could help. I am sorry in advance if I ask basic questions. Please keep in mind that I am new, but I am willing to learn.

I am trying to send data from a serial port and plot it, but the data comes as an array of three values at a time. I want to learn how to split the array of three into individual values, because I would like to plot each value on a graph against time. The data is fed continuously, with different values each second.

Hope that makes sense.

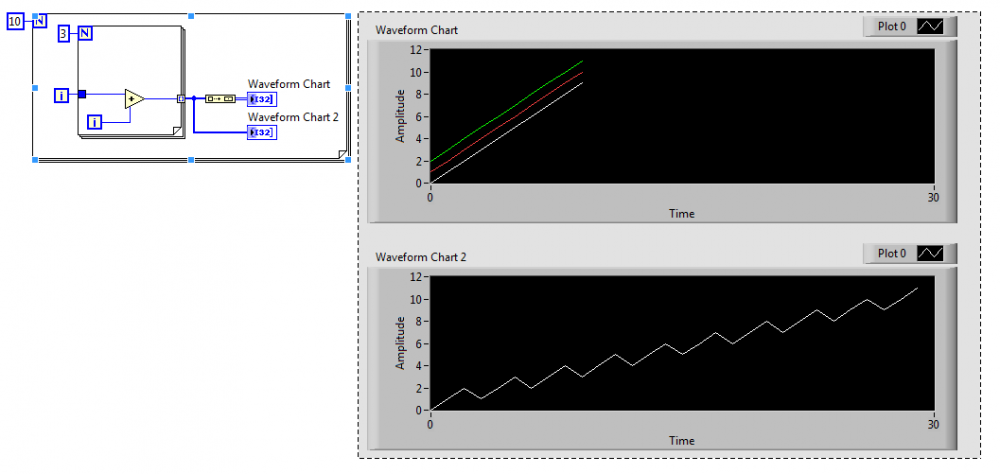

I guess you are using a WfmChart and trying to plot the array values in the chart as they arrive?

A 1D array fed into a WfmChart will update one plot with three new values.

But if you just add a Build Array on the 1D array, the data will be plotted as three individual traces.
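Outside LabVIEW, the "one trace per element" idea amounts to distributing each incoming 3-value sample across three separate series. This sketch assumes that each serial reading arrives as a comma-separated text line. The line format and the `split_into_traces` name are hypothetical.

```python
from typing import List

def split_into_traces(lines: List[str], n_traces: int = 3) -> List[List[float]]:
    """Turn readings like '1.0,2.0,3.0' into one list of values per trace."""
    traces: List[List[float]] = [[] for _ in range(n_traces)]
    for line in lines:
        try:
            values = [float(field) for field in line.strip().split(",")]
        except ValueError:
            continue  # skip empty or corrupted readings
        if len(values) != n_traces:
            continue  # skip incomplete readings
        for trace, value in zip(traces, values):
            trace.append(value)
    return traces

readings = ["1.0,10.0,100.0", "2.0,20.0,200.0", "3.0,30.0"]
print(split_into_traces(readings))
# [[1.0, 2.0], [10.0, 20.0], [100.0, 200.0]]
```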

/J

-

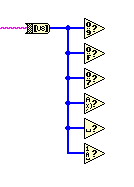

Today I learned you can pass an I32 into the To Upper Case prim. Did this totally by accident.

Has anybody ever used this feature before?

I have used it many times, and many of the string-comparison nodes accept numbers as well.

This can be quite useful when processing strings, e.g. stream data, and you want to stay in the U8-array domain for speed.
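The same trick can be sketched in Python by staying in the numeric (U8) domain instead of converting to strings. ASCII lowercase letters a to z are codes 97 to 122, and each uppercase counterpart is exactly 32 lower. Python's built-in `bytes.upper()` does this too; the explicit version just shows what happens per byte. The function name is hypothetical, and the sketch makes no claim about how LabVIEW's primitive handles non-ASCII codes.

```python
def to_upper_codes(codes):
    """Uppercase ASCII letters in a sequence of byte values, leaving other values alone."""
    return [c - 32 if 97 <= c <= 122 else c for c in codes]

stream = list(b"get temp?")
print(to_upper_codes(stream))                                 # [71, 69, 84, 32, 84, 69, 77, 80, 63]
print(bytes(to_upper_codes(stream)) == b"get temp?".upper())  # True
```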

/J

-


Could you share your code?

Comparing the LED arrays should be quite straightforward, and goes something like this:

1. Compare the LED arrays using NotEqual.

2. Find the index of the first TRUE element in the NotEqual output, e.g. using ArraySearch.

3. Continue to search for changes, starting at the last detected index + 1.

4. Stop searching when no more changes are found.
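The four steps translate directly to Python. In this sketch, `list.index(value, start)` plays the role of ArraySearch with a start index. Unlike ArraySearch, it raises `ValueError` instead of returning -1 when nothing is found. The function name is hypothetical.

```python
from typing import List, Sequence

def changed_indices(old: Sequence[bool], new: Sequence[bool]) -> List[int]:
    """Indices where two equal-length LED (Boolean) arrays differ."""
    not_equal = [a != b for a, b in zip(old, new)]  # step 1: element-wise NotEqual
    changes: List[int] = []
    start = 0
    while True:
        try:
            idx = not_equal.index(True, start)  # step 2: first TRUE at or after `start`
        except ValueError:
            break                               # step 4: no more changes found
        changes.append(idx)
        start = idx + 1                         # step 3: resume after the last hit
    return changes

old = [True, False, True, False, True]
new = [True, True, True, False, False]
print(changed_indices(old, new))  # [1, 4]
```

In Python, `[i for i, d in enumerate(not_equal) if d]` gives the same result in one pass. The loop form just mirrors the step-by-step search.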

/J

-

In my LV2013 installation I still have the "Real-Time->RT Utilities - RT Debug string.vi" that can write to the console interface.

I haven't had time to test it, but it looks to be the same as before.

/J

-


[CR] Floating Point Almost Equal

in Code Repository (Certified)


I responded to the other thread regarding the use of pale yellow as the background color, but for reference I'm adding a comment here as well.

/J