Mellroth

Posts: 602

Days Won: 16


Posts posted by Mellroth

-


...The most important thing of all is that I have sample rates of 1 kHz. Higher is even better.

So the array will be quite huge. The LabVIEW program has to run the whole day on my laptop.

I'm getting my info through UDP. There is a CAN network, and with an Analog2CAN converter I'm reading my potentiometers.

I get about 200 IDs in my program and I'm putting them in several arrays, but is this the best, least error-prone, fastest way?...

I agree with Shaun on this one, the best is probably to stream data to disk.

If you go the memory path anyway, be aware that keeping all data in one array might fail because there is not enough contiguous space in memory, even if the reported amount of free memory is sufficient. Many smaller arrays are therefore less likely to fail.
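To put numbers on this, and to show the many-smaller-arrays idea, here is a minimal Python sketch (an analogy, not LabVIEW code). It assumes 200 channels, 1 kHz and 8-byte double samples, taken from the question above; `bytes_needed` and `ChunkedBuffer` are hypothetical helpers. The buffer keeps data in fixed-size blocks, so growing it never requires one huge contiguous allocation.

```python
from array import array


def bytes_needed(channels: int, rate_hz: int, seconds: int, bytes_per_sample: int = 8) -> int:
    """Raw memory needed to keep every sample in RAM."""
    return channels * rate_hz * seconds * bytes_per_sample


class ChunkedBuffer:
    """Store samples in many fixed-size blocks instead of one contiguous array.

    Each block is a separate allocation, so growing the buffer never needs
    one huge contiguous region of memory.
    """

    def __init__(self, chunk_len: int = 100_000):
        self.chunk_len = chunk_len
        self.chunks = [array("d")]  # "d" = C double, 8 bytes on common platforms

    def append(self, value: float) -> None:
        if len(self.chunks[-1]) >= self.chunk_len:
            self.chunks.append(array("d"))  # start a new block when the last one is full
        self.chunks[-1].append(value)

    def __len__(self) -> int:
        return sum(len(c) for c in self.chunks)

    def __getitem__(self, i: int) -> float:
        # i must be in range(len(self)). All blocks except the last are full,
        # so the block index is simply i // chunk_len.
        return self.chunks[i // self.chunk_len][i % self.chunk_len]
```

For example, `bytes_needed(200, 1000, 3600)` is 5,760,000,000 bytes, i.e. about 5.8 GB for a single hour, which is why streaming to disk is the safer choice for a full-day run.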

/J

-

Just a word of warning based on my recent experience...

If your RT code has lots of static NSV nodes and you are also programmatically opening NSV references, then you need to make sure that the behind-the-scenes init code that comes with each static NSV node has completed before attempting to use the NSV API. If you do not, you may corrupt the SVE and will have to reboot to recover. Currently I just do a dumb wait (30 s in my case); it would be nice if there was a way to find out programmatically when it is safe to start executing your RT code.
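Instead of a fixed wait, one option is to poll a readiness check with a timeout. This is a minimal Python sketch of that pattern only; `is_ready` is a hypothetical placeholder for whatever check you can make on your target (for example, trying to read a known variable), not an NSV API call.

```python
import time
from typing import Callable


def wait_until(is_ready: Callable[[], bool], timeout_s: float, poll_s: float = 0.5) -> bool:
    """Poll is_ready() until it returns True or timeout_s elapses.

    Returns True if the check succeeded, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if is_ready():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        time.sleep(min(poll_s, remaining))  # never sleep past the deadline
```

Compared with a fixed 30 s wait, this returns as soon as the check passes and still gives up after a bounded time; it only helps if a reliable readiness check exists.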

I have a similar issue with NSVs and RT.

If the RT application is executed from the development environment, everything works fine, but if the application is built into an rtexe and set as the startup application, it is broken.

If the rtexe was built with debugging enabled and I connect to it, the panel displays a broken arrow but no information about what caused the application to break.

I've tried adding a delay before accessing any shared variable, but the result is the same.

The only thing that seems to solve my issue is to replace all static NSVs with programmatic access. Luckily, I only have 5-10 static NSVs in the application.

/J

-

... But if I set the "disable buffering" input of the Open/Create/Replace File VI to TRUE, I get error 6...

Hi,

If you want to stream data to disk as fast as possible, you must know the block size of the hard drive and write data in chunks whose size is a multiple of this block size.

The reason is that RT does not use buffering (probably the cause of error 6), so it must read the old block before writing a partial block to disk. Writing partial blocks therefore causes unwanted extra disk access and lower performance.
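A minimal Python sketch of the idea: buffer incoming data and only write whole multiples of the block size, keeping the remainder for the next call. The 512-byte block size and 128 blocks per write are assumptions for illustration; use the actual block size of your drive. `BlockAlignedWriter` is a hypothetical helper.

```python
from typing import BinaryIO


class BlockAlignedWriter:
    """Write data to `f` only in chunks that are whole multiples of block_size."""

    def __init__(self, f: BinaryIO, block_size: int = 512, blocks_per_write: int = 128):
        self.f = f
        self.chunk = block_size * blocks_per_write  # bytes per disk write
        self.pending = bytearray()                  # data not yet written

    def write(self, data: bytes) -> int:
        """Queue data; write as many full chunks as are available. Returns bytes written."""
        self.pending += data
        n_full = (len(self.pending) // self.chunk) * self.chunk
        if n_full:
            self.f.write(bytes(self.pending[:n_full]))
            del self.pending[:n_full]  # keep only the unwritten remainder
        return n_full

    def close(self) -> None:
        """Write whatever is left. This last write is usually a partial block."""
        if self.pending:
            self.f.write(bytes(self.pending))
            self.pending.clear()
```

Only the final `close()` produces a partial block, so the read-before-write penalty is paid once instead of on every write.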

Hope this helps

/J

-

Thanks, but as you can see, I have wired a FALSE constant (LV 2010 constant) into the "prepend array or string size" input.

You got it right. The original Excel file was an .xlsx, so if I give the copy the .xlsx extension, it works fine.


Thanks for all the help.

Hi

If you are reading files with "unknown" formatting, there is no need to use the "Binary File" functions; just use the "Text File" functions but disable EOL conversion.

Personally, I don't use the binary versions unless I have to read/write values that are to be displayed/handled in LabVIEW, and then you need to know exactly how the data was stored (byte order? size prepended? etc.). Using the "Text File" functions to copy data from one end to the other has never failed me.

Don't forget to escape special characters during the RS232 transfer. Or are you prepending length information to the transfer?
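The two options can be sketched in Python (not LabVIEW). Option 1 prepends a 4-byte big-endian length, matching LabVIEW's default big-endian byte order. Option 2 delimits the frame and escapes special bytes; the 0x7E/0x7D scheme used here is HDLC-style byte stuffing, picked for illustration, since the post does not say which characters need escaping. All function names are hypothetical.

```python
import struct
from typing import Tuple

FLAG = 0x7E  # frame delimiter
ESC = 0x7D   # escape byte; the following byte is XOR-ed with 0x20


def frame_with_length(payload: bytes) -> bytes:
    """Option 1: prepend a 4-byte big-endian length."""
    return struct.pack(">I", len(payload)) + payload


def read_length_framed(buf: bytes) -> Tuple[bytes, bytes]:
    """Split one length-prefixed frame off the front of buf: (payload, rest)."""
    (n,) = struct.unpack(">I", buf[:4])
    return buf[4:4 + n], buf[4 + n:]


def escape_frame(payload: bytes) -> bytes:
    """Option 2: delimit with FLAG and escape any FLAG/ESC bytes in the payload."""
    body = bytearray()
    for b in payload:
        if b in (FLAG, ESC):
            body += bytes([ESC, b ^ 0x20])
        else:
            body.append(b)
    return bytes([FLAG]) + bytes(body) + bytes([FLAG])


def unescape_frame(frame: bytes) -> bytes:
    """Reverse escape_frame for one complete frame (including both FLAG bytes)."""
    out = bytearray()
    it = iter(frame[1:-1])
    for b in it:
        out.append(next(it) ^ 0x20 if b == ESC else b)
    return bytes(out)
```

The length prefix is simpler but the receiver must never lose sync; escaping costs a little bandwidth but lets the receiver resynchronise on the next FLAG byte.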

/J

-

AND...

Per these replies, it appears that LV 8.5 and higher addressed this issue with the In Place Element structure.

Now I need to convince my employer to leave 2007 (8.2.1) behind!

Hi,

Even if you upgrade to a newer LabVIEW version, I think you will still have to do some major redesign:

In the picture posted in response to Ben's question, you are entering the loop with both the complete cluster and an individual cluster element.

Doing so should force LabVIEW to copy the data.

/J

-

I have a tab control where each page contains a plot. I can set the tab control to scale with the pane but then the graphs don't scale with the changing size of the tab control. Alternatively if I have just one plot (and no tab control) I can set that plot to scale to the frame.

Is there a way that I can set a graph to scale to the changing size of the tab control? I guess I could do it manually but I was hoping there was an inbuilt way.

Hi,

I might be missing the point, but if you put a graph/chart on each tab and set the "Scale all objects with pane" property (right-click on the scrollbar), won't the Tab control as well as all graphs/charts scale with the pane?

You might have to reposition the Tab control after the resize operation has finished, but this can be handled with the Panel Resize event.

/J

-

Yup, that's my solution too, except I just used the value d92 instead of a cast.

Always nice to hear that others think the same

For clarity I prefer to use the cast, and because of constant folding I believe there will be no performance hit.

/J

-


Thanks for the input. What I am planning on doing is what you suggested, but I was going to upload the new executable to a folder based on the time/date. You don't happen to have a VI that will create a directory? I know the FTP command is MKD and I can write it myself, but if it is already done there is no point in re-inventing the wheel.

Thanks,

Paul

I'll see what I can find.
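As a reference for the `MKD` step, here is a minimal sketch using Python's standard `ftplib` (not LabVIEW), where `FTP.mkd` sends the `MKD` command. The folder naming scheme and the `upload_release` helper are hypothetical; a LabVIEW implementation would send the same `MKD`, `CWD` and `STOR` commands.

```python
from datetime import datetime
from ftplib import FTP
from typing import BinaryIO


def timestamped_dir_name(now: datetime) -> str:
    """Folder name based on date/time, e.g. 2010-09-02_1530."""
    return now.strftime("%Y-%m-%d_%H%M")


def upload_release(ftp: FTP, exe: BinaryIO, remote_name: str, now: datetime) -> str:
    """Create a new timestamped folder (FTP MKD), then upload exe into it (FTP STOR)."""
    folder = timestamped_dir_name(now)
    ftp.mkd(folder)                             # sends MKD <folder>
    ftp.cwd(folder)                             # sends CWD <folder>
    ftp.storbinary(f"STOR {remote_name}", exe)  # binary-mode upload
    return folder
```

Typical use would be `with FTP(host) as ftp: ftp.login(user, password)` followed by `upload_release(ftp, open("app.exe", "rb"), "app.exe", datetime.now())`, where the host, credentials and file name are your own.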

/J

-

I'm curious, what do you think of my particular approach for solving the problem? In my specific case, I have several VIs that I intend to export from my application. By forcing the VIs to be placed in the "root" of the application, I can find the VIs directly by name without having to rely on any other lookup mechanism. I was actually trying to highlight a trick with the application builder, i.e. creating a custom Destination that points to the application itself and explicitly setting that Destination for any VIs that I want to export. Now I can use a general-purpose Call Exported Function VI to access the VI.

I'm not particularly fond of using properties in the application builder to solve something that could be solved in LabVIEW. If everything is handled in LabVIEW, I could launch the application on one computer and use another PC as a client, and this gives me complete control during testing/debugging. The application builder trick prevents me from doing this, or requires special handling depending on whether we are running as an application or in development.

Maybe I'm missing the point, but once connected, you can always query the application for a list of exported VIs:

- On the server side you can use the Static VI refs to get the qualified names of the VIs to export, and then define these as exported using the "Server:VI Access List Property" to enable remote access.

- The client then only has to connect to the server, and use the "Application:Exported VIs In Memory Property" to get the available server methods regardless of where they are located in the application.

/J

-

I've hit a brick wall for a solution. Any ideas?

I've used a lossy queue (size 1) to filter events that are sent too fast, pushing new events with the Lossy Enqueue Element primitive.

If the consumer loop can keep up with the event loop, all events will be handled. If the consumer loop runs behind, older events will be discarded, but the last event will always be handled.
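The same pattern in Python, as a sketch: `collections.deque(maxlen=1)` discards the oldest element when a new one is appended to a full deque, which mirrors what Lossy Enqueue Element does with a size-1 queue. The `LossyQueue` class and its method names are illustrative, not a LabVIEW API.

```python
import threading
from collections import deque
from typing import Any, Optional


class LossyQueue:
    """Bounded queue where enqueueing into a full queue drops the oldest element."""

    def __init__(self, size: int = 1):
        self._items: deque = deque(maxlen=size)
        self._cond = threading.Condition()

    def lossy_enqueue(self, item: Any) -> bool:
        """Add item (never blocks). Returns True if an older element was discarded."""
        with self._cond:
            overflow = len(self._items) == self._items.maxlen
            self._items.append(item)  # a full deque(maxlen=...) drops from the left
            self._cond.notify()
            return overflow

    def dequeue(self, timeout: Optional[float] = None) -> Optional[Any]:
        """Wait for an element; returns None on timeout."""
        with self._cond:
            if not self._cond.wait_for(lambda: len(self._items) > 0, timeout):
                return None
            return self._items.popleft()
```

The event loop calls `lossy_enqueue` for every event and the consumer loop calls `dequeue`; if the consumer falls behind, only the latest event is waiting for it. (Because `None` signals a timeout here, don't enqueue `None` as an event.)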

/J

-


...

Does anyone know how to do one of the following:

1) Stop an exe running on the real-time target programmatically. (This way I could delete it, place my new one on the real-time target, reboot, and then have it boot on startup.)

2) Start an exe on the real-time target programmatically (not using VI Server). (Reboot to kill the exe, delete it, place the new one on the real-time target, start it programmatically.)

3) Any other ideas on how to deploy and run my exe.

NOTE: Per project requirements, I must deploy a new executable at the start of my program...

The quickest way (AFAIK) to deploy an RT application (with just one reboot) is to

1. upload the new rtexe, including dependencies, to the real-time target into a new folder (perhaps with the version in the name)

2. download the ni-rt.ini file from the RT target to the local disk

3. change the RTApp.StartupApplication path to point at your newly uploaded rtexe

4. upload the edited ini file to the RT target

5. reboot

6. the new rtexe should start running

I also believe that it is possible to use private VI-server methods to edit the ini file directly on the RT target (if remote access is enabled) but I cannot check at the moment. Doing so would replace steps 2 to 4 above.
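Steps 2 to 4 could also be scripted from the host. Below is a minimal Python sketch using the standard `ftplib` module; it assumes FTP access to the target, takes the key name `RTApp.StartupApplication` from the step list above (verify it against the ni-rt.ini on your own target), and leaves the ini path on the target as a parameter because it can differ between targets. The helper names are hypothetical.

```python
import io
import re
from ftplib import FTP

# Key name as given in the step list above; check it against your target's ni-rt.ini.
STARTUP_KEY = "RTApp.StartupApplication"


def set_ini_value(text: str, key: str, value: str) -> str:
    """Replace the value of `key=...` on every line where it appears.

    Line endings (CRLF or LF) and all other lines are preserved.
    Raises KeyError if the key is not present.
    """
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}[ \t]*=)[^\r\n]*", re.MULTILINE)
    # A function replacement keeps backslashes in Windows paths from being treated as escapes.
    new_text, count = pattern.subn(lambda m: m.group(1) + value, text)
    if count == 0:
        raise KeyError(key)
    return new_text


def point_startup_at(ftp: FTP, ini_path: str, new_rtexe_path: str) -> None:
    """Steps 2-4: download the ini file, edit the startup path, upload it again."""
    buf = io.BytesIO()
    ftp.retrbinary(f"RETR {ini_path}", buf.write)               # step 2: download
    text = buf.getvalue().decode("latin-1")
    updated = set_ini_value(text, STARTUP_KEY, new_rtexe_path)  # step 3: edit
    ftp.storbinary(f"STOR {ini_path}", io.BytesIO(updated.encode("latin-1")))  # step 4: upload
```

After the upload, reboot the target (step 5).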

/J

-


I normally merge the VI's error with the node's error (with the node's error taking priority); after all, they are two different things: the node's error relates to being able to call the particular VI specified, whereas the VI's error depends on the inner workings of the VI being called.

I agree, if the node generates an error the outputs of the called VI should probably be discarded anyway.
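A minimal Python sketch of that rule, modelling the LabVIEW error cluster as a small dataclass (the `ErrorCluster` type and helper names are hypothetical). The node's error wins over the VI's error, and the called VI's outputs are dropped when the node itself failed. Falling back to the first warning when neither side has an error is an added assumption, not something stated above.

```python
from dataclasses import dataclass
from typing import Any, Optional, Tuple


@dataclass(frozen=True)
class ErrorCluster:
    status: bool = False  # True = error
    code: int = 0         # nonzero code with status False = warning
    source: str = ""


NO_ERROR = ErrorCluster()


def merge_call_errors(node_err: ErrorCluster, vi_err: ErrorCluster) -> ErrorCluster:
    """Return the node's error if any, else the VI's error; then fall back to warnings."""
    for err in (node_err, vi_err):
        if err.status:
            return err
    for err in (node_err, vi_err):
        if err.code != 0:
            return err
    return NO_ERROR


def call_result(node_err: ErrorCluster, vi_err: ErrorCluster,
                outputs: Any) -> Tuple[Optional[Any], ErrorCluster]:
    """Discard the called VI's outputs if the call node itself failed."""
    merged = merge_call_errors(node_err, vi_err)
    return (None if node_err.status else outputs), merged
```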

/J

-

Look forward to hearing back!

Cheers

-JG

I have nothing more to add at the moment; I've used it successfully on 3 or 4 classes. Thanks

/J

-

I really like that icon. I vote we use that as the palette category icon.

+1

/J

-

I have updated this plugin to add support for the following:

- 1.1-1 2010 09 02

- Added (): Support for templates with connector panes: 5x3x3x5, 6x4x4x6, 8x6x6x8.

- Fixed (): Class Control must belong to the same Class in order to be updated

- Fixed (): Compatible with VIPM 2010 - No read-only files included in package.

I have not tested the new version, but according to the video it looks perfect.

More feedback tomorrow (some of us do sleep)

/J

-

Is that cool?

It's more than that, it's close to perfect (if you just add 6x4x4x6 it will be).

(And I don't understand when you have time to sleep; it's like you are logged in all day and night.)

/J

-

Thanks Jonas for the feedback!

You are welcome, I really like the idea of the tool.

My Design Decisions:

The tool was designed for working with LVOOP, so I made the assumption of using the 4x2x2x4 CP as standard (combined with scripting, this is a very powerful concept; I think Norm mentions that on the Darkside). I did not see the need to support every CP (nor did I want to code support I would never use).

Unless you are using GDS, changing each connector pane from the default would be a lot of work, and if you did, you probably have your own scripting tools?

But I will add that to the Readme for now and look into possible support for other panes in the future.

Which makes me ask - what CP patterns do you use frequently with LVOOP?

The class I am currently editing uses 5x3x3x5, and it really isn't that much work: I start by creating the first method, then I just save a copy of that method whenever I need a new one.

So the reason for the silent error handling is that it's not really an error from my point of view.

I.e., if the plugin encounters something that doesn't fit the criteria, it just ignores it and completes the rest of the tasks.

In this case I had to go through the code to understand why my renaming didn't work. I don't mean that we should throw errors for any control not matching the criteria, I would just like to have more control. In this case the main issue was probably the missing information in the ReadMe.

As an alternative, you could pop up a dialog if shift-z was pressed, and give the user a number of replacement options: move label? limit to CP X? replace on all VIs in class? etc.

Wow and a code review too - thanks heaps! (LAVA members rock!)

Agreed on all parts!

Sorry, couldn't help it.

/J

-

PS - Nice yelling by the way

Thanks, let's hope Michael agrees...

Tool feedback:

- At first I couldn't understand why the tool was failing, but then I looked into the code and realized that you had to use a specific connector pane pattern.

- this should at least be stated in the ReadMe

- ideally I'd like to be able to use any connector layout. In most cases it really doesn't matter where the LVOOP controls are connected, only on methods that combine, compare etc. is this a problem.

- The first point leads me to the next one, error handling. I really don't like that the tool runs without any feedback. If it fails on a number of VIs, this should be displayed to the user.

- The tool should check that the control being renamed is of the correct class. If another class is connected to the upper-left corner, it will also be renamed to the class name.

Once I figured this out, the tool runs very smoothly.

Code feedback:

- The VI "Rename LVOOP FP Objects__icon_lib_scripting.vi" does not close control references if casting to "LabVIEWClassControl" fails.

- The VI "Rename LVOOP FP Objects__icon_lib_scripting.vi" fetches the library name in every loop iteration, but this could be done before we enter the loop.

- The VI "Align All Block Diagram Labels__icon_lib_scripting.vi" could be realized with just one loop instead of three, using a Select node to choose left or right justification depending on whether the object is an indicator.

/J

-


- At first I couldn't understand why the tool was failing, but then I looked into the code and realized that you had to use a specific connector pane pattern.

-

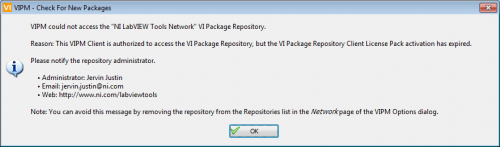

My screenshots are VIPM 2010 (the splash screen gives it away)

Sorry about the cross-post; originally my post was just about fixing the tool in the LAVA CR.

I noticed that you are using VIPM 2010, but the tool could have been installed with an earlier version of VIPM (I read a note about how much you just love VIPM 3.0)

Feedback is coming...

/J

-


I filed a bug report for VIPM, see http://forums.jkisoft.com/index.php?showtopic=1465 for more info.

I have tried multiple PCs, with both the Community and Pro editions of VIPM. Once I edit the ogp file so that icon.bmp is no longer read-only, I can install the package successfully.

Have you installed using VIPM 2010 or VIPM 3.0? I believe that this is an issue only with VIPM 2010.

Regarding feedback, check back later today (it's morning here in Sweden), as I have not really started using it yet.

/J

-


...

We don't have a VIPM compatible LAVA CR repository yet and I don't know if we will ever have one. But I would love to have one and if the LAVA community yells loud enough (hint hint) maybe we will. Who knows

<YELL>I REALLY LIKE THE IDEA OF A LAVA REPOSITORY</YELL>; it would be much easier to find packages using VIPM instead of digging through the CR. It would also solve the issue of dependencies between LAVA submissions (I don't know if there are any today).

/J

-

Jonathon,

I had trouble installing this package using VIPM 2010, and this was probably because the icon.bmp file included in the .ogp file was ReadOnly.

The main problem is within VIPM (AFAIK), but to enable other users to use this Quick Drop Plugin, I just opened your original zip and the included ogp file and removed any ReadOnly flags.

rename_lvoop_fp_object_labels-1.0-1_LV2009.zip
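If you need to do the same repair in bulk, here is a minimal Python sketch using the standard `zipfile` module. It assumes the .ogp package is an ordinary zip archive (the post opens it like one). It clears the MS-DOS read-only attribute bit (0x01, in the low byte of `ZipInfo.external_attr`) and adds owner write permission when Unix mode bits are present (high 16 bits). `clear_readonly` is a hypothetical helper; per-entry extra fields and comments are not copied.

```python
import zipfile

DOS_READONLY = 0x01       # MS-DOS read-only attribute bit (low byte of external_attr)
UNIX_OWNER_WRITE = 0o200  # owner write permission (stored in the high 16 bits)


def clear_readonly(src, dst) -> int:
    """Copy zip `src` to `dst` with read-only flags removed. Returns entries changed.

    `src` and `dst` may be paths or binary file objects.
    """
    changed = 0
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w") as zout:
        for info in zin.infolist():
            data = zin.read(info)
            attr = info.external_attr & ~DOS_READONLY
            if attr >> 16:  # Unix permission bits present
                attr |= UNIX_OWNER_WRITE << 16
            if attr != info.external_attr:
                changed += 1
            new = zipfile.ZipInfo(info.filename, date_time=info.date_time)
            new.compress_type = info.compress_type
            new.create_system = info.create_system
            new.external_attr = attr
            zout.writestr(new, data)
    return changed
```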

/J

Labview FPGA issue

in Embedded


Hi

As others have already stated, the host VI is probably where you can gain the most performance by simplifying the code. The FPGA VI seems to be pretty straightforward, but as Hooovahh said, it depends on whether you are planning to repeat a specific signal, have On/Off functionality, etc.

Suggestions to simplify the code:

1. Why store each AO waveform in a separate file? TDMS is well suited to holding multi-channel measurements in one single file. If you can use a single file instead, you'll gain a lot of file-reading performance, since only one file pointer is managed, and the data comes out of the TDMS Read function correctly formatted.

2. Why are you configuring the FIFO in each loop iteration? This should be done before you enter the loop.

3. The same goes for the FPGA start; this should be done before the loop starts.

4. Don't split the files into smaller chunks; this gives you a lot of overhead from opening and closing files all the time. Instead, read smaller chunks of data, and while you are reading the next chunk to be transmitted, write the current one to the FIFO in parallel; i.e., you can use pipelining in Windows as well as on the FPGA (as sachsm linked to).

5. When reading the TDMS files you can specify the data type directly; this will clean up your diagram and get rid of a number of nodes.

6. The code currently auto-indexes the error output, constantly growing an error array; this will also decrease your performance. Instead, use a shift register to pass the error along, and act on the error correctly.
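Items 2 to 4 and 6 can be sketched together in Python (an analogy, not LabVIEW code). Setup runs once before the loop, the next chunk is read on a worker thread while the current chunk is written, and an exception stops the transfer and propagates once instead of being collected per iteration. `read_chunk`, `write_chunk` and `setup` are hypothetical callables standing in for the TDMS read, the FIFO write, and the FIFO configuration/FPGA start.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def pipelined_transfer(
    read_chunk: Callable[[], Optional[bytes]],
    write_chunk: Callable[[bytes], None],
    setup: Callable[[], None],
) -> int:
    """Stream chunks from read_chunk to write_chunk, overlapping read and write.

    read_chunk returns None when there is no more data. Returns the number of
    chunks written. Any exception from setup, read or write stops the transfer
    and propagates to the caller.
    """
    setup()  # configure/start once, outside the loop
    written = 0
    with ThreadPoolExecutor(max_workers=1) as reader:
        pending = reader.submit(read_chunk)      # start reading the first chunk
        while True:
            chunk = pending.result()             # wait for the chunk being read
            if chunk is None:
                return written
            pending = reader.submit(read_chunk)  # read the next chunk...
            write_chunk(chunk)                   # ...while writing this one
            written += 1
```

With a single worker thread, reads stay strictly sequential, so the reader never needs its own locking; only one chunk is ever read ahead.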

/J