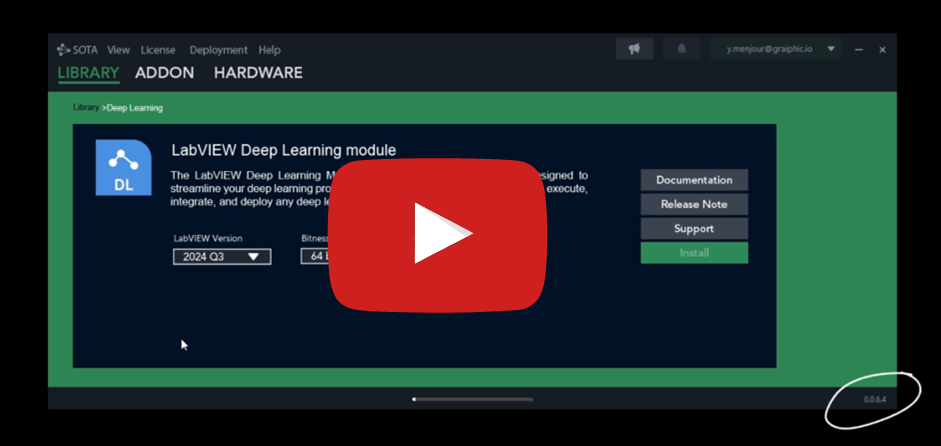

We are still looking for beta testers to join the ongoing testing phase of SOTA, our unified development environment for deep learning in LabVIEW. Now in its 36th version, SOTA is designed for developers interested in exploring deep learning through graphical programming. If you're passionate about innovation and eager to shape the future of graphical deep learning, we would love to hear from you!

🚀 **Join the LabVIEW SOTA Beta Tester Program and Shape the Future of AI Development!**

Are you passionate about artificial intelligence? Do you want to be part of a groundbreaking journey to revolutionize AI workflows? SOTA, the unified AI development platform, is looking for beta testers to explore its latest capabilities and provide valuable feedback.

**Why Join the SOTA Beta Program?**

- Be the First to Explore: Get early access to the 64th version of SOTA, featuring powerful tools such as the Deep Learning Toolkit and the Computer Vision Toolkit.
- Collaborate and Innovate: Share your insights and ideas to help us refine and improve the platform.
- Experience Unmatched Simplicity: Discover how SOTA's graphical programming language, ONNX interoperability, and optimized runtime simplify complex AI workflows.

⭐ **What's in It for You?**

- **Exclusive Access:** Be part of an exclusive group shaping the next generation of AI tools.
- **Early Innovations:** Test cutting-edge features before they're released to the public.
- **Direct Impact:** See your feedback implemented as part of SOTA's evolution.

💡 **Who Can Apply?**

Whether you're an engineer, researcher, or AI enthusiast, your voice matters. If you're curious, innovative, and ready to explore, we want you on board!

👉 Contact us if you want to join the open beta: https://lnkd.in/dWrckRJV, hello@graiphic.io, or by private message (PM).

Stay informed and follow us on our YouTube channel! 👉 https://lnkd.in/dmP49rCa

Stay informed on our website! 👉 https://www.graiphic.io

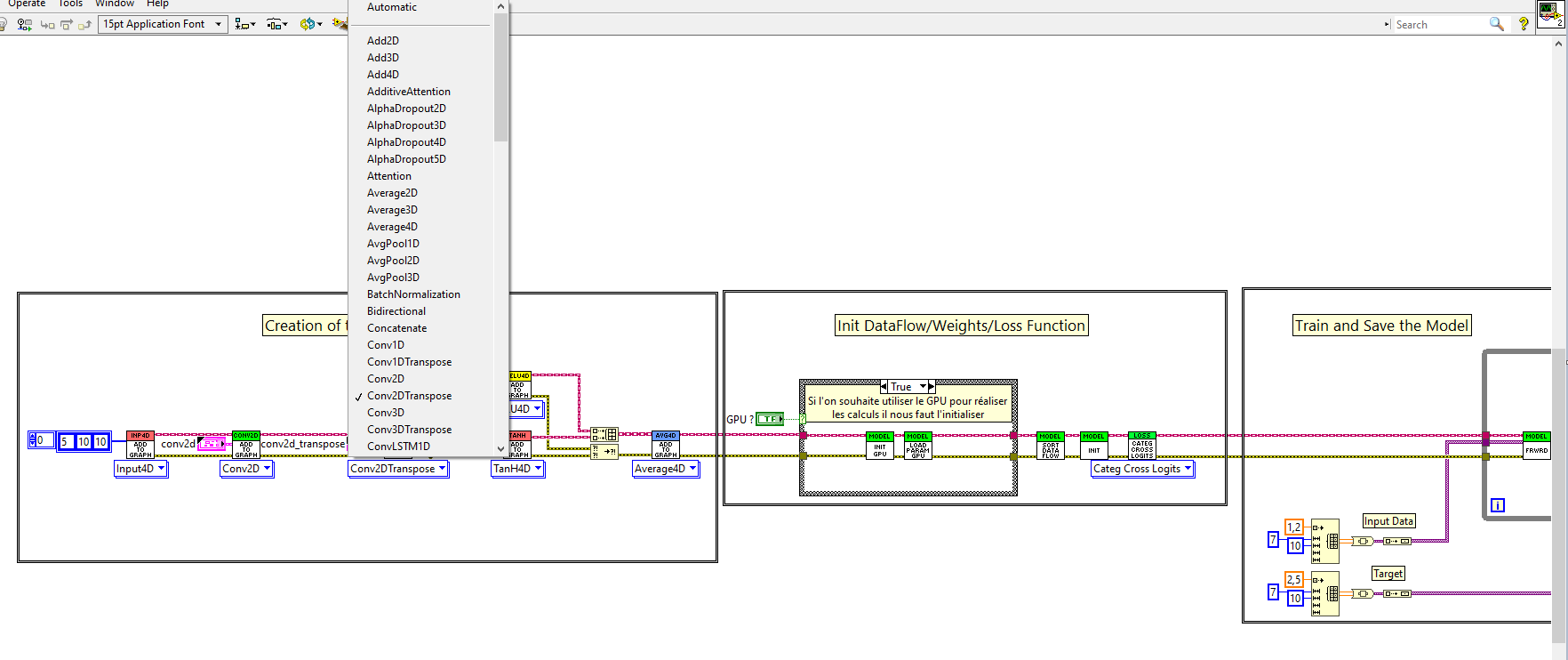

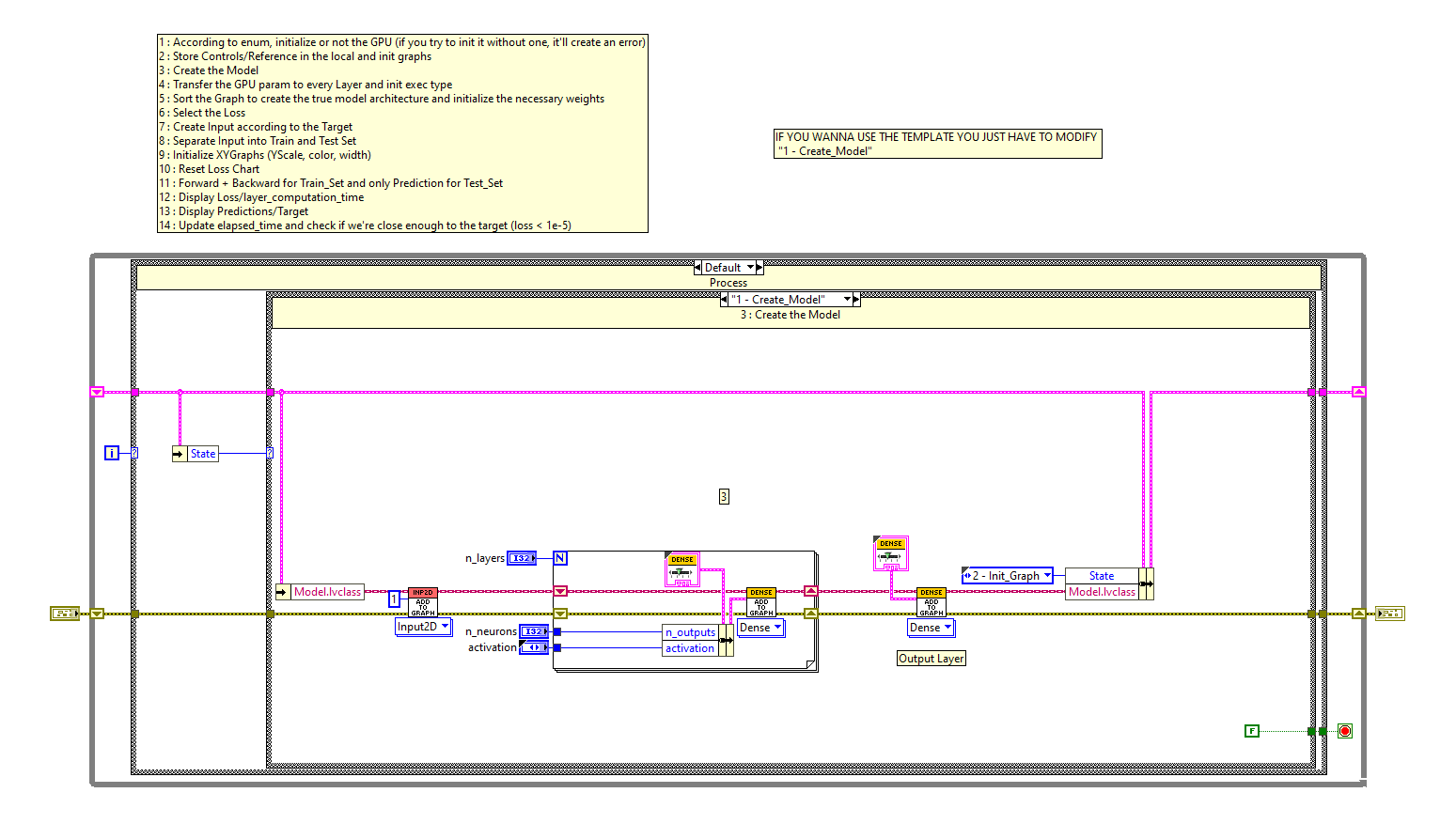

Dear Community,

TDF is proud to announce the upcoming release of HAIBAL, a library for doing deep learning in LabVIEW. The HAIBAL project is structured in the same way as Keras. It consists of more than 3,000 VIs, all coded natively in LabVIEW 😱😱😱, including:

- 16 activations (ELU, Exponential, GELU, HardSigmoid, LeakyReLU, Linear, PReLU, ReLU, SELU, Sigmoid, SoftMax, SoftPlus, SoftSign, Swish, TanH, ThresholdedReLU): nonlinear mathematical functions generally placed after each layer that has weights.
- 84 layers and functional layers (Dense, Conv, MaxPool, RNN, Dropout, etc.).
- 14 loss functions (BinaryCrossentropy, BinaryCrossentropyWithLogits, Crossentropy, CrossentropyWithLogits, Hinge, Huber, KLDivergence, LogCosH, MeanAbsoluteError, MeanAbsolutePercentage, MeanSquare, MeanSquareLog, Poisson, SquaredHinge): functions that evaluate the prediction against the target.
- 15 initialization functions (Constant, GlorotNormal, GlorotUniform, HeNormal, HeUniform, Identity, LeCunNormal, LeCunUniform, Ones, Orthogonal, RandomNormal, RandomUniform, TruncatedNormal, VarianceScaling, Zeros): functions that initialize the weights.
- 7 optimizers (Adagrad, Adam, Inertia, Nadam, Nesterov, RMSProp, SGD): functions that update the weights.

We are currently working on full Keras compatibility through HDF5 files and will soon start the same work for PyTorch. We can already load models, and in the future we will also be able to save them; this part is important for us.

CUDA already works if you use an NVIDIA board, and NI FPGA boards will also be supported (not done yet). We are also working on full integration with all Xilinx Alveo systems for acceleration. Users will be able to build any model they want; the only limitation will be their hardware (we will offer the same freedom as Keras or PyTorch). In the future, our company may also offer hardware, for example a Linux server with a Xilinx Alveo card (https://www.xilinx.com/products/boards-and-kits/alveo.html), all fully compatible with HAIBAL!

About project communication: the website will be completely redone, a YouTube channel with many tutorials will be set up, and a set of well-known examples (YOLO, MNIST, etc.) will be offered within the library.

We have not set a release date yet, but we are aiming for next July. This is not official: we are a small, passionate team (three of us work on it), and we are doing our best to release it soon. This work is titanic, and believe me, your encouragement makes us happy (it boosts us!). In short, we are doing our best to release this library as soon as possible. Just a little more patience…

YouTube video: this example is a template state machine using the HAIBAL library. It shows a signal (here, a cosine), and during training the neural network has to learn to predict this signal (here we chose 5 dense layers of 40 neurons each). This template will be offered as a basic example for understanding how to initialize, train, and use a neural network model. This kind of "visualization example" is inspired by https://playground.tensorflow.org/ and is meant to help anyone who wants to start learning deep learning.
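For readers who want to see what the cosine template does outside LabVIEW, here is a minimal, hypothetical Python sketch of the same idea. It is not the HAIBAL API (HAIBAL is graphical and its VI names are not shown in this post). It combines pieces named in the lists above, reimplemented from scratch with the standard library: Dense layers, TanH hidden activations, GlorotUniform weight initialization, a mean-square loss (with the conventional 0.5 factor), and plain per-sample SGD. The function names and the default sizes (two hidden layers of 16 neurons, 300 epochs, learning rate 0.05) are assumptions chosen so the sketch runs in a few seconds. The video's 5 dense layers of 40 neurons correspond to `hidden=(40,) * 5`, which will be much slower in pure Python and may need a smaller learning rate.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """GlorotUniform weights and zero biases (hypothetical helper, not a HAIBAL VI)."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(layers, x):
    """Return the activations of every layer: TanH for hidden layers, Linear for the output."""
    acts = [x]
    for i, (w, b) in enumerate(layers):
        z = [sum(wij * aj for wij, aj in zip(row, acts[-1])) + bi for row, bi in zip(w, b)]
        is_output = i == len(layers) - 1
        acts.append(z if is_output else [math.tanh(v) for v in z])
    return acts


def train_step(layers, x, y, lr):
    """One SGD update on a single sample for the loss 0.5 * (pred - y)^2."""
    acts = forward(layers, x)
    delta = [p - t for p, t in zip(acts[-1], y)]  # dLoss/dz of the linear output layer
    for i in reversed(range(len(layers))):
        w, b = layers[i]
        a_prev = acts[i]
        if i > 0:
            # Backpropagate through the weights before updating them, then apply
            # the TanH derivative 1 - tanh(z)^2 = 1 - a^2 of the previous layer.
            back = [sum(w[k][j] * delta[k] for k in range(len(w))) * (1.0 - a_prev[j] ** 2)
                    for j in range(len(a_prev))]
        for k in range(len(w)):
            for j in range(len(a_prev)):
                w[k][j] -= lr * delta[k] * a_prev[j]
            b[k] -= lr * delta[k]
        if i > 0:
            delta = back


def mean_loss(layers, xs, ys):
    """Average of 0.5 * (pred - y)^2 over the whole dataset."""
    return sum(0.5 * (forward(layers, [x])[-1][0] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_cos(hidden=(16, 16), epochs=300, lr=0.05, n_points=40, seed=0):
    """Fit y = cos(x) on [-pi, pi]; returns (layers, loss_before, loss_after)."""
    rng = random.Random(seed)
    sizes = [1, *hidden, 1]
    layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    xs = [-math.pi + 2 * math.pi * i / (n_points - 1) for i in range(n_points)]
    ys = [math.cos(x) for x in xs]
    before = mean_loss(layers, xs, ys)
    order = list(range(n_points))
    for _ in range(epochs):
        rng.shuffle(order)  # new sample order each epoch (per-sample SGD)
        for i in order:
            train_step(layers, [xs[i]], [ys[i]], lr)
    return layers, before, mean_loss(layers, xs, ys)


if __name__ == "__main__":
    _, before, after = train_cos()
    print(f"mean loss before: {before:.4f}, after: {after:.4f}")
```

`train_cos()` returns the trained layers together with the mean loss before and after training, so you can watch the loss fall the same way the template's graph does. Swapping TanH for another activation or SGD for another optimizer from the lists would only touch `forward` and `train_step`, which is roughly the modularity a Keras-style layout aims for.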
