Everything posted by Götz Becker

-

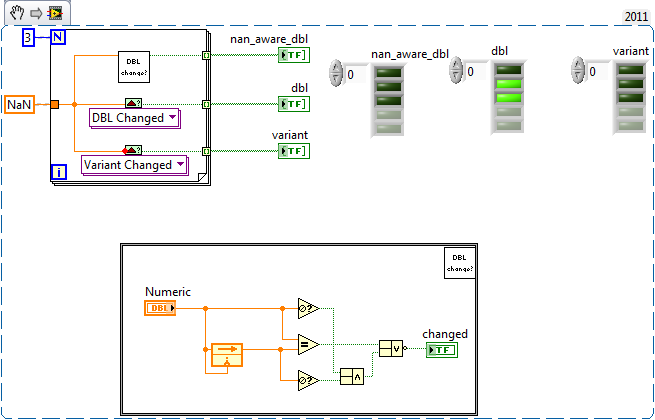

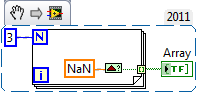

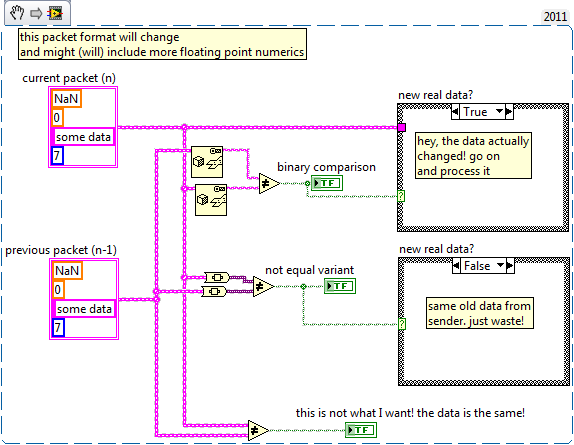

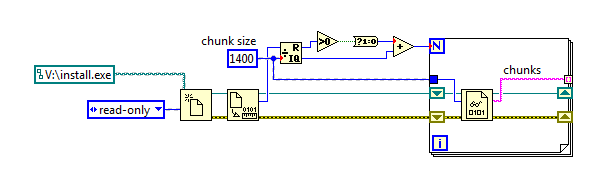

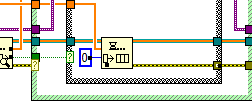

The data comes from a fixed set of subsystems, mostly LabVIEW-based. This code fragment comes from a proxy-style application that tries to reduce the load on the backend data storage (TCP-connected, 400 GB ring buffer), which itself has a fine-grained isNaN check in the floating-point transient recorder.

-

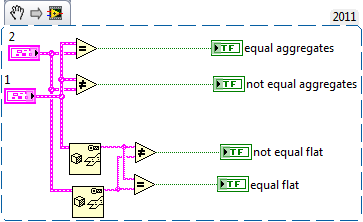

I updated my use case example to clarify what I am looking for: basically, I want to compare the raw cluster contents against each other. Flatten To String works, but I hope it can be made more efficient and elegant. Updating a custom routine for all cluster elements whenever the cluster changes, and implementing the isNaN check for both sides, is cumbersome and easy to forget.
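For readers outside LabVIEW, the raw-contents comparison can be sketched in Python. This is only an illustration of the flatten-and-compare idea: the record layout `RECORD_FORMAT` and the helper names `flatten` and `is_new` are assumptions made up for this sketch, not part of the original application.

```python
import struct

# Hypothetical record layout standing in for a mixed cluster:
# one int32, one float64, one float32, little-endian, no padding.
RECORD_FORMAT = "<idf"


def flatten(record):
    """Serialize a record tuple to raw bytes (analogous to flattening a cluster)."""
    return struct.pack(RECORD_FORMAT, *record)


def is_new(previous, current):
    """Return True if the raw bytes differ; identical NaN bit patterns count as equal."""
    return flatten(previous) != flatten(current)


nan = float("nan")
print(nan == nan)  # False: value comparison follows the IEEE 754 NaN rules

old = (7, float("nan"), 1.5)
same = (7, float("nan"), 1.5)
changed = (7, float("nan"), 2.5)

print(is_new(old, same))     # False: same bytes, so the data is not new
print(is_new(old, changed))  # True: the last element differs
```

A byte-wise comparison is stricter than a value comparison in the other direction as well: `0.0` and `-0.0` have different bit patterns, and so do NaNs with different payloads, so such records are reported as new.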

-

Hi, is there an easy way to check a mixed cluster for equality, ignoring the rules for floating-point comparison (NaNs)? I just want to check whether the data I receive is new or not. My current idea is: Losing type safety and the buffer allocation is OK for my current use case (slow and non-RT), but I wonder if I am missing something. Edit: A colleague mentioned that a "to variant" does the same "trick" but might be faster... I guess I'll have to benchmark a little.

-

[CR] Create_UDL_File_LV2010

Götz Becker replied to Prabhakant Patil's topic in Code Repository (Uncertified)

Why are all VIs password protected? -

file transfer using UDP protocol

Götz Becker replied to moralyan's topic in Remote Control, Monitoring and the Internet

I don't understand the last part. What do you mean by "graph" with respect to the TDMS file structure? As I understand it, an error rate of >0% _will_ always result in some broken data. TDMS has no integrated consistency checks; as long as the TDMS segment headers survive, the whole file will look correct when opened. That is nothing I would want in any form of production-quality code/system. -
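To make the point about missing consistency checks concrete, here is a rough Python sketch of what an explicit check could look like if it were added around each transferred chunk. The framing (length plus CRC32 in front of the payload) and the names `wrap_chunk` and `unwrap_chunk` are assumptions for illustration only; TDMS itself does not provide this.

```python
import struct
import zlib

HEADER = "<II"  # hypothetical frame header: payload length, CRC32 of payload
HEADER_SIZE = struct.calcsize(HEADER)


def wrap_chunk(payload: bytes) -> bytes:
    """Prefix a payload with its length and CRC32 so corruption can be detected."""
    return struct.pack(HEADER, len(payload), zlib.crc32(payload)) + payload


def unwrap_chunk(frame: bytes) -> bytes:
    """Return the payload, or raise ValueError if it is truncated or corrupted."""
    length, expected_crc = struct.unpack_from(HEADER, frame)
    payload = frame[HEADER_SIZE:HEADER_SIZE + length]
    if len(payload) != length or zlib.crc32(payload) != expected_crc:
        raise ValueError("chunk corrupted or truncated")
    return payload


frame = wrap_chunk(b"some measurement data")
print(unwrap_chunk(frame))  # b'some measurement data'
```

A CRC only detects damage; it does not repair it, so the receiver still needs a way to request the chunk again.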

file transfer using UDP protocol

Götz Becker replied to moralyan's topic in Remote Control, Monitoring and the Internet

I still don't see why you want to build the file transfer on UDP, but I could imagine that, if you know your packet loss rates and patterns, you could build an FEC scheme (like RS codes) to counter the losses. At least it would be a fun thing to try -
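As a toy illustration of the FEC idea, here is a Python sketch that uses a single XOR parity packet per group. This is deliberately much simpler than the RS codes mentioned above and can repair only one lost packet per group; the function names and the equal-length packet assumption are made up for this sketch.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets):
    """Build one parity packet as the XOR of all (equal-length) packets in a group."""
    return reduce(xor_bytes, packets)


def recover(received, parity):
    """Restore a group in which exactly one packet was lost (marked as None)."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can repair exactly one lost packet per group")
    present = [p for p in received if p is not None]
    # XOR of the parity with all surviving packets yields the lost packet.
    restored = reduce(xor_bytes, present, parity)
    result = list(received)
    result[missing[0]] = restored
    return result


group = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(group)
print(recover([b"AAAA", None, b"CCCC"], parity))  # [b'AAAA', b'BBBB', b'CCCC']
```

Whether one parity packet per group is enough depends on the loss pattern: burst losses that take out two packets of the same group cannot be repaired this way, which is where stronger codes come in.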

file transfer using UDP protocol

Götz Becker replied to moralyan's topic in Remote Control, Monitoring and the Internet

-

[CR] GPower toolsets package

Götz Becker replied to Steen Schmidt's topic in Code Repository (Uncertified)

The "owner" is still the VI, and the reference gets cleaned up no matter what. This can lead to a race condition that may result in lost writes. I hacked together a small example (LV2011) to demonstrate the problem: test_vi.vi, tcp_server.vi, tcp_conn_server.vi. As long as you know all variable names in the static part and access them there first, this is not a problem. But this detail is easily forgotten, and when such an app grows it could be a nightmare to debug. -

[CR] GPower toolsets package

Götz Becker replied to Steen Schmidt's topic in Code Repository (Uncertified)

The dynamic nature of VIRegister has a drawback when used with dynamic code. Since the refnum is obtained directly, a potentially new global refnum has the lifetime of the calling VI's references. For example, use this with a dynamic TCP handler and create a new named register inside the dynamically instanced VIs: this new ref will be invalid when the instanced VI goes idle. This can produce some very hard-to-find bugs if not all references are obtained in a permanently running VI! -

I have seen a medical application switched back from LVOOP to normal clusters because of a much longer startup time on cRIO (LV2009) with LVOOP. Other than that, it worked very nicely with LVOOP.

-

I opened a thread in the idea exchange for this: http://forums.ni.com/t5/LabVIEW-Idea-Exchange/Inplace-Typecast/idi-p/1771970

-

This is what I actually did to get rid of the buffer allocation: basically, I switched from an array of I32 to an array of clusters of I32 and SGL as the type for the RT FIFO used for the inter-loop communication. Now the typecast is done in a lower-priority loop where I can tolerate the induced timing/jitter. The general question remains... is there a way to do an in-place typecast in LV?

-

I was optimizing a tight real-time loop. During my first tries I ignored the buffer shown at the typecast because of the remarks from AQ here in the forum. After hours of tracing the code with various trace user events (I presumed the "scanned variable read" function was the cause of all the "wait on memory" trace entries), it was clear that the cast of a SGL to I32 takes some microseconds (~2 us on the PXIe-8133 used). The way the type cast works is actually well documented in the help... too bad I thought I knew better. It would be nice if the typecast only did a buffer allocation when the source and target data types are differently sized.
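The difference between a copying cast and an in-place reinterpretation can be shown outside LabVIEW. The following Python sketch is only an analogy and says nothing about how the LabVIEW Type Cast primitive is implemented; it assumes a platform where the native C `int` is 4 bytes, and the variable names are made up.

```python
import struct
from array import array

samples = array("f", [1.0, -2.5, 0.0])  # 32-bit floats, comparable to SGL
assert array("i").itemsize == 4         # assumption: native int is 32 bits wide

# Copying reinterpretation: every value goes through a temporary bytes object.
copied = [struct.unpack("=i", struct.pack("=f", x))[0] for x in samples]

# Zero-copy reinterpretation: the memoryview shares the array's buffer
# and only changes how the same 4-byte items are interpreted.
as_int32 = memoryview(samples).cast("B").cast("i")

print(as_int32[0])  # 1065353216, i.e. 0x3F800000, the bit pattern of 1.0f

# Writing through the original array is visible in the view: no second buffer exists.
samples[2] = 1.0
print(as_int32[2] == as_int32[0])  # True
```

Both types are the same size here, which is exactly the case where no second buffer is needed to reinterpret the data.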

-

Hi, is there a way to do a typecast, e.g. from SGL to I32, in place, without the buffer allocation?

-

-

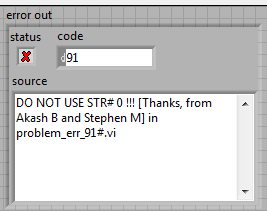

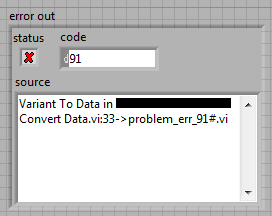

Hi, I was trying to track down a strange error in a library function and got the following: For debugging I had to disable VI inlining, and the error changed into something more meaningful: Basically, the problem was a parse error resulting in a wrong variant-to-data conversion (path to I16). I wonder who "Akash B" and "Stephen M" are. About the latter I have a guess.


-

-

Currently there are about 90 classes involved. "Save as" would reset the version, but then I would have to re-link all child classes to their new base class (plus SCC and documentation overhead). That's why I am looking for a way of doing the reset in place. I'll try the XML-only approach, removing NI.LVClass.Geneology and resetting the version string directly.

-

For my current use case, preparing a release version of an OOP-based framework and a medium-sized sample application, I tried my in-place reset snippet on all .lvclass files in the project. After restarting LV and reloading the project, everything seemed OK: I could open the .lvclass files and see that the version was reset to 1.0.0.0. Opening the main VI looked good too, but running it resulted in a LV crash (no errors, LV just gone). Reopening and mass compiling from the project gave the same result. Reopening, forcing a recompile (ctrl+shift+run) and saving all did fix the crash. Is this expected behavior since I changed the XML file directly? Or did I hit some other problem?

-

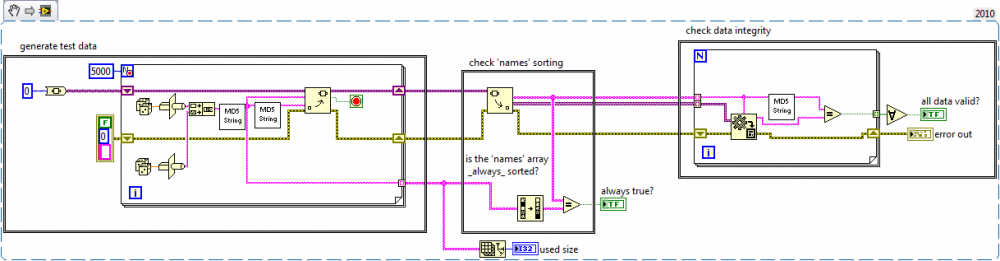

Is the list of names/attributes returned by "get variant attribute" with no name wired always (guaranteed to be) sorted? It is sorted in all my tests, but is this a feature, or just a coincidence that might change if the underlying algorithms change? Since this isn't documented, could it change without notice in a new LV version?