Matthew Zaleski

Members-

Posts

27 -

Everything posted by Matthew Zaleski

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

Posts 7, 8 and 9 from me in this thread give the solution that worked for me. I have not had reason to re-install LV2009 on my Win7 machine, so I don't know how much those instructions can be simplified. Suffice it to say I really don't understand why it was so difficult to get it installed.

-

Off topic, but Tom's Hardware recently crushed the performance of the video you saw: 3.4 GB/s.

Sorta on topic: be very careful about the brand and model of SSD you buy. There are a ton of useless drives that shouldn't even be sold. The good ones, like Intel, Samsung, and OCZ, deliver insane performance if you are coming from the world of spinning disks. I have an OCZ Vertex 250 GB drive. With a 512 KB block size (reasonable for your data acq I/O), the drive averaged 145 MB/sec reads and 185 MB/sec writes. It could keep that up all day since the block size you are writing is >= the internal flash block size.

-

In general, you need to know your frequency range of interest. If the data contains usable frequency content from 0 to 3 Hz, then applying a 5 or 6 Hz lowpass filter should help. But sometimes your frequency of interest is not near 0 Hz. In those cases, look at bandpass or highpass filters. Your initial post seems to indicate you may need a notch filter (60 Hz noise from electrical?).
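To make the notch-filter suggestion concrete, here is a minimal sketch in plain Python rather than LabVIEW. It assumes a 1000 Hz sample rate, a 60 Hz interference tone, a 2 Hz signal of interest, and a Q of 5; none of those numbers come from the original thread. The coefficients follow the common second-order (biquad) notch form, and the helper names are made up for illustration.

```python
import math


def notch_coefficients(f0_hz, fs_hz, q=5.0):
    """Return normalized biquad notch coefficients (b0, b1, b2), (a1, a2)."""
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    b = (1.0 / a0, -2.0 * math.cos(w0) / a0, 1.0 / a0)
    a = (-2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)
    return b, a


def apply_biquad(samples, b, a):
    """Run a direct-form I biquad over a list of samples."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def tone(freq_hz, fs_hz, count):
    """Generate a unit-amplitude sine wave."""
    return [math.sin(2.0 * math.pi * freq_hz * i / fs_hz) for i in range(count)]


def rms(values):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


FS_HZ = 1000.0  # assumed sample rate
b, a = notch_coefficients(60.0, FS_HZ)

# Filter the interference and the signal of interest separately to compare.
hum_out = apply_biquad(tone(60.0, FS_HZ, 2000), b, a)
signal_out = apply_biquad(tone(2.0, FS_HZ, 2000), b, a)

# Skip the first second so the filter's start-up transient has died out.
print("60 Hz RMS after notch:", rms(hum_out[1000:]))
print(" 2 Hz RMS after notch:", rms(signal_out[1000:]))
```

The 60 Hz tone should come out close to zero while the 2 Hz tone keeps nearly its full level, which is the behavior you want when the noise sits at a single known frequency well away from the band of interest.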

-

Bugzilla for requirements management

Matthew Zaleski replied to ASTDan's topic in Application Design & Architecture

Essentially, this is how MKS Integrity implemented their Requirements Solution. Integrity allows multiple issue types, each with their own list of fields and states they move through, AND they can link from one issue type to another. I'm getting ready to add the Requirements Management Solution to my MKS Integrity installation. The whole package ain't cheap, but I've yet to find a freebie solution that comes close to its power. It has all the hooks for building Requirement Documents from all the little Requirements and reusing Requirements from previous projects. I think the MKS website itself is a piece of **** though.

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

My post just prior to yours was full success. I'm running LabVIEW 32 to actually do my work. I only installed LabVIEW 64, based on other suggestions in the thread, in order to get all of the hardware drivers loaded. I doubt I will ever use LabVIEW 64 2009. It looks like it is only good for NI-Vision work; no other modules are supported. It looks like the 3.2.1 install/upgrade doesn't play well with a 3.2.0 install.

Now I'm just beating my head against the wall trying to get the FPGA to compile; it doesn't want to fit in 3 MGate anymore. I'm beginning to think that each new version of the FPGA compiler becomes LESS efficient at using slices. Are the NI-FPGA and Xilinx boys taking lessons from Microsoft Windows?

It's funny that you say the cRIO is far too expensive. Compared to equivalent hardware from non-NI companies, it's the cheapest. I do agree that there are other cheaper solutions, but we needed the rugged external I/O without requiring continuous connection to a PC.

-

LabVIEW gives me alot of spare time for LAVA

Matthew Zaleski replied to Minh Pham's topic in LabVIEW General

I just put an OCZ Vertex 250 SSD in my home machine. Holy Cow! LabVIEW starts up fast and even a big project loads faster than I can get a cup of coffee. Now I just need to get my work machine upgraded (spending freeze with the economy, go figure, hehe).

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

I attacked the problem again tonight. Using the logic of a computer scientist, I uninstalled all NI-RIO stuff, rebooted, then re-installed NI-RIO 3.2.1. The project is now working. It shouldn't be this difficult...

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

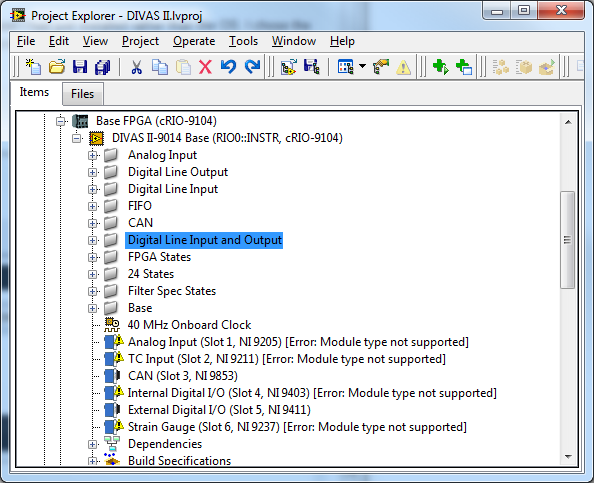

It took me longer than expected to reload Windows 7 64-bit RTM. I ended up doing the following:

1. Installed LabVIEW 64 by itself (since it isn't on the DVDs afaict).
2. Ran normal DVD installer and picked all the toolkits for which I have licenses. The installer seemed to recognize the existing LabVIEW 64 and automatically activated the appropriate entries for it along with LabVIEW 32.
3. Reboot.
4. Ran the NI-RIO 3.2.1 installer from NI.com.
5. Reboot.
6. Opened my project and went to the CRIO FPGA node.

I'm further along than before since Realtime nodes are now recognized. But LabVIEW still thinks I don't have all my files. Look at the screenshot. I tried rerunning NI-RIO 3.2.1 and picking all the options. It resulted in a no-op and didn't see the need to install any files. A google search didn't give me any useful hits. Any suggestions?

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

Hrmm, interesting. I didn't try the "Run as administrator" option. I expected that as a Vista-aware installer it would elevate itself properly. I am installing the LabVIEW 32 bit and the driver installers realize I have a 64 bit OS and pick the 64 bit drivers.

From what I could find on the web, LabVIEW x64 doesn't have any toolkits other than Vision. I need Realtime, FPGA, Advanced Signal Processing, the trace toolkits, etc. From that info, I determined that what I need to install is LabVIEW x32 on my Win 7 x64 machine. Is that an incorrect conclusion?

The signed drivers may be an issue. I'll give that a try this weekend too. I did see the installer status mention driver signing for each driver. However, since it was the installer mentioning it, I assumed that NI had signed all of their drivers. Regardless, I'm glad to see there is proof that I should be able to install LV2009 on Win7 x64.

-

LabVIEW 2009 and Windows 7 64-bit

Matthew Zaleski replied to Matthew Zaleski's topic in LabVIEW General

That is a good suggestion. I did a variation on that. I re-ran the setup last night with compatibility mode Vista SP1. I chose that because I need the installer to realize it needs to install 64-bit Vista drivers. Again, I got the same failure as before. I think I will be forced to install LabVIEW into a virtual machine in VMWare. It will take a few days but I will get VMWare running, install Win7 32 bit and then see if LabVIEW is compatible with that. If that doesn't work, then I'll install Vista in a VM. It really shouldn't be this many hoops. Win 7 is really nothing more than an optimized Vista kernel with some new UI tricks. I installed plenty of Vista drivers in Win 7 early on in Win 7 testing when native drivers were much rarer. My gut feeling is that NI's installers are doing queries that are looking for Vista-specific identifiers rather than querying for "must be Vista or newer". There have been several articles in the Windows 7 developer blogs talking about how there are many programs and installers using poorly designed OS detection methods. On the flip side, it looks like NI is using an MSI installer which is supposed to limit the chance of this occurring. -
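To illustrate the hunch about OS detection, here is a small Python sketch contrasting an exact-match version check with a "this version or newer" check. It is only an illustration of the failure mode; nothing here is taken from NI's installers, and the function names are invented. The version tuples reflect that Vista reports itself as Windows 6.0 and Windows 7 as 6.1.

```python
# (major, minor) version numbers reported by the operating system.
WINDOWS_XP = (5, 1)
WINDOWS_VISTA = (6, 0)
WINDOWS_7 = (6, 1)


def fragile_is_supported(os_version):
    """Accept only the exact version the installer was tested against."""
    return os_version == WINDOWS_VISTA


def robust_is_supported(os_version):
    """Accept the minimum supported version or anything newer."""
    # Tuples compare element by element, so (6, 1) >= (6, 0) is True.
    return os_version >= WINDOWS_VISTA


for name, version in [("XP", WINDOWS_XP), ("Vista", WINDOWS_VISTA), ("7", WINDOWS_7)]:
    print(name, fragile_is_supported(version), robust_is_supported(version))
```

With the exact-match check, a Vista driver that would work fine on Windows 7 gets rejected simply because the version number moved from 6.0 to 6.1.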

I know it's not officially out yet. But I'm attempting to install the 32 bit edition on my copy of Win7-x64 (Release Candidate); I've downloaded Win 7 full release via my MSDN account but haven't loaded it since it will wipe my hard drive. It looks like nearly every 64 bit driver is failing during the install and rolling back. Given that LV2009 supports Vista 64, this shouldn't be happening. Vista drivers generally work well in Win 7. There aren't any error codes beyond a "failed to install XXX x64, do you wish to continue with rest of install?". Does anyone know of a workaround? A google search came up with no good hits.

-

QUOTE (Neville D @ Apr 8 2009, 12:53 PM)

Thanks for the tip. I've sent an email his way.

QUOTE (crelf @ Apr 8 2009, 01:16 PM) I'm not in front of my system at the moment, so this might be a little rusty: when you're in the build installer dialog, and you select DAQ as one of the things to install, there is an option in the top right where you can select between 5 types. From NI's website: There's a table on ni.com somewhere, but I can't find it right now.

Thanks Chris. I've bookmarked that NI page for further review. I think that will at least let me get the right drivers on the laptops accessing the cRIOs without the user wading through that massive list of choices.

-

QUOTE (Neville D @ Mar 24 2009, 06:41 PM) That's unfortunate. Does anyone know if the NI-MAX CD/DVD installer can be fully scripted to only install the drivers I know are needed and not ask the user any questions? And can NI-MAX itself be driven by a LabVIEW app to do all of the necessary driver updates of a cRIO target? I need a way of automating this. My users don't know, nor do they want to know, how to configure the gobs of settings needed to support a cRIO target. And as I mentioned before, these units are now deploying worldwide; I can't walk up to them to update the software.

-

QUOTE (NeilA @ Apr 6 2009, 03:43 PM)

Welcome to the world of XML. What MJE is telling you is that you did a deliberate override of the namespace for the UUID element (you gave it the namespace "http://RMS/"). Therefore /soap:Envelope/soap:Header/UUID/UUID will fail at the first UUID element since it isn't in the default namespace within the XML.

I'm not familiar with the namespace "http://RMS/". Did you make that up? If so, modify your XML header:

<soap:Envelope xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/" xmlns:rms="http://RMS/">

and add that rms: prefix to your UUID element in the XML file. Then the following XPath will be valid:

/soap:Envelope/soap:Header/rms:UUID
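Here is a small sketch of the same namespace point using Python's standard xml.etree.ElementTree rather than LabVIEW. The document body and the UUID value are placeholders made up for the example; only the namespace URIs come from the thread. ElementTree searches relative to the element you call it on, so the paths below start from the Envelope root instead of using a leading slash.

```python
import xml.etree.ElementTree as ET

# Placeholder SOAP message; the UUID text is invented for this example.
SOAP_XML = """<soap:Envelope
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xmlns:xsd="http://www.w3.org/2001/XMLSchema"
    xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/"
    xmlns:rms="http://RMS/">
  <soap:Header>
    <rms:UUID>example-uuid-value</rms:UUID>
  </soap:Header>
  <soap:Body/>
</soap:Envelope>"""

# Prefix-to-URI map used by the queries. The prefixes only need to match
# the ones used in the path strings below, not the ones in the document.
NAMESPACES = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "rms": "http://RMS/",
}

root = ET.fromstring(SOAP_XML)

# Without a prefix, the query looks for a UUID element in no namespace.
unqualified = root.find("soap:Header/UUID", NAMESPACES)

# With the rms: prefix, the query matches the element's actual namespace.
qualified = root.find("soap:Header/rms:UUID", NAMESPACES)

print("unqualified lookup:", unqualified)
print("qualified lookup:", qualified.text if qualified is not None else None)
```

The unqualified lookup comes back empty even though a UUID element is plainly visible in the text, which is exactly the trap described above.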

-

QUOTE (Aristos Queue @ Mar 26 2009, 07:20 PM)

Thanks AQ for all those details! The geek side of me loves to know how programs tick (and would have to, to get all the way through the Computer Architecture book I linked earlier). I do hold my nose in the air, but not for scripting; I reserve that righteous attitude for Visual Basic :thumbdown: .

I do think LabVIEW suffers from an unusual stigma from the traditional CS crowd. I'm a programmer turned engineer rather than the typical "engineer needs an easy language to program a test measurement system". I was introduced to a LabVIEW/E-series based data-acquisition system 13 years ago (just now being phased out). At the time I thought LabVIEW was not for "real programmers". This was biased by the other graphical development systems I had seen in the 90's, including Visual Basic and competitors to LabVIEW whose names I can't even remember. The interface for LabVIEW is quite foreign to traditional text programmers.

Eighteen months after my boss told me point-blank that I would learn LabVIEW, I've come full circle. The range of hardware supported and the power of a good dataflow language is dazzling to this old CS fart. For our team, the rock-solid experience of working with NI hardware (and then seeing the power of the cRIO system) convinced us to learn more about the NI software solution. I don't know how many CS degrees include exposure to LabVIEW, but I think NI should be pushing hard in that direction at the university level; engineers shouldn't be allowed to have all this fun by themselves.

-

QUOTE (Neville D @ Mar 20 2009, 12:44 PM)

Did I misread the provided NI link to RT Target System Replication? It seems to imply that I could image the drive in my cRIO, drivers and all, then replicate it to other cRIOs. The tricky part would be the INI file controlling the IP address. Assuming I misread that, then yes, I'd love to be able to somehow script the whole device driver install for NI-MAX. I now have theoretically identical systems scattered worldwide. Explaining NI-MAX to someone half a world away isn't my idea of fun, hehe.

-

QUOTE (Mark Yedinak @ Mar 24 2009, 11:34 AM)

I haven't had a need for embedded beyond cRIO at this point. Is the LabVIEW ARM or Microprocessor SDK of any value, or are you further down the chain (i.e. cheaper end) in the embedded space? Not knowing a lot about the embedded space, I had thought the new ARM microcontroller package was specifically targeted at folks like you.

-

QUOTE (Aristos Queue @ Mar 24 2009, 01:24 AM)

I would not have expected that based on the IDE interface. When are the compile steps happening then? I'm in the middle of writing G, then hit the run button. Was it compiling chunks as I wired or when I saved? Or when I build an application in the project? The debugger's visualizations of wire flow and retaining values is not something I'd expect from fully compiled code.

QUOTE (Aristos Queue @ Mar 24 2009, 01:24 AM) But you'd be surprised I think just how many of C++ optimizations are possible within LV, and we have a few tricks of our own that C++ can only dream about. When the next version of LV comes out, we'll have some new surprises in that arena.

That would not surprise me. Abstracting to a higher level than pure C++ gives new avenues for optimization.

QUOTE (Aristos Queue @ Mar 24 2009, 01:24 AM) Sometimes we can piggyback on C++. There are some nodes on a VI that are compiled into assembly that calls back into the LV engine -- essentially precompiled chunks that are written in C++ that the VI does a call and return to access. Those are exposed in the runtime engine lvrt.dll. The PDA and FPGA targets go even further, not relying upon the lvrt.dll at all and generating the entire code structure of the VI hierarchy in their code. We could do the same thing on the desktop, but it is more efficient for VIs to share those large blocks of common code than to have every VI generating the assembly necessary for every operation. But an Add node turns into an "add ax bx" assembly instruction.

Out of curiosity, are you treating the VI akin to a .c file with a pile of functions (1 function per chunk) or are these chunks handled in a more raw form (pointer arrays to code snippets)?

I still feel that the main points of my argument stand (since you can compile Python, Matlab and Java to native code). The value proposition from LabVIEW (since it isn't "free") is enhancing my productivity and allowing me to ignore the gritty details of C++/assembly most of the time.

-

QUOTE (bsvingen @ Mar 22 2009, 01:26 PM)

Neither your original post nor my reply was about making multiple cores run efficiently. You stated a loss of speed due to OOP (I'm not arguing that, but I stated that programmer time is valuable these days). Then you asked about C++ templates and whether they could be a solution to LabVIEW's OOP speed penalty. I worked with them a LOT for about 2 years after already having 5+ years of heavy C++ coding/designing under my belt. I think I can say, without lying, that I was proficient in C++ templates.

C++ templates are primarily a COMPILED language solution to a single class not working efficiently for all data types. They cause a massive explosion in object code size (which kills processor caches btw). They are also notoriously difficult to code properly, and the resulting code looks even more tortured than C++ already does. Mind you, at the time, I thought all "good" code had to be that cryptic.

Years of working in newer non-compiled languages (Python, Matlab, and now LabVIEW being the major part of my experience) gave me an appreciation of "developer productivity". Blazingly fast code delivered 2 years late and also prone to all the issues C++ can cause (pointer violations, weird memory leaks) is not always the best use of a programmer's time. I agree with you that "yes, sometimes it is." Users generally want reliable code that is "fast enough" (not fastest) and preferably delivered to them yesterday.

Applying C++ templates to LabVIEW would not necessarily gain you speed. I think you are sort of correct that it would be like asking for a polymorphic VI. It is still a scripted dataflow language (graphical prettiness notwithstanding). Your VI, whether part of a class or not, still has to run through an engine designed by NI, optimized by NI, enhanced by NI before it executes on a CPU (ignoring FPGA for the moment as an unusual beast). 
Any optimization along the lines of C++ templates is likely best handled by the wizards at NI optimizing the G execution engine and delivered in a new version of LabVIEW without us changing a single VI.

Can C++ code run faster than LabVIEW for certain tasks? Yes, because it is effectively object-oriented assembly language. I challenge you to quickly write C++ code that is reentrant and threadsafe (like most of G), still runs fast and, more importantly, is not likely to crash hard when encountering an unexpected error. Oh, and design your UI quickly in C++ as well. Gawd, I hated UI work in C++ even with a framework library!

Implied questions in my original response: Are you currently not achieving the performance demanded in an application spec document due to using LVOOP? Is it due to poor algorithm design/choice (which can sometimes be covered up by a compiled language if the end result is "fast enough")? It could very well be that LabVIEW isn't the correct implementation solution for your project today.

Playing devil's advocate: Python is slower than C++, right? Then why is Google built on a massive system of Python backends? Java is slower than C++, right? Then why are more enterprise-class systems built on Java than C++ today? LabVIEW is slower than C++, right? Then why are large numbers of solutions being delivered via LabVIEW today?

I feel that a lot of the answer boils down to programmer productivity: delivering a functioning solution sooner with fewer gotchas. This is all assuming proper development methodologies were used regardless of what language is used for the solution. In short, use the tools you've got to deliver a maintainable solution quickly. In my experience, the result executes fast enough.

Now, I see that you related an embedded programming anecdote. The programmer productivity curve/payback is different there. You are amortizing a programmer's time over thousands or millions of units sold and need super-low variable costs. 
I think most LabVIEW projects are amortized to a handful of (or one) built system. In the latter case, doubling the CPU horsepower purchased from Intel is cheap compared to 6 months' salary, for example.

QUOTE (bsvingen @ Mar 22 2009, 01:26 PM) Over-generalizations and complex solutions is very seldom a good substitute for proper knowledge of how to solve simple problems.

I don't get paid to solve simple problems; anybody can do that in a lot of different languages. I'm employed to solve the tough ones. Solving the tough ones usually results in complex solutions, which I still strive to make easy to maintain.

-

I'm still getting up to speed with LVOOP concepts, mainly translating my brain views of other OOP languages into what LVOOP requires. Having read the stickied thread and attachments, I'm still hazy about race conditions. Here is the example I conjured:

Class FileLineReader: # reads a text file line by line

- Members:
  - file refnum
  - other fluff about the file
- Methods:
  - OpenFile() - opens a file
  - GetNextLine() - returns string
  - SeekToLine() - seeks to a given line in text file
  - more fluff

I create an instance of this class. Can I have worker threads that each have a copy via wire of this object, where each worker is calling GetNextLine()? How is LabVIEW enforcing that my file refnum isn't being hit by 2 reads at the same time? I know there are other ways to design this to avoid contention, but I'm curious about this edge case.
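For comparison, here is how the same edge case looks in a text language where the object is shared by reference. This is a Python sketch of the FileLineReader idea, not a description of what LabVIEW does internally: the lock is something the class author has to add explicitly, the in-memory stream stands in for a real file, and the method names are simply Python-style versions of the ones above.

```python
import io
import threading


class FileLineReader:
    """Reads a text stream line by line; safe to share between threads."""

    def __init__(self, stream):
        self._stream = stream            # stands in for the file refnum
        self._lock = threading.Lock()    # serializes access to the stream

    def get_next_line(self):
        """Return the next line without its newline, or None at end of file."""
        with self._lock:
            line = self._stream.readline()
        return line.rstrip("\n") if line else None


def worker(reader, collected):
    """Pull lines from the shared reader until it runs dry."""
    while True:
        line = reader.get_next_line()
        if line is None:
            break
        collected.append(line)


# In-memory stand-in for a 1000-line text file.
source = io.StringIO("".join(f"line {i}\n" for i in range(1000)))
reader = FileLineReader(source)

# Four workers share one reader; each keeps its own list of results.
results = [[] for _ in range(4)]
threads = [threading.Thread(target=worker, args=(reader, r)) for r in results]
for t in threads:
    t.start()
for t in threads:
    t.join()

all_lines = sorted(line for r in results for line in r)
print("lines read in total:", len(all_lines))
```

Every line is handed to exactly one worker, but only because the lock makes each read atomic. The open question in the post is whether the equivalent protection comes for free when the wire is forked, or whether the refnum inside each copy still points at the same underlying file.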

-

QUOTE (bsvingen @ Feb 11 2009, 02:39 PM) Having putzed with C++ templates in the 90s AND been in a scripting-ish world since the late 90s... The benchmarks from the 90's are virtually meaningless today. A modern Intel chip does SO much voodoo that the cost of using a dynamic language (as opposed to a compiled language like C/C++) has become less of an issue. If you are a real geek and love to understand what makes a modern CPU so magical (as well as what the practical limits are), check out Computer Architecture, Fourth Edition: A Quantitative Approach. LabVIEW (and hopefully recent textual scripting languages) are all about programmer productivity which is usually worth far more than a faster CPU these days. I spent over a year working with C++ templates and the Standard Template Library. It's a year of my life I want back! Use the tools LabVIEW gives you. Only then if you find the code is too slow, examine your algorithm and perhaps spend time making your code utilize multiple cores or machines efficiently.

-

QUOTE (Aristos Queue @ Feb 2 2009, 06:54 PM)

I'm jumping in here late and I'm still learning LabVIEW OOP (in general). I come from a background of doing practical OOP in C++, Java, Python, and Matlab. I can't speak to what features LabVIEW has or doesn't have with respect to OOP ability.

If I have 10 public methods (public API) depending on 1 private method (part of the private API) at some point or another, I want to unit test the private method. I want to guarantee that I haven't broken the private API. Otherwise I'm chasing ghosts at the public API level. Depending on the complexity of your classes, it can be downright painful to exercise the private API via the public API. Sometimes it is painful in the other direction; easy to unit test the public API.

Why are we discussing ways of making the tool (LabVIEW) harder on the developer? After spending a few years with Python, I've come around to their way of thinking. We are all adults. If you tell me that touching the stove will burn me, you don't also need to encase the stove in Lexan so that I can NEVER burn myself (nor can I cook food on it easily). Python lets you easily touch the private API, but the access method lets you know that you are "bypassing standard safety protocols, proceed at your own risk." It goes hand in hand with duck typing (http://en.wikipedia.org/wiki/Duck_typing) to make a programmer's life easier.

*steps off the soap box*
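A quick sketch of what that looks like in Python, using a made-up Scaler class. A single leading underscore marks a method as private by convention only, and a double leading underscore triggers name mangling, so a test can still reach the method, but only by spelling out a name that makes the bypass obvious.

```python
class Scaler:
    """Hypothetical class with one public method and two private helpers."""

    def __init__(self, gain, offset):
        self.gain = gain
        self.offset = offset

    def scale_all(self, raw_values):
        """Public API: scale every value and clamp it to 0..10."""
        return [self.__clamp(self._scale_one(v)) for v in raw_values]

    def _scale_one(self, raw):
        """Private by convention: the underscore is a warning, not a lock."""
        return raw * self.gain + self.offset

    def __clamp(self, value):
        """Name-mangled: stored on the class as _Scaler__clamp."""
        return max(0.0, min(10.0, value))


scaler = Scaler(gain=2.0, offset=1.0)

# Unit tests can exercise the private helpers directly.
assert scaler._scale_one(3.0) == 7.0
assert scaler._Scaler__clamp(12.5) == 10.0

# The short name is not reachable from outside the class.
assert not hasattr(scaler, "__clamp")

# The public API still behaves as expected.
assert scaler.scale_all([0.0, 3.0, 20.0]) == [1.0, 7.0, 10.0]
```

Nothing stops the test from calling the private methods; the awkward names are the "proceed at your own risk" sign.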

-

QUOTE (MichaDu @ Mar 3 2009, 07:48 AM)

I can't answer most of your question. But are you sure you are at your slice limit? The compiler is an incremental optimizer. Once it gets your code to fit, it stops optimizing for space. A better proxy is how long your compiles are taking. When compile times start rising a lot for small additions to the code, then you are getting close to the limit of your particular FPGA.