Posts posted by X___

-

-

Consider yourself lucky. It could have been all green... super green.

-

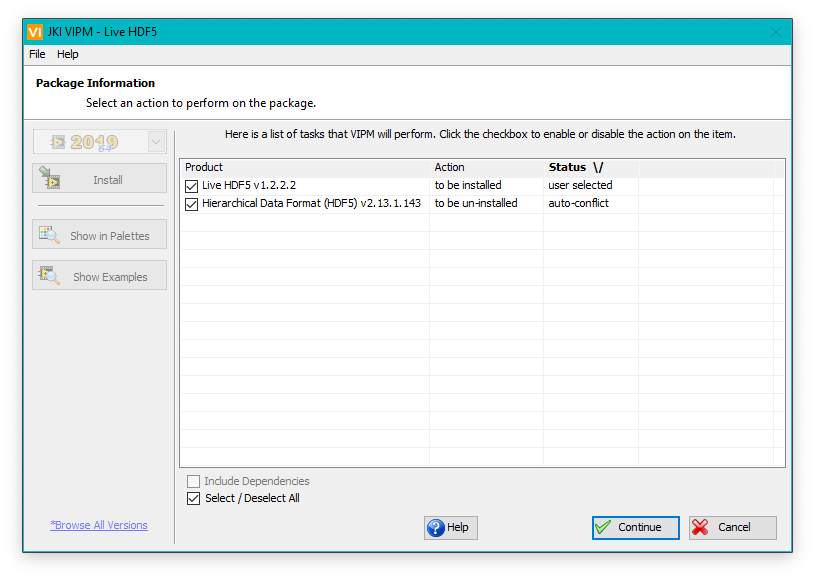

Are you forcing a conflict with Martijn Jasperse's H5labview package, or is this something made up by VIPM?

-

Free is maybe pushing it. Zero technical support without an SSP would be an appropriate model associated with open source. Of course this wouldn't prevent vibrant community support (independent of NI) from existing, but this is not what the big industrial companies using LV would go for. They would remain NI's proverbial cash cows.

I think the misunderstanding on the corporate side of why open source is beneficial for code safety and reproducibility is understandable, as I can witness the same ambivalence if not resistance in academia.

As for NXG and webUI (or whatever they call it now), as discussed elsewhere, it looks like NI doesn't have the resources to bring the vision (whatever it was originally) to fruition, and their recent decision to abandon it will probably lead (or has already led) to morale cratering and a talent exodus, so I wouldn't hold my breath...

One thing I'd add to the list is this: stop the yearly versioning that breaks backward compatibility. This is frankly moronic, and the clear and only reason it exists is NI's pricing scheme. Adopt the scheme suggested in the first paragraph and this can go right away.

-


18 hours ago, hooovahh said:

Well at least every NI Week since a couple years before NXG was released up until the last one. Hackathons were a pretty open environment to give feedback. You'd have the attention of some pretty important people in the design of the UI and UX decision making process. And the IDE actually had a button in it where you could just send NI a message about something you liked or didn't like in it. Not sure if that stayed for the newest versions. I can't say what NI did or didn't do with this information but they seemed to have plenty of places for users to give feedback on.

Sounds like King Louis XIV's court. If someone had just pointed out that the natural next step of such an IDE was to introduce "text shortcuts" to diagram objects, they were quite a short distance from reinventing a text-based programming language. Brilliant!

-

1 hour ago, ShaunR said:

Interesting...

I don't like the single VI view. I like to view multiple VI diagrams and panels when debugging and editing. I also don't like the menubar only reflecting the currently selected item ala Mac - it trips me up all the time.

However. The toolbars are another matter. You can kind of do the above by docking palettes and Project Explorer to the desktop sides (which is what I do). What you can't do is dock to the top and bottom of the desktop or dock the context help or dock windows to each other.

I really like the way Codetyphon/Lazarus works for toolbars etc. Each is a separate window (like LabVIEW toolbars) but you can dock and nest. So. Out of the box you have something like:

You can grab the yellow bar below the title-bar and dock it to other windows (the blue shaded area in the source view shows you where it plans to put it, for example). You can do this with any window (there are a lot of them) to create split bars and even tabbed, split bars.

Ultimately. You end up with (my preferred layout) which is:

It's only a tabbed page view in the middle which is a pain switching back and forth between FP design and source but the way it handles the ability to make your own IDE layout is great.

Yeah, well, when was the last time NI asked users what they were expecting in an IDE UI? Or what they did or did not like in the mock-up designs they were cooking up?

I am sorry, I forgot about the "Idea Exchange" forum, which had so many transformative effects on the development of subsequent LabVIEW versions...

Of course, ultimately this is probably not what killed NXG (the dislikes of a few old geezers for the IDE).

-

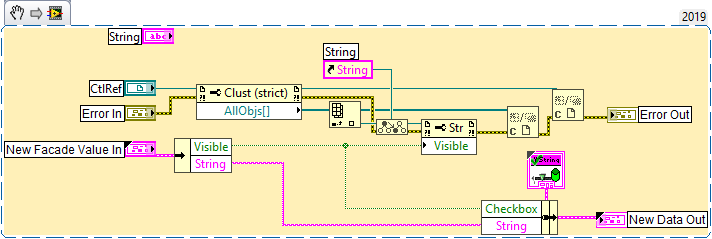

4 hours ago, X___ said:

When the user changes the checkbox (for visibility) to True, the string is hidden and reciprocally. Am I missing something?

Following paul_cardinale's review of my demo, it turns out there was no glitch after all (well, in my understanding or attention level, maybe).

-

54 minutes ago, paul_cardinale said:

Can you send me the entire Y Control?

Sent as a PM.

Also, is there a way to drop a Y Control in an array (or cluster)?

-

LV 2019 SP1f1 64 bit crashed upon closing my first Y Control... but seems to have recovered fine.

So, I can now resize objects within a Y Control cluster, but there seems to be a glitch (race conditions?) in the Process New Value.vi:

When the user changes the checkbox (for visibility) to True, the string is hidden and reciprocally. Am I missing something?

-

More on this saga, picked up by chance in a Forums thread (https://forums.ni.com/t5/LabVIEW/Save-the-progams-user-interface-as-picture-or-serve-it-as-a-web/m-p/4106252?profile.language=en)

In essence, the loop is looped and we are back to LabVIEW Web UI, released what, 10 years ago?

The roadmap is pretty unclear past 2021...

-

cf Brian Powell referring to the "hackish" nature of XControls in the infamous NXG obituary thread...

-

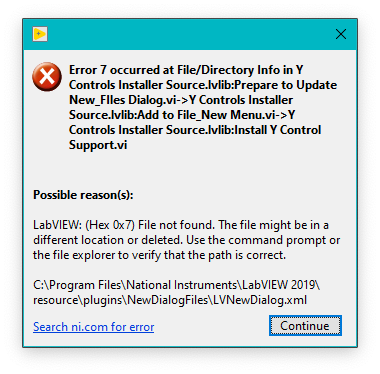

1. I removed LVNewDialog.xml before re-installing 1.0.0.2 and got this:

which, after I pressed Continue, left the installer window open in a stopped state, but at least now the help is back in the menu (but in Help>>Y Control, not Help -> New -> Y Control as you have it in the Readme.txt)

However, the File>>New... dialog is now empty, so removing LVNewDialog.xml is definitely not the thing to do (putting it back resolves the issue but is missing the Y Control item).

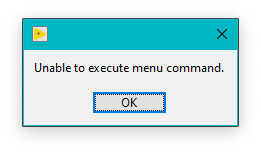

2. Re-uninstalling Y Control Support now LEAVES the Help >> Y Control menu but nothing happens when selecting it:

3. Re-reinstalling 1.0.0.2 restores the Y Control item in the New... dialog box...but removes the Help menu!

Getting somewhere?

-

Still can't seem to be able to access the Help. Certainly not in the Help -> New -> Y Control -> Y Controls menu and the chm file still doesn't work for me?

It seemed quite straightforward when I first installed it, but maybe the UNinstall step messed something up?

I just uninstalled 1.0.0.2 and tried the release from post 22 again, and am without an easy way to find Help within LV (and the chm file is not working anymore as a standalone) 😞

-

5 hours ago, paul_cardinale said:

That issue has been corrected (see attached files). However it won't fix already-created Y Controls. To fix them, make sure that Facade.ctl and the .yctl file are set as "TypeDef" and not "Strict TypeDef"

Other improvements:

- The installer and uninstaller will ignore RO status of an existing installation of Y Controls.

- The wizard fixes up the label of the cluster in the .yctl file.

Attachments: Y Controls Support Version 1.0.0.1 Installer.zip (1.4 MB), Y Controls Version 1.0.0.1 Source.zip (1.59 MB)

How do I access the help again? It worked fine in the previous version, but is nowhere to be found in the new one, and the chm help file in the installer doesn't work as is...

-

4 hours ago, Rolf Kalbermatter said:

That could be because it’s not available (and never may be). VHDL sounds like a good idea, until you try to really use it. While it generally works as long as you stay within a single provider, it starts to fall apart as soon as you try to use the generated VHDL from one tool in another tool. Version differences is one reason, predefined library functions another. With the netlist format you know you’ll have to run the result through some conversion utility that you often have to script yourself. VHDL gives many users the false impression that it is a fully featured lingua franca for all hardware IP tools. Understandable if you believe the marketing hype but far from the reality.

One more of those dead links on NI's website then... and no information whatsoever.

Strangely enough, I never got a "We'd love to hear your feedback" pop-up on that page. 🙂

-

39 minutes ago, Rolf Kalbermatter said:

It's back online. Probably some maintenance work on the servers.

Not if you select VHDL

-

Playing around with it. It looks like, as for X and Q controls, compound controls need to be bundled up into a cluster. As a result, the facade is rigid, in the sense that when dropping an instance of the Y control, I cannot adjust the components of the control (or the whole control itself for that matter) unless I modify the definition of the control itself.

This is fine for a one-off control, but somewhat of an issue for something intended to be generic. As an example, I was trying out the package with a simple string with a visibility checkbox. Once dropped on an FP, neither the checkbox nor the string can be resized.

This type of ability (or lack thereof) is one of the things which immediately cooled my enthusiasm for the X and Q controls.

I may be missing something though.

-

1 hour ago, crossrulz said:

Not quite what you are asking for, but...LabVIEW FPGA IP Export Utility

Online shopping for this product is currently unavailable?

-

7 hours ago, LogMAN said:

My applications are driven by technology that other programming languages simply can't compete with. Scalability is through the roof. Need to write some data to text file? Sure, no problem. Drive the next space rocket, land rover, turbine engine, etc.? Here is your VI. The clarity of code is exceptional (unless you favor spaghetti). The only problem I have with it is the fact that it is tied to a company that wants to drive hardware sales.

I wouldn't use a language which is based on a lot of inaccessible C++ code for mission-critical software... like driving a space rocket, for instance. I have encountered a lot of "corner cases" in the math routines which probably had some trivial algorithmic flaws at their core, but this was not possible to detect (let alone correct) until I drove the code into the ground, so to speak.

One more reason to open the belly of the beast.

-

3 hours ago, brian said:

"at great cost"? I don't think so. They are pretty hackish.

Would you put Channel Wires in the same boat? They are not intrinsic, built-in objects, either. Malleable VIs, Classes, Interfaces? Most of the dialogs? Also built in LabVIEW.

That may explain things then.

I am not a channel wire convert, use VIMs occasionally and have steered clear of classes (and thus interfaces). Which I guess excludes me from the conversation?

Some dialogs are obviously LabVIEW-built, and have aged pretty badly. The GSW is a recent example of accelerated obsolescence.

But as far as I know, the universal answer to requests and suggestions has been: "NI has other priorities and limited resources". How could it be otherwise indeed? Maybe, just maybe, tapping into a free multiplier of resources could change things? It is not as if there are only negative examples out there of open source programming language development... But I'd understand that a scalded cat fears cold water.

-

3 hours ago, brian said:

XControls, anyone?

More seriously, Jeff's vision is for more of LabVIEW to be written in LabVIEW, with the intent that it empowers users like us to extend it.

LabVIEW doesn't have to be open source to do that, and my optimism comes from the possibility that the R&D team is going to have more resources to increase extensibility.

Sure, why not rewrite Linux or Android in LabVIEW too... 🙂

XControls are not standard LV objects. You can't put them in an array, they have all kinds of limitations, and while I understand that they were created at great cost and with the best intentions, they essentially failed (I think I have developed one and concluded that this was not worth the effort, in large part because of the impossibility of putting them in arrays).

I'd say pessimism seems warranted after the failure of NXG and the complete lack of a clear roadmap (this is what I was referring to with the term quixotic - with a typo).

I have since cut NI some slack and understood that little guys like me are of no monetary value to them. Fair enough and in any case, this is not the type of programming language I can publish code in since it is hieroglyphics to most. And even that at some point will have no value, as we will just tell Alexa the general features we need in our software, maybe draw a GUI mock-up and a DL network will generate the code...

-

3 hours ago, brian said:

I've thought a lot about this, but I just don't think it would be a successful open source project. It's not like it's a small library that's easy for someone to understand, much less modify. You need guides with really strong flashlights to show you the way. I speak from experience. When I led the team that created 64-bit LabVIEW 10+ years ago, I had to visit every nook and cranny in the source code, and find the right people with the right flashlights.

I think a more viable alternative (if NI didn't want to own LabVIEW any more) would be to spin it out as a subsidiary (or maybe non-profit?) along with the people who know the source code. It wouldn't be super profitable, but might be strong enough to independently support its development. The main impediment to this happening is that LabVIEW FPGA (and to a lesser extent, LabVIEW Real-Time) are really valuable to NI and probably too intertwined with the rest of LabVIEW for NI to keep FPGA and spin out the rest.

There is a problem right there (in the flashlight story), but who is really surprised about it?

Of course there would be a cost to open sourcing the code: cleaning it up and documenting it as the team fixes things up in the upcoming releases is something that would be expected from a pro team anyway.

They wouldn't have to accept any and all contributing candidates, and they wouldn't have to commit any and all contributions. But they might get some good feedback from dedicated believers (see e.g. https://openjdk.java.net/contribute/). At worst, none, but that in itself might be a good indicator for management to take a hard look at LV's relevance. They could spin off improvements to control/indicator modifications to the community and focus on the things that matter most to their core business.

You'd be surprised how focused debuggers would be at squishing those pesky bugs of yore... not to mention expanding the capabilities of LV. There is no guarantee that LV would eventually rule the world (I don't think any one of us has ever had this dream). But maybe it would give it a chance to not look hopelessly outdated.

The real obstacle seems to be the "NI culture". I have no idea what it is, but the recent past has painted it as rather quitoxic if not readily toxic.

-

17 hours ago, brian said:

The other day, I wrote up a lengthy response to a thread about NXG on the LabVIEW Champions Forum. Fortunately, my computer blue-screened before I could post it--I kind of had the feeling that I was saying too much and it was turning into a "drunk history of NXG" story. Buy me some drinks at the next in-person NIWeek/CLA/GLA Summit/GDevCon, and I'll tell you what I really think!

First, I'll say that abandoning NXG was a brave move and I laud NI for making it. I'm biased; I've been advocating this position for many years, well before I left NI in 2014. I called it "The Brian Powell Plan".

I'm hopeful, but realistic, about LabVIEW's future. I think it will take a year or more for NI to figure out how to unify the R&D worlds of CurrentGen and NXG--how to modify teams, product planning, code fragments, and everything else. I believe the CurrentGen team was successful because it was small and people left them alone (for the most part). Will the "new world without NXG" return us to the days of a giant software team where everyone inside NI has an opinion about every feature and how it's on the hook for creating 20-40% revenue growth? I sure hope not. That's what I think will take some time for NI to figure out.

Will the best of NXG show up easily in CurrentGen? No! But I think the CurrentGen LabVIEW R&D team might finally get the resources to improve some of the big architectural challenges inside the codebase. Also, NI has enormously better product planning capability than they did when I left.

I am optimistic about LabVIEW's future.

This is material for the PhD thesis on the NXG fiasco that I feared (in another related thread on this forum) would never be written... and it is now lost to the microcosm of LabVIEW Champions (I understand knuckleheads like us were not intended to be privy to this juicy stuff).

It seems that one way to give LabVIEW a future (or to definitively bury any hopes for it at the sight of the quagmire) would be to open source it.

-

23 hours ago, X___ said:

The official statement (by two top brasses, not a mere webmaster) is not particularly crystal clear as to what is forthcoming. Coming right after the Green New Deal campaign and in the midst of the pandemic, it might just as well be a forewarning of major "reorganization" within NI... The surprise expressed by AQ in a different thread would seem to support this hypothesis.

Maybe that is relevant: https://www.cmlviz.com/recent-development/2020/10/29/NATI/national-instruments---announced-workforce-reduction-plan-intended-to-accelerate-its-growth-strategy-and-further-optimize-operations-cost-structure

Another variant: https://www.honestaustin.com/2020/10/30/mass-layoffs-at-national-instruments-remedy/ ("Thursday, he said that sales staff and administrative staff would be most affected", so maybe unrelated to NXG's demise after all...)

And from the top dog's mouth: https://www.reddit.com/r/Austin/comments/jkyid6/ni_is_laying_off_9_of_its_global_workforce/

-

27 minutes ago, ShaunR said:

Well. Aren't we a ray of sunshine nowadays

Isn't this serious stuff though? Most of us may have had disagreements with NI on some of the paths they had chosen to go down, and were lamenting the crawling pace of their progress, but at least we all agreed that the current LV IDE/UI development toolset was outdated.

The official statement (by two top brasses, not a mere webmaster) is not particularly crystal clear as to what is forthcoming. Coming right after the Green New Deal campaign and in the midst of the pandemic, it might just as well be a forewarning of major "reorganization" within NI... The surprise expressed by AQ in a different thread would seem to support this hypothesis.

At least it gives me something to speak about in my next "how do we use LabVIEW in the lab" intro session...

NI abandons future LabVIEW NXG development (in Announcements)

A new chapter in the NI saga?

Found in a recent SEC disclosure (I was trying to figure out whether the slow increase in NI stock value since last November was due to something other than the recent announcement that they were firing 10% of their employees to increase shareholder happiness):

"On January 3, 2021, Carla Pineyro Sublett, the Company’s Senior Vice President, Chief Marketing Officer, and General Manager of the Portfolio Business, and a named executive officer, notified the Company of her intent to resign from the Company, effective February 1, 2021, to pursue other opportunities."

Who is Carla? Well, she is Mrs Super Green in the flesh: Carla Piñeyro Sublett - NI, first ever Chief Marketing Officer for NI.

Intrigued, I looked around and found a couple of (moderately) interesting podcasts:

Carla Piñeyro Sublett - Top podcast episodes (listennotes.com)

in which she sounds like a very capable lady, and confesses that she "discovered" that the company was in need of not just a rebranding (which she carried out brilliantly, we will all agree) but a complete reform. Her decision seems to indicate that that opinion might not have sounded too great to her top brass colleagues...

I want to believe she was sincere when she says (said) that her customers are amazing people (a little bit emphatic, are we not?), and that they should be empowered (hence the picture of someone I assume is a customer "engineering ambitiously" on the landing page). I am not sure whether that meant "listened to", but if that was it, she definitely was onto something.

NI: if your customers don't think you have what it takes to provide them with the tools they need to stay on top, what do you think will happen? And if you don't want this to happen, what do you think you need to do?