infinitenothing



Posts posted by infinitenothing

-

-

How much data do you need from your sensor? My usual go-to for the Raspberry Pi is to run a webserver, build that as a startup VI, and then the server will run any time the Pi is on. You can use LabVIEW's HTTP GET function from Untitled 4 to get your data. This might not work so well if you need a lot of data; in that case I'd probably use the STM library instead of a webserver.
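For anyone who wants to see the shape of the webserver approach outside LabVIEW, here is a minimal Python sketch. It is not the startup VI itself: `read_sensor`, the JSON layout, and the loopback address are assumptions made up for illustration.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


def read_sensor() -> float:
    """Hypothetical stand-in for the real sensor read."""
    return 21.5


class SensorHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Serve the latest reading as a small JSON document.
        body = json.dumps({"value": read_sensor()}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Silence per-request logging for this sketch.
        pass


def start_server(port: int = 0) -> HTTPServer:
    """Start the server on a background thread; port 0 picks a free port.

    On a real Pi you would bind to "0.0.0.0" so other machines can reach it.
    """
    server = HTTPServer(("127.0.0.1", port), SensorHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_reading(host: str, port: int) -> float:
    """Client side: a single HTTP GET, as a desktop application would issue."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", "/")
        return json.loads(conn.getresponse().read())["value"]
    finally:
        conn.close()


if __name__ == "__main__":
    server = start_server()
    print(fetch_reading("127.0.0.1", server.server_address[1]))
    server.shutdown()
    server.server_close()
```

One request per reading is fine for occasional samples. For high-rate data the per-request overhead adds up, which is why a persistent TCP stream is the better fit there.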

-

Alternatively, have you tried the split string function?

https://www.ni.com/docs/en-US/bundle/labview/page/glang/search_split_string.html

Or, you could try a monospaced font and tape on the screen.

-

I'm curious why you went with the 6556. I probably would have gone with a LINX solution. Anyhow, I did a little searching and it looks like there are SPI libraries/examples for that card.

https://forums.ni.com/t5/Digital-I-O/SPI-Communication-using-PXIe-6556/td-p/3591472

-

Protocol buffers is one part and I think a cross platform scripted method of defining a typedef is cool.

I definitely got lost a couple times figuring out what was "my code" and what was the toolkit and I had to reinstall the toolkit at least once.

I think if I were making the tool, for a unary server, I would have used VI references, similar to how "nonlinear curve fit" uses them, instead of an event structure. The event structure comes with too much baggage and doesn't enforce a response.

I still don't fully understand how to manage a streamed response. Shouldn't the read timeout be set at each place the data is read instead of a global? Seems like it lends itself better to a queue or something.
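To make the "timeout at each read, backed by a queue" idea concrete, here is a rough Python sketch. It does not use the toolkit's API; `producer`, `read_stream`, and the sentinel are hypothetical names, and the queue just stands in for the streamed response.

```python
import queue
import threading
import time

_END = object()  # sentinel marking the end of the stream


def producer(out: queue.Queue, items, delay_s: float) -> None:
    """Hypothetical stand-in for the server side of a streaming call."""
    for item in items:
        time.sleep(delay_s)
        out.put(item)
    out.put(_END)


def read_stream(stream: queue.Queue, timeout_s: float):
    """Yield messages; the timeout is chosen by whoever is doing the reading."""
    while True:
        try:
            item = stream.get(timeout=timeout_s)
        except queue.Empty:
            raise TimeoutError(f"no message within {timeout_s} s") from None
        if item is _END:
            return
        yield item


if __name__ == "__main__":
    q = queue.Queue()
    threading.Thread(target=producer, args=(q, [1, 2, 3], 0.01), daemon=True).start()
    print(list(read_stream(q, timeout_s=1.0)))
```

Each consumer passes its own `timeout_s`, so a slow bulk reader and a latency-sensitive reader can share the same mechanism without a global setting.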

-

I feel like Redis accomplishes most of the goals that shared variables attempt to cover (minus some UI sugar) in a nice cross-platform way. Does anyone have an opinion on "in memory" key-value stores that are accessible over a network?

-

5 hours ago, ShaunR said:

If Emerson buy NI, you can look forward to no LabVIEW at all.

At least we'd know where we stood rather than being strung along in uncertainty.

-

I found a related thread that might be helpful

It would be nice to be able to do more with the call library node without having to wrap everything. If anyone wants to post something on the idea exchange forums, I'd likely give it a vote.

-

Yes, I'm in. Thank you

-

That link is still invalid for me

-

I've never used the OpenG ZIP library, but most of the packages I've used are statically linked and just get uploaded/compiled as needed.

FYI, the 9627 doesn't have enough disk space with the system image to use the RAD/system imaging tools so you won't be able to replicate it. I'm stuck with 2019 until all our 9627 targets are out of support.

-

On 11/10/2022 at 11:26 AM, Lipko said:

Yup, I mentioned the reference thing too. With a reference array you of course have to do explicit array indexing and wire the reference all around, so I don't see much improvement. It's just personal preference.

The advantage is that indexing an array of refnums does not change the internal semi-hidden active plot state of the control.

-

2 hours ago, Lipko said:

I don't see how should Labview know which plot you intend to work on and what different method there could be. Sure, the above example should be easy to figure out but it's easy to come up with funkier situations. Maybe each plot should have its own reference, but I don't see that a superior solution. Or maybe choosing the active plot should be forced (invoke node instead of property node)? That's not too sympathetic either.

This pattern is far better than having an array of sub-property clusters and to manipulate those arrays. Though I agree that all these types of properties should have array version too, like graph cursors and annotations for example.

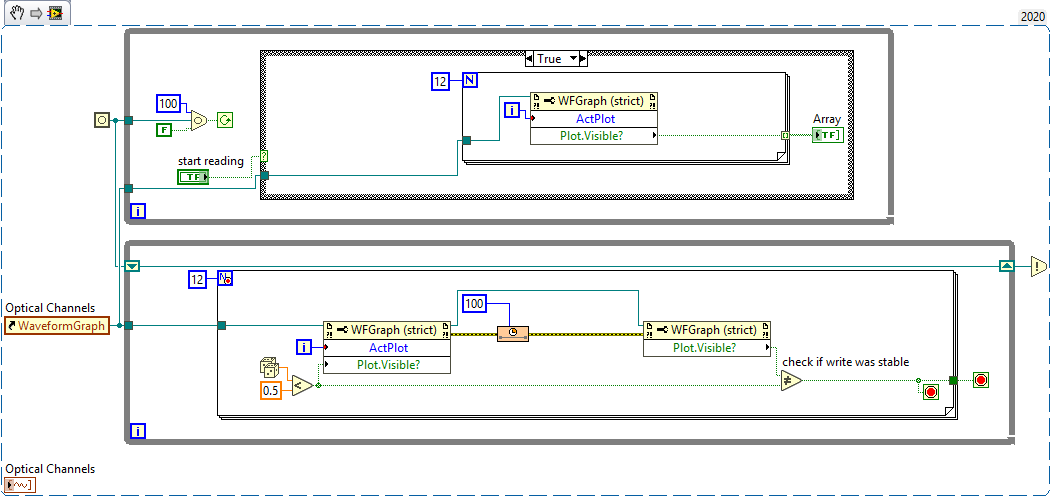

I often do ugly hacks with graphs and this problem never really got me. The code in the original post (I don't understand as it has some new blocks I don't know) seems like the model-view-controller is not separated enough.

I think either of those ideas would be superior. The graph should have a plots property that returns an array of plot references. We see this architecture with things like tabs having an array of page references. An invoke node that didn't force you to do a write when you only want to do a read would also avoid this problem.

38 minutes ago, drjdpowell said:

Does a Property Node, with multiple Properties set, execute as a single action, without a parallel Property Node executing in the middle? If so, then resetting the Active Plot in the second Property Node in the bottom loop would prevent any race condition.

Even if it was a single action, is there a promise to maintain that in future versions? I solved this issue by wrapping the graph reference in a DVR.
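For readers less familiar with the pattern, here is a text-language analogy in Python of what the DVR wrapper buys you. The `Graph` class is a toy model with a hidden "active plot" selector, not a LabVIEW API, and every name here is made up for illustration. The lock plays the role of the DVR's in-place element structure: selecting the plot and touching its property happen as one guarded step.

```python
import threading


class Graph:
    """Toy model of a graph control with a hidden 'active plot' selector."""

    def __init__(self, n_plots: int):
        self.active_plot = 0
        self.names = [f"Plot {i}" for i in range(n_plots)]


class GuardedGraph:
    """Lock-protected wrapper, loosely analogous to holding the refnum in a DVR."""

    def __init__(self, graph: Graph):
        self._graph = graph
        self._lock = threading.Lock()

    def set_name(self, plot: int, name: str) -> None:
        with self._lock:
            # Select and write under one lock so no other caller can
            # change active_plot between the two steps.
            self._graph.active_plot = plot
            self._graph.names[self._graph.active_plot] = name

    def get_name(self, plot: int) -> str:
        with self._lock:
            # Even a read has to write active_plot first, hence the lock.
            self._graph.active_plot = plot
            return self._graph.names[self._graph.active_plot]


if __name__ == "__main__":
    g = GuardedGraph(Graph(2))
    g.set_name(1, "Temperature")
    print(g.get_name(1))
```

Without the lock, a reader that sets `active_plot` between a writer's two steps would redirect the write to the wrong plot, which is the failure mode discussed in this thread.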

-

I just got hit by this bug. My writer below works fine (never exits) as long as I don't enable the reader (top loop). I see my error now, but the design was ripe for it.

-

Ah got it. Old school DVRs! 😆

-

10 hours ago, ShaunR said:

That is a typical symptom of a race condition.

I would suggest taking a look at "LabVIEW <version>\examples\lvoop\SingletonPattern". It is much simpler and easier to understand than GOOP examples which have a lot of infrastructure included.

I couldn't actually find that example there. Is there a package I need? Or maybe it was moved or renamed in a different version of LV

-

- Popular Post

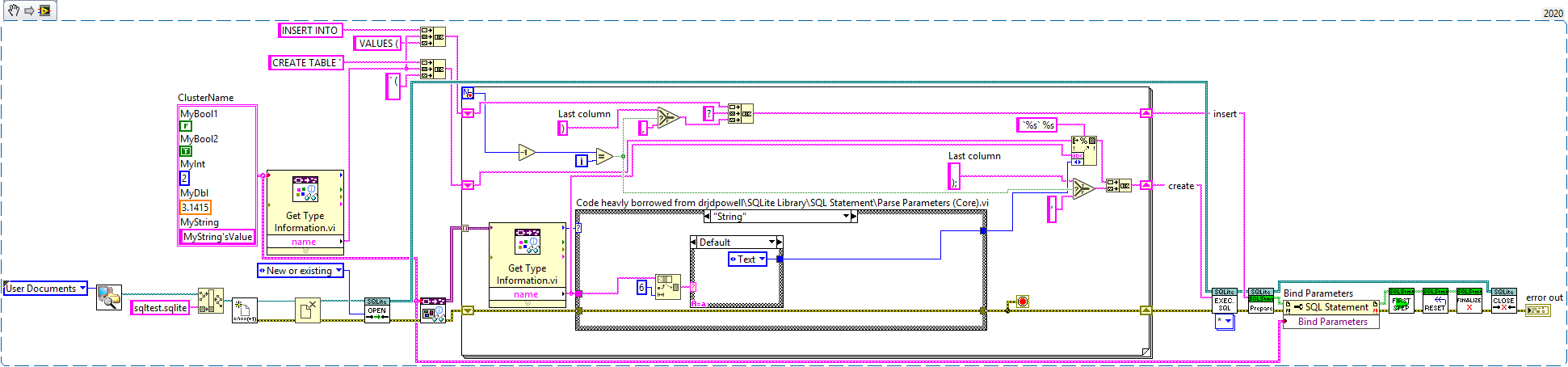

I don't know about you guys but I hate writing strings. There are just too many ways to mess it up and, in this case, it might just be too tedious. So, I wanted to create a way to make SQLite create and insert statements based on a cluster.

The type inference code was based on JDP Science's SQLite Library. Thanks!
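The same idea translates to text languages. As a rough Python sketch (not the posted VI, and not JDP Science's library), a dataclass can stand in for the cluster, with field types mapped to SQLite column types. The type map and the `Reading` class are assumptions for illustration.

```python
import sqlite3
from dataclasses import astuple, dataclass, fields

# Assumed mapping from Python field types to SQLite column types.
_SQL_TYPES = {int: "INTEGER", bool: "INTEGER", float: "REAL", str: "TEXT", bytes: "BLOB"}


def create_statement(cls) -> str:
    """Build a CREATE TABLE statement from a dataclass definition."""
    cols = ", ".join(f'"{f.name}" {_SQL_TYPES[f.type]}' for f in fields(cls))
    return f'CREATE TABLE IF NOT EXISTS "{cls.__name__}" ({cols})'


def insert_statement(cls) -> str:
    """Build a parameterized INSERT statement from a dataclass definition."""
    names = ", ".join(f'"{f.name}"' for f in fields(cls))
    marks = ", ".join("?" for _ in fields(cls))
    return f'INSERT INTO "{cls.__name__}" ({names}) VALUES ({marks})'


@dataclass
class Reading:  # hypothetical "cluster"
    channel: str
    value: float
    count: int


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(create_statement(Reading))
    conn.execute(insert_statement(Reading), astuple(Reading("ai0", 1.5, 3)))
    print(conn.execute('SELECT * FROM "Reading"').fetchall())
```

Values go through `?` placeholders rather than string formatting, so only the table and column names are ever assembled as text.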

-


This sounds like a DVR might be a better fit.

-

How was the Con? Do you know if/when the videos will be posted to YouTube?

-

10 hours ago, ShaunR said:

That's not good at all. With some fiddling you might get 4-5Gb/s but that's what you expect from a low-end laptop. Are you sure it's Gb/s and not GB/s? You are hoping for at least 20Gb/s+.

I tested two other computers. Interestingly, I found that on those computers the consumer looking for the end condition couldn't keep up. I would think a U8 comparison would be reasonably speedy. But, once I stopped checking the whole array, I could get 11 Gbps. The video was pretty useless as the manufacturer doesn't have recommended settings as far as I know. I don't know if I have the patience to fine-tune it on my own.

6 hours ago, Phillip Brooks said:

Are you using the parallel streams option in iperf3? This will help saturate the link.

Parallel helps. I don't understand why but the improvement is a few times faster than you'd expect by multiplying the single worker rate by the number of workers. I'm more interested in single connections at this time though.

-

Good tip. I hate type guessing. I'm looking at you, TDMS palette.

-

Localhost is a little faster: 3.4 Gbps

Seeing 80% CPU use on a single logical processor and 35% averaged over all the processors

iperf on localhost with default options isn't doing great: ~900 Mbps but very little CPU use

I suspect there's a better tool for Windows.

-

I submitted my code. I don't think I had any greedy loops. I wonder if there's a greedy section of TCP Read though.

-

I got similar performance from iperf. My question then is what knobs do I have to tweak to get closer to 10Gbps?

I attached my benchmark code if anyone is curious about the 100% CPU. I see that on the server, which is receiving the data, not the client, which is sending. The client's busiest logical processor is at 35% CPU. The server still has 1 logical processor at 100% use and, as far as I can tell, it's all used in the TCP Read primitive.

-

Has anyone done a bandwidth test to see how much data they can push through a 10GbE connection? I'm currently seeing ~2Gbps. One logical processor is at 100%. I could try to push more but I'm wondering what other people have seen out there. I'm using a packet structure similar to STM. I bet jumbo frames would help.
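For comparison outside LabVIEW, here is a minimal Python sketch of this kind of test: a length-prefixed framing (a 4-byte size followed by the payload, loosely in the spirit of STM but not its actual wire format) pushed over a single TCP connection on loopback. The function names, payload size, and the zero-length "end of test" marker are all assumptions for illustration.

```python
import socket
import struct
import threading
import time

HEADER = struct.Struct(">I")  # 4-byte big-endian payload length


def recv_exact(sock: socket.socket, n: int) -> bytearray:
    """Read exactly n bytes, looping because recv may return fewer."""
    buf = bytearray(n)
    view = memoryview(buf)
    got = 0
    while got < n:
        r = sock.recv_into(view[got:], n - got)
        if r == 0:
            raise ConnectionError("peer closed the connection")
        got += r
    return buf


def _server(listener: socket.socket, result: list) -> None:
    conn, _ = listener.accept()
    total = 0
    with conn:
        while True:
            (length,) = HEADER.unpack(recv_exact(conn, HEADER.size))
            if length == 0:  # zero-length message marks the end of the test
                break
            recv_exact(conn, length)  # payload is received but not inspected
            total += length
    result.append(total)


def measure_gbps(payload_bytes: int = 1 << 20, messages: int = 200) -> float:
    """Send framed messages over loopback and return payload throughput in Gbps."""
    listener = socket.create_server(("127.0.0.1", 0))
    result: list = []
    t = threading.Thread(target=_server, args=(listener, result))
    t.start()
    frame = HEADER.pack(payload_bytes) + bytes(payload_bytes)
    with socket.create_connection(listener.getsockname()) as client:
        start = time.perf_counter()
        for _ in range(messages):
            client.sendall(frame)
        client.sendall(HEADER.pack(0))
        t.join()  # stop the clock only after the receiver has drained everything
        elapsed = time.perf_counter() - start
    listener.close()
    return result[0] * 8 / elapsed / 1e9


if __name__ == "__main__":
    print(f"{measure_gbps():.2f} Gbps")
```

The number this prints mostly reflects the interpreter and the loopback path, not a 10GbE NIC, so treat it as a way to compare framing choices (for example, payload size) rather than as a target figure.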

Processor on PC that transmits the data: Intel(R) Xeon(R) CPU E3-1515M v5 @ 2.80GHz, 2808 MHz, 4 Core(s), 8 Logical Processor(s)

Using panther programmer

in LabVIEW Community Edition

Could you make an array of hex strings and then use Index Array with an enum wired to the index to select the right program? Then you use an event structure to execute that when someone presses the "send" button.
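In a text language the same lookup might look like the Python sketch below. The program names and hex values are invented placeholders, and `on_send_pressed` stands in for the event case that would fire on the button press.

```python
from enum import IntEnum


class Program(IntEnum):
    """Hypothetical program selector; the values double as array indices."""
    RESET = 0
    START = 1
    STOP = 2


# Placeholder hex strings, one per enum value, in the same order.
HEX_PROGRAMS = ["00 FF", "01 A0 10", "02 A0 00"]


def bytes_for(program: Program) -> bytes:
    """Index the array with the enum and convert the hex string to raw bytes."""
    return bytes.fromhex(HEX_PROGRAMS[program])


def on_send_pressed(program: Program, write) -> None:
    """What the 'send' event case would do: look up the bytes and write them."""
    write(bytes_for(program))


if __name__ == "__main__":
    on_send_pressed(Program.START, lambda data: print(data.hex(" ")))
```

Keeping the enum and the array in the same order is the only coupling to maintain; adding a program means adding one enum item and one string.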